Here are my slides: docs.google.com/presentation... . Learn more and comment!

#AISec #CCS2025 #Agents #AISecurity #AISafety #ContextualIntegrity 6/6

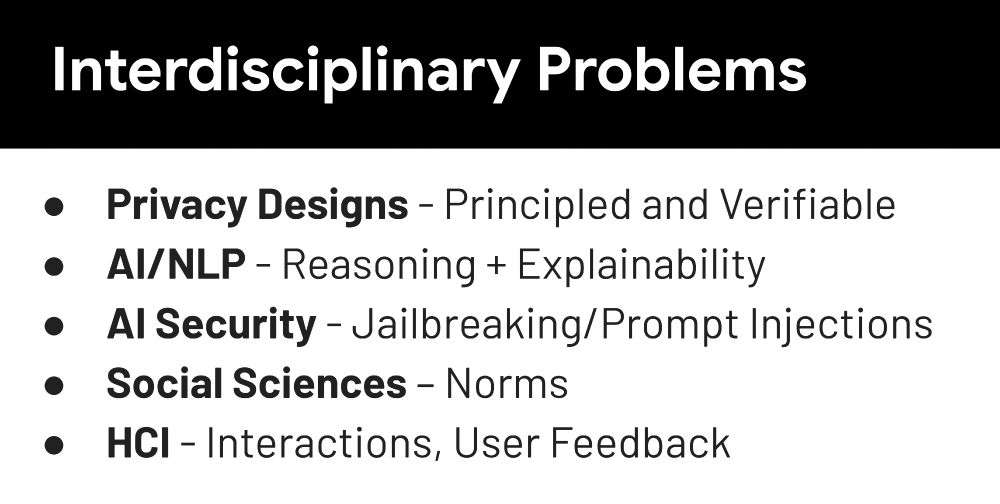

Future problems:

* HCI/UX design: How should we design new interactions?

* Evaluations and datasets: What datasets will help accelerate progress?

* Social norms: How do we know what is and isn't appropriate?

* Multi-agent systems: When multiple agents interact, who is right? 5/6

While these problems are unsolvable in general, Contextual Privacy and Security for Agents offers a new way to define policies for each situation to mitigate these threats. We can operationalize these policies with both model-level designs (reasoning) and system-level designs (reference monitors). 4/6

06.11.2025 14:53
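To make the system-level option from 4/6 a bit more concrete, here is a minimal Python sketch of a reference monitor that checks each information flow an agent proposes against a per-context policy before any tool runs. The `Flow`, `Policy`, and `ReferenceMonitor` names, the context labels, and the static allowlist are all hypothetical illustrations; in the approach argued for in this thread, policies would be generated dynamically for each context rather than hard-coded.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Flow:
    """A proposed information flow (hypothetical schema, loosely modeled on contextual integrity)."""
    context: str    # the situation the agent is acting in, e.g. "medical_appointment"
    recipient: str  # who would receive the information
    data_type: str  # what kind of information would be shared


@dataclass
class Policy:
    """Hypothetical default-deny allowlist of (recipient, data_type) pairs per context."""
    allowed: dict[str, set[tuple[str, str]]] = field(default_factory=dict)

    def permits(self, flow: Flow) -> bool:
        # Unknown contexts get an empty allowlist, so every flow in them is denied.
        return (flow.recipient, flow.data_type) in self.allowed.get(flow.context, set())


class ReferenceMonitor:
    """System-level check placed between the agent and its tools, enforced outside the model."""

    def __init__(self, policy: Policy) -> None:
        self.policy = policy
        self.audit_log: list[tuple[Flow, bool]] = []

    def check(self, flow: Flow) -> bool:
        decision = self.policy.permits(flow)
        self.audit_log.append((flow, decision))  # record every decision for auditing
        return decision


# Hard-coded here for illustration; the thread argues for generating this per context.
policy = Policy(allowed={
    "medical_appointment": {("clinic", "insurance_id"), ("clinic", "symptoms")},
})
monitor = ReferenceMonitor(policy)

print(monitor.check(Flow("medical_appointment", "clinic", "symptoms")))      # True
print(monitor.check(Flow("medical_appointment", "advertiser", "symptoms")))  # False
print(monitor.check(Flow("job_application", "employer", "symptoms")))        # False
```

The same data type (`symptoms`) is allowed for one recipient in one context and denied elsewhere, which is the "should it do X here?" question from 2/6 in its simplest form. Keeping the check outside the model means a prompt-injected agent still cannot push a disallowed flow through.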

We can break this into three questions for agents:

* Subjectivity of outputs: Do AI-generated texts or images carry spin or bias?

* Reality/ambiguity of inputs: What part of inputs can we trust?

* System complexity: What new issues will complex reasoning and action plans (research agents) bring? 3/6

Core idea: agent security isn't just "can the model do X?" but "should it do X here?", and that answer depends on context. Without contextual policies, we either sacrifice utility or expose agents to new attacks. 2/6

06.11.2025 14:53

I just gave a keynote at the 18th(!) AI Security Workshop (AISec) at CCS'25 in beautiful Taipei. I talked about the challenges that future AI agents will face and argued that defenses must rely on context, generating dynamic policies that define what is appropriate to share and do in each context! 1/6

06.11.2025 14:53

We're excited to welcome 28 new AI2050 Fellows! This fourth cohort of researchers is pursuing projects that include building AI scientists, designing trustworthy models, and improving biological and medical research, among other areas.

05.11.2025 15:43

Want to get into Multi-Agent System Safety research? Look no further! Terrarium 🐍 by @masonnaka.bsky.social supports different types of attacks, from misalignment to prompt injection to privacy leakage. Realistic tasks, clear metrics, nice abstractions!

31.10.2025 20:07