a silly doodle of naked snake from metal gear solid 3 with the text “year of the snake”

happy year of the THIS GUY!! 🐍

02.01.2025 03:32 — 👍 3610 🔁 1251 💬 50 📌 12

@picocreator.bsky.social

Serverless 🦙 @ https://featherless.ai Build Attention-Killers AI (RWKV) from scratch @ http://wiki.rwkv.com Also built uilicious & GPU.js (http://gpu.rocks)


I'll get straight to the point.

We trained 2 new models. Like BERT, but modern. ModernBERT.

Not some hypey GenAI thing, but a proper workhorse model, for retrieval, classification, etc. Real practical stuff.

It's much faster, more accurate, longer context, and more useful. 🧵

Random opinion on modern love, we need to normalize

- breaking up & being friends

- dating friends

- friend groups being chill & supportive through all of it (getting together or breaking up)

Starting a romantic relationship without knowing your partner as a person is weird to me

bias: I married a friend

Damn. Thanks for doing this - was planning to dive into it as well - after the discussion we had that day

Gives more context than I expected. 👍

My personal high belief / high conviction hot take:

32-72B is all we need for human-level AGI 🤖

Anything higher is just us being inefficient in architecture / code / etc

#NeurIPS2024

Lots of people are only looking out for themselves, which is why they fear everything.

11.12.2024 16:09 — 👍 166 🔁 18 💬 11 📌 2

PS: once we get 70B converted and working at ~128k context length

We will be able to cover the vast majority of enterprise AI workloads without QKV attention

Let that sink in 😎

Also, as o1-style reasoning datasets "come online" in the dataset space,

we plan to do more training on this new line of QRWKV and LLaMA-RWKV models over larger context lengths, so that they can be true transformer killers

If you're at NeurIPS, you can find me with an RWKV7 Goose

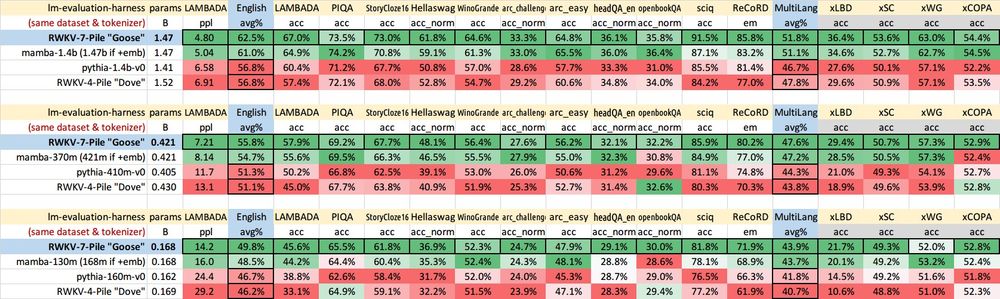

This is in addition to our latest candidate architecture, RWKV-7 "Goose" 🪿, which we are excited about, as it shows early signs of a step jump from our v6 Finch 🐤 models.

We are also scheduled to do conversion runs with it for 32B- and 70B-class models

x.com/BlinkDL_AI/s...

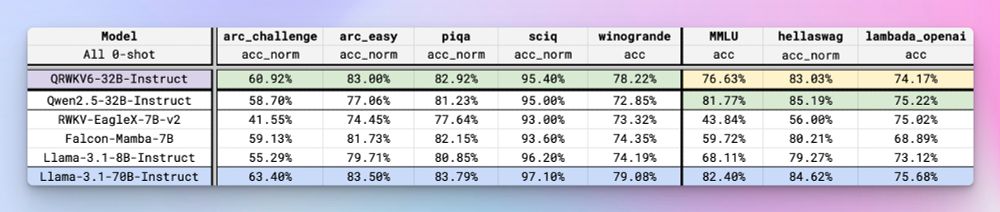

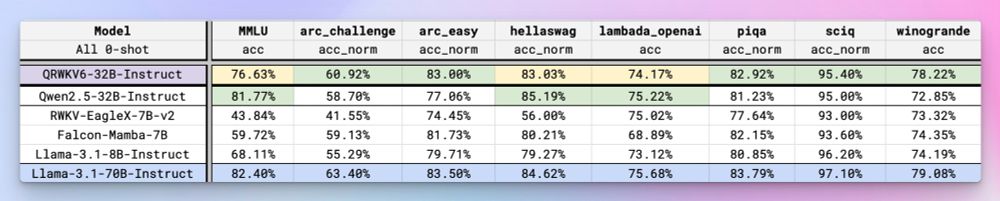

While the Q-RWKV-32B Preview takes the crown as our new frontier RWKV model, unfortunately it is not trained on our usual 100+ language dataset

For that, refer to our other models going live today, including a 37B MoE model and an updated 7B

substack.recursal.ai/p/qrwkv6-and...

More details on how we did this soon, but tldr:

- take qwen 32B

- freeze feedforward

- replace QKV attention layers, with RWKV6 layers

- train RWKV layers

- unfreeze feedforward & train all layers
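The five steps above read as a staged training schedule. Below is a minimal, framework-free Python sketch of that schedule only, under stated assumptions: the `Layer` and `Model` classes, the stage functions, and tracking trainability with boolean flags are all hypothetical stand-ins (in PyTorch, for instance, freezing would typically be done with `Module.requires_grad_(False)`). It shows which parts are trainable at each stage, not how the RWKV6 layers are initialized or what data they are trained on.

```python
from dataclasses import dataclass, field


@dataclass
class Layer:
    # Hypothetical stand-in for one transformer block of the base model.
    attention: str = "qkv"            # "qkv" (original) or "rwkv6" (replacement)
    attention_trainable: bool = True
    ffn_trainable: bool = True


@dataclass
class Model:
    layers: list = field(default_factory=list)


def load_base_model(num_layers: int) -> Model:
    # Step 1: start from a pretrained transformer (e.g. a Qwen 32B checkpoint).
    return Model(layers=[Layer() for _ in range(num_layers)])


def stage_replace_attention(model: Model) -> None:
    # Steps 2-4: freeze feedforward, swap QKV attention for RWKV6, train only the new layers.
    for layer in model.layers:
        layer.ffn_trainable = False
        layer.attention = "rwkv6"
        layer.attention_trainable = True


def stage_full_finetune(model: Model) -> None:
    # Step 5: unfreeze feedforward so every layer trains together.
    for layer in model.layers:
        layer.ffn_trainable = True
        layer.attention_trainable = True


def trainable_summary(model: Model) -> dict:
    # Count how many blocks have each component converted / trainable.
    return {
        "rwkv6_layers": sum(l.attention == "rwkv6" for l in model.layers),
        "trainable_attention": sum(l.attention_trainable for l in model.layers),
        "trainable_ffn": sum(l.ffn_trainable for l in model.layers),
    }


model = load_base_model(num_layers=4)
stage_replace_attention(model)
after_stage_1 = trainable_summary(model)
stage_full_finetune(model)
after_stage_2 = trainable_summary(model)
print(after_stage_1)  # {'rwkv6_layers': 4, 'trainable_attention': 4, 'trainable_ffn': 0}
print(after_stage_2)  # {'rwkv6_layers': 4, 'trainable_attention': 4, 'trainable_ffn': 4}
```

Freezing the feedforward first lets the new attention layers adapt to weights that already work, before everything is trained jointly.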

You can try the models on Featherless AI today:

featherless.ai/models/recur...

This also proves that the RWKV attention-head mechanism works at larger scales

Our 72B model is already "on its way" and should land before the end of the month.

Once we cross that line, RWKV will be at a scale that meets most enterprise needs

Release Details:

substack.recursal.ai/p/q-rwkv-6-3...

Why is this important?

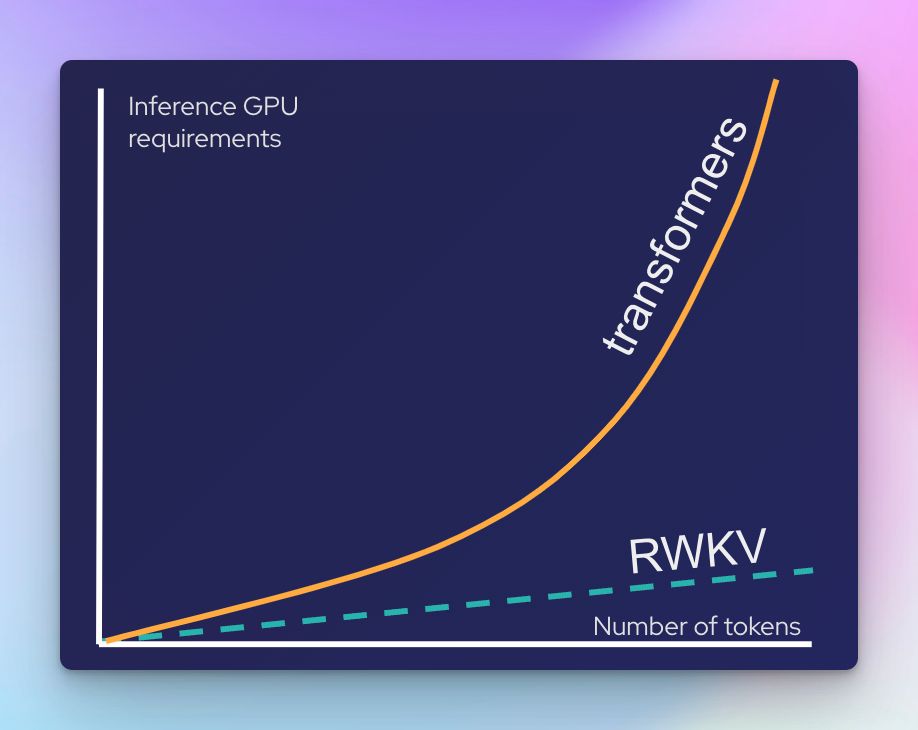

With the move to inference-time thinking (o1-style reasoning, chain-of-thought, etc.), there is an increasing need for scalable inference over longer context lengths

The quadratic inference cost scaling of transformer models is ill-suited to such long contexts
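To make the scaling argument concrete, here is a toy cost model in Python. It assumes (a simplification, not a measured benchmark) that a transformer with a KV cache does work proportional to the number of tokens seen so far for each new token, while a recurrent / linear-attention model like RWKV does a constant amount of state-update work per token. Hidden size, feedforward cost, and constant factors are ignored, so the output is an illustrative ratio, not real FLOP counts.

```python
def attention_cost(num_tokens: int) -> int:
    # Token t attends over all t tokens so far (itself included, via the KV cache),
    # so total work is 1 + 2 + ... + n = n(n+1)/2, i.e. quadratic in n.
    return sum(t for t in range(1, num_tokens + 1))


def recurrent_cost(num_tokens: int) -> int:
    # A fixed-size recurrent state is updated once per token: linear in n.
    return num_tokens


for n in (1_000, 10_000, 100_000):
    a, r = attention_cost(n), recurrent_cost(n)
    print(f"{n:>7} tokens: attention={a:>13,} recurrent={r:>9,} ratio={a / r:,.1f}x")
```

Under this toy model the ratio is (n + 1) / 2, so the gap keeps widening as contexts grow longer.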

QKV Attention is **not** all you need

We are releasing the QRWKV6-32B-Instruct preview, a model converted from Qwen-32B Instruct and trained for several hours on 2 MI300 nodes.

Surpassing all previously known open linear models (state space, hybrid, etc.)

Unlocking 1000x+ lower inference cost

You can find out more details here

substack.recursal.ai/p/q-rwkv-6-3...

Considering it's 3 AM where I am,

I will nap first before adding more details and doing a more formal tweet thread

So yeah, we just finished up the best subquadratic model for our QRWKV variant. Landing in hot during NeurIPS

Matching transformer level performance despite the lack of "Quadratic Attention", using RWKV Attention instead

Proving Attention is **not** all you need
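For readers wondering how attention can avoid the quadratic term at all, below is a minimal Python sketch of a generic, unnormalized linear-attention recurrence (no softmax). It is not the actual RWKV6 formulation, which among other things uses its own learned decay on the state; here `decay` is a single fixed scalar chosen purely for illustration. It only shows the shared idea: each token updates a fixed-size state instead of revisiting every previous token, and the two functions compute the same outputs.

```python
def outer(k, v):
    # k v^T as a nested list: a (d_k x d_v) matrix.
    return [[ki * vj for vj in v] for ki in k]


def linear_attention(queries, keys, values, decay=1.0):
    # Recurrent form: a fixed-size state S (d_k x d_v) is updated once per token,
    # S_t = decay * S_{t-1} + k_t v_t^T, and the output is q_t^T S_t.
    d_k, d_v = len(keys[0]), len(values[0])
    state = [[0.0] * d_v for _ in range(d_k)]
    outputs = []
    for q, k, v in zip(queries, keys, values):
        kv = outer(k, v)
        state = [[decay * state[i][j] + kv[i][j] for j in range(d_v)] for i in range(d_k)]
        outputs.append([sum(q[i] * state[i][j] for i in range(d_k)) for j in range(d_v)])
    return outputs


def quadratic_reference(queries, keys, values, decay=1.0):
    # Same outputs computed "attention style": token t revisits every token s <= t,
    # weighting v_s by decay^(t - s) * (q_t . k_s). Cost grows quadratically with length.
    outputs = []
    for t, q in enumerate(queries):
        out = [0.0] * len(values[0])
        for s in range(t + 1):
            score = decay ** (t - s) * sum(qi * ki for qi, ki in zip(q, keys[s]))
            out = [o + score * vj for o, vj in zip(out, values[s])]
        outputs.append(out)
    return outputs


# Tiny made-up example: 3 tokens, d_k = 2, d_v = 3.
q = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
k = [[1.0, 2.0], [0.0, 1.0], [1.0, 1.0]]
v = [[1.0, 0.0, 2.0], [0.5, 1.0, 0.0], [0.0, 2.0, 1.0]]

fast = linear_attention(q, k, v, decay=0.9)
slow = quadratic_reference(q, k, v, decay=0.9)
max_diff = max(abs(a - b) for rf, rs in zip(fast, slow) for a, b in zip(rf, rs))
print(f"max difference between the two forms: {max_diff:.2e}")
```

The recurrent form keeps memory and per-token work constant regardless of context length, which is what makes long-context inference cheap.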

Last NeurIPS, I spent more time meeting ppl outside the convention than at the posters themselves, so that’s fair haha 😅

Did the RNG bless your NeurIPS lottery?

Are you in SF? Wish to nerd out on AI & ML?

Join our holiday get-together + community potluck + discussion of the best new AI research with me,

@swyx.io @eraqian @dylan522p @vibhuuuus 😎

At 4pm today

lu.ma/25mwbwcm

Did you hear that joke about Ice Cream?

Best I keep that frozen for a while!

Honestly, even internal org A/B human evals with a handful of people… are a step up from what most orgs are doing 😭

07.12.2024 19:14 — 👍 4 🔁 3 💬 1 📌 0

Repeat after me:

I will build evals for my tasks.

I will build evals for my tasks.

I will build evals for my tasks.

This is such an interesting angle, because the number of large organizations with this level of discipline in evaluating new models is likely to be tiny

Running a bunch of test prompts specific to what your company does through a new model feels like it should be pretty low-hanging fruit

A test of how seriously your firm is taking AI: when o1 (& the new Gemini model) came out this week, were there assigned folks who immediately ran the model through your internal, validated, firm-specific benchmarks to see how useful it was? Did you update any plans or goals as a result?

07.12.2024 16:34 — 👍 196 🔁 26 💬 11 📌 11
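As a minimal illustration of what "running a bunch of test prompts specific to what your company does" through a model can look like, here is a small Python sketch. The `EvalCase` structure, the keyword-matching check, and `toy_model` are hypothetical placeholders; in practice you would swap in a call to the model under test and task-appropriate grading (exact match, rubric, human review, etc.).

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    prompt: str
    must_contain: list[str]  # simple keyword check; real evals often need richer grading


def run_evals(model: Callable[[str], str], cases: list[EvalCase]) -> float:
    # Return the fraction of cases whose output contains every required keyword.
    if not cases:
        return 0.0
    passed = 0
    for case in cases:
        output = model(case.prompt).lower()
        if all(keyword.lower() in output for keyword in case.must_contain):
            passed += 1
    return passed / len(cases)


def toy_model(prompt: str) -> str:
    # Hypothetical stand-in for a real model API call.
    canned = {
        "What is our refund window?": "Refunds are accepted within 30 days.",
        "Which plan includes SSO?": "SSO is available on the Business plan.",
    }
    return canned.get(prompt, "I don't know.")


cases = [
    EvalCase("What is our refund window?", ["30 days"]),
    EvalCase("Which plan includes SSO?", ["business"]),
    EvalCase("Who is our support contact?", ["support@"]),
]
score = run_evals(toy_model, cases)
print(f"pass rate: {score:.0%}")  # pass rate: 67%
```

Re-running the same fixed cases against each new model release gives a like-for-like comparison on your own tasks.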

Finally done with work meetings and got to chill and slowly experience proper Canadian food:

Poutine and beer at a bar

Discord Quebec gang: Toronto Poutine ain’t real Poutine 🤣

(Will be back in SF tomorrow)

Clearly. Ain’t enough to make a proper snowball (I tried sadly 🥹)

06.12.2024 22:57 — 👍 1 🔁 0 💬 1 📌 0

Have mercy 🙏 I couldn’t last a kilometer walking in this “light winter” 🤣 ❄️

(I tried while walking the shops downtown)

Singapore is ~85 °F / 30 °C 🥵☀️

You Canadians have admirable resiliency against the winter cold 💪

I'm heading to #NeurIPS next week, I'll be in town Wednesday-Friday!

I'll be at a couple things:

- Wed 1-2pm: talking Transformer killers with

@picocreator.bsky.social at @swyx.io @latentspacepod.bsky.social live!

- Wed 11am: RedPajama poster (spotlight) with

Maurice Weber

1/2

Just landed in Toronto 🇨🇦

Me as a Southeast Asian: oooo… snow ☃️

My Canadian friends: that’s barely any snow ❄️

The true Canadian experience needs to have at least knee high snow I guess 🤣

For those in SF 🌉 before NeurIPS (8th Dec), we're doing a casual pre-NeurIPS gathering: food, friends, papers.

To chill and talk before flying over to Canada 🇨🇦

lu.ma/25mwbwcm

So what does one do if they use more than 1TB of storage on Hugging Face?

Seems like Pro (~$9/month) is limited to 1TB?