🚀 BALROG is open submission! We welcome submission of new foundation models and new agentic pipelines.

Check it out here:

github.com/balrog-ai/BA...

@dpaglieri.bsky.social

PhD Student at UCL. Previously AI Research Engineer at Bending Spoons

🚀 BALROG is open submission! We welcome submission of new foundation models and new agentic pipelines.

Check it out here:

github.com/balrog-ai/BA...

This suggests that high performance on popular static benchmarks does not necessarily translate to dynamic agentic tasks, and training data contamination may also play a role.

🆕BALROG introduces a new type of agentic benchmark designed to be robust to train data contamination.

🚨This week's new entry on balrogai.com is Microsoft Phi-4 (14B model)

While Phi-4 excels on benchmarks like math competitions, BALROG reveals that Phi-4 falls short as an agent. More research on how to improve agentic performance is needed.

Interested in submitting to BALROG? Check out the instructions here!

balrogai.com/submit.html

Some big models we are looking to evaluate:

OpenAI O1

Gemini 2.0 Flash

Grok-2

Llama-3.1-405B

Pixtral-120B

Mistral-Large (123B)

If you have resources to contribute, feel free to reach out!

Llama-3.3-70B-it 🫤 -> Not as good as the 3.1-70B version on BALROG's tasks.

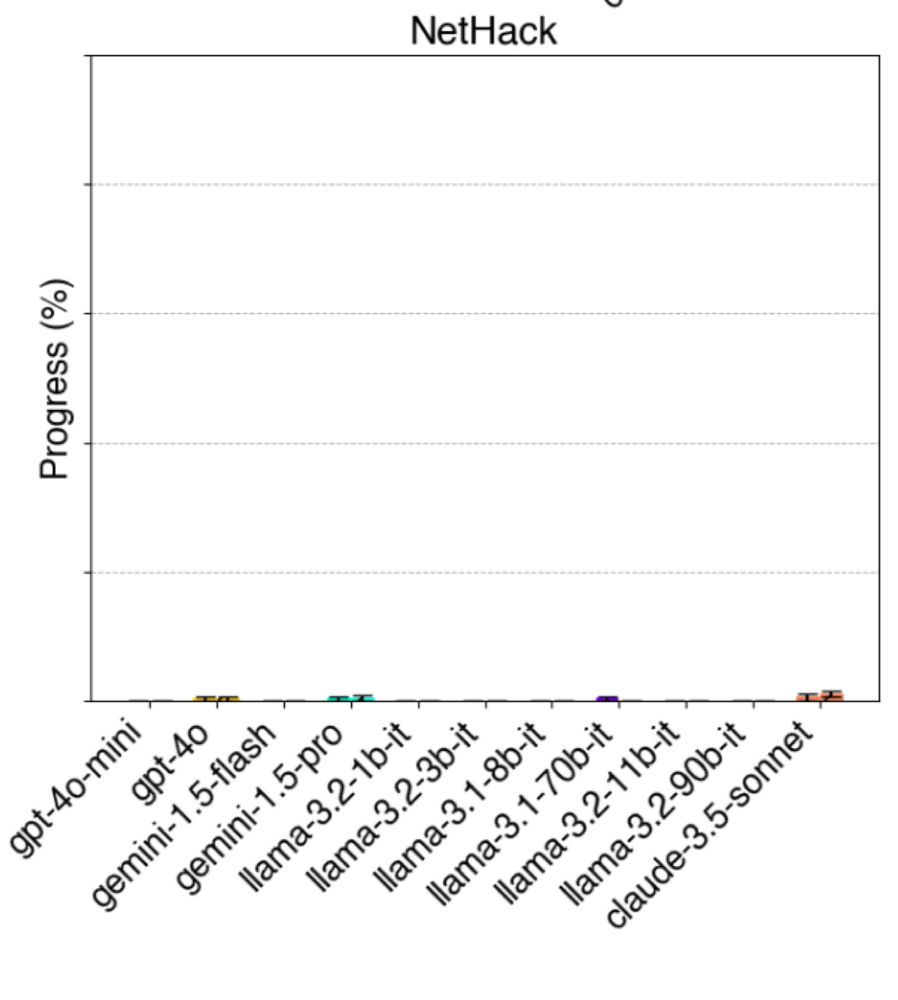

Claude 3.5 Haiku✨ -> A little gem, the best of the smaller closed-source models. It even gets 1.1% progression on NetHack! 🏰 Was it trained on NLE? 🤔

Mistral-Nemo-it 🆗 -> Okay for its size (12B)

🚨BALROG leaderboard update

This week's new entries on balrogai.com are:

Llama 3.3 70B Instruct 🫤

Claude 3.5 Haiku✨

Mistral-Nemo-it (12B) 🆗

Github: github.com/balrog-ai/BA...

I'm excited to share a new paper: "Mastering Board Games by External and Internal Planning with Language Models"

storage.googleapis.com/deepmind-med...

(also soon to be up on Arxiv, once it's been processed there)

Introducing 🧞Genie 2 🧞 - our most capable large-scale foundation world model, which can generate a diverse array of consistent worlds, playable for up to a minute. We believe Genie 2 could unlock the next wave of capabilities for embodied agents 🧠.

04.12.2024 16:01 — 👍 234 🔁 60 💬 15 📌 30It's great to see BALROG featured on Jack Clark's Import AI newsletter!

Check out what he had to say about it here:

jack-clark.net

And check out BALROG's leaderboard on balrogai.com

Do you know what rating you’ll give after reading the intro? Are your confidence scores 4 or higher? Do you not respond in rebuttal phases? Are you worried how it will look if your rating is the only 8 among 3’s? This thread is for you.

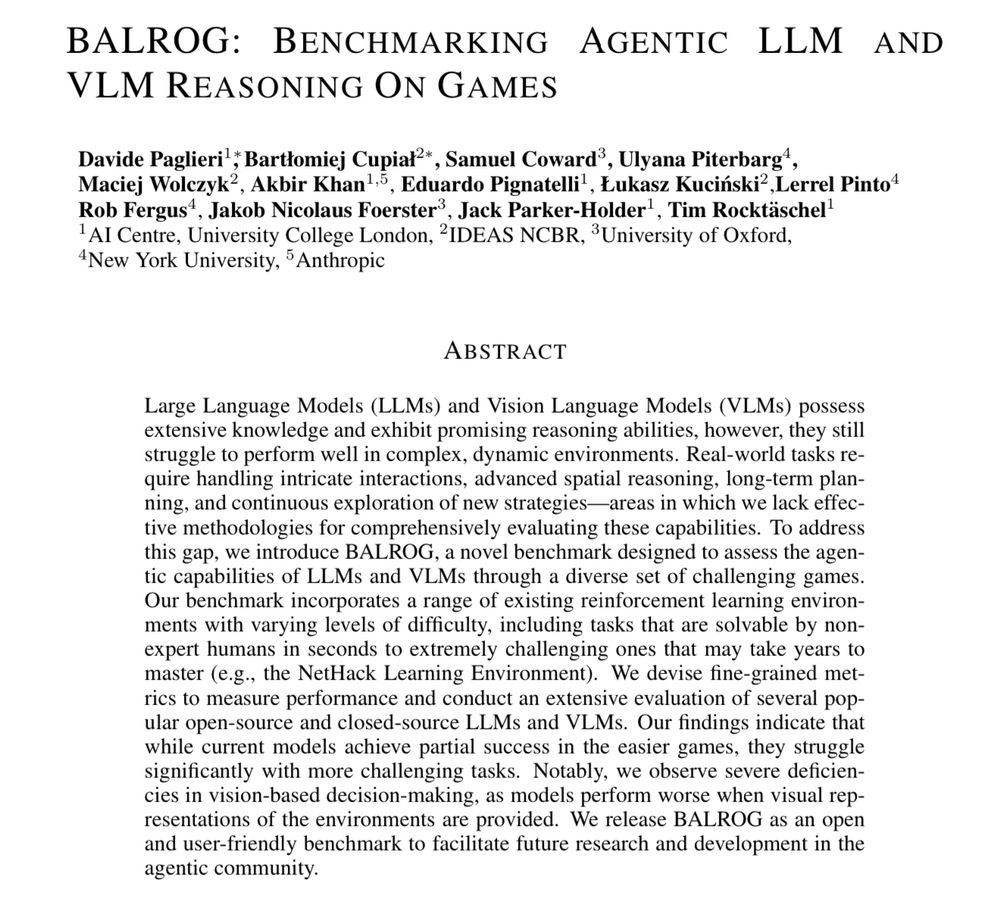

27.11.2024 17:25 — 👍 78 🔁 20 💬 4 📌 3Excited to announce "BALROG: a Benchmark for Agentic LLM and VLM Reasoning On Games" led b UCL DARK's @dpaglieri.bsky.social! Douwe Kiela plot below is maybe the scariest for AI progress — LLM benchmarks are saturating at an accelerating rate. BALROG to the rescue. This will keep us busy for years.

22.11.2024 11:27 — 👍 125 🔁 15 💬 3 📌 1

This may sound odd, but game-based benchmarks are some of the most useful for AI, since we have human scores and they require reasoning, planning & vision

The hardest of all is Nethack. No AI is close, and I suspect that an AI that can fairly win/ascend would need to be AGI-ish. Paper: balrogai.com

Your LLM shall not pass! 🧙♂️

... unless it's really good in reasoning and games!

Check out this new amazing benchmark BALROG 👾 from @dpaglieri.bsky.social and team 👇

🚨 BALROG is LIVE 🚨

🔗 Website with leaderboard: balrogai.com

📰 Paper: arxiv.org/abs/2411.13543

📜 Code: github.com/balrog-ai/BA...

No more excuses about saturated or lack of Agentic LLM/VLM benchmarks. BALROG is here!

The ultimate test? NetHack 🏰

This beast remains unsolved: the best model, o1-preview, achieved just 1.5% average progression. BALROG pushes boundaries, uncovering where LLMs/VLMs struggle the most. Will your model fare better? 🤔

They’re nowhere near capable enough yet!

And the results are in!

🤖 GPT-4o leads the pack in LLM performance

👁️ Claude 3.5 Sonnet shines as the top VLM

📈 LLaMA models show scaling laws from 1B to 70B, holding their own impressively!

🧠 Curious about how your model stacks up? Submit now!

What makes BALROG unique?

✅Easy evaluation for LLM/VLM agents locally or via popular APIs

✅Highly parallel, efficient setup

✅Supports zero-shot eval & more complex strategies

It’s plug-and-play for anyone benchmarking LLMs/VLMs. 🛠️🚀

BALROG brings together 6 challenging RL environments, including Crafter, BabaIsAI and the notoriously challenging NetHack.

BALROG is designed to give meaningful signal for both weak and strong models, making it a game-changer for the wider AI community. 🕹️ #AIResearch

Tired of saturated benchmarks? Want scope for a significant leap in capabilities?

🔥 Introducing BALROG: a Benchmark for Agentic LLM and VLM Reasoning On Games!

BALROG is a challenging benchmark for LLM agentic capabilities, designed to stay relevant for years to come.

1/🧵