Now published in Attention, Perception & Psychophysics @psychonomicsociety.bsky.social

Open Access link: doi.org/10.3758/s134...

12.06.2025 07:21

As always, thanks to @chrispaffen.bsky.social, @suryagayet.bsky.social, and @stigchel.bsky.social!

For the APA/JEP fans, here's the DOI: doi.org/10.1037/xlm0...

Also, how cool is the Taverne Amendment? It made the article open access, and I didn't even have to ask!

www.openaccess.nl/en/policies/...

29.05.2025 07:48

a poster for indiana jones and the last crusade with a man in a hat

Surprisingly, NO! They didn't load more into memory, even though they had capacity to spare (they did load more under another manipulation).

Instead, they behaved more cautiously and took fewer risks!

29.05.2025 07:48

an edmonton oilers hockey player sits in the stands

We used a copying task: participants (PPs) copied colored shapes from an example that was always available.

Now comes the kicker: we put PPs in a penalty box for 0.5 or 5 whole seconds every time they copied a piece incorrectly.
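
A minimal sketch of how such penalty trials could be scored, assuming a hypothetical log format in which each placement records whether it was correct and which look at the example preceded it. The `Placement` class, the `summarize` function, and the "correct placements per look" measure are illustrative assumptions (a common proxy for how much is loaded into memory per look in copy tasks), not the study's actual analysis:

```python
from __future__ import annotations

from dataclasses import dataclass

# Penalty durations (seconds) for the two conditions described above.
PENALTY_S = {"short": 0.5, "long": 5.0}


@dataclass
class Placement:
    """One attempt to place a piece in the copy (hypothetical log format)."""
    correct: bool
    look: int  # index of the look at the example that preceded this placement


def summarize(placements: list[Placement], condition: str) -> dict:
    """Count errors, total penalty time, and correct placements per look."""
    errors = sum(not p.correct for p in placements)
    correct = len(placements) - errors
    looks = {p.look for p in placements}
    return {
        "errors": errors,
        # Each incorrect placement sends the participant to the penalty box once.
        "penalty_s": errors * PENALTY_S[condition],
        # Proxy for memory load: pieces placed correctly per look at the example.
        "correct_per_look": correct / len(looks) if looks else 0.0,
    }


log = [Placement(True, 0), Placement(True, 0), Placement(False, 1),
       Placement(True, 1), Placement(True, 2)]
print(summarize(log, "short"))
print(summarize(log, "long"))
```

Under this scoring, loading more per look shows up as a higher `correct_per_look`, while the same single error costs ten times as much time in the long-penalty condition.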

Did they try to memorize the info better when 5s (vs 0.5s) of their life was at stake?

29.05.2025 07:48

a soccer player with the number 10 on his jersey is being shown a red card

Long overdue! I didn't promote this one amid the Twitter/X chaos, but now that I'm nearing the end of my PhD, I want to do this project justice and post it here:

Is visual working memory used differently when errors are penalized?

Out for over a year now in JEP:LMC: research-portal.uu.nl/ws/files/258...

🧵 (1/3)

29.05.2025 07:48

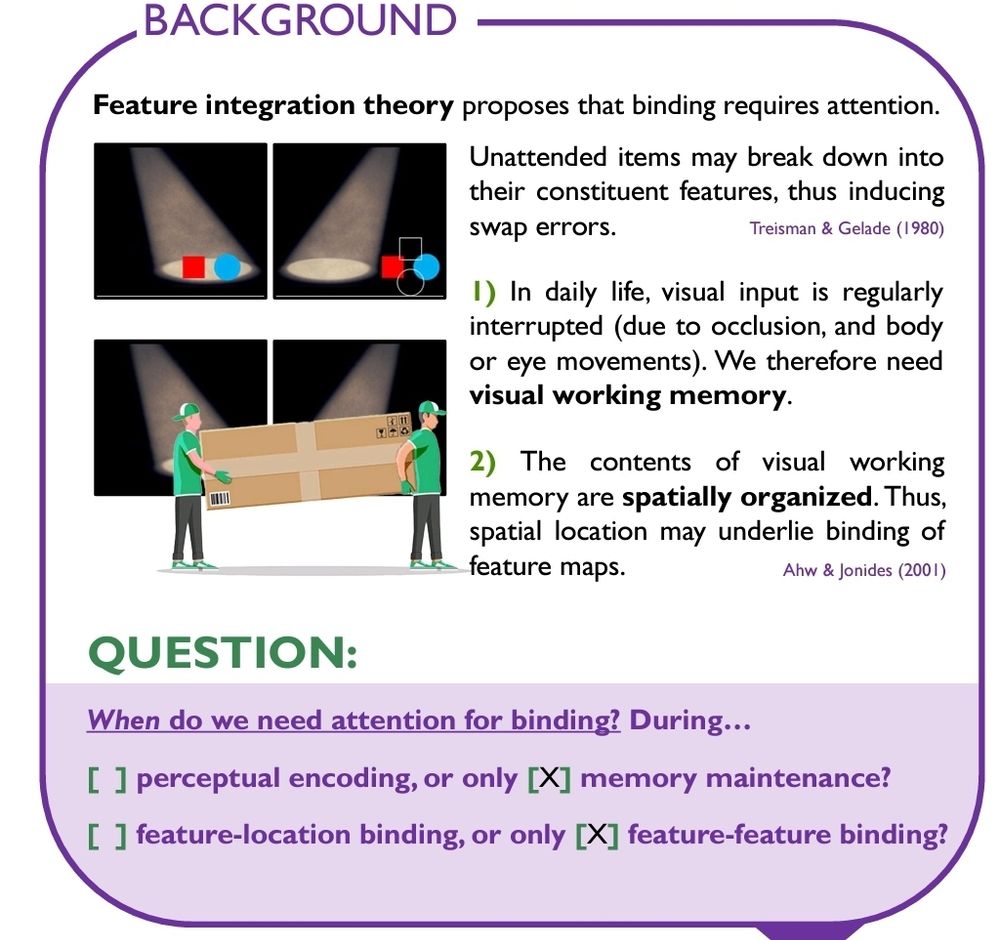

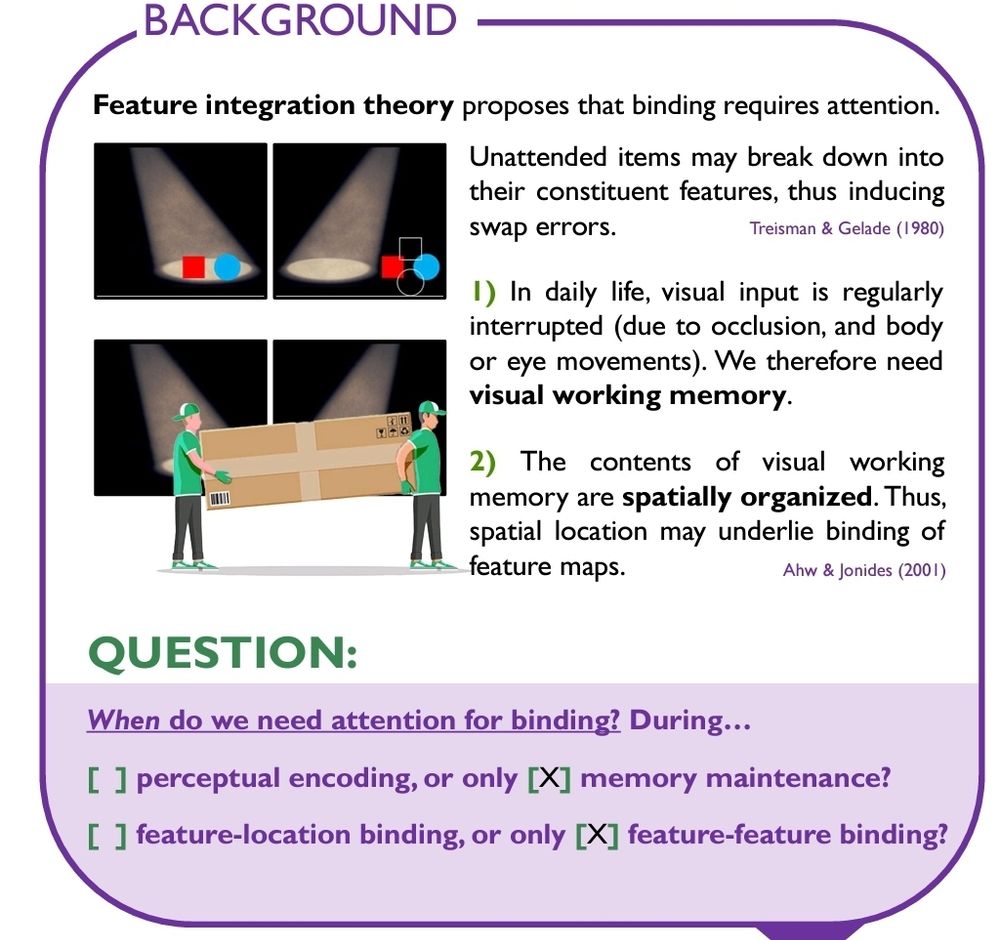

Good morning #VSS2025! If you'd like to chat about the role of attention in binding object features (during perceptual encoding and memory maintenance), drop by my poster now (8:30-12:30) in the Pavilion (422). Hope to see you there!

19.05.2025 12:36

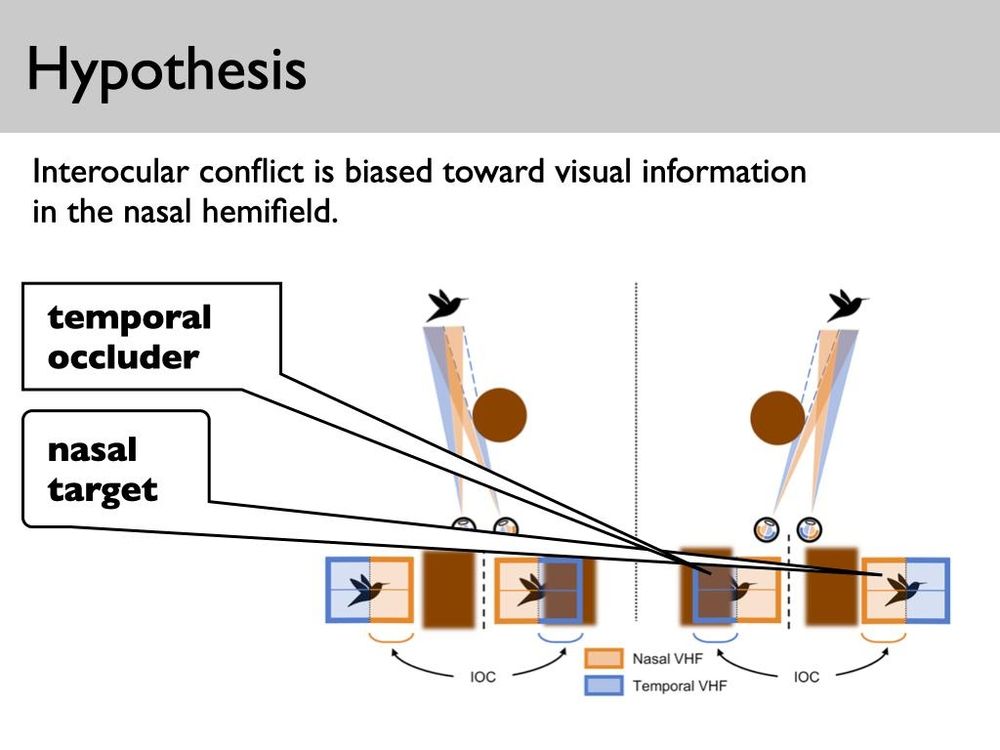

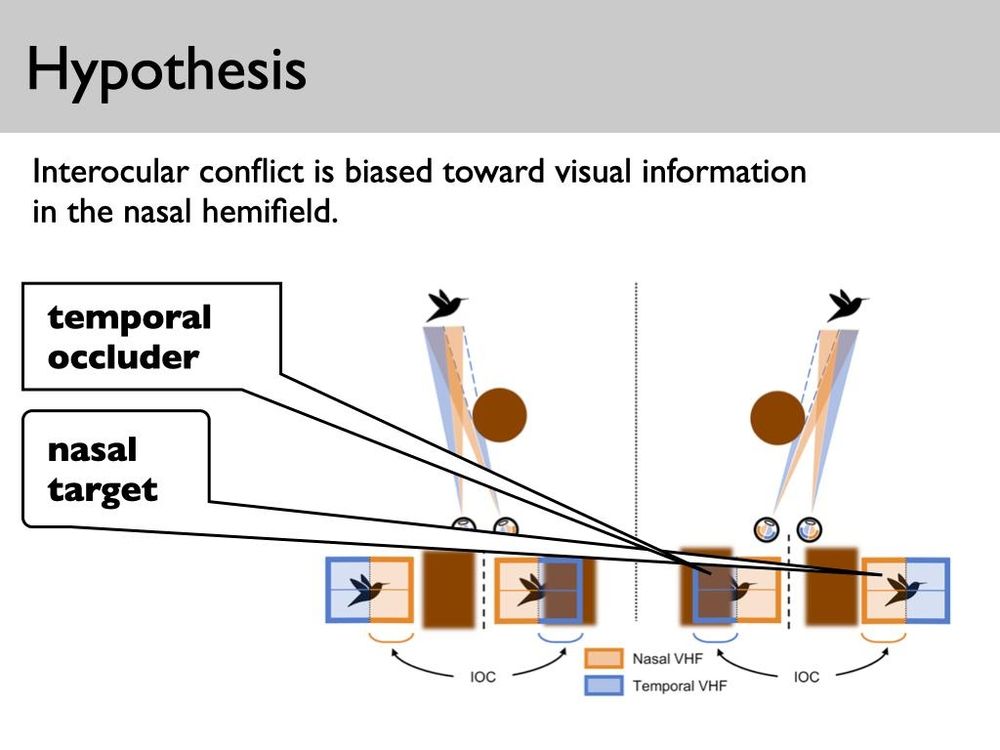

If you are at #VSS2025, come to @chrispaffen.bsky.social's talk (Room 2 at 3 PM), where he discusses how binocular conflict in real-world vision may be resolved adaptively (favoring either the nasal or the temporal hemifield) to optimally perceive partially occluded objects of interest.

18.05.2025 16:12

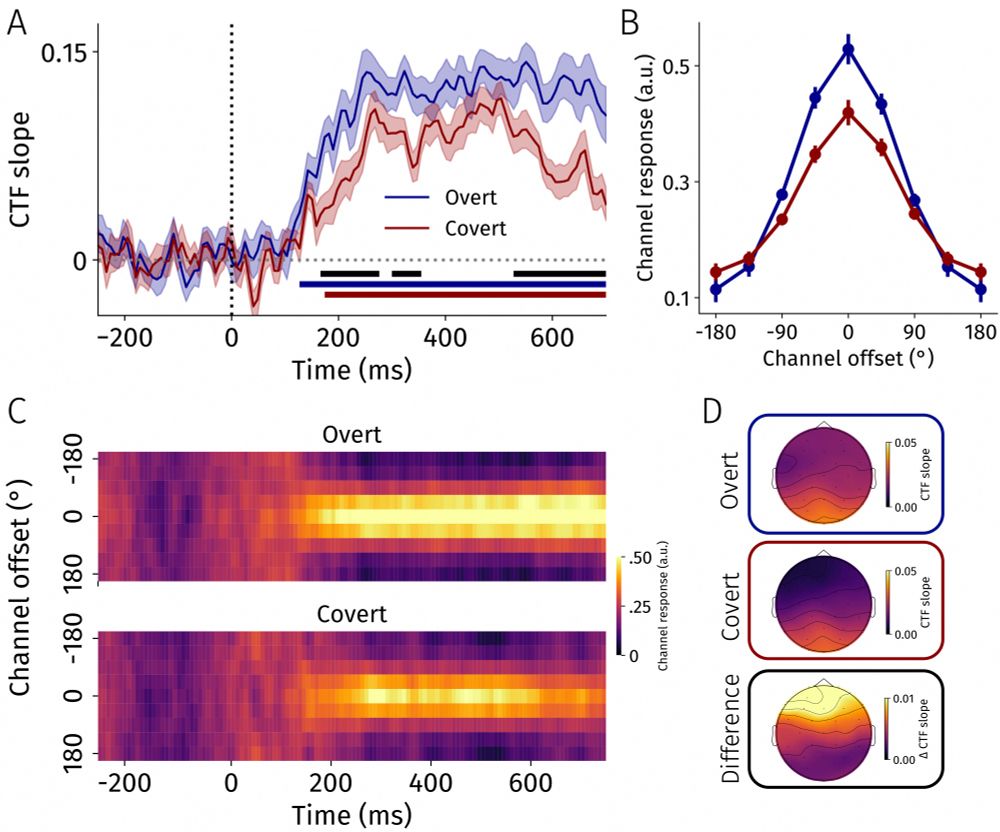

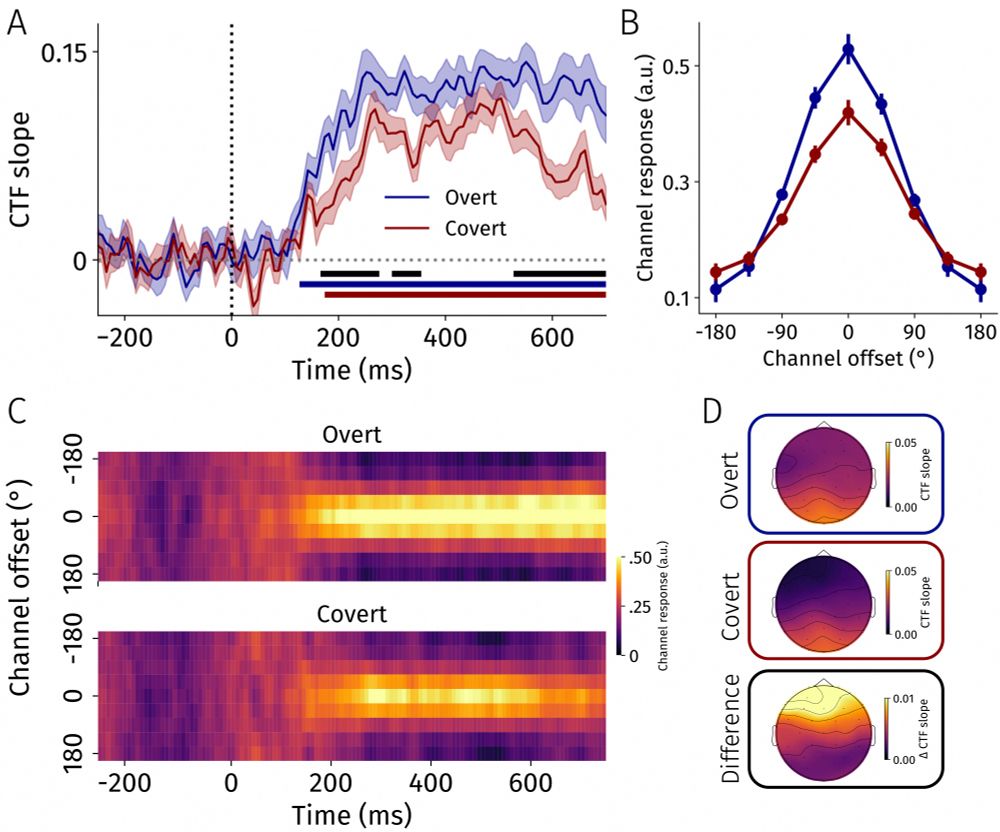

Attending @vssmtg.bsky.social? Come check out my talk on EEG decoding of preparatory overt and covert attention!

Tomorrow in the Attention: Neural Mechanisms session at 17:15. You can check out the preprint in the meantime:

17.05.2025 18:06

Frequentist stats can only reject the null or fail to reject it. By design, an unrejected null is not evidence that the effect is absent.

Frequentist equivalence tests are one solution. Another is a Bayesian approach with a well-defined stopping rule (e.g., stopping once the evidence reaches some preset amount X in favor of OR against the effect).
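
A minimal sketch of both options using only Python's standard library. Two simplifications are assumptions: the equivalence test uses a normal (z) approximation instead of the usual t-based TOST (two one-sided tests), and the sequential Bayesian example uses a binomial outcome with a uniform prior under H1 and a hypothetical evidence threshold of 10 in either direction. None of it is tied to a specific study:

```python
from math import exp, lgamma, log
from statistics import NormalDist

STD_NORMAL = NormalDist()


def tost_z(mean_diff, se, low, high, alpha=0.05):
    """Two one-sided tests (z approximation) for equivalence within [low, high]."""
    # H0a: true difference <= low; rejected when mean_diff lies far enough above low.
    p_lower = 1 - STD_NORMAL.cdf((mean_diff - low) / se)
    # H0b: true difference >= high; rejected when mean_diff lies far enough below high.
    p_upper = STD_NORMAL.cdf((mean_diff - high) / se)
    p = max(p_lower, p_upper)
    return p, p < alpha  # equivalence is claimed only if BOTH tests reject


def bf10_binomial(k, n):
    """Bayes factor for H1: p ~ Uniform(0, 1) vs H0: p = 0.5, given k successes in n trials."""
    # The binomial coefficient appears in both marginal likelihoods and cancels.
    log_m1 = lgamma(k + 1) + lgamma(n - k + 1) - lgamma(n + 2)
    log_m0 = n * log(0.5)
    return exp(log_m1 - log_m0)


def sequential_bf(outcomes, threshold=10.0):
    """Add one observation at a time; stop once BF10 >= threshold or <= 1/threshold."""
    k, n, bf = 0, 0, 1.0
    for n, success in enumerate(outcomes, start=1):
        k += int(success)
        bf = bf10_binomial(k, n)
        if bf >= threshold:
            return n, bf, "evidence for effect"
        if bf <= 1 / threshold:
            return n, bf, "evidence for null"
    return n, bf, "inconclusive"


print(tost_z(mean_diff=0.02, se=0.05, low=-0.2, high=0.2))
print(sequential_bf([1] * 20))
print(sequential_bf([1, 0] * 200))
```

The point is the asymmetry: `tost_z` can return a positive verdict of equivalence, and `sequential_bf` can stop with evidence *for* the null, neither of which a plain significance test can do.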

16.05.2025 19:33

We previously showed that affordable eye movements are preferred over costly ones. What happens when salience comes into play?

In our new paper, we show that even when salience attracts gaze, costs remain a driver of saccade selection.
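
One way to make "salience attracts gaze, but costs still drive selection" concrete is a toy choice model: each candidate saccade gets a utility that rises with salience and falls with cost, and choices follow a softmax. This is an illustrative assumption, not the model fitted in the paper; the weights `w_salience` and `w_cost` and all numbers are hypothetical:

```python
import math


def saccade_choice_probs(salience, cost, w_salience=1.0, w_cost=1.0):
    """Softmax over utility = w_salience * salience - w_cost * cost (hypothetical model)."""
    utilities = [w_salience * s - w_cost * c for s, c in zip(salience, cost)]
    peak = max(utilities)  # subtract the max for numerical stability
    weights = [math.exp(u - peak) for u in utilities]
    total = sum(weights)
    return [w / total for w in weights]


# Two equally salient targets: the cheaper saccade is preferred.
print(saccade_choice_probs(salience=[1.0, 1.0], cost=[0.2, 1.0]))
# Make the costly target more salient: salience pulls gaze toward it,
# but cost still holds it below what a cost-free comparison would predict.
print(saccade_choice_probs(salience=[1.0, 1.5], cost=[0.2, 1.0]))
print(saccade_choice_probs(salience=[1.0, 1.5], cost=[0.2, 1.0], w_cost=0.0))
```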

OA paper here:

doi.org/10.3758/s134...

16.05.2025 13:36

@cstrauch.bsky.social, this one!

15.05.2025 16:30

Huge thanks to advisors/co-authors @suryagayet.bsky.social, @chrispaffen.bsky.social and @stigchel.bsky.social.

15.05.2025 09:56

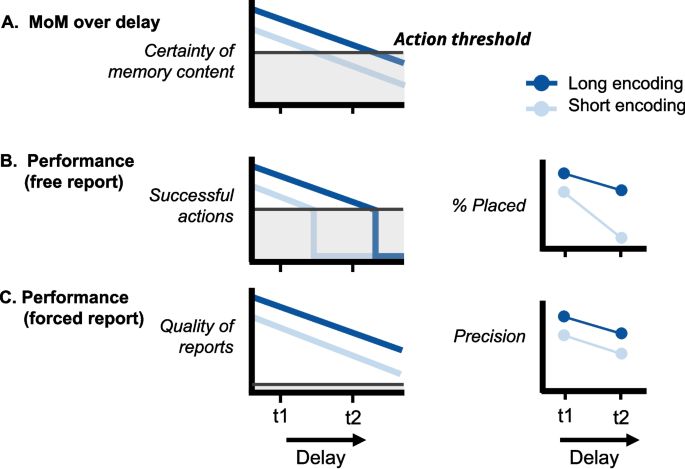

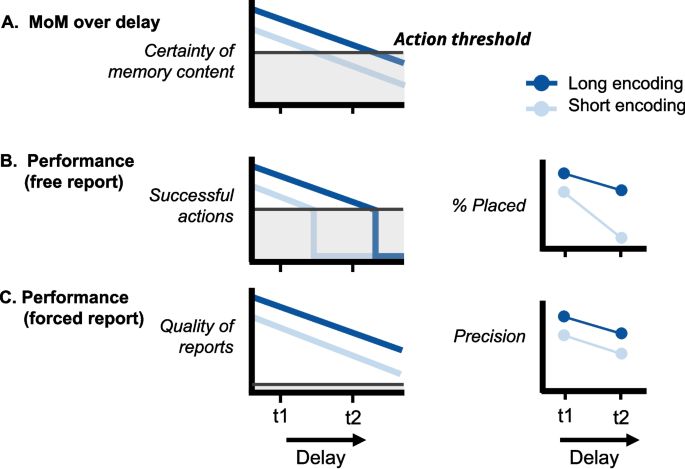

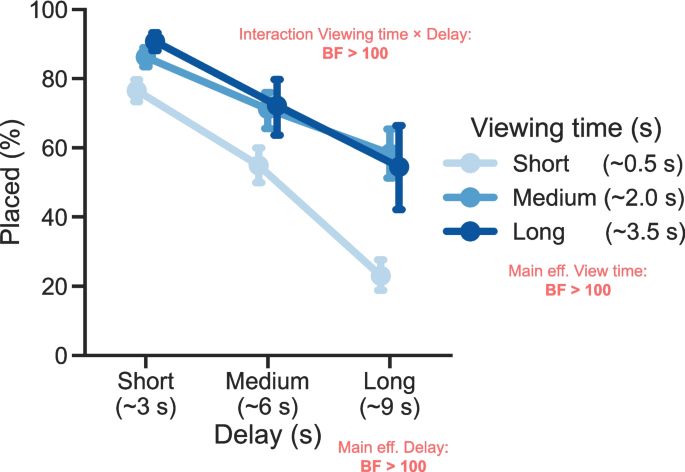

One interesting explanation we discuss is that the distinction between unrestricted and forced-choice tasks is key.

We argue that incorporating aspects of natural behavior into VWM paradigms can reveal a lot about how humans actually use their VWM.

15.05.2025 09:56

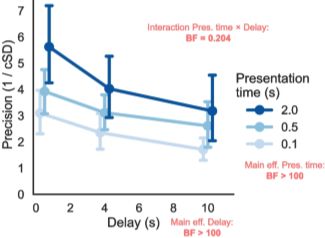

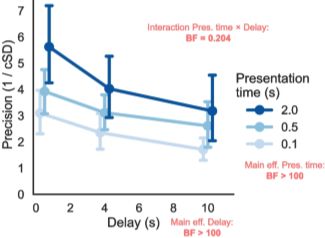

Now for the less obvious beans: this pattern (longer view -> slower decay) does not show up in typical (forced-choice) VWM paradigms, where decay rates are independent of viewing time.

What might be up?

15.05.2025 09:56

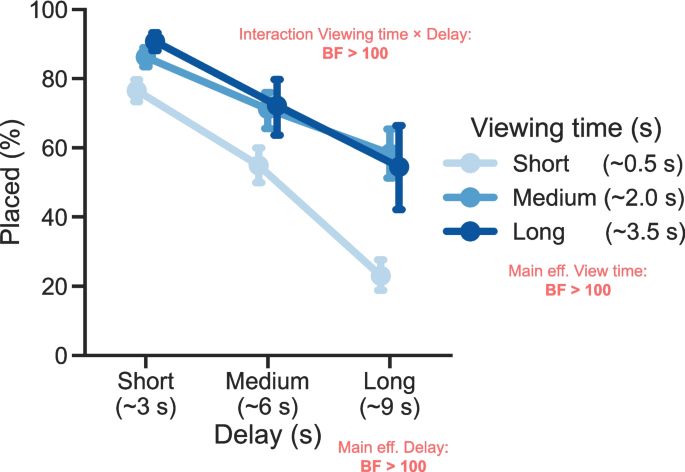

To spill the obvious beans first: memory performance got better with longer views, and it got worse with longer delays after viewing.

What's more: the LONGER a view was, the SLOWER performance declined.
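
Both findings can be captured by a toy model in which viewing time sets the starting memory strength as well as the time constant of decay. The functional forms and all parameter values (`k_enc`, `tau0`, `beta`) are illustrative assumptions, not the fitted model from the paper:

```python
import math


def encoded_strength(view_s, k_enc=1.0):
    """Memory strength right after viewing; saturates with longer views (assumed form)."""
    return 1.0 - math.exp(-view_s / k_enc)


def time_constant(view_s, tau0=2.0, beta=1.5):
    """Decay time constant; beta > 0 makes longer views decay more slowly (assumed)."""
    return tau0 + beta * view_s


def performance(view_s, delay_s, beta=1.5):
    """Toy model: exponential forgetting starting from the encoded strength."""
    return encoded_strength(view_s) * math.exp(-delay_s / time_constant(view_s, beta=beta))


for view in (0.5, 2.0):
    retained = performance(view, 4.0) / performance(view, 0.0)
    print(f"view {view} s: start {performance(view, 0.0):.2f}, "
          f"after 4 s {performance(view, 4.0):.2f}, retained {retained:.2f}")
```

Setting `beta=0` makes the retained proportion the same for every viewing time, which corresponds to the forced-choice pattern described in the thread (decay independent of viewing time).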

15.05.2025 09:56

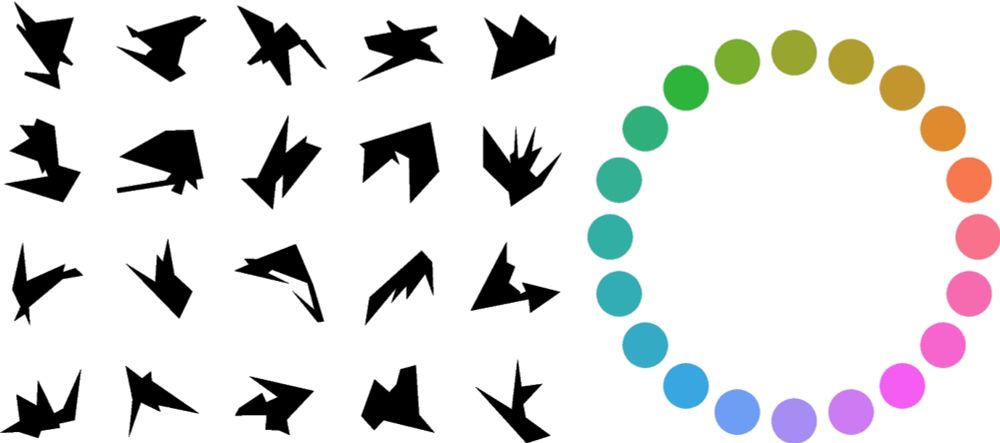

We had a bunch of people recreate example arrangements of funky shapes, however they wanted, while we tracked where they looked and what they did.

15.05.2025 09:56

Preparing overt eye movements and directing covert attention are neurally coupled. Yet, this coupling breaks down at the single-cell level. What about populations of neurons?

We show: EEG decoding dissociates preparatory overt from covert attention at the population level:

doi.org/10.1101/2025...
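
The core idea of decoding is to ask whether a classifier can tell two conditions apart from the pattern of activity across many channels, tested on held-out trials. A minimal sketch on synthetic data, with a simple nearest-centroid classifier and leave-one-out cross-validation swapped in as assumptions; the preprint's actual pipeline (classifier, time-resolved decoding, preprocessing) is not reproduced here:

```python
import random


def centroid(rows):
    """Channel-wise mean of a list of trials."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def loo_accuracy(X, y):
    """Leave-one-out accuracy of a nearest-centroid classifier over channel patterns."""
    labels = sorted(set(y))
    correct = 0
    for i, (test_x, test_y) in enumerate(zip(X, y)):
        # Class centroids from all trials except the held-out one.
        cents = {
            lab: centroid([x for j, (x, lab_j) in enumerate(zip(X, y))
                           if j != i and lab_j == lab])
            for lab in labels
        }
        pred = min(labels, key=lambda lab: sq_dist(test_x, cents[lab]))
        correct += pred == test_y
    return correct / len(X)


def simulate(n_trials=20, n_channels=8, shift=1.0, seed=1):
    """Synthetic 'EEG' patterns: the two conditions differ by a mean shift on every channel."""
    rng = random.Random(seed)
    X, y = [], []
    for label, mean in (("overt", 0.0), ("covert", shift)):
        for _ in range(n_trials):
            X.append([rng.gauss(mean, 1.0) for _ in range(n_channels)])
            y.append(label)
    return X, y


X, y = simulate()
print(f"leave-one-out accuracy: {loo_accuracy(X, y):.2f} (chance = 0.50)")
```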

13.05.2025 07:51

Thrilled to share that, as of May 1st, I have started as a postdoc at The University of Manchester!

I will investigate looked-but-failed-to-see (LBFTS) errors in visual search, under the expert guidance of Johan Hulleman and Jeremy Wolfe. Watch this space!

07.05.2025 12:37

@suryagayet.bsky.social

01.05.2025 17:21

A move you can afford

Where a person will look next can be predicted based on how much it costs the brain to move the eyes in that direction.

We show that eye movements are selected based on effort minimization - finally final in @elife.bsky.social

eLife's digest:

elifesciences.org/digests/9776...

& the 'convincing & important' paper:

elifesciences.org/articles/97760

I consider this my coolest ever project!

#VisionScience #Neuroscience

07.04.2025 19:28

Yeah, I imagine it's not really an issue for most use cases.

Haha so cool to hear you're planning to do copy tasks! And very happy to hear you found the plugin to be useful :)

Feel free to reach out if you have any copy task/plugin related questions. Very curious what you're up to!

23.04.2025 14:28

Yes! Check out pipe.jspsych.org, if you haven't already. The setup is quite straightforward and has worked well for me.

The only potential drawback (if I recall correctly) is that your experiment files are publicly accessible (including to participants), unless you have a paid/private GitHub account.

22.04.2025 17:45

Last Friday the irreplaceable @luzixu.bsky.social successfully defended her PhD (at @utrechtuniversity.bsky.social). This has been an incredibly productive 3+ years, and we are sad to see her leave, but are very proud of her accomplishments (with @attentionlab.bsky.social, @chrispaffen.bsky.social)!

17.03.2025 15:05
