Stéphane Thiell

@sthiell.bsky.social

I do HPC storage at Stanford and always monitor channel 16 ⛵

Lustre Developer Day at Stanford University on April 3, 2025

Thrilled to host Lustre Developer Day at @stanford-rc.bsky.social post-LUG 2025! 🌟 With 14+ top organizations like DDN, LANL, LLNL, HPE, CEA, AMD, ORNL, AWS, Google, NVIDIA, Sandia and Jefferson Lab represented, we discussed HSM, Trash Can, and upstreaming Lustre in Linux.

25.04.2025 18:39 — 👍 5 🔁 0 💬 1 📌 0

@stanford-rc.bsky.social was proud to host the Lustre User Group 2025 organized with OpenSFS! Thanks to everyone who participated and our sponsors! Slides are already available at srcc.stanford.edu/lug2025/agenda 🤘Lustre! #HPC #AI

04.04.2025 17:05 — 👍 10 🔁 2 💬 0 📌 1

Getting things ready for next week's Lustre User Group 2025 at Stanford University!

28.03.2025 19:07 — 👍 6 🔁 1 💬 0 📌 0

Why not use inode quotas to catch that earlier?

15.03.2025 14:24 — 👍 1 🔁 0 💬 1 📌 0

Join us for the Lustre User Group 2025 hosted by @stanford-rc.bsky.social in collaboration with OpenSFS.

Check out the exciting agenda! 👉 srcc.stanford.edu/lug2025/agenda

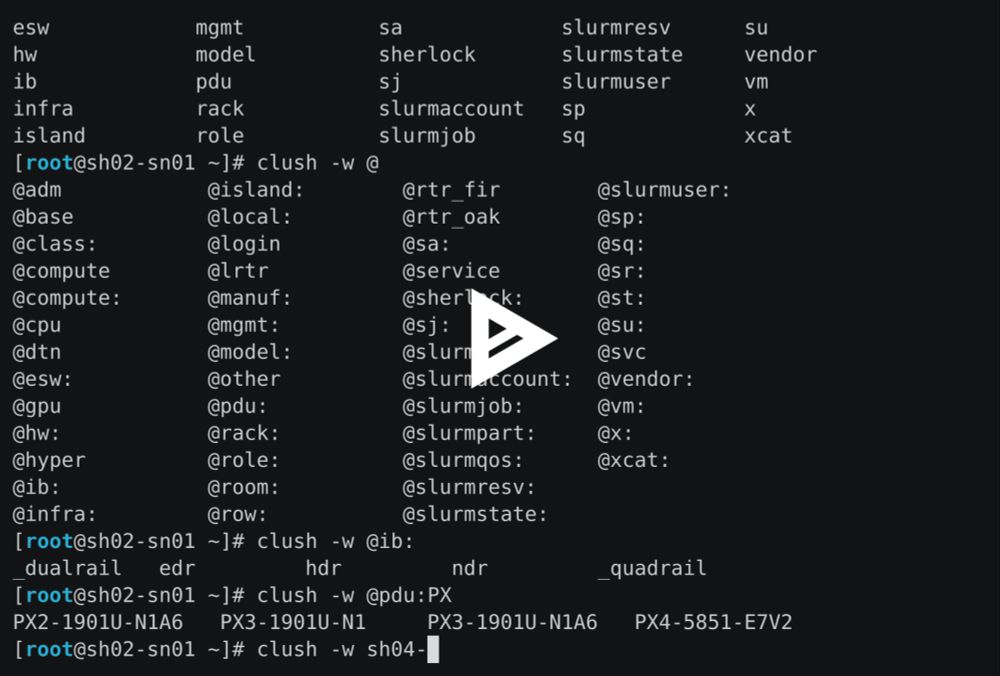

ClusterShell 1.9.3 is now available in EPEL and Debian. Not using clustershell groups on your #HPC cluster yet?! Check out the new bash completion feature! Demo recorded on Sherlock at @stanford-rc.bsky.social with ~1,900 compute nodes and many group sources!

asciinema.org/a/699526

SAS 24Gb/s (12 x 4 x 24Gb/s) switch from SpectraLogic on display at #SC24. Built by Astek Corporation.

29.01.2025 04:45 — 👍 2 🔁 0 💬 0 📌 0

Dual Astek SAS switches on top of TFinity tape library.

We started it! blocksandfiles.com/2025/01/28/s...

Check out my LAD'24 presentation:

www.eofs.eu/wp-content/u...

Just another day for Sherlock's home-built scratch Lustre filesystem at Stanford: Crushing it with 136+GB/s aggregate read on real research workload! 🚀 #Lustre #HPC #Stanford

11.01.2025 19:59 — 👍 24 🔁 3 💬 0 📌 0

A great show of friendly open source competition and collaboration: the lead developers of Environment Modules and Lmod (Xavier of CEA and Robert of @taccutexas.bsky.social) at #SC24. They often exchange ideas and push each other to improve their tools!

21.11.2024 15:26 — 👍 5 🔁 2 💬 0 📌 0

Lustre User Group 2025 save-the-date card: April 1-2, 2025 at Stanford University

Newly announced at the #SC24 Lustre BoF! Lustre User Group 2025, organized by OpenSFS, will be hosted at Stanford University on April 1-2, 2025. Save the date!

20.11.2024 14:40 — 👍 10 🔁 8 💬 0 📌 1

Fun fact: the Georgia Aquarium (nonprofit), next to the Congress Center, is the largest in the U.S. and the only one that houses whale sharks. I went on Sunday and it was worth it. Just in case you need a break from SC24 this week… 🦈

18.11.2024 17:39 — 👍 1 🔁 0 💬 0 📌 0

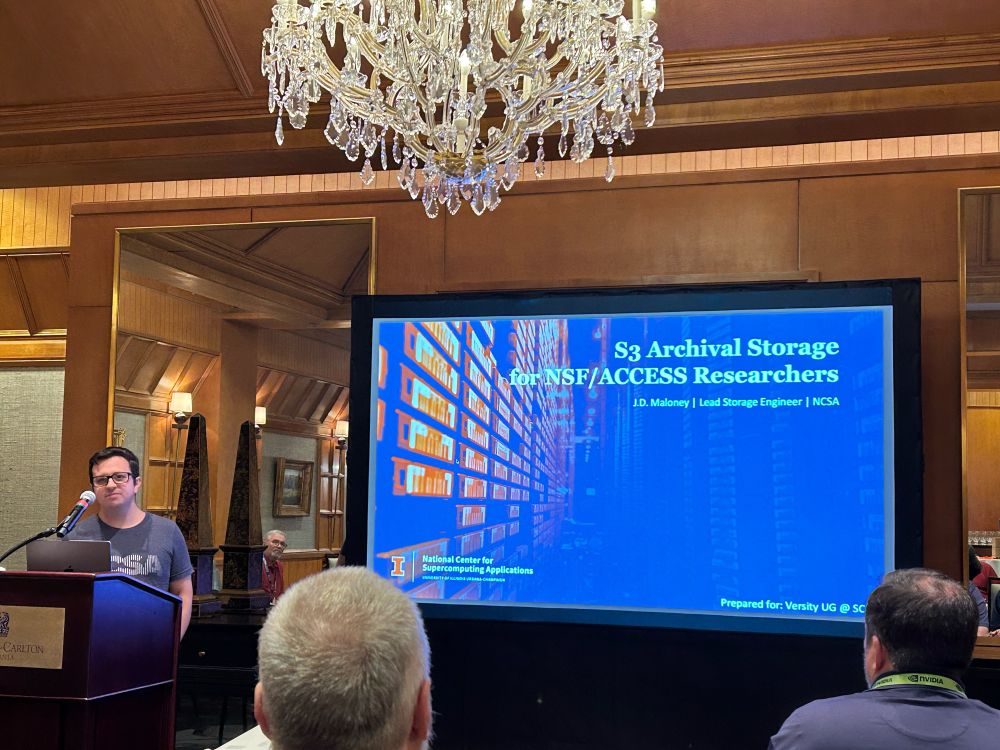

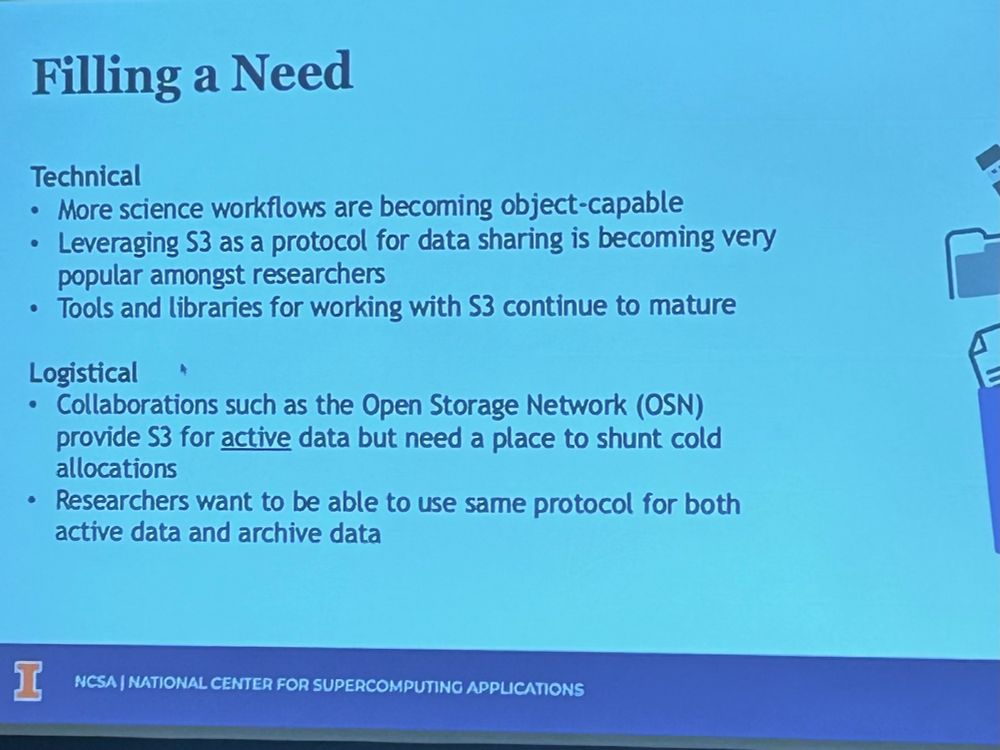

I always enjoy an update from JD Maloney (NCSA), but even more when it is about using S3 for Archival Storage, something we are deploying at scale at Stanford this year (using MinIO server and Lustre/HSM!)

18.11.2024 17:10 — 👍 6 🔁 1 💬 0 📌 0

Our branch of robinhood-lustre-3.1.7 on Rocky Linux 9.3 with our own branch of Lustre 2.15.4 and MariaDB 10.11 can ingest more than 35K Lustre changelogs/sec. Those gauges seem appropriate for Pi Day, no?

github.com/stanford-rc/...

github.com/stanford-rc/...

Sherlock goes full flash!

The scratch file system of Sherlock, Stanford's HPC cluster, has been revamped to provide 10 PB of fast flash storage on Lustre

news.sherlock.stanford.edu/publications...

Cannot say for all Stanford but at Research Computing we manage more than 60PB of on-prem research storage with most of it in use and growing...

We keep a few interesting numbers here: www.sherlock.stanford.edu/docs/tech/fa...

Racks of Fir Storage at the Stanford Research Computing Facility (SRCF)

df command showing 9.8PB /scratch

Artist photo of an OSS pair of Fir with HDR-200

Filesystem upgrade complete! Stanford cares about HPC I/O! The Sherlock cluster now has ~10PB of full flash Lustre scratch storage at 400 GB/s, to support a wide range of research jobs on large datasets! Fully built in-house!

25.01.2024 01:27 — 👍 6 🔁 1 💬 1 📌 0

The Internet lied to me

My header image is an extract from this photo taken at The Last Bookstore in Los Angeles, a really cool place.

28.10.2023 01:03 — 👍 1 🔁 0 💬 0 📌 0

👋

28.10.2023 00:55 — 👍 4 🔁 0 💬 0 📌 0