Guy Moss (@gmoss13.bsky.social) developed simulation-based inference methods and applied them to problems in glaciology, in collaboration with @geophys-tuebingen.bsky.social. E.g., openreview.net/forum?id=yB5... 3/3

13.01.2026 15:42

Julius Vetter (@vetterj.bsky.social) worked on deep generative modeling and simulation-based Bayesian inference, with applications to (physiological) time series data. E.g., openreview.net/forum?id=kN0... 2/3

13.01.2026 15:42

Happy 2026 everyone! Two freshly minted PhDs 🧑‍🎓 emerged from our lab at the end of last year.

We congratulate Dr Julius Vetter (@vetterj.bsky.social) and Dr Guy Moss (@gmoss13.bsky.social)! Here they are, celebrating with the lab 🎳. 1/3

13.01.2026 15:42

AutoSBI Poster: Tuesday 2 Dec 10:30am at the Amortized ProbML workshop, Copenhagen 11/11

01.12.2025 16:16

Fifth, we bring AutoML to SBI pipelines with a practical performance metric that does not require ground-truth posteriors, improving inference quality on the SBI benchmark! By @swagatam.bsky.social, @gmoss13.bsky.social, @keggensperger.bsky.social, @jakhmack.bsky.social 10/11

01.12.2025 16:16

Fourth, in collaboration with Ian C Tanoh and Scott Linderman, we used the Jaxley toolbox and extended Kalman filters to estimate the marginal log-likelihood of a biophysical neuron model. We showed that this enables identifying biophysical parameters given extracellular recordings. 8/11

01.12.2025 16:16
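For readers curious how an extended Kalman filter (EKF) yields a marginal log-likelihood, here is a minimal sketch using the prediction-error decomposition: the log-likelihood is the sum of the one-step predictive log-densities of the observations. It assumes a hypothetical scalar toy model with Gaussian noise; it is not the Jaxley neuron model or the code used in this work, and the function `ekf_log_likelihood` and its parameters are illustrative names only.

```python
import numpy as np


def ekf_log_likelihood(y, f, df, h, dh, q, r, m0, p0):
    """Approximate log p(y_1, ..., y_T) for a scalar state-space model via an EKF.

    Hypothetical toy model (illustrative only, not the Jaxley neuron model):
        x_0 ~ N(m0, p0)
        x_t = f(x_{t-1}) + w_t,  w_t ~ N(0, q)
        y_t = h(x_t) + v_t,      v_t ~ N(0, r)
    df and dh are the derivatives of f and h, used to linearise the model.
    """
    m, p = m0, p0  # mean and variance of the current state estimate
    log_lik = 0.0
    for y_t in y:
        # Predict: push the mean through f and the variance through its linearisation.
        f_jac = df(m)
        m_pred = f(m)
        p_pred = f_jac * p * f_jac + q
        # Predicted observation and innovation variance.
        h_jac = dh(m_pred)
        y_pred = h(m_pred)
        s = h_jac * p_pred * h_jac + r
        innov = y_t - y_pred
        # Prediction-error decomposition: add log N(y_t; y_pred, s).
        log_lik += -0.5 * (np.log(2.0 * np.pi * s) + innov**2 / s)
        # Update: Kalman gain, then filtered mean and variance.
        k = p_pred * h_jac / s
        m = m_pred + k * innov
        p = (1.0 - k * h_jac) * p_pred
    return log_lik


# Example with hypothetical nonlinear dynamics (tanh) and an identity readout.
y_obs = np.array([0.1, 0.4, 0.3, -0.2])
ll = ekf_log_likelihood(
    y_obs,
    f=np.tanh, df=lambda x: 1.0 - np.tanh(x) ** 2,
    h=lambda x: x, dh=lambda x: 1.0,
    q=0.1, r=0.05, m0=0.0, p0=1.0,
)
```

For linear `f` and `h` the linearisation is exact, so the sketch reduces to the standard Kalman-filter likelihood; for nonlinear models it is only an approximation. Because every step is a differentiable function of the model parameters, the same computation could in principle be differentiated with respect to them.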

Third, in collaboration with @kyrakadhim.bsky.social, @philipp.hertie.ai, and others, we built a task- and data-constrained biophysical network of the outer plexiform layer of the mouse retina. To optimize this model, we built it on top of our Jaxley toolbox for differentiable simulation. 6/11

01.12.2025 16:16

Second, come by to check out NPE-PFN: We leverage the power of tabular foundation models for training-free and simulation-efficient SBI. SBI has never been so effortless! By @vetterj.bsky.social, Manuel Gloeckler, @danielged.bsky.social, @jakhmack.bsky.social 4/11

01.12.2025 16:16

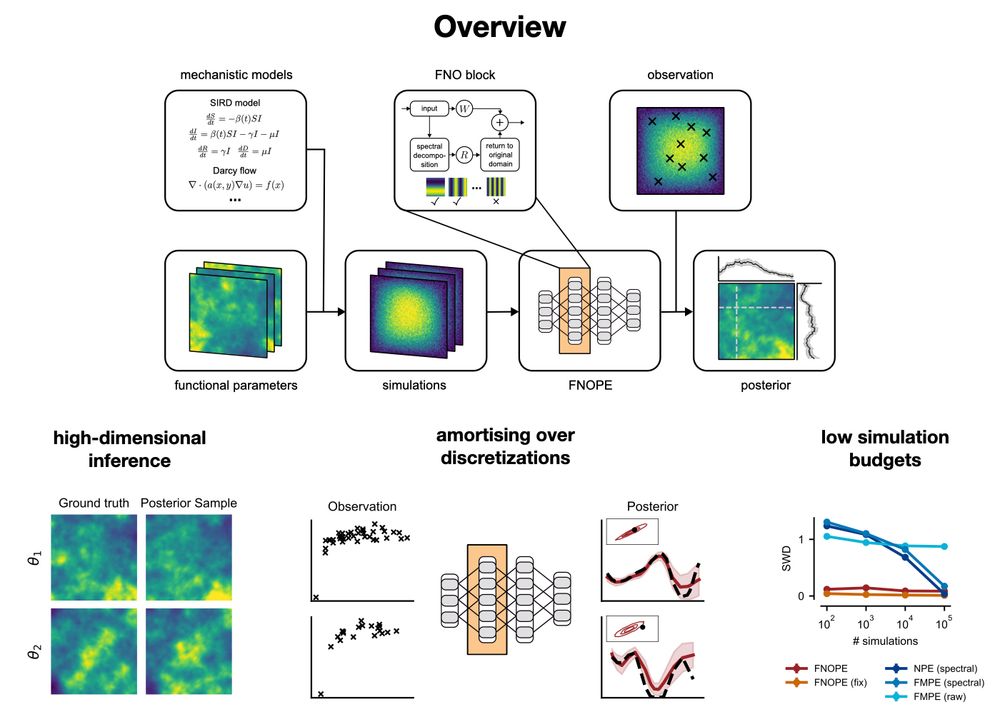

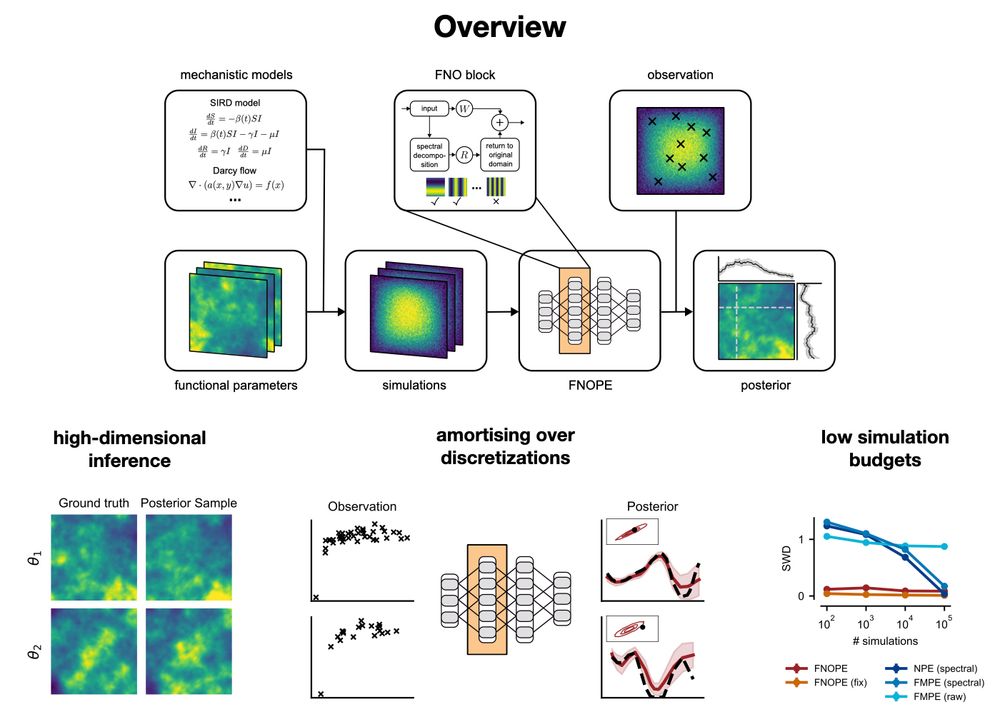

First, we introduce FNOPE, a new simulation-based inference approach for efficiently and flexibly inferring function-valued parameters. By @gmoss13.bsky.social, @leahsmuhle.bsky.social, Reinhard Drews, @jakhmack.bsky.social and @coschroeder.bsky.social 2/11

01.12.2025 16:16

Our group is at NeurIPS and EurIPS this year with four papers and one workshop poster. If you are curious about SBI with AutoML, SBI with foundation models, SBI on function spaces, or differentiable simulation with Jaxley, have a look below 👇 1/11

01.12.2025 16:16

Jobs - mackelab

The MackeLab is a research group at the Excellence Cluster Machine Learning at Tübingen University!

We are looking for a Research Engineer (E13 TV-L) to work at the intersection of #ML and #compneuro! 🤖🧠

Help us build large-scale bio-inspired neural networks, write high-quality research code, and contribute to open-source tools like jaxley, sbi, and flyvis 🪰.

More info: www.mackelab.org/jobs/

28.11.2025 13:54

Maren joined the lab as a PhD student in October to work on connectome-constrained models of neural activity and behavior in the fruit fly. She holds an MSc in Computational Neuroscience from the BCCN Berlin. 6/7

28.11.2025 10:26

Byoungsoo (@byoungsookim.bsky.social) joined as a research scientist in October, upon finishing his Master's in Computational Neuroscience in the lab. He is working on modeling optomotor response circuits with a 3D compound eye model of the fruit fly. 5/7

28.11.2025 10:26

Isaac first joined as a master’s thesis student working on representation learning for connectome-constrained models. Now a PhD student (since July), he is applying this to models of the fruit fly. He previously did an MSc at AIMS South Africa. 4/7

28.11.2025 10:26

Nicolas previously worked on computational neuroscience and NLP projects at EPFL. He joined the lab in June as a PhD student and is interested in building foundation models for neurophysiology data and applying LLMs to scientific discovery. 3/7

28.11.2025 10:26

Stefan (@stewah.bsky.social) joined the lab as a PhD student in June. He completed his Bachelor’s and Master’s degrees in Physics at Heidelberg University. He works on using LLMs to discover scientific models. 2/7

28.11.2025 10:26

MackeLab has grown! 🎉 A warm welcome to 5(!) brilliant and fun new PhD students and research scientists who joined our lab in the past year. We can’t wait to do great science together, and we are already having a great time! 🤖🧠 Meet them in the thread 👇 1/7

28.11.2025 10:26

..., @ppjgoncalves.bsky.social, @janmatthis.bsky.social, @coschroeder.bsky.social, @jakhmack.bsky.social

21.11.2025 15:08

Simulation-Based Inference: A Practical Guide

A central challenge in many areas of science and engineering is to identify model parameters that are consistent with prior knowledge and empirical data. Bayesian inference offers a principled framewo...

Led by @deismic.bsky.social and @janboelts.bsky.social with Peter Steinbach, @gmoss13.bsky.social, @tommoral.bsky.social, Manuel Gloeckler, @plcrodrigues.bsky.social, Julia Linhart, @lappalainenjk.bsky.social, @bkmi.bsky.social,...

21.11.2025 15:08

Finally, we built a database of SBI applications across fields as a community resource. We catalogued 100+ papers, available in an interactive web app. Users can explore what's been done, find similar problems to theirs, and contribute new applications: sbi-applications-explorer.streamlit.app

21.11.2025 15:08

The appendix covers advanced topics and recent developments: sequential methods, model misspecification, function-valued parameters, score-based methods, flow matching. Extended practical guidelines help practitioners navigate the evolving SBI landscape.

21.11.2025 15:08

We also cover a range of diagnostic tools that assess whether the posterior distributions returned by SBI are trustworthy. We discuss posterior predictive checks and calibration tests, along with their advantages and limitations.

21.11.2025 15:08
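As an illustration of what a calibration test checks, here is a minimal sketch of simulation-based calibration (SBC), one common such test. It is not necessarily the exact procedure described in the guide. For each simulated dataset, we count how many posterior samples fall below the true parameter. If the posterior is calibrated, these ranks are uniform. The toy model (Gaussian prior, Gaussian likelihood, hence an exact conjugate posterior), the deliberately overconfident posterior, and all function names are hypothetical, chosen so the expected behaviour is known in advance.

```python
import numpy as np


def sbc_ranks(sample_prior, simulate, sample_posterior, n_sims, n_post, rng):
    """Rank of each true parameter among n_post posterior samples.

    For a calibrated posterior the ranks are uniform on {0, ..., n_post}.
    """
    ranks = np.empty(n_sims, dtype=int)
    for i in range(n_sims):
        theta = sample_prior(rng)
        x = simulate(theta, rng)
        post = sample_posterior(x, n_post, rng)
        ranks[i] = np.sum(post < theta)
    return ranks


def rank_chi2(ranks, n_post, n_bins=10):
    """Chi-square statistic of binned ranks against a uniform histogram.

    Assumes (n_post + 1) is divisible by n_bins so that all bins are equally wide.
    """
    counts = np.bincount(ranks * n_bins // (n_post + 1), minlength=n_bins)
    expected = len(ranks) / n_bins
    return np.sum((counts - expected) ** 2 / expected)


SIGMA2 = 0.5  # hypothetical observation-noise variance


def sample_prior(rng):
    return rng.normal()  # theta ~ N(0, 1)


def simulate(theta, rng):
    return theta + np.sqrt(SIGMA2) * rng.normal()  # x ~ N(theta, SIGMA2)


def exact_posterior(x, n, rng):
    # Conjugate Gaussian posterior: N(x / (1 + SIGMA2), SIGMA2 / (1 + SIGMA2)).
    std = np.sqrt(SIGMA2 / (1 + SIGMA2))
    return rng.normal(x / (1 + SIGMA2), std, size=n)


def overconfident_posterior(x, n, rng):
    # Same mean, standard deviation shrunk to 30%: a miscalibrated approximation.
    std = 0.3 * np.sqrt(SIGMA2 / (1 + SIGMA2))
    return rng.normal(x / (1 + SIGMA2), std, size=n)


rng = np.random.default_rng(1)
ranks_ok = sbc_ranks(sample_prior, simulate, exact_posterior, 2000, 99, rng)
ranks_bad = sbc_ranks(sample_prior, simulate, overconfident_posterior, 2000, 99, rng)
print(rank_chi2(ranks_ok, 99), rank_chi2(ranks_bad, 99))
```

The exact posterior should give a small statistic. The overconfident one piles ranks into the lowest and highest bins (a U-shaped histogram), which gives a very large statistic. In practice the rank histogram is usually inspected visually as well.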

Neuro-AI PhD at @c3neuro.bsky.social and @mackelab.bsky.social, Tübingen AI Center

Across many scientific disciplines, researchers in the Bernstein Network connect experimental approaches with theoretical models to explore brain function.

Master's student in Computational Neuroscience @gtc-tuebingen.bsky.social | Research Assistant at @mackelab.bsky.social | Formerly RA at RoLiLab @mpicybernetics.bsky.social & NeLy @epfl-brainmind.bsky.social

Research scientist at Google in Zurich

http://research.google/teams/connectomics

PhD from @mackelab.bsky.social

We are a joint partnership of University of Tübingen and Max Planck Institute for Intelligent Systems. We aim at developing robust learning systems and societally responsible AI. https://tuebingen.ai/imprint

https://tuebingen.ai/privacy-policy#c1104

Dedicated to helping neuroscientists stay current and build connections. Subscribe to receive the latest news and perspectives on neuroscience: www.thetransmitter.org/newsletters/

Understanding life. Advancing health.

Looking at protists with the eyes of a theoretical neuroscientist.

Looking at brains with the eyes of a protistologist.

(I also like axon initial segments)

Forthcoming book: The Brain, in Theory.

http://romainbrette.fr/

Neuroscientist and physician specializing in epilepsy. Interested in brain function and translating discoveries to improve patient care. https://liebelab.github.io

Led by PI @stefanieliebe.bsky.social : We use #AI tools to analyze neural activity and behavior in humans, bridging basic research on cognition and clinical applications, with a focus on #epilepsy. Based in Tübingen, Germany.

https://liebelab.github.io

PhD student at @mackelab.bsky.social

Full Professor of Computational Statistics at TU Dortmund University

Scientist | Statistician | Bayesian | Author of brms | Member of the Stan and BayesFlow development teams

Website: https://paulbuerkner.com

Opinions are my own

Asst. Prof. University of Amsterdam, rock climber, husband, father. Model-based (Mathematical) Cognitive Neuroscientist. I study decision-making, EEG, 🧠, statistical methods, etc.

🇺🇦🇪🇺

Professor of Statistics and Machine Learning at UCL Statistical Science. Interested in computational statistics, machine learning and applications in the sciences & engineering.

Full Professor at @deptmathgothenburg.bsky.social | simulation-based inference | Bayes | stochastic dynamical systems | https://umbertopicchini.github.io/

Machine learner & physicist. At CuspAI, I teach machines to discover materials for carbon capture. Previously Qualcomm AI Research, NYU, Heidelberg U.

Posting about the One World Approximate Bayesian Inference (ABI) Seminar, details at https://warwick.ac.uk/fac/sci/statistics/news/upcoming-seminars/abcworldseminar/