the logging is done in rstan, so a fix, if needed, will have to happen there, I assume.

01.06.2025 18:21 — 👍 1 🔁 0 💬 0 📌 0

there is not, unfortunately. I didn't have time to look into it any further.

23.05.2025 18:24 — 👍 1 🔁 0 💬 1 📌 0

Image of a graduating PhD student in the trending Studio Ghibli style.

I defended my PhD last week ✨

Huge thanks to:

• My supervisors @paulbuerkner.com @stefanradev.bsky.social @avehtari.bsky.social 👥

• The committee @ststaab.bsky.social @mniepert.bsky.social 📝

• The institutions @ellis.eu @unistuttgart.bsky.social @aalto.fi 🏫

• My wonderful collaborators 🧡

#PhDone 🎓

27.03.2025 18:31 — 👍 119 🔁 2 💬 18 📌 1

can you post a reprex on github?

21.03.2025 19:29 — 👍 1 🔁 0 💬 1 📌 0

What advice do folks have for organising projects that will be deployed to production? How do you organise your directories? What do you do if you're deploying multiple "things" (e.g. an app and an api) from the same project?

27.02.2025 14:15 — 👍 102 🔁 30 💬 27 📌 4

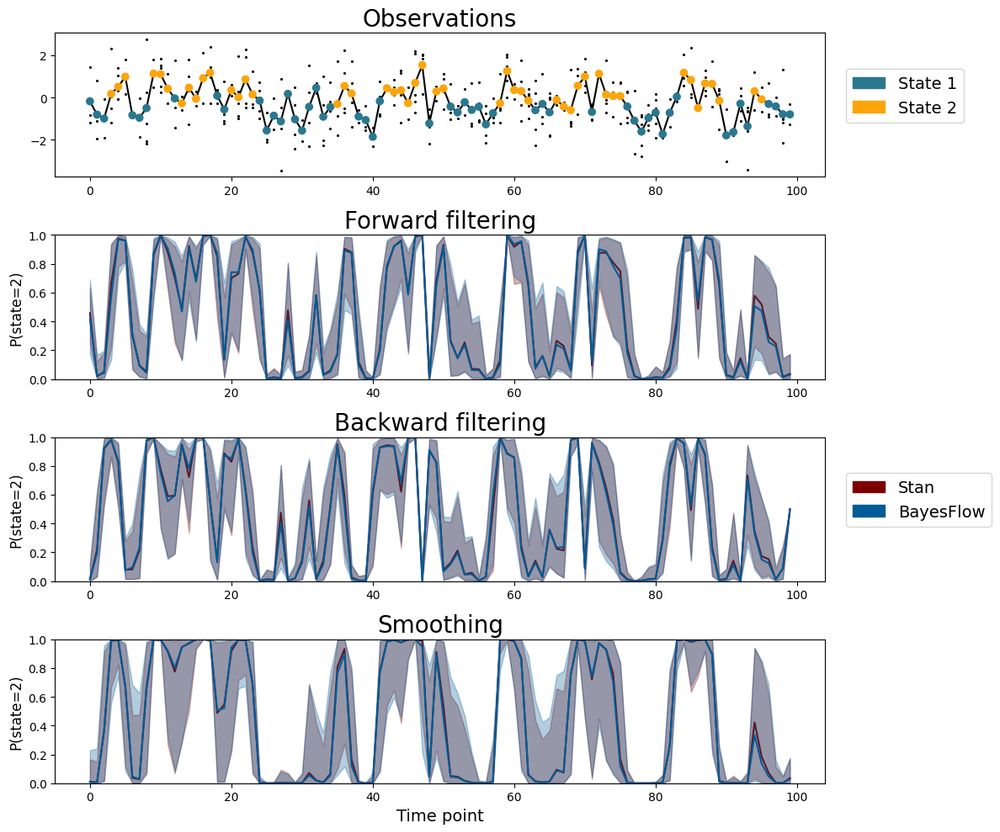

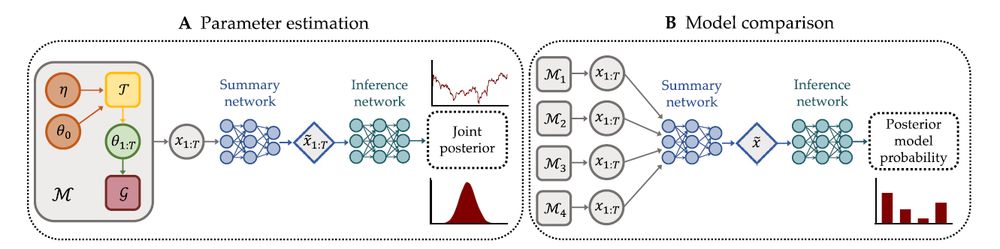

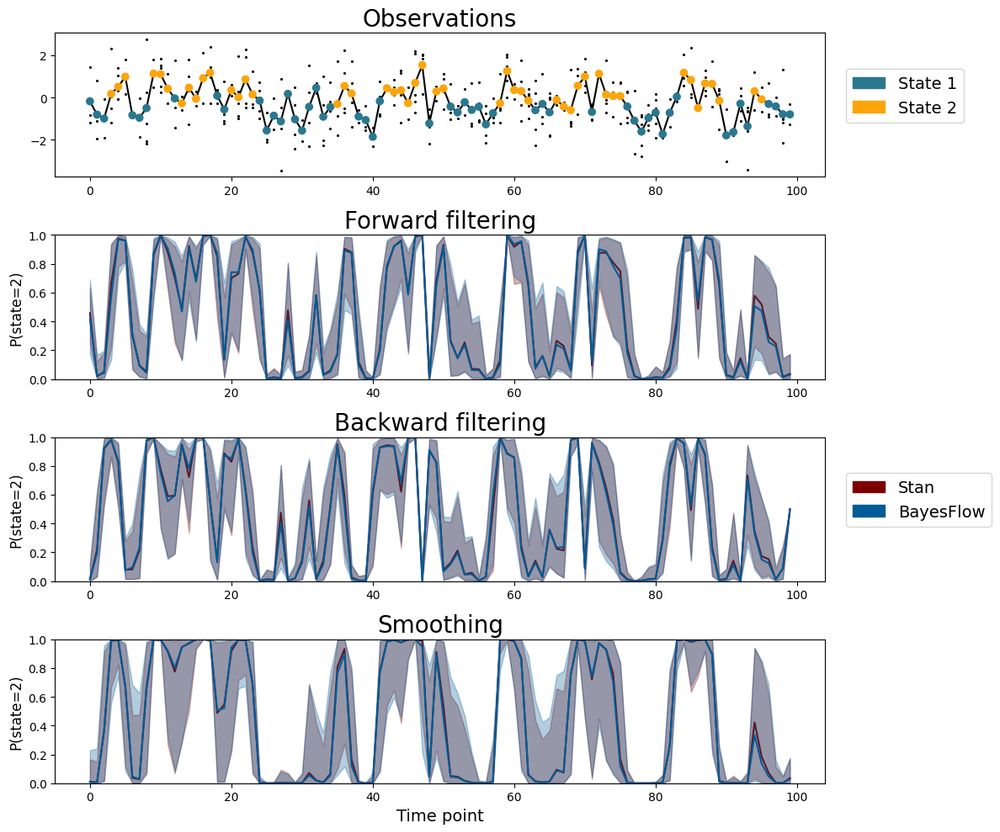

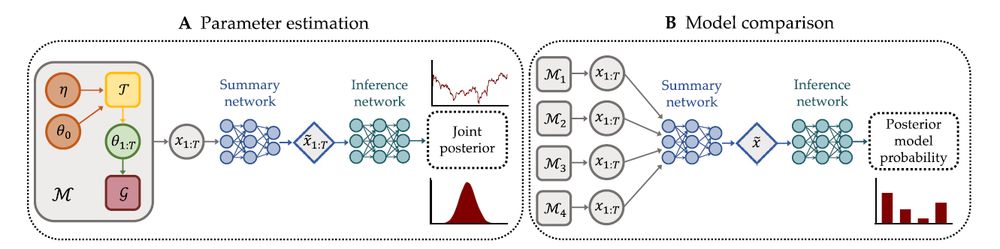

Amortized inference for finite mixture models ✨

The amortized approximator from BayesFlow closely matches the results of expensive-but-trustworthy HMC with Stan.

Check out the preprint and code by @kucharssim.bsky.social and @paulbuerkner.com 👇

11.02.2025 08:53 — 👍 16 🔁 1 💬 0 📌 0

Finite mixture models are useful when data comes from multiple latent processes.

BayesFlow allows:

• Approximating the joint posterior of model parameters and mixture indicators

• Inferences for independent and dependent mixtures

• Amortization for fast and accurate estimation

📄 Preprint

💻 Code

11.02.2025 08:48 — 👍 28 🔁 6 💬 0 📌 1
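To make "mixture indicators" concrete, here is a minimal Python sketch (not BayesFlow code) for an independent two-component Gaussian mixture with *fixed* parameters. It computes the marginal log-likelihood and, for each observation, p(z_i = k | y_i, θ). In a full Bayesian analysis these indicator probabilities would also be averaged over the posterior of θ, and dependent mixtures (e.g. hidden Markov structure) need more than this. The function names are hypothetical.

```python
import math

def normal_logpdf(y, mu, sigma):
    """Log density of N(mu, sigma^2) evaluated at y."""
    return -0.5 * math.log(2 * math.pi) - math.log(sigma) - 0.5 * ((y - mu) / sigma) ** 2

def logsumexp(xs):
    """Numerically stable log(sum(exp(x) for x in xs))."""
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))

def mixture_loglik_and_indicators(y, weights, mus, sigmas):
    """Return (total log-likelihood, per-observation p(z_i = k | y_i, params))."""
    total = 0.0
    responsibilities = []
    for yi in y:
        # log(pi_k) + log N(y_i | mu_k, sigma_k) for every component k
        terms = [math.log(w) + normal_logpdf(yi, m, s)
                 for w, m, s in zip(weights, mus, sigmas)]
        lse = logsumexp(terms)  # log p(y_i | params), marginalising over z_i
        total += lse
        responsibilities.append([math.exp(t - lse) for t in terms])
    return total, responsibilities
```

For example, with components centred at -2 and 3, a point at -2.0 is assigned almost entirely to the first component, while a point at 0.5 (equidistant, equal weights) gets probability 0.5 for each.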

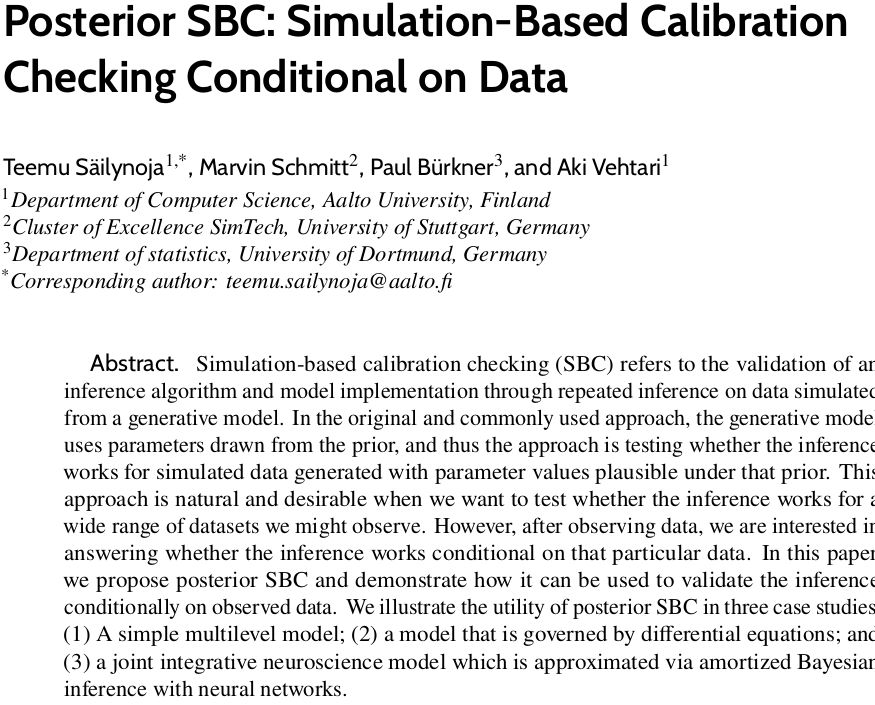

Title: Posterior SBC: Simulation-Based Calibration Checking Conditional on Data

Authors: Teemu Säilynoja, Marvin Schmitt, Paul Bürkner, Aki Vehtari

Abstract: Simulation-based calibration checking (SBC) refers to the validation of an inference algorithm and model implementation through repeated inference on data simulated from a generative model. In the original and commonly used approach, the generative model uses parameters drawn from the prior, and thus the approach is testing whether the inference works for simulated data generated with parameter values plausible under that prior. This approach is natural and desirable when we want to test whether the inference works for a wide range of datasets we might observe. However, after observing data, we are interested in answering whether the inference works conditional on that particular data. In this paper, we propose posterior SBC and demonstrate how it can be used to validate the inference conditionally on observed data. We illustrate the utility of posterior SBC in three case studies: (1) A simple multilevel model; (2) a model that is governed by differential equations; and (3) a joint integrative neuroscience model which is approximated via amortized Bayesian inference with neural networks.

If you know simulation based calibration checking (SBC), you will enjoy our new paper "Posterior SBC: Simulation-Based Calibration Checking Conditional on Data" with Teemu Säilynoja, @marvinschmitt.com and @paulbuerkner.com

arxiv.org/abs/2502.03279 1/7

06.02.2025 10:10 — 👍 46 🔁 15 💬 4 📌 1
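A minimal Python sketch of the SBC loop, using a conjugate normal model with known noise so the "inference algorithm" is the exact posterior and the ranks should be uniform on {0, ..., L}. The posterior-SBC branch is my reading of the idea, stated as an assumption: draw the "true" θ from p(θ | y_obs) instead of the prior, simulate new data, and refit on the observed data augmented with the simulated data. All names are hypothetical; this is not the paper's code.

```python
import random

def normal_posterior(prior_mean, prior_sd, data, sigma):
    """Exact posterior (mean, sd) for a normal mean with known noise sd `sigma`."""
    precision = 1.0 / prior_sd ** 2 + len(data) / sigma ** 2
    mean = (prior_mean / prior_sd ** 2 + sum(data) / sigma ** 2) / precision
    return mean, precision ** -0.5

def sbc_ranks(n_reps, n_draws, n_obs, sigma, prior_mean, prior_sd, y_obs=None, seed=1):
    """Return SBC ranks (number of posterior draws below the true theta).

    y_obs=None -> standard SBC (theta drawn from the prior).
    y_obs given -> assumed posterior-SBC variant (theta drawn from p(theta | y_obs),
                   inference run on y_obs plus the simulated data).
    """
    rng = random.Random(seed)
    if y_obs is None:
        gen_mean, gen_sd = prior_mean, prior_sd
        y_obs = []
    else:
        gen_mean, gen_sd = normal_posterior(prior_mean, prior_sd, y_obs, sigma)
    ranks = []
    for _ in range(n_reps):
        theta = rng.gauss(gen_mean, gen_sd)                  # "true" parameter
        y_sim = [rng.gauss(theta, sigma) for _ in range(n_obs)]
        # Inference under test: here the exact conjugate posterior.
        post_mean, post_sd = normal_posterior(prior_mean, prior_sd, y_obs + y_sim, sigma)
        draws = [rng.gauss(post_mean, post_sd) for _ in range(n_draws)]
        ranks.append(sum(d < theta for d in draws))
    return ranks
```

Swapping the exact posterior for an approximate one (e.g. an amortized network) and checking the rank histogram for non-uniformity is the actual test; with the exact posterior the histogram should look flat up to Monte Carlo noise.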

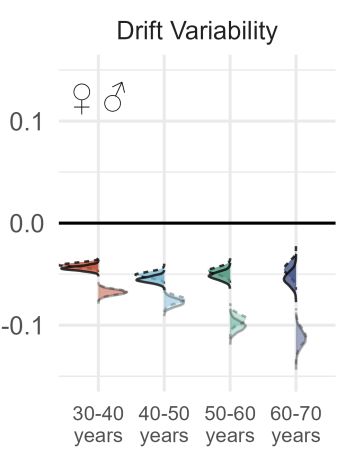

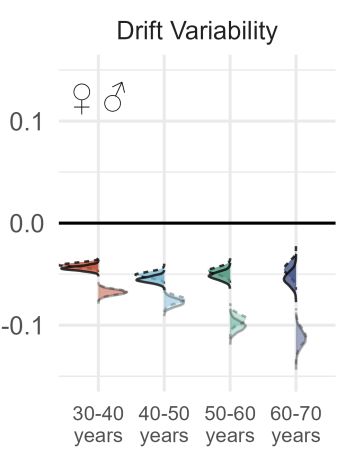

A study with 5M+ data points explores the link between cognitive parameters and socioeconomic outcomes: The stability of processing speed was the strongest predictor.

BayesFlow facilitated efficient inference for complex decision-making models, scaling Bayesian workflows to big data.

🔗Paper

03.02.2025 12:21 — 👍 18 🔁 6 💬 0 📌 0

cool idea! I will think about how to achieve something like this. can you open an issue on GitHub so I don't forget about it?

29.01.2025 08:26 — 👍 1 🔁 0 💬 1 📌 0

Join us this Thursday for a talk on efficient mixture and multilevel models with neural networks by @paulbuerkner.com at the new @approxbayesseminar.bsky.social!

28.01.2025 05:06 — 👍 11 🔁 4 💬 0 📌 0

Paul Bürkner (TU Dortmund University) will give our next talk. It will be about "Amortized Mixture and Multilevel Models" and is scheduled for Thursday, 30 January, at 11am. To receive the link to join, sign up at listserv.csv.warwick...

14.01.2025 12:00 — 👍 16 🔁 4 💬 0 📌 3

Paul Bürkner (@paulbuerkner.com) will talk about amortized Bayesian multilevel models in the next Approximate Bayes Seminar on January 30 ⭐️

Sign up to the seminar’s mailing list below to get the meeting link 👇

14.01.2025 12:43 — 👍 21 🔁 4 💬 1 📌 0

Universities and research institutions leave platform X - Together for diversity, freedom and science

More than 60 German universities and research outfits are announcing that they will end their activities on Twitter.

Including my alma mater, the University of Münster.

HT @thereallorenzmeyer.bsky.social nachrichten.idw-online.de/2025/01/10/h...

10.01.2025 12:02 — 👍 551 🔁 134 💬 19 📌 15

what are your best tips to fit shifted lognormal models (in #brms / Stan)? I'm using:

- checking the long tails (a few long RTs make the tail estimation unwieldy)

- low initial values for ndt

- careful prior checks

- pathfinder estimation of initial values

still, with increasing data, the chains get stuck

10.01.2025 10:43 — 👍 7 🔁 5 💬 2 📌 0
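Why low initial values for ndt help: in a shifted lognormal (as in the brms `shifted_lognormal` family, where the shift is called `ndt`), y = ndt + exp(N(mu, sigma²)), so the density is zero whenever y ≤ ndt. A single RT at or below ndt sends the log-likelihood to -inf. A minimal Python sketch (not the brms/Stan implementation; the RTs in the usage note are hypothetical):

```python
import math

def shifted_lognormal_logpdf(y, mu, sigma, ndt):
    """Log density of y = ndt + exp(N(mu, sigma^2)); -inf when y <= ndt."""
    z = y - ndt
    if z <= 0:
        return -math.inf  # outside the support: ndt must lie below every RT
    return (-math.log(z) - math.log(sigma) - 0.5 * math.log(2 * math.pi)
            - 0.5 * ((math.log(z) - mu) / sigma) ** 2)

def total_loglik(rts, mu, sigma, ndt):
    """Sum of log densities over all RTs (becomes -inf if any RT <= ndt)."""
    return sum(shifted_lognormal_logpdf(y, mu, sigma, ndt) for y in rts)
```

With hypothetical RTs `[0.42, 0.55, 0.61, 1.30, 2.90]`, `total_loglik(rts, -0.5, 0.6, 0.2)` is finite, whereas any ndt ≥ 0.42 gives -inf. The more data you add, the closer the smallest RT tends to sit to the true shift, which is one plausible reason chains become harder to initialise and move.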

I think this should be documented in the brms_families vignette. perhaps you can double check if the information you are looking for is indeed there.

09.01.2025 17:54 — 👍 1 🔁 0 💬 1 📌 0

happy to work with you on that if we find the time :-)

03.01.2025 13:00 — 👍 2 🔁 0 💬 0 📌 0

indeed, I saw it at StanCon, but I am not sure anymore how production-ready the method was.

02.01.2025 17:26 — 👍 2 🔁 0 💬 0 📌 0

something like this, yes. but ensuring the positive definiteness of arbitrarily constrained correlation matrices is not trivial, so there may need to be some restrictions on which correlation patterns are allowed.

02.01.2025 07:53 — 👍 3 🔁 0 💬 1 📌 0
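A small Python illustration of the positive-definiteness problem: fixing one residual correlation to zero while the others are large can produce a matrix that is not a valid correlation matrix. The check below attempts a Cholesky factorisation and fails if any pivot is non-positive. This is only a diagnostic sketch, not how brms or Stan parameterise correlation matrices; the helper names are hypothetical.

```python
import math

def is_positive_definite(matrix, tol=1e-12):
    """Attempt a Cholesky factorisation; return True iff every pivot is positive."""
    n = len(matrix)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                pivot = matrix[i][i] - s
                if pivot <= tol:
                    return False  # factorisation breaks down: not positive definite
                L[i][j] = math.sqrt(pivot)
            else:
                L[i][j] = (matrix[i][j] - s) / L[j][j]
    return True

def correlation_matrix(r12, r13, r23):
    """3x3 correlation matrix from its three off-diagonal entries."""
    return [[1.0, r12, r13],
            [r12, 1.0, r23],
            [r13, r23, 1.0]]
```

With r12 = r23 = 0.9 and r13 constrained to 0, the check fails (the determinant is 1 - 0.81 - 0.81 = -0.62), while r12 = r23 = 0.5 with r13 = 0 passes. So "which correlations may be zeroed" interacts with the values of the free ones, which is why some restrictions on allowed patterns seem hard to avoid.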

I already thought about this. a complete SEM syntax in brms would support selective error correlations by generalizing set_rescor()

23.12.2024 16:47 — 👍 6 🔁 0 💬 2 📌 0

Full Luxury Bayesian Structural Equation Modeling with brms

OK, here is a very rough draft of a tutorial for #Bayesian #SEM using #brms for #rstats. It needs work, polish, has a lot of questions in it, and I need to add a references section. But, I think a lot of folk will find this useful, so.... jebyrnes.github.io/bayesian_sem... (use issues for comments!)

21.12.2024 19:49 — 👍 226 🔁 61 💬 9 📌 1

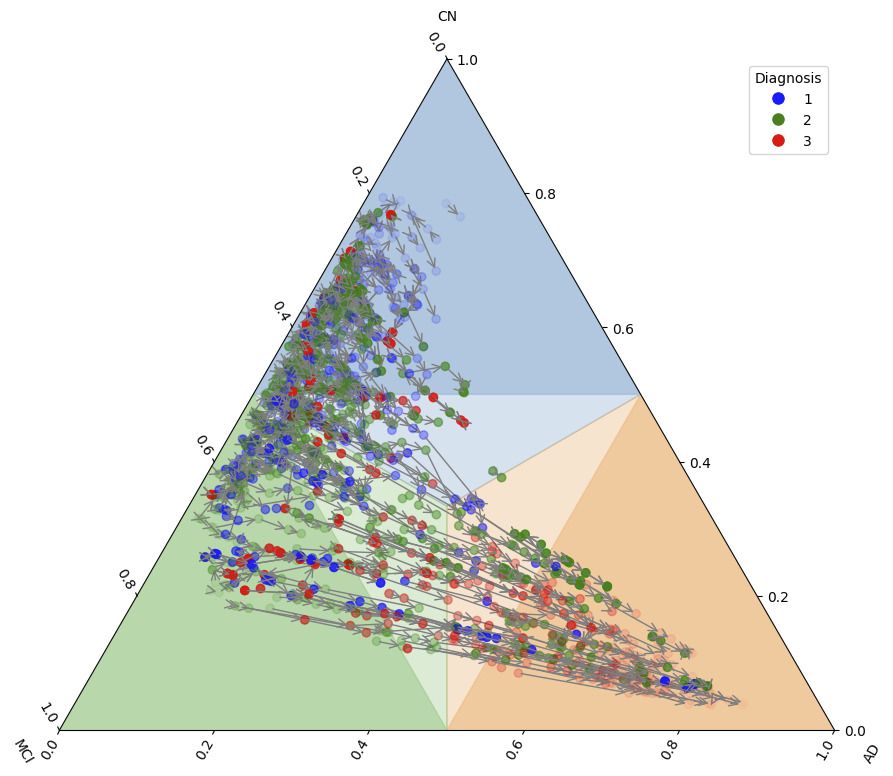

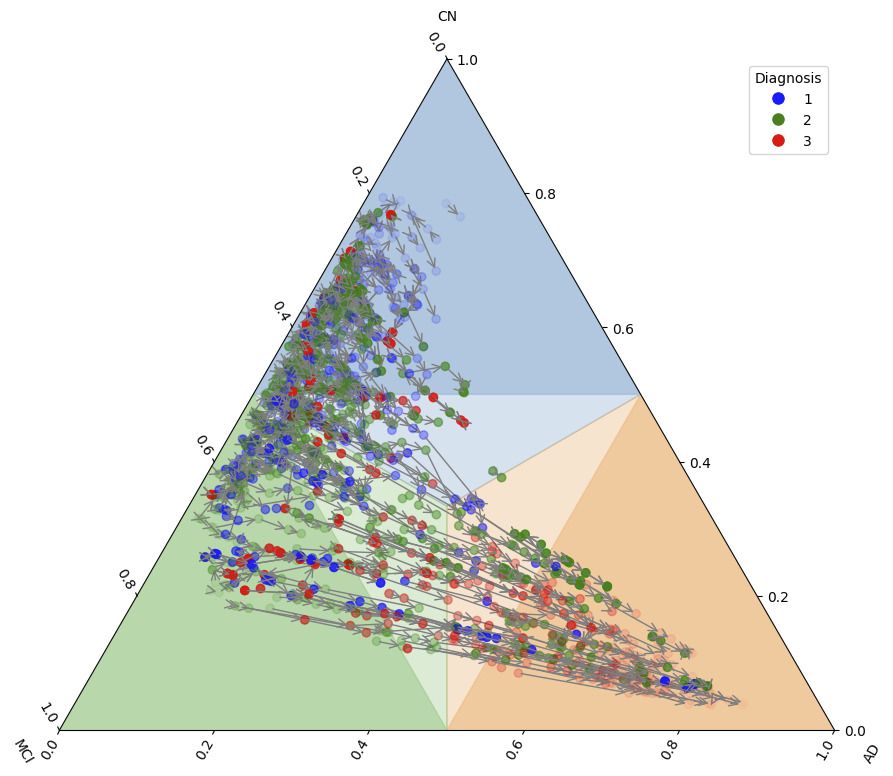

A ternary plot visualizing data points classified into three groups: CN (Cognitively Normal), MCI (Mild Cognitive Impairment), and AD (Alzheimer's Disease dementia). The triangular axes represent predicted probability corresponding to each diagnosis category, with the corners labeled CN (top), MCI (bottom left), and AD (bottom right). Each point is colored according to its real diagnosis group (blue for CN, green for MCI and red for AD). Trajectories of predicted probabilities pertaining to the same subject are denoted with arrows connecting the points. Shaded regions in blue, green, and orange further highlight distinct areas of the plot associated with CN, MCI, and AD, respectively. A legend on the right identifies the diagnosis categories by color.

Ternary plots to represent data in a simplex, yay or nay? #stats #statistics #neuroscience

17.12.2024 23:08 — 👍 14 🔁 2 💬 3 📌 2
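For reference, a ternary plot is just a linear map from a probability vector to a point in an equilateral triangle (barycentric coordinates). A minimal Python sketch using the corner layout described above (MCI bottom left, AD bottom right, CN top); the function name is hypothetical:

```python
import math

def ternary_xy(p_cn, p_mci, p_ad):
    """Map (CN, MCI, AD) probabilities to 2D coordinates in a triangle with
    MCI at (0, 0), AD at (1, 0) and CN at (0.5, sqrt(3)/2)."""
    total = p_cn + p_mci + p_ad
    if total <= 0:
        raise ValueError("probabilities must sum to a positive number")
    # Renormalise so small rounding errors still land on the simplex.
    p_cn, p_mci, p_ad = p_cn / total, p_mci / total, p_ad / total
    # Weighted average of the corner coordinates (MCI's corner contributes 0).
    x = p_ad * 1.0 + p_cn * 0.5
    y = p_cn * math.sqrt(3) / 2
    return x, y
```

Because the map is linear, a straight arrow between two visits of the same subject in the plot corresponds exactly to linear interpolation between their predicted probability vectors, and the uniform prediction (1/3, 1/3, 1/3) lands at the centroid.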

Writing is thinking.

It’s not a part of the process that can be skipped; it’s the entire point.

12.12.2024 15:08 — 👍 6861 🔁 1802 💬 159 📌 150

1️⃣ An agent-based model simulates a dynamic population of professional speed climbers.

2️⃣ BayesFlow handles amortized parameter estimation in the SBI setting.

📣 Shoutout to @masonyoungblood.bsky.social & @sampassmore.bsky.social

📄 Preprint: osf.io/preprints/ps...

💻 Code: github.com/masonyoungbl...

10.12.2024 01:34 — 👍 41 🔁 6 💬 0 📌 0

Neural superstatistics are a framework for probabilistic models with time-varying parameters:

⋅ Joint estimation of stationary and time-varying parameters

⋅ Amortized parameter inference and model comparison

⋅ Multi-horizon predictions and leave-future-out CV

📄 Paper 1

📄 Paper 2

💻 BayesFlow Code

06.12.2024 12:21 — 👍 21 🔁 4 💬 0 📌 1
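To illustrate the "stationary vs. time-varying parameters" split, here is a minimal simulator in Python. It assumes a Gaussian random walk as the transition model for a single time-varying mean; the step and noise standard deviations are the stationary parameters. Neural superstatistics would learn to invert simulators of this general shape, but this toy model and its names are hypothetical, not the BayesFlow code.

```python
import random

def simulate_superstatistical(n_steps, theta0, step_sd, noise_sd, seed=0):
    """Simulate observations y_t ~ N(theta_t, noise_sd^2) where the time-varying
    parameter follows theta_{t+1} = theta_t + N(0, step_sd^2).
    step_sd and noise_sd are stationary (time-invariant) parameters."""
    rng = random.Random(seed)
    thetas, ys = [], []
    theta = theta0
    for _ in range(n_steps):
        thetas.append(theta)
        ys.append(rng.gauss(theta, noise_sd))   # observation given current parameter
        theta = theta + rng.gauss(0.0, step_sd)  # random-walk transition
    return thetas, ys
```

Setting `step_sd = 0` recovers an ordinary stationary model, which is one way to see the time-varying model as a generalisation rather than a different model class.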

I think the link you cited points to the wrong paper.

07.12.2024 08:42 — 👍 1 🔁 0 💬 1 📌 0

yeah indeed, it seems we don't have it yet. but perhaps it may be worthwhile to implement? I will ask on the Stan forums.

05.12.2024 08:51 — 👍 2 🔁 0 💬 0 📌 0

I don't know. didn't check yet

05.12.2024 00:10 — 👍 1 🔁 0 💬 1 📌 0

I am always looking for count data distributions that can handle both under- and overdispersion without being a computational nightmare. PRs are welcome :)

04.12.2024 23:20 — 👍 3 🔁 1 💬 1 📌 0
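One family often mentioned in this context is the Conway-Maxwell-Poisson, with P(Y = y) ∝ λ^y / (y!)^ν: ν = 1 gives the Poisson, ν > 1 underdispersion and ν < 1 overdispersion. The "computational nightmare" part is visible in a minimal Python sketch: the normalising constant is an infinite series with no closed form and has to be truncated. This is only an illustration, not a proposal for how brms should implement it.

```python
import math

def com_poisson_moments(lam, nu, tol=1e-12, max_terms=10_000):
    """Mean and variance of a Conway-Maxwell-Poisson(lam, nu) distribution.

    Z = sum_y lam**y / (y!)**nu has no closed form, so the series is truncated
    once terms are decreasing and negligible relative to the largest term.
    """
    log_terms = []
    peak = -math.inf
    for y in range(max_terms):
        lt = y * math.log(lam) - nu * math.lgamma(y + 1)  # log(lam^y / (y!)^nu)
        log_terms.append(lt)
        peak = max(peak, lt)
        if y > 0 and lt < log_terms[-2] and lt - peak < math.log(tol):
            break
    weights = [math.exp(lt - peak) for lt in log_terms]  # rescaled for stability
    z = sum(weights)
    probs = [w / z for w in weights]
    mean = sum(y * p for y, p in enumerate(probs))
    var = sum((y - mean) ** 2 * p for y, p in enumerate(probs))
    return mean, var
```

`com_poisson_moments(4.0, 1.0)` reproduces the Poisson (mean and variance both 4), ν = 2 gives a variance-to-mean ratio below 1 and ν = 0.5 one above 1. In a sampler, this truncated sum (and its gradient) has to be evaluated for every observation at every step, which is where the cost comes from.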
