Very happy to see this preprint out! The amazing @danwang7.bsky.social was on fire sharing this work at #ECVP2025, gathering loads of attention, and here you can find the whole thing!

Using RIFT we reveal how the competition between top-down goals and bottom-up saliency unfolds within visual cortex.

28.08.2025 10:09 — 👍 12 🔁 4 💬 0 📌 0

I'll show some (I think) cool stuff about how we can measure the phenomenology of synesthesia in a physiological way at #ECVP - Color II, atrium maximum, 9:15, Thursday.

Say hi and show your colleagues that you're one of the dedicated ones by getting up early on the last day!

27.08.2025 21:13 — 👍 9 🔁 1 💬 0 📌 0

And now without Bluesky making the background black...

24.08.2025 20:57 — 👍 16 🔁 6 💬 0 📌 0

#ECVP2025 starts with a fully packed room!

I'll show data demonstrating that synesthetic perception is perceptual, automatic, and effortless.

Join my talk (Thursday, early morning.., Color II) to learn how the qualia of synesthesia can be inferred from pupil size.

Join and (or) say hi!

24.08.2025 16:28 — 👍 18 🔁 3 💬 1 📌 1

Excited to give a talk at #ECVP2025 (Tuesday morning, Attention II) on how spatially biased attention during VWM does not boost excitability the same way it does when attending the external world, using Rapid Invisible Frequency Tagging (RIFT). @attentionlab.bsky.social @ecvp.bsky.social

24.08.2025 13:13 — 👍 14 🔁 3 💬 0 📌 1

Excited to share that I’ll be presenting my poster at #ECVP2025 on August 26th (afternoon session)!

🧠✨ Our work focused on the dynamic competition between bottom-up saliency and top-down goals in early visual cortex, using Rapid Invisible Frequency Tagging.

@attentionlab.bsky.social @ecvp.bsky.social

24.08.2025 13:28 — 👍 9 🔁 3 💬 0 📌 0

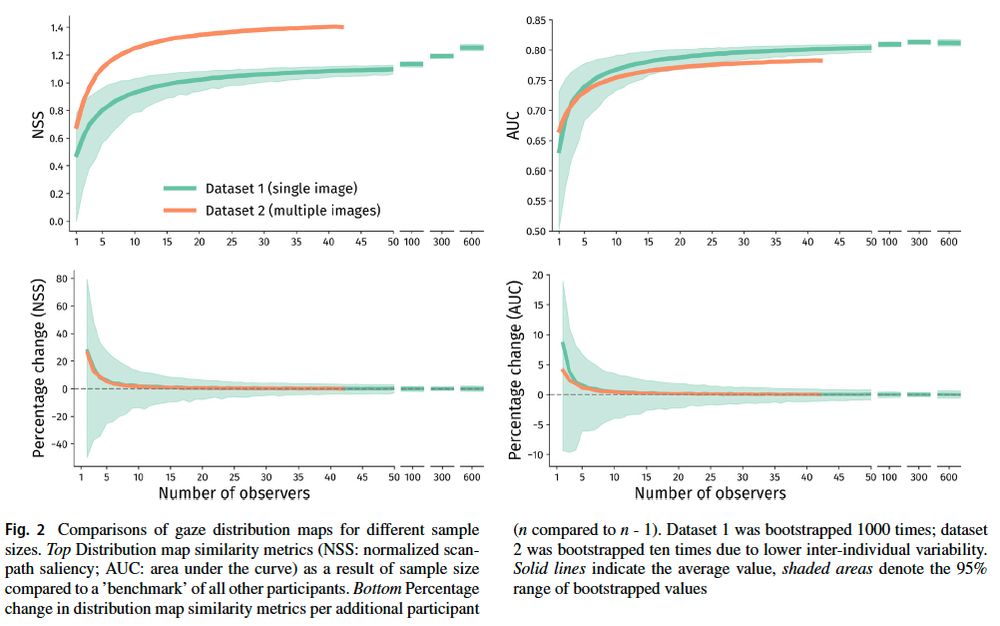

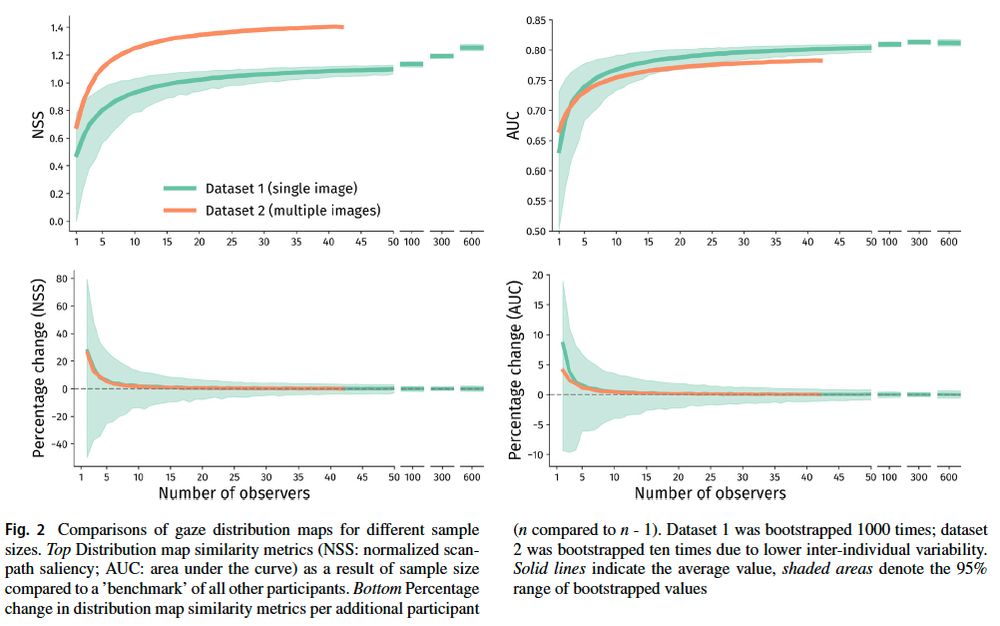

Data saturation for gaze heatmaps. Initially, each additional participant brings the total NSS or AUC (measures of heatmap similarity) much closer to that of the full sample. However, the returns diminish at higher n.

Gaze heatmaps are popular, especially among eye-tracking beginners and in many applied domains. How many participants should be tested?

It depends, of course, but our guidelines help you navigate this in an informed way.

Out now in BRM (free) doi.org/10.3758/s134...

@psychonomicsociety.bsky.social

29.07.2025 07:37 — 👍 9 🔁 2 💬 1 📌 0

Thrilled to share that I successfully defended my PhD dissertation on Monday June 16th!

The dissertation is available here: doi.org/10.33540/2960

18.06.2025 14:21 — 👍 16 🔁 2 💬 3 📌 1

Now published in Attention, Perception & Psychophysics @psychonomicsociety.bsky.social

Open Access link: doi.org/10.3758/s134...

12.06.2025 07:21 — 👍 14 🔁 8 💬 0 📌 0

And Monday morning: @suryagayet.bsky.social has a poster (pavilion) on:

Feature Integration Theory revisited: attention is not needed to bind stimulus features, but prevents them from falling apart.

Happy @vssmtg.bsky.social #VSS2025 everyone, enjoy the meeting and the very nice coffee mugs!

18.05.2025 09:56 — 👍 4 🔁 0 💬 0 📌 0

@vssmtg.bsky.social presentations today!

R2, 15:00

@chrispaffen.bsky.social:

Functional processing asymmetries between nasal and temporal hemifields during interocular conflict

R1, 17:15

@dkoevoet.bsky.social:

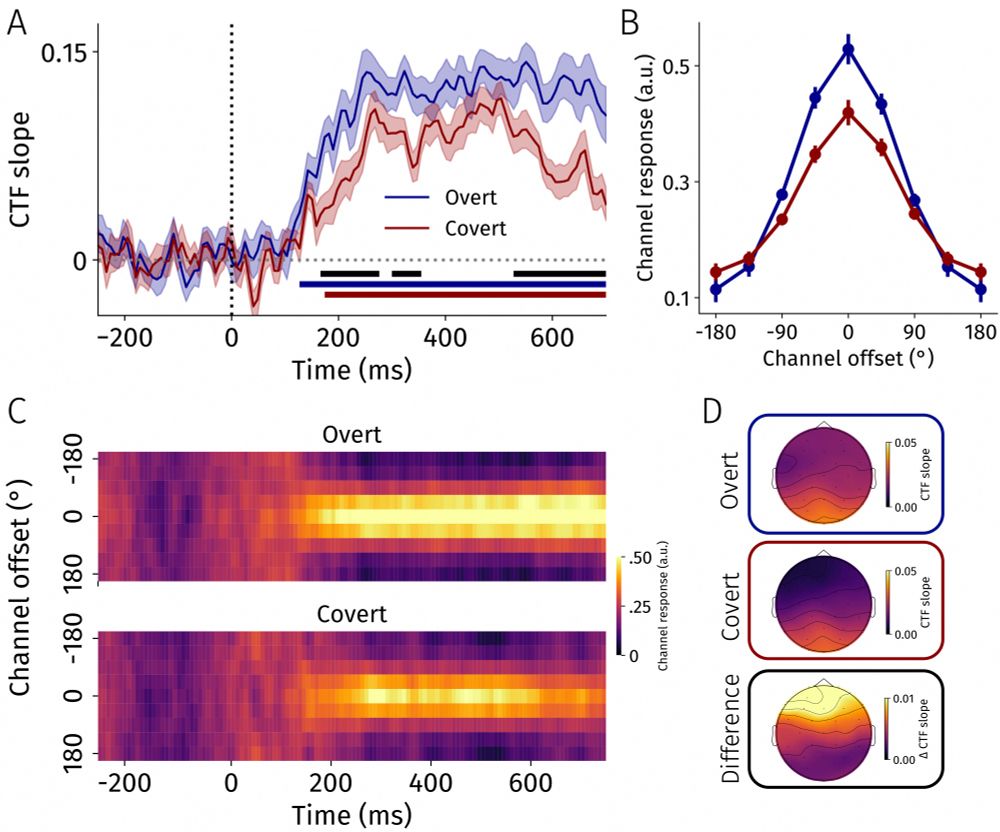

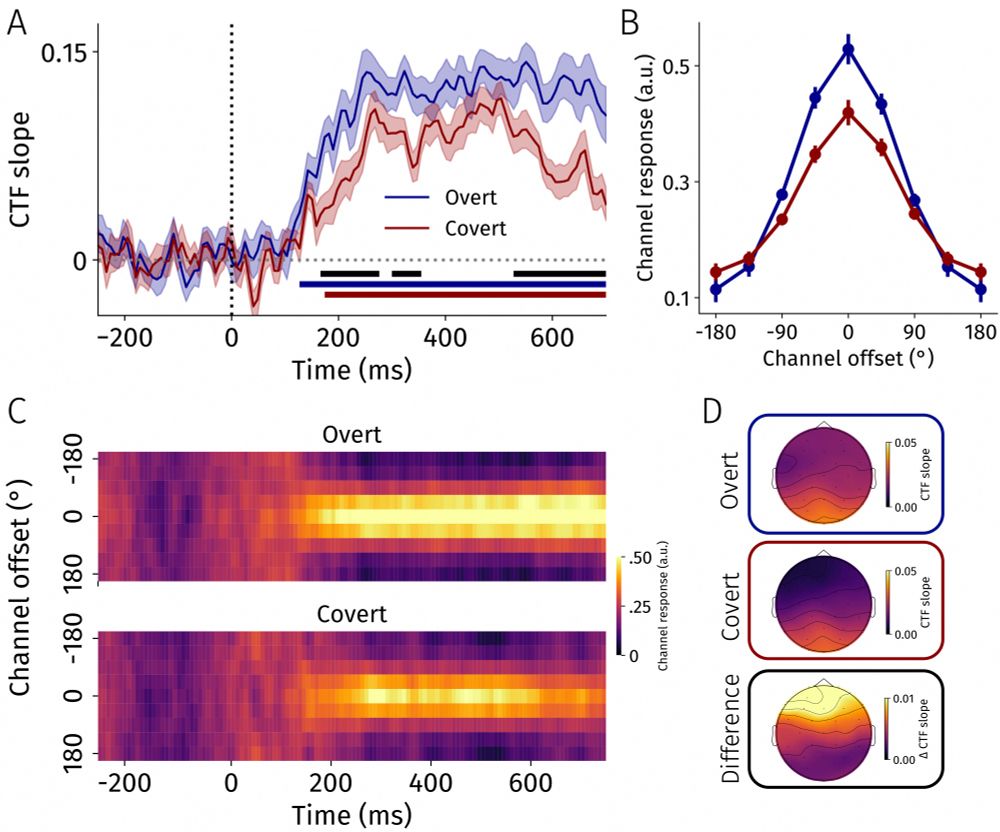

Sharper Spatially-Tuned Neural Activity in Preparatory Overt than in Covert Attention

18.05.2025 09:41 — 👍 6 🔁 4 💬 1 📌 0

and tomorrow, Monday:

Surya Gayet in the Pavilion in the morning session:

Feature Integration Theory revisited: attention is not needed to bind stimulus features, but prevents them from falling apart.

Enjoy VSS everyone!

18.05.2025 09:40 — 👍 0 🔁 0 💬 0 📌 0

We previously showed that affordable eye movements are preferred over costly ones. What happens when salience comes into play?

In our new paper, we show that even when salience attracts gaze, costs remain a driver of saccade selection.

OA paper here:

doi.org/10.3758/s134...

16.05.2025 13:36 — 👍 9 🔁 4 💬 1 📌 0

Preparing overt eye movements and directing covert attention are neurally coupled. Yet, this coupling breaks down at the single-cell level. What about populations of neurons?

We show: EEG decoding dissociates preparatory overt from covert attention at the population level:

doi.org/10.1101/2025...

13.05.2025 07:51 — 👍 16 🔁 8 💬 2 📌 2

In our latest paper @elife.bsky.social we show that we choose to move our eyes based on effort minimization. Put simply, we prefer affordable over more costly eye movements.

eLife's digest:

elifesciences.org/digests/9776...

The paper:

elifesciences.org/articles/97760

#VisionScience

08.04.2025 08:06 — 👍 13 🔁 4 💬 1 📌 2

Heat map of gaze locations overlaid on top of a feature-rich collage image. There is a seascape with a kitesurfer, mermaid, turtle, and more.

New preprint!

We present two very large eye tracking datasets of museum visitors (4-81 y.o.!) who freeviewed (n=1248) or searched for a +/x (n=2827) in a single feature-rich image.

We invite you to (re)use the dataset and provide suggestions for future versions 📋

osf.io/preprints/os...

28.03.2025 09:34 — 👍 23 🔁 6 💬 2 📌 1

Psychophysiology | SPR Journal | Wiley Online Library

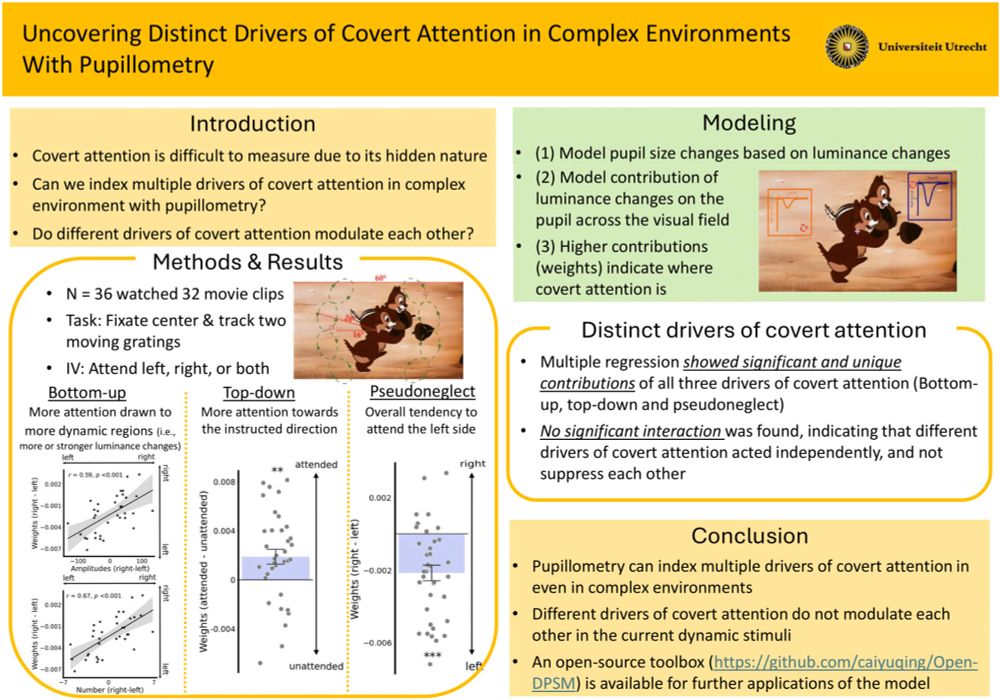

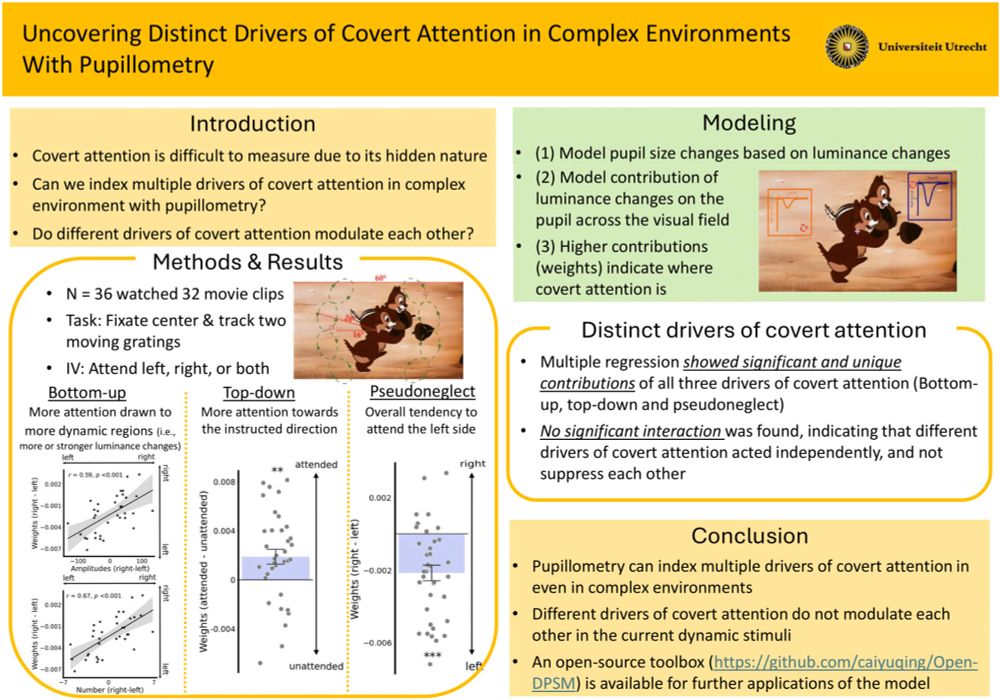

Previous studies have shown that the pupillary light response (PLR) can physiologically index covert attention, but only with highly simplistic stimuli. With a newly introduced technique that models ....

Out in Psychophysiology (OA):

Typically, pupillometry struggles with complex stimuli. We introduced a method to study covert attention allocation in complex video stimuli: effects of top-down attention, bottom-up attention, and pseudoneglect could all be recovered.

doi.org/10.1111/psyp.70036

21.03.2025 14:53 — 👍 23 🔁 6 💬 2 📌 0

Congrats to Luzi @luzixu.bsky.social! We're very proud of you! 🎊

19.03.2025 13:01 — 👍 4 🔁 1 💬 0 📌 0

Psychophysiology | SPR Journal | Wiley Online Library

Dominant theories posit that attentional shifts prior to saccades enable a stable visual experience despite abrupt changes in visual input caused by saccades. However, recent work may challenge this ...

Presaccadic attention facilitates visual continuity across eye movements. However, recent work may suggest that presaccadic attention doesn't shift upward. What's going on?

Using the pupil light response, our paper shows that presaccadic attention shifts both upward and downward.

doi.org/10.1111/psyp.70047

19.03.2025 08:28 — 👍 8 🔁 2 💬 1 📌 0

Psyche | on the human condition

Psyche is a digital magazine from Aeon Media that illuminates the human condition through psychology, philosophy and the arts.

New popscience piece on why pupil size changes are so cool. psyche.co/ideas/the-pu...

Included: an assignment that lets you measure pupil size. In my classes, this replicates Hess & Polt's 1964 effort finding without an eyetracker. Feel free to use it!

#VisionScience #neuroscience #psychology 🧪

06.01.2025 12:21 — 👍 14 🔁 6 💬 1 📌 0

In conclusion, observers can flexibly de-prioritize and re-prioritize VWM contents based on current task demands, allowing observers to exert control over the extent to which VWM contents influence concurrent visual processing.

20.12.2024 00:26 — 👍 3 🔁 1 💬 1 📌 0

As always, excellent work by Damian et al.!

11.12.2024 14:31 — 👍 5 🔁 0 💬 0 📌 0

Happy to have published my first paper during my PhD 🥳 Huge thanks to @cstrauch.bsky.social, Leendert Van Maanen, Stefan Van der Stigchel!

05.12.2024 08:46 — 👍 12 🔁 2 💬 0 📌 0

OSF

New paper accepted in JEP-General (preprint: osf.io/preprints/socarxiv/uvzdh) with @suryagayet.bsky.social & Nathan van der Stoep. We show that observers use both hearing & vision for localizing static objects, but rely on a single modality to report & predict the location of moving objects. 1/9

05.12.2024 12:03 — 👍 18 🔁 2 💬 1 📌 2

Want to keep track of AttentionLab's members? We have our own starter pack!

Follow all or make a selection, up to you! Will update this list whenever more lab members join Bluesky 🙂

go.bsky.app/4yHiToK

19.11.2024 15:40 — 👍 6 🔁 1 💬 0 📌 1

Trying to understand how the brain makes sense of the world with (and despite) eye movements. Active visual cognition, Combined eye-tracking/EEG, EEG methods. Toolboxes: EYE-EEG, opticat, UNFOLD. Previously @Berlin. Tenured Asst. professor @Groningen

Lecturer in Psychology at the University of Stirling 🏴 www.gemmalearmonth.com

Cognitive Neuroscience & Neuroergonomics

Back soon - just need to write up my PhD..

Established 2020, the Centre for Transformative Neuroscience is an interdisciplinary hub advancing fundamental & translational neuroscience. Our goal: bring transformative benefits to patients & society by understanding & treating brain disorders.

MSc student & research intern at attentionlab UU ☀️ Interested in (disorders of) attention & visual perception & consciousness

PI of Action & Perception Lab at UCL. Professor. Cognitive neuroscience, action, perception, learning, prediction. Cellist, lazy runner, mum.

https://www.ucl.ac.uk/pals/action-and-perception-lab/

https://www.fil.ion.ucl.ac.uk/team/action-and-perception/

Professor in Cognitive Neuroscience, Donders Institute, Radboud University.

www.peelenlab.nl

https://www.ru.nl/personen/peelen-m

PhD student at Tel-Aviv University in Dominique Lamy's lab, studying visual attention | Data Scientist

https://twitter.com/ToledanoDaniel7

https://www.researchgate.net/profile/Daniel-Toledano-2

https://www.linkedin.com/in/daniel-toledano-aa14181b8/

Brain & Cognition, KU Leuven

PhD Student in Cognitive Neuroscience @doellerlab.bsky.social

PhD Candidate in Artificial Intelligence and Cognitive Neuroscience | Investigating how AI can help understand the brain | Utrecht University | COBRA & AttentionLab

Prof for Neuro-Cognitive Psychology @ LMU, PI of the www.SceneGrammarLab.com

Institute for Brain and Behaviour Amsterdam. Promoting interdisciplinary research between psychological and movement sciences.