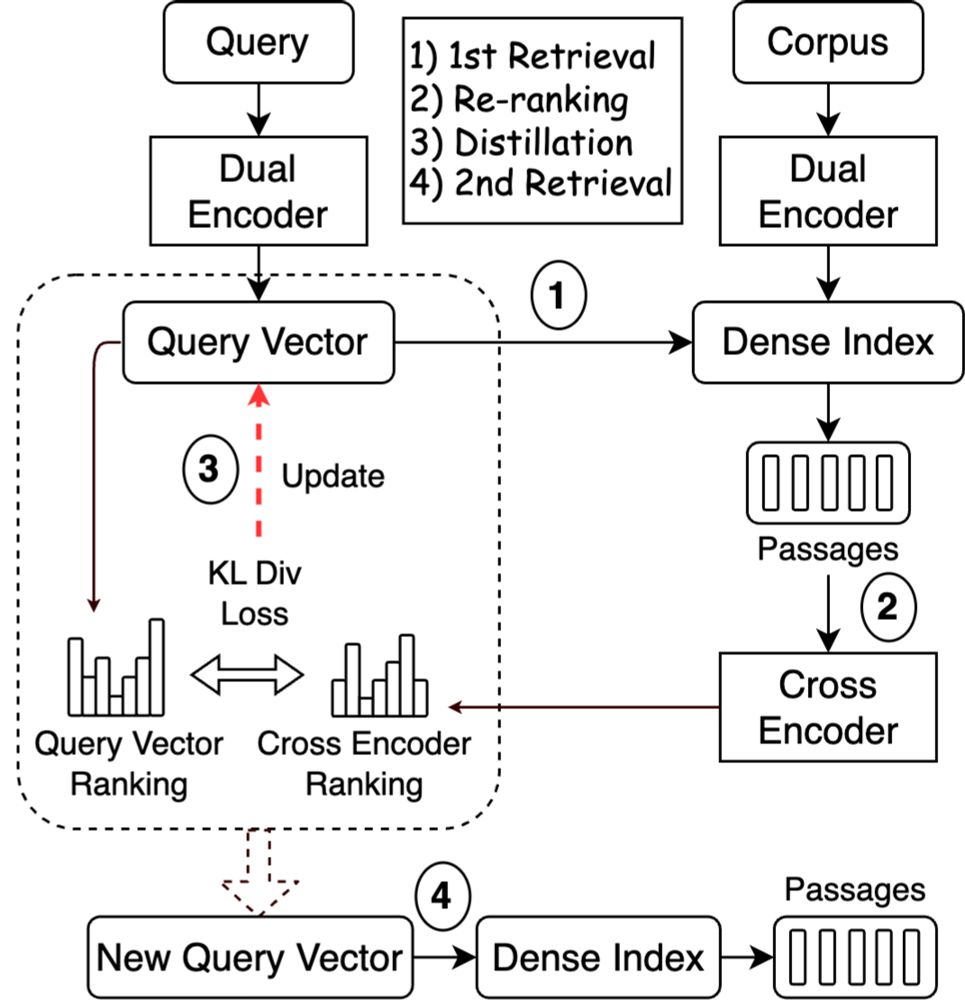

Results? If I read the tables correctly, there's only a very modest boost in both recall & NDCG, within 2%. Given that the procedure requires a second retrieval, it does not seem to be worth the effort.

🟦

dl.acm.org/doi/abs/10.1...

@srchvrs.bsky.social

Machine learning scientist and engineer (ph-D CMU) working on (un)natural language processing, speaking πtorch & C++. Opinions sampled from MY OWN 100T param LM.

PRF was not forgotten in the neural IR era, but how does it really perform? Revanth Gangi Reddy & colleagues ran a rather thorough experiment and published it at SIGIR.

↩️

It was doc2query before doc2query and, in fact, it improved the performance (by a few percent) of the IBM Watson QA system that beat human champions in Jeopardy!

↩️

research.ibm.com/publications...

I think this is a problem with the completely unsupervised, blind approach of adding terms to the query. If we had some supervision signal to filter out potentially bad terms, this would work out better. In fact, a supervised approach was previously used to add terms to documents!

↩️

This issue spawned a sub-topic in the IR community devoted to fixing it and to identifying, in advance, the cases where performance degrades substantially. Dozens of approaches were proposed, but I do not think the effort was successful. Why⁉️

↩️

PRF tends to improve things on average, but has a rather nasty property of tanking outcomes for some queries rather dramatically: When things go wrong (i.e., unlucky unrelated terms are added to the query), they can go very wrong. ↩️

18.07.2025 18:01 — 👍 0 🔁 0 💬 1 📌 0

PRF is an old technique introduced 40 years ago in the SMART system (arguably the first open-source IR system). ↩️

x.com/srchvrs/stat...

🧵Pseudo-relevance feedback (PRF), also known as blind feedback, is a technique of first retrieving/re-ranking the top-k documents and adding some of their words to the initial query. Then, a second retrieval/ranking stage uses the updated query. ↩️
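
A minimal sketch of the two-stage loop described above, under loud assumptions: the corpus is a tiny hand-made toy, the scorer is a plain term count (a real system would likely use BM25 or a neural ranker), and the names `score`, `retrieve`, and `prf_expand` are hypothetical, not taken from any library or from the paper discussed in this thread.

```python
from collections import Counter

# Hypothetical toy corpus (already tokenized); not from any real benchmark.
DOCS = [
    "jaguar cat habitat jungle cat".split(),
    "car engine repair".split(),
    "jaguar cat prey jungle".split(),
    "jungle cat documentary".split(),
]

def score(query_terms, doc_terms):
    # Toy relevance score: total occurrences of the query terms in the document.
    counts = Counter(doc_terms)
    return sum(counts[t] for t in set(query_terms))

def retrieve(query_terms, docs, k):
    # Rank document indices by score (ties keep corpus order) and keep the top k.
    ranked = sorted(range(len(docs)),
                    key=lambda i: score(query_terms, docs[i]),
                    reverse=True)
    return ranked[:k]

def prf_expand(query_terms, docs, k=2, n_terms=2):
    # Stage 1: blindly treat the top-k documents as relevant.
    feedback_ids = retrieve(query_terms, docs, k)
    # Collect their non-query terms and keep the most frequent ones.
    pool = Counter()
    for i in feedback_ids:
        pool.update(t for t in docs[i] if t not in query_terms)
    return list(query_terms) + [t for t, _ in pool.most_common(n_terms)]

query = ["jaguar"]
first_pass = retrieve(query, DOCS, k=3)      # retrieval with the original query
expanded = prf_expand(query, DOCS)           # query plus feedback terms
second_pass = retrieve(expanded, DOCS, k=3)  # stage 2: retrieval with the updated query
print(first_pass, expanded, second_pass)
```

On this toy data the expanded query pulls in the jungle-cat document that never mentions "jaguar". Nothing in the loop checks whether the feedback documents are actually relevant, so the same mechanism would just as happily add car-related terms if those documents had ranked first.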

18.07.2025 18:01 — 👍 1 🔁 1 💬 1 📌 0

If you submitted a messy paper, it's pointless to address every little comment and promise to fix it in the final version. 🟦

02.07.2025 03:04 — 👍 0 🔁 0 💬 0 📌 0

Instead, think hard about the questions you can ask. What is the main misunderstanding? What will you have to do so that a reviewer accepts your work next time? Which concise questions can you ask to avoid misunderstanding in the future? ↩️

02.07.2025 03:04 — 👍 0 🔁 0 💬 1 📌 0

🧵 Dear (scientific) authors: I am in the same boat too. However, if you receive a ton of detailed complaints regarding paper quality, do NOT try to address them during the rebuttal phase. It's just a waste of everybody's time. ↩️

02.07.2025 03:04 — 👍 0 🔁 0 💬 1 📌 0

@microsoft.com faces an interesting issue that might affect others selling wrappers around ChatGPT and Claude models: users prefer to use ChatGPT directly rather than engage with Microsoft's Copilot.

futurism.com/microsoft-co...

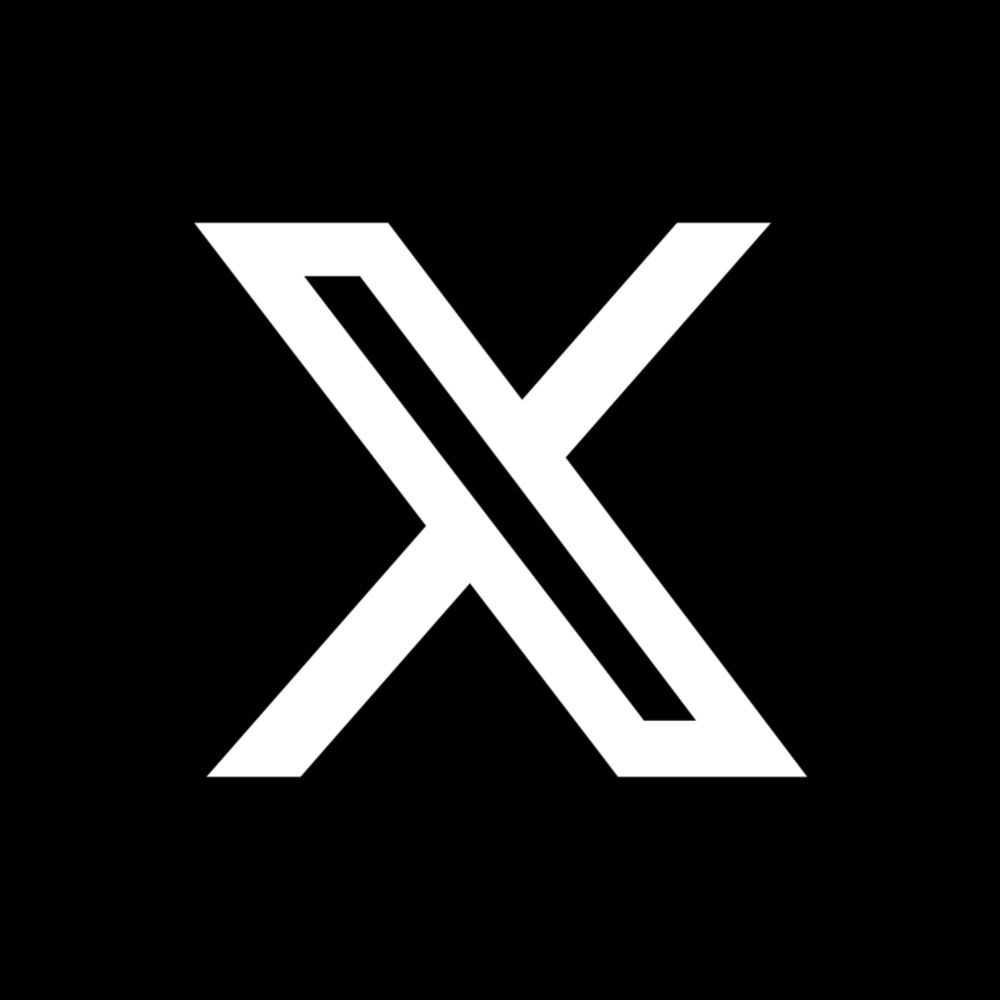

This is a rather blockbuster piece of news: the @hf.co library is dropping support for both Jax and Tensorflow.

www.linkedin.com/posts/lysand...

Humans are creating AGI and you claim that their intelligence is overrated?

22.05.2025 04:26 — 👍 2 🔁 0 💬 0 📌 0

Laptop keyboards are close to being unusable. Tremendous productivity hit.

27.04.2025 03:16 — 👍 1 🔁 0 💬 0 📌 0

Found a hidden gem on IR evaluation methodology from Microsoft: "What Matters in a Measure? A Perspective from Large-Scale Search Evaluation."

dl.acm.org/doi/pdf/10.1...

Parental advice: if you master algebra you will know how to deal with your x-es.

@ccanonne.bsky.social feel free to borrow!

Some people say: A prompt is worth a thousand words! Excuse me, but have you seen these? They are way longer!

16.04.2025 01:42 — 👍 1 🔁 0 💬 0 📌 0

However, unlike many others who see a threat in the form of a "terminator-like" super-intelligence, @lawrennd.bsky.social worries about the unpredictability of automated decision making by an entity that's superior in some ways, inferior in others, but, importantly, is disconnected from the needs of humans. ⏹️

07.04.2025 02:24 — 👍 1 🔁 0 💬 0 📌 0

🧵A fascinating perspective on the nature of intelligence and the history of automation (and, ahem, the development of AI). It is also a cautionary story about not trusting AI too much. ↩️

07.04.2025 02:24 — 👍 4 🔁 1 💬 1 📌 0

Thus, it was quite insightful to read a recent blog post by @netflix detailing their experience in training foundation RecSys LLMs. It’s an informative read, packed with detailed, behind-the-scenes information.

🟦

Pre-training can be non-trivial. If you represent a set of users or items using fixed IDs, your model will not generalize well to a domain with a different set of users or items (although there are some workarounds: arxiv.org/abs/2405.03562).
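
A toy illustration of why fixed IDs do not transfer, assuming a hypothetical embedding table learned on one domain and a hypothetical two-genre feature vocabulary. The feature-based variant is just one common alternative for illustration, not necessarily the workaround from the linked paper.

```python
# Hypothetical embeddings "learned" on domain A, keyed by fixed item IDs.
ID_EMBEDDINGS = {"item_1": [0.2, 0.9], "item_2": [0.7, 0.1]}

def embed_by_id(item_id):
    # An ID never seen in training has no learned vector;
    # here we fall back to an uninformative zero vector.
    return ID_EMBEDDINGS.get(item_id, [0.0, 0.0])

def embed_by_features(genres, vocab=("comedy", "drama")):
    # Multi-hot metadata features are defined for any item that has metadata,
    # including items from a domain the model has never seen.
    return [1.0 if g in genres else 0.0 for g in vocab]

print(embed_by_id("item_1"))         # known item: learned vector
print(embed_by_id("item_99"))        # item from a new domain: zero vector
print(embed_by_features({"drama"}))  # new item, but still a usable representation
```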

↩️

🧵Although pre-trained Transformer models took NLP by storm, they were less successful for recommender systems (arxiv.org/abs/2306.11114). RecSys is hard:

1. The number of users is high.

2. The number of items is high.

3. The cold-start problem is hard.

↩️

" ... that preference leakage is a pervasive issue that is harder to detect compared to previously identified biases in LLM-as-a-judge scenarios. All of these findings imply that preference leakage is a widespread and challenging problem in the area of LLM-as-a-judge. "

arxiv.org/abs/2502.01534

🧵"Through extensive experiments, we empirically confirm the bias of judges towards their related student models caused by preference leakage across multiple LLM baselines and benchmarks. Further analysis suggests ..." ↩️

04.02.2025 14:52 — 👍 2 🔁 0 💬 1 📌 0

So you mean specifically GRPO or more like a combination of GRPO and binary rewards?

31.01.2025 02:56 — 👍 2 🔁 0 💬 0 📌 0

We also talked about k-NN search, approaches to learning sparse & dense representations for text retrieval, as well as the integration of vector search into traditional DB systems. 🟦

www.youtube.com/watch?v=gzWE...

🧵It was my great pleasure to appear on the vector podcast with @dmitrykan.bsky.social. We covered the history of our NMSLIB library and how it helped shape the vector search industry! ↩️

17.01.2025 16:48 — 👍 7 🔁 1 💬 1 📌 0

They gathered some of the best people. They surely have a fighting chance to reduce the cost of serving and reach profitability within a couple of years.

06.01.2025 08:51 — 👍 5 🔁 0 💬 0 📌 0

Ok, please, show us a minimal viable example of doing so. Do not forget that not everyone knows jax and not everyone is willing to learn it. It is not as widely used as numpy, pytorch, or even tensorflow.

06.01.2025 06:36 — 👍 2 🔁 0 💬 0 📌 0