Wrote up some results around reproducing length control from the L1 paper in RL.

ivison.id.au/2026/02/02/r...

We’re live on Reddit! Ask us Anything about our OLMo family of models. We have six of our researchers on hand to answer all your questions.

08.05.2025 15:12 — 👍 9 🔁 2 💬 1 📌 1

I’ll be around for this! Come ask us questions about olmo and tulu :)

07.05.2025 16:00 — 👍 4 🔁 1 💬 0 📌 0

Excited to be back home in Australia (Syd/Melb) for most of April! Email or DM if you want to grab a coffee :)

27.03.2025 16:10 — 👍 3 🔁 0 💬 0 📌 0

@vwxyzjn.bsky.social and @hamishivi.bsky.social have uploaded intermediate checkpoints for our recent RL models at Ai2. Folks should do research into how RL finetuning is impacting the weights!

Models with it: OLMo 2 7B, 13B, 32B Instruct; Tulu 3, 3.1 8B; Tulu 3 405b

8/8 Please check out the paper for many, many more details, including ablations on RDS+, Tulu 3 results, and analysis on what gets selected! Thanks for reading!

Many thanks to my collaborators on this, the dream team of @muruzhang.bsky.social, Faeze Brahman, Pang Wei Koh, and @pdasigi.bsky.social!

7/8 To me, this highlights the importance of evaluating these methods at large (> 1M) data pools! We release all the data and code used for these experiments to aid in future work:

💻 github.com/hamishivi/au...

📚 huggingface.co/collections/...

📄 arxiv.org/abs/2503.01807

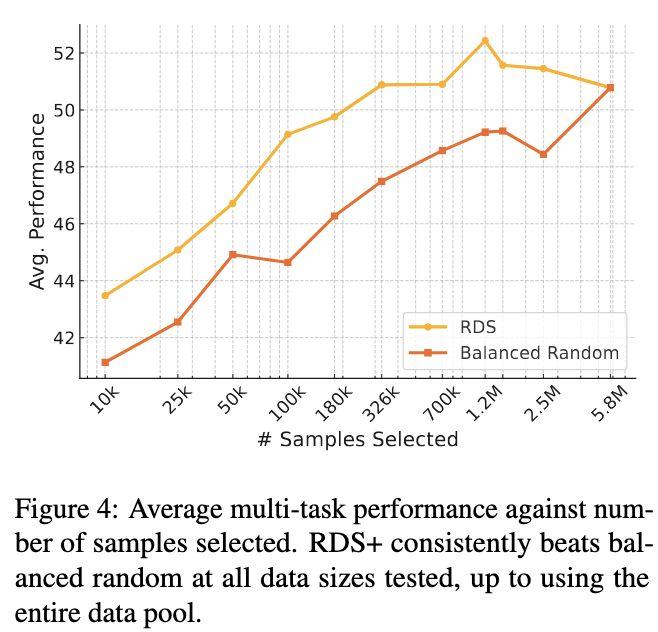

6/8 We further investigate RDS+, selecting up to millions of samples and comparing it to random selection while taking total compute into account. RDS+ beats random selection at all data sizes and, once compute is taken into account, performs significantly better at larger sizes.

04.03.2025 17:10 — 👍 0 🔁 0 💬 1 📌 0

5/8 We also investigate how well these methods work when selecting one dataset for multiple downstream tasks. The best performing method, RDS+, outperforms the Tulu 2 mixture. We also see strong results selecting using Arena Hard samples as query points with RDS+.

04.03.2025 17:10 — 👍 0 🔁 0 💬 1 📌 0

4/8 Notably, the best-performing method overall is RDS+ – just using cosine similarity with embeddings produced by pretrained models.

While this is a common baseline, with a little tuning we get stronger performance from it than from more costly alternatives such as LESS.
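To make "cosine similarity with embeddings" concrete, here is a minimal sketch of embedding-based selection. It is not the paper's implementation: the function names, the plain-list embeddings, and the choice to score each pool sample by its best match over the query points are all assumptions for illustration, and the embedding step itself (running a pretrained model) is left out.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat zero vectors as dissimilar to everything
    return dot / (norm_a * norm_b)


def select_top_k(pool_embeddings, query_embeddings, k):
    """Return indices of the k pool samples most similar to any query point.

    Assumption: each pool sample is scored by its maximum cosine
    similarity over the query points (hypothetical aggregation rule).
    """
    scores = []
    for idx, emb in enumerate(pool_embeddings):
        best = max(cosine_similarity(emb, q) for q in query_embeddings)
        scores.append((best, idx))
    # Highest score first; ties broken by original index for determinism.
    scores.sort(key=lambda pair: (-pair[0], pair[1]))
    return [idx for _, idx in scores[:k]]


if __name__ == "__main__":
    pool = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]
    queries = [[1.0, 0.0]]
    print(select_top_k(pool, queries, k=2))
```

In practice the pool would hold millions of embeddings and the scoring would be a batched matrix product rather than a Python loop.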

3/8 We test a variety of different data selection methods on these pools.

We select 10k samples from a downsampled pool of 200k samples, and then test selecting 10k samples from all 5.8M samples. Surprisingly, many methods drop in performance when the pool size increases!

2/8 We begin by constructing data pools for selection, using Tulu 2/3 as a starting point. These pools contain over 4 million samples – all data initially considered for the Tulu models. We then perform selection and evaluation across seven different downstream tasks.

04.03.2025 17:10 — 👍 0 🔁 0 💬 1 📌 0

How well do data-selection methods work for instruction-tuning at scale?

Turns out, when you look at large, varied data pools, lots of recent methods lag behind simple baselines, and a simple embedding-based method (RDS+) does best!

More below ⬇️ (1/8)

(8/8) This project was co-led with Jake Tae, with great advice from @armancohan.bsky.social and @shocheen.bsky.social. We are also quite indebted to, and build off, the prior TESS work (aclanthology.org/2024.eacl-lo...). Thanks for reading!

20.02.2025 18:08 — 👍 1 🔁 0 💬 0 📌 0

(7/8) Please check out the paper for more! We release our code and model weights. I think there's a lot of interesting work to be done here!

📜 Paper: arxiv.org/abs/2502.13917

🧑💻 Code: github.com/hamishivi/te...

🤖 Demo: huggingface.co/spaces/hamis...

🧠 Models: huggingface.co/collections/...

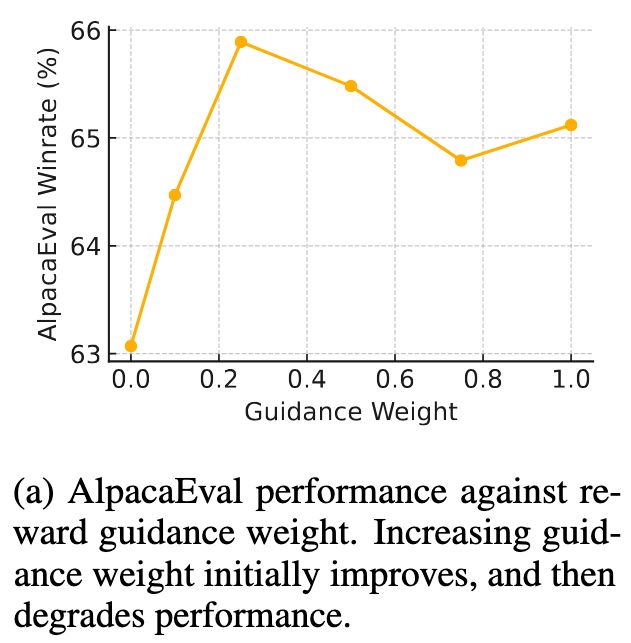

(6/8) Second, using classifier guidance with an off-the-shelf reward model (which we call reward guidance). Increasing the weight of the RM guidance improves AlpacaEval winrate. If you set the guidance really high, you get high-reward but nonsensical generations (reward-hacking!).

20.02.2025 18:08 — 👍 0 🔁 0 💬 1 📌 0
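The reward-guidance idea can be illustrated with a toy sketch. Everything here is an assumption made for illustration: a real reward model is a neural network scoring whole sequences with gradients from autodiff, whereas this toy uses a hypothetical fixed per-token reward vector and a single distribution over three tokens, so the gradient can be written by hand.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def expected_reward(logits, token_rewards):
    """Toy reward: expected per-token reward under softmax(logits)."""
    probs = softmax(logits)
    return sum(p * r for p, r in zip(probs, token_rewards))


def guided_logits(logits, token_rewards, guidance_weight):
    """Take one gradient-ascent step on the toy reward w.r.t. the logits.

    For reward = sum_i p_i * r_i with p = softmax(logits), the gradient
    with respect to logit j is p_j * (r_j - reward).
    """
    probs = softmax(logits)
    baseline = sum(p * r for p, r in zip(probs, token_rewards))
    grad = [p * (r - baseline) for p, r in zip(probs, token_rewards)]
    return [z + guidance_weight * g for z, g in zip(logits, grad)]


if __name__ == "__main__":
    logits = [0.0, 0.0, 0.0]
    rewards = [1.0, 0.0, -1.0]  # hypothetical per-token rewards
    for weight in (0.0, 3.0, 30.0):
        new_logits = guided_logits(logits, rewards, weight)
        print(weight, expected_reward(new_logits, rewards))
```

Larger guidance weights push more probability onto the highest-reward token, which is the toy analogue of the reward-hacking behaviour described above.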

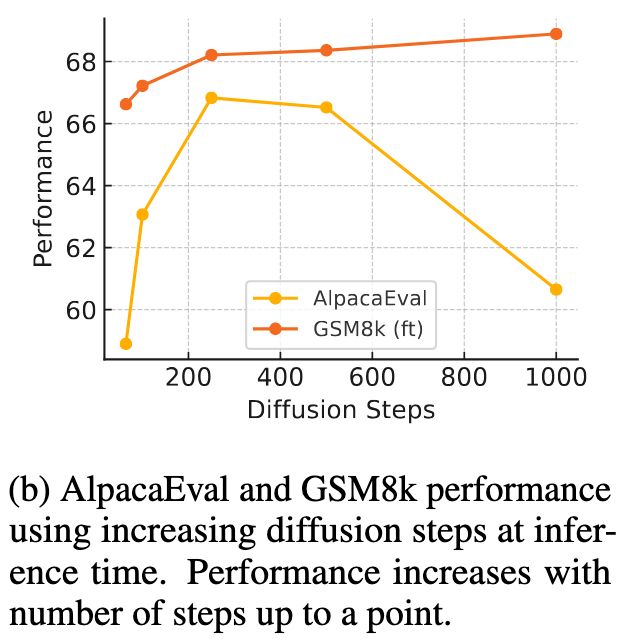

(5/8) First, as we increase diffusion steps, we see GSM8k scores improve consistently! We also see AlpacaEval improve, and then reduce, as the model generations also get more repetitive.

20.02.2025 18:08 — 👍 0 🔁 0 💬 1 📌 0

(4/8) We also further improve performance without additional training in two key ways:

(1) Using more diffusion steps

(2) Using reward guidance

Explained below 👇

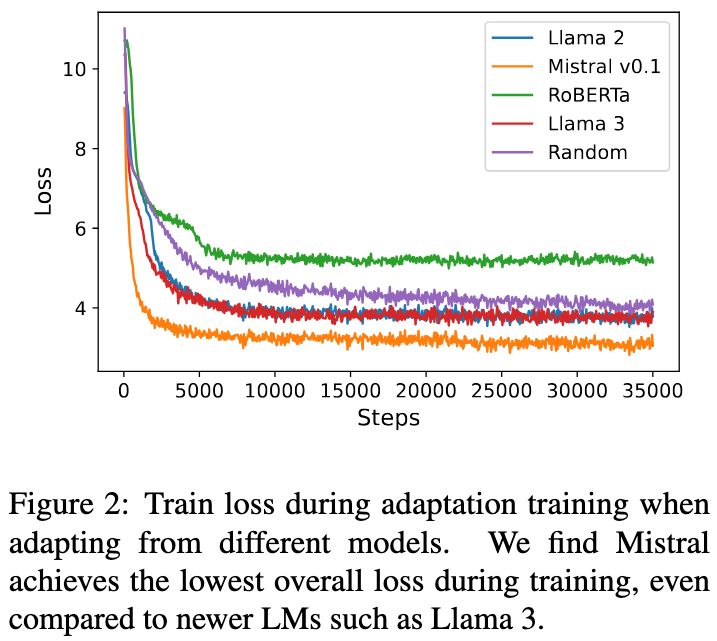

(3/8) We train TESS 2 by (1) performing 200k steps of diffusion adaptation training, (2) instruction tuning on Tulu. We found that adapting Mistral models (v0.1/0.3) performed much better than Llama!

20.02.2025 18:08 — 👍 0 🔁 0 💬 1 📌 0

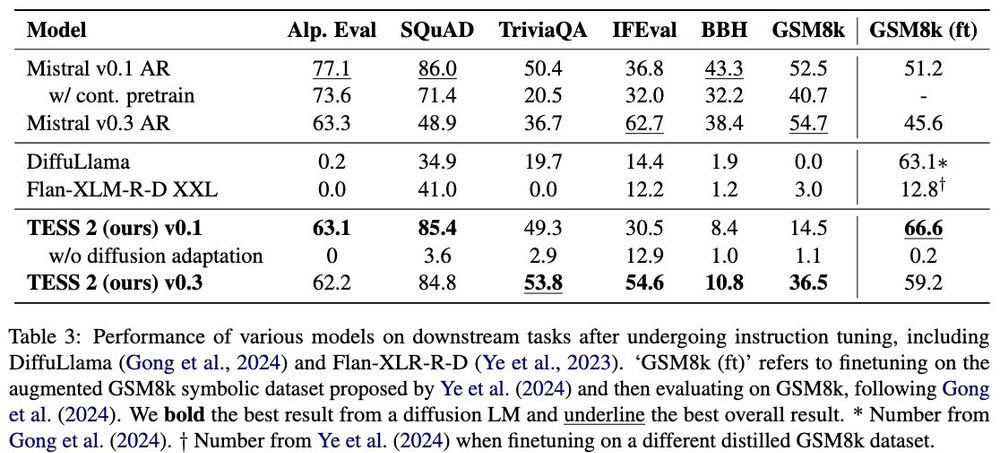

(2/8) We find that TESS 2 performs well in QA, but lags in reasoning-heavy tasks (GSM8k, BBH). However, when we train on GSM8k-specific data, we beat AR models!

It may be that instruction-tuning mixtures need to be adjusted for diffusion models (we just used Tulu 2/3 off the shelf).

(1/8) Excited to share some new work: TESS 2!

TESS 2 is an instruction-tuned diffusion LM that can perform close to AR counterparts for general QA tasks, trained by adapting from an existing pretrained AR model.

📜 Paper: arxiv.org/abs/2502.13917

🤖 Demo: huggingface.co/spaces/hamis...

More below ⬇️

GRPO makes everything better 😌

12.02.2025 17:42 — 👍 2 🔁 0 💬 0 📌 0

We took our most efficient model and made an open-source iOS app📱but why?

As phones get faster, more AI will happen on device. With OLMoE, researchers, developers, and users can get a feel for this future: fully private LLMs, available anytime.

Learn more from @soldaini.net👇 youtu.be/rEK_FZE5rqQ

This was a fun side effort with lots of help from everyone on the Tulu 3 team. Special shoutouts to @vwxyzjn.bsky.social (who did a lot on the training+infra side) and @ljvmiranda.bsky.social (who helped with DPO data generation). I leave you with the *unofficial* name for this release:

30.01.2025 15:38 — 👍 2 🔁 0 💬 0 📌 0

This significant improvement in MATH was especially surprising to me, considering I tried training on just MATH at 8B scale and it only yielded improvements after 100s of RL steps! It may be that using larger base models (or higher quality?) makes RLVR with more difficult data easier.

30.01.2025 15:38 — 👍 0 🔁 0 💬 1 📌 0

This is more or less the exact Tulu 3 recipe, with one exception: We just did RLVR on the MATH train set. This almost instantly gave > 5 point gains, as you can see in the RL curves below.

(multiply y-axis by 10 to get MATH test perf)
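For readers new to RLVR, a verifiable reward is a programmatic check of the model's final answer against a known gold answer. The sketch below is a rough illustration, not the open-instruct code: the function names are hypothetical, it assumes answers are written in `\boxed{...}` form, it uses exact string match, and the reward value is a free parameter.

```python
def extract_boxed_answer(text):
    """Return the contents of the last \\boxed{...} in text, or None.

    Assumption: the final answer is wrapped in \\boxed{...}; nested
    braces inside the box (e.g. \\frac{1}{2}) are handled by counting depth.
    """
    marker = "\\boxed{"
    start = text.rfind(marker)
    if start == -1:
        return None
    i = start + len(marker)
    depth = 1
    chars = []
    while i < len(text):
        ch = text[i]
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
            if depth == 0:
                return "".join(chars).strip()
        chars.append(ch)
        i += 1
    return None  # unbalanced braces: no usable answer


def verifiable_reward(completion, gold_answer, correct_reward=1.0):
    """Binary reward: correct_reward on an exact answer match, else 0.0."""
    predicted = extract_boxed_answer(completion)
    if predicted is not None and predicted == gold_answer.strip():
        return correct_reward
    return 0.0


if __name__ == "__main__":
    sample = "Adding the halves gives \\boxed{\\frac{1}{2}}."
    print(verifiable_reward(sample, "\\frac{1}{2}"))
```

A real checker would normally normalize equivalent forms (for example `0.5` and `\frac{1}{2}`) before comparing; exact match keeps the sketch short.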

li'l holiday project from the tulu team :)

Scaling up the Tulu recipe to 405B works pretty well! We mainly see this as confirmation that open-instruct scales to large-scale training -- more exciting and ambitious things to come!

Even with just an 8B model and the MATH/GSM8k train sets, you can see the start of trends like those seen in the R1 poster (avg output length goes up, perf not saturated).

We also see backtracking emerge in some cases, albeit I found it rare and hard to repro consistently...

Seems like a good time to share this: a poster from a class project diving a little more into Tulu 3's RLVR. Deepseek R1 release today shows that scaling this sort of approach up can be very very effective!

20.01.2025 19:04 — 👍 1 🔁 0 💬 1 📌 0