another great paper from @mh-christiansen.bsky.social, showing that non-constituents* can be primed

It's more evidence that traditional linguists were mistaken to believe memory was in short supply:

Human memory is compressed, clustered, implicit and vast

09.02.2026 13:12 — 👍 18 🔁 4 💬 1 📌 0

Careers | Human Resources

We are hiring a research specialist, to start this summer! This position would be a great fit for individuals looking to get more experience in computational and cognitive neuroscience research before applying to graduate school. #neurojobs Apply here: research-princeton.icims.com/jobs/21503/r...

04.02.2026 13:12 — 👍 36 🔁 30 💬 0 📌 3

Does memory fade slowly, or in drops and bursts? We analyzed 728k tests from 210k people. Key finding: “stability” isn’t a trait you either have or don’t have - it’s often a time-limited state at different points in aging. Preprint "Punctuated Memory Change": 👇 www.biorxiv.org/content/10.6...

23.01.2026 07:17 — 👍 15 🔁 12 💬 2 📌 0

Congrats Jonathan! Excited to see these amazing results get published officially!

23.01.2026 13:34 — 👍 0 🔁 0 💬 1 📌 0

Episodic memory facilitates flexible decision-making via access to detailed events - Nature Human Behaviour

Nicholas and Mattar found that people use episodic memory to make decisions when it is unclear what will be needed in the future. These findings reveal how the rich representational capacity of episod...

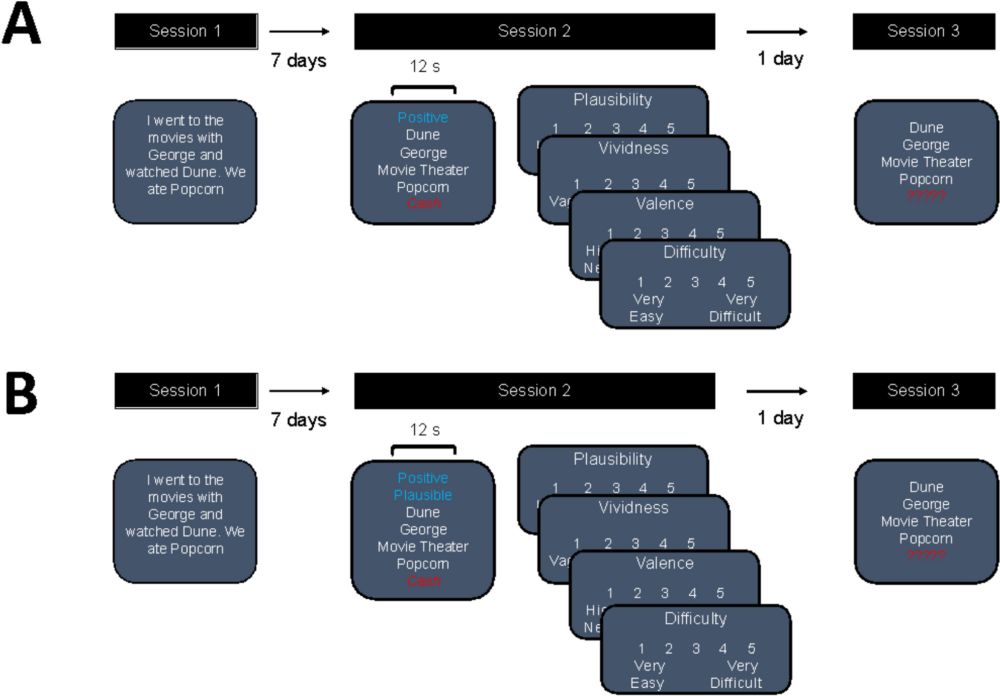

Our experiences have countless details, and it can be hard to know which matter.

How can we behave effectively in the future when, right now, we don't know what we'll need?

Out today in @nathumbehav.nature.com, @marcelomattar.bsky.social and I find that people solve this by using episodic memory.

23.01.2026 13:18 — 👍 119 🔁 47 💬 7 📌 2

Fantastic thread and a must-read for anyone working on spatial cognition.

10.01.2026 23:37 — 👍 7 🔁 2 💬 1 📌 0

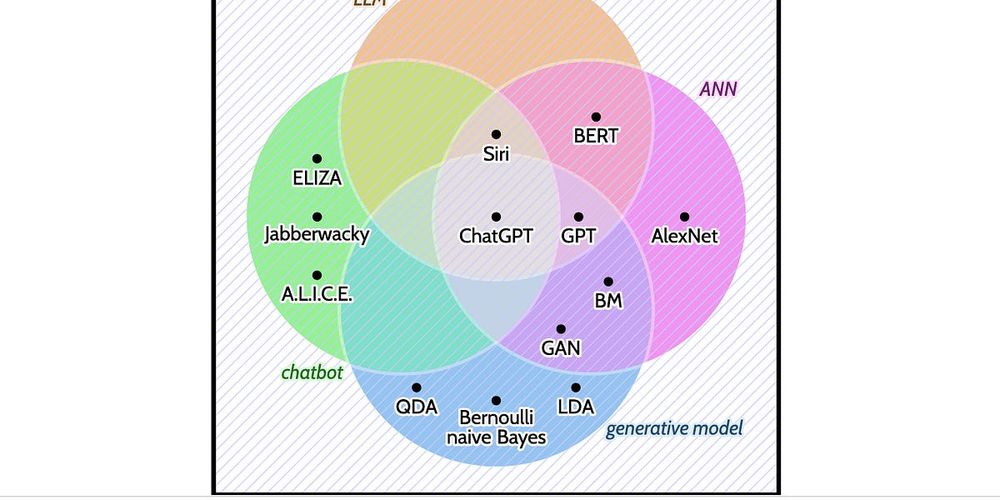

Excited to announce a new book telling the story of mathematical approaches to studying the mind, from the origins of cognitive science to modern AI! The Laws of Thought will be published in February and is available for pre-order now.

18.12.2025 15:59 — 👍 162 🔁 38 💬 2 📌 5

What a privilege and a delight to work with @coltoncasto.bsky.social @ev_fedorenko and @neuranna on this new speculative piece on What it means to understand language, nicely summarized in this Tweeprint from @coltoncasto.bsky.social arxiv.org/abs/2511.19757

26.11.2025 16:34 — 👍 35 🔁 6 💬 2 📌 0

I am really proud that eLife have published this paper. It is a very nice paper, but you need to also read the reviews to understand why! 1/n

25.11.2025 20:34 — 👍 78 🔁 12 💬 2 📌 4

I'm going to present our latest memory model that learns causal inference during narrative comprehension! Stop by the poster on Monday to chat about causality, memory, brain🧠, and AI🤖!

#sfn2025 #sfn25

15.11.2025 02:41 — 👍 24 🔁 5 💬 0 📌 1

An RNN with episodic memory, trained on free recall, learned the memory palace strategy -- the network developed an abstract item index code so that it can “walk along” the same trajectory in the hidden state space to encode/retrieve item sequences!

Feedback appreciated!

22.10.2025 13:29 — 👍 17 🔁 2 💬 0 📌 0

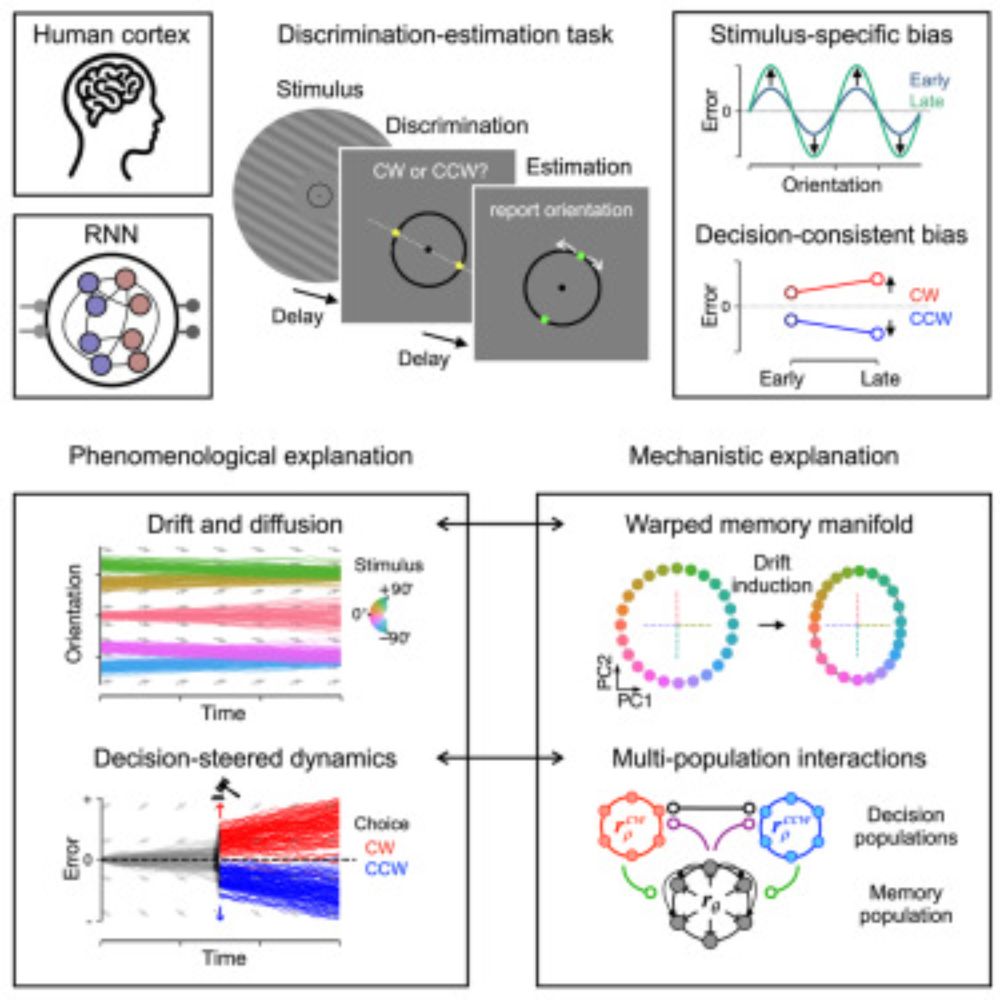

I’m super excited to finally put my recent work with @behrenstimb.bsky.social on bioRxiv, where we develop a new mechanistic theory of how PFC structures adaptive behaviour using attractor dynamics in space and time!

www.biorxiv.org/content/10.1...

24.09.2025 09:52 — 👍 219 🔁 86 💬 9 📌 9

We present our new preprint titled "Large Language Model Hacking: Quantifying the Hidden Risks of Using LLMs for Text Annotation".

We quantify LLM hacking risk through systematic replication of 37 diverse computational social science annotation tasks.

For these tasks, we use a combined set of 2,361 realistic hypotheses that researchers might test using these annotations.

Then, we collect 13 million LLM annotations across plausible LLM configurations.

These annotations feed into 1.4 million regressions testing the hypotheses.

For a hypothesis with no true effect (ground truth $p > 0.05$), different LLM configurations yield conflicting conclusions.

Checkmarks indicate correct statistical conclusions matching ground truth; crosses indicate LLM hacking -- incorrect conclusions due to annotation errors.

Across all experiments, LLM hacking occurs in 31-50% of cases even with highly capable models.

Since minor configuration changes can flip scientific conclusions, from correct to incorrect, LLM hacking can be exploited to present anything as statistically significant.

🚨 New paper alert 🚨 Using LLMs as data annotators, you can produce any scientific result you want. We call this **LLM Hacking**.

Paper: arxiv.org/pdf/2509.08825

12.09.2025 10:33 — 👍 303 🔁 106 💬 6 📌 23

Our new lab for Human & Machine Intelligence is officially open at Princeton University!

Consider applying for a PhD or Postdoc position, either through Computer Science or Psychology. You can register interest on our new website lake-lab.github.io (1/2)

08.09.2025 13:59 — 👍 54 🔁 14 💬 1 📌 0

A key-value memory network can learn to represent event memories by their causal relations to support event cognition!

Congrats to @hayoungsong.bsky.social on this exciting paper! So fun to be involved!

05.09.2025 13:07 — 👍 12 🔁 2 💬 0 📌 0

Our new study (titled "Memory Loves Company") asks whether working memory holds more when objects belong together.

And yes, when everyday objects are paired meaningfully (Bow-Arrow), people remember them better than when they’re unrelated (Glass-Arrow). (mini thread)

28.08.2025 12:07 — 👍 69 🔁 15 💬 5 📌 0

Now out in print at @jephpp.bsky.social! doi.org/10.1037/xhp0...

Yu, X., Thakurdesai, S. P., & Xie, W. (2025). Associating everything with everything else, all at once: Semantic associations facilitate visual working memory formation for real-world objects. JEP:HPP.

27.06.2025 01:24 — 👍 13 🔁 2 💬 0 📌 1

Successful prediction of the future enhances encoding of the present.

I am so delighted that this work found a wonderful home at Open Mind. The peer review journey was a rollercoaster but it *greatly* improved the paper.

direct.mit.edu/opmi/article...

09.08.2025 16:27 — 👍 75 🔁 22 💬 2 📌 2

Professor of psychology. Center for Lifespan Changes in Brain and Cognition. University of Oslo. Interested in the brain from the start to the end. www.lcbc.uio.no

Agents, memory, representations, robots, vision. Sr Research Scientist at Google DeepMind. Previously at Oxford Robotics Institute. Views my own.

Ph.D. candidate in affective and cognitive neuroscience at UC Santa Barbara. Interested in self-other representations, emotion, and memory. Passionate about bridging the gap between findings in laboratory and naturalistic settings.

Cognitive neuroscientist.

I use M/EEG to study how our brain predicts and attends to the world around us.

Based at CIMeC, University of Trento (IT).

Guest at Donders Institute, Radboud University (NL).

🧠 PhD student at the University of Toronto | The Memory & Perception Lab and The Duncan Lab

postdoc at brown studying learning and memory 🧠

https://futingzou.github.io/

Cognitive Neuroscientist🧠. I work on so many topics that it’s hard to name my interests. I wish to live in a small town where it snows, but somehow I can't. I love ice cream

Academic Associate and PhD student at York St John University interested in phonological learning and memory.

Assistant Professor @ CityUHK | Loot Boxes; Video Game Law 🎮🎰 | Empirical Legal & Policy Research | Pro Screenshotter 📱📸 | leonxiao.com

Computational neuroscientist || Postdoc with Tim Behrens || Sainsbury Wellcome Centre @ UCL

Joint postdoc at Columbia/NYU. Sponsored by New York Academy of Sciences through Leon Levy Foundation. PhD from Princeton University, Yale '19

Grad student studying cognition and the brain 🧠

@ the University of Chicago Psychology

Interested in how we pay attention and learn

Postdoc @ UCL studying causal judgment, counterfactual thinking, and metacognition

https://kevingoneill.github.io

human

mom of two

developmental cognitive scientist @Rutgers

co-Host @theitsinnatepc.bsky.social

more info https://sites.rutgers.edu/jinjing-jenny-wang/

Ph.D. student at Stanford. Interested in how the brain makes sense of the world.

PhD student @yale.edu

Interested in the neural basis of attention & memory 🧠

We're a neuroscience blog trying to make neuroscience accessible for everyone! Check it out here: https://neurofrontiers.blog

Interested in understanding the neural mechanisms underlying music cognition via neuroimaging and computational modeling

Neuroscience PhD from Princeton

Neuroscience Postdoc at MIT

https://scholar.google.co.uk/citations?user=RjmK2NgAAAAJ&hl=en