But, as I’ve heard from others, the AIs often suggest possible connections, related results, or avenues to pursue that hadn’t occurred to me. Unfortunately, these are usually dead ends.

29.12.2025 17:25 — 👍 1 🔁 0 💬 1 📌 0

I’ve found that the pro-version AIs are great for proving known theorems or new theorems that could be considered homework problems, but so far I have had no success using them to solve truly open/novel/challenging math problems.

29.12.2025 17:08 — 👍 2 🔁 0 💬 1 📌 0

Since I can’t get it out of my head, I wrote up my thoughts on @kevinbaker.bsky.social's critique of AI-automated science and the logical end of processes that can't self-correct.

15.12.2025 15:33 — 👍 44 🔁 9 💬 3 📌 2

Kevin Baker's essay is probably the best thing I have read in 2025.

15.12.2025 23:42 — 👍 1 🔁 0 💬 0 📌 0

Yes. Just write your thoughts in a rough and unpolished form, say rough paragraphs that contain terse points you want to make. Then let 'er rip.

31.10.2025 19:21 — 👍 1 🔁 0 💬 1 📌 0

Section 7 is a wonderful description of the process they went through.

25.10.2025 15:57 — 👍 1 🔁 0 💬 1 📌 0

something just isn't fully clicking. if you look at total yards and time of possession, they should have blown them out. well, better anyway to peak later in season, so let's hope that's what happens (like two seasons ago)

13.10.2025 02:09 — 👍 1 🔁 0 💬 0 📌 0

Packers get the win, but it wasn't pretty.

13.10.2025 00:45 — 👍 0 🔁 0 💬 1 📌 0

Thanks for participating and presenting your work!

08.09.2025 17:14 — 👍 2 🔁 0 💬 0 📌 0

Google promotes box shirts too

05.09.2025 18:19 — 👍 1 🔁 0 💬 0 📌 0

Pour into

27.08.2025 14:36 — 👍 2 🔁 0 💬 0 📌 0

Announcing the first workshop on Foundations of Language Model Reasoning (FoRLM) at NeurIPS 2025!

📝Soliciting abstracts that advance foundational understanding of reasoning in language models, from theoretical analyses to rigorous empirical studies.

📆 Deadline: Sept 3, 2025

11.08.2025 15:40 — 👍 11 🔁 3 💬 1 📌 1

“the only way to predict or to control the functioning of such systems is by an intricate system of charms, spells, and incantations”

05.07.2025 16:01 — 👍 2 🔁 0 💬 0 📌 0

See you there!

21.06.2025 15:23 — 👍 2 🔁 0 💬 0 📌 0

More likely midges. The truest sign of a healthy ecosystem

16.05.2025 22:55 — 👍 1 🔁 0 💬 1 📌 0

Looking forward to a great MMLS!

25.04.2025 12:21 — 👍 3 🔁 0 💬 0 📌 0

This is a collaboration with Ziyue Luo, @shroffness and @kevinlauka

07.02.2025 02:55 — 👍 0 🔁 0 💬 0 📌 0

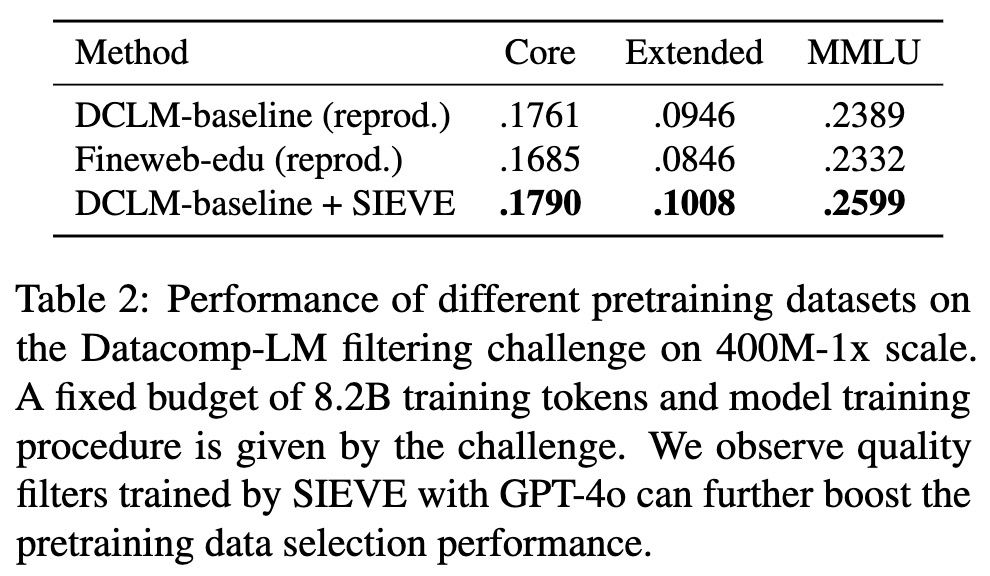

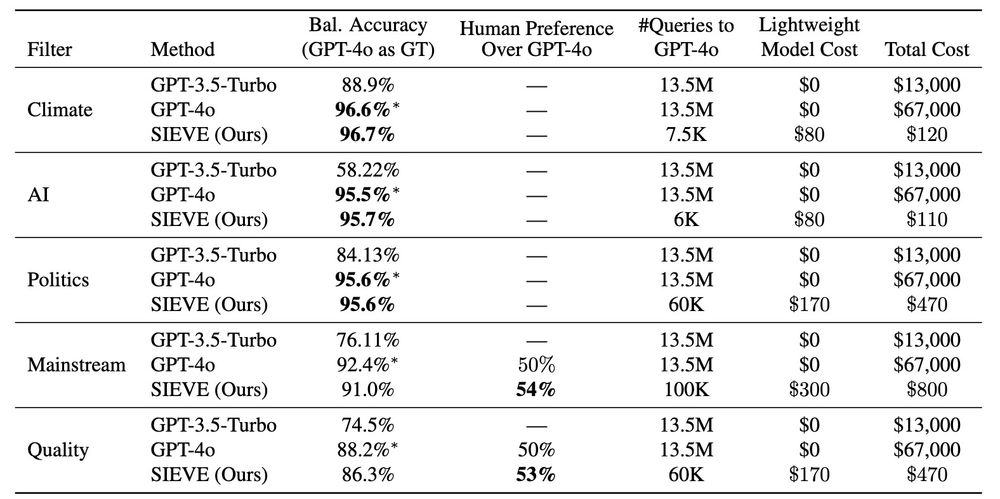

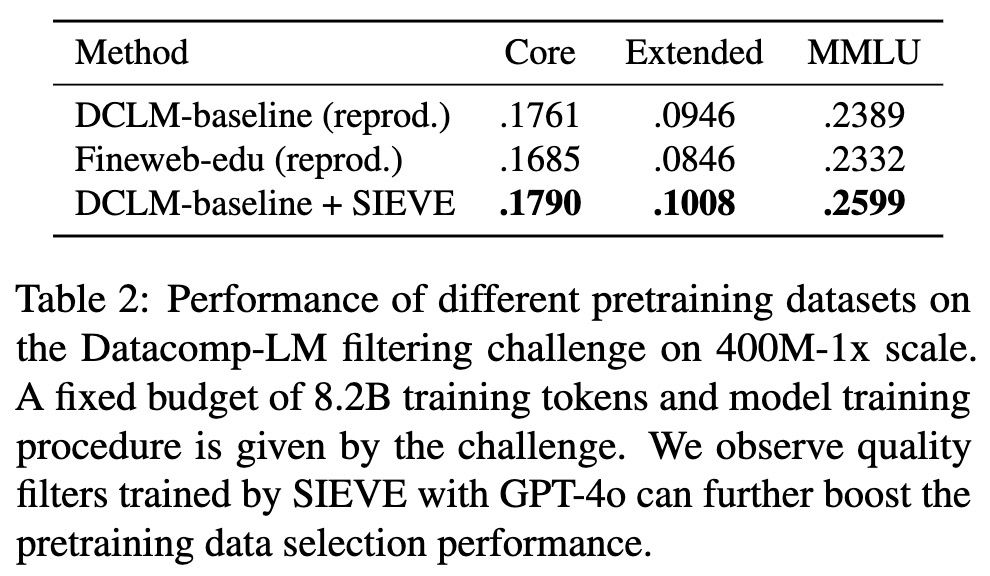

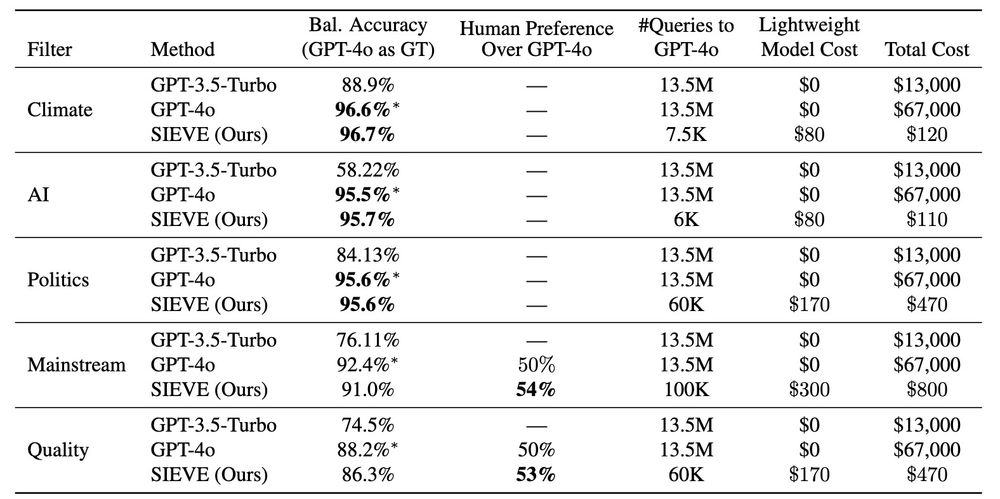

SIEVE improves upon existing quality filtering methods in the DataComp-LM challenge, producing better LLM pretraining data that led to improved model performance.

This work is part of Jifan's broader research on efficient ML training, from active learning to label-efficient SFT for LLMs.

07.02.2025 02:55 — 👍 0 🔁 0 💬 1 📌 0

Why does this matter? High-quality data is the bedrock of LLM training. SIEVE enables filtering trillions of web data for specific domains like medical/legal text with customizable natural language prompts.

07.02.2025 02:55 — 👍 0 🔁 0 💬 1 📌 0

SIEVE distills GPT-4's data filtering capabilities into lightweight models at <1% of the cost. Not just minor improvements - we're talking 500x more efficient filtering operations.

07.02.2025 02:55 — 👍 0 🔁 0 💬 1 📌 0

🧵 Heard all the buzz around distilling from OpenAI models? Check out @jifanz's latest work SIEVE - showing how strategic distillation can make LLM development radically more cost-effective while matching quality.

07.02.2025 02:55 — 👍 4 🔁 0 💬 1 📌 1

Maybe Trump should have read my mom's book: "For the first six weeks, the embryo, whether XX or XY, coasts along in sexual ambiguity." p. 25

23.01.2025 00:25 — 👍 2 🔁 0 💬 0 📌 0

Good luck with that

04.01.2025 01:20 — 👍 0 🔁 0 💬 1 📌 0

p.s. we don't know for sure if I said this or not

04.01.2025 00:36 — 👍 1 🔁 0 💬 1 📌 0

Is the solution treating everything electronic as "fake"?

Maybe?

04.01.2025 00:35 — 👍 1 🔁 0 💬 1 📌 0

Advocate for Peace (Inner and Outer)

https://litian96.github.io/

Limnologist and Associate Professor at UW-Madison. Canadian-American. Pocket and labrador enthusiast.

Professor at IST Austria | Machine learning, information theory | Prev: Stanford, EPFL | opinions my own

Assistant Professor at UC San Diego

Professor in Operations Research at Copenhagen Business School. In ❤️ with Sevilla and its Real Betis Balompié.

Associate Professor of Science and Technology Communication, Computational Social Science at University of Wisconsin-Madison | Stanford / Columbia / Fudan University alumni | Pianist

www.kaipingchen.com

Computer engineer and Professor/Department Chair at UT Austin #Energy #Computing #DiversityInTech. Math, art, literature lover. Opinions are my own. She/her.

Does research on machine learning at Sony AI, Barcelona. Works on audio analysis, synthesis, and retrieval. Likes tennis, music, and wine.

https://serrjoa.github.io/

Post-doc @ VU Amsterdam, prev University of Edinburgh.

Neurosymbolic Machine Learning, Generative Models, commonsense reasoning

https://www.emilevankrieken.com/

Wisconsin CS. Snorkel AI.

Working on machine learning & information theory.

https://pages.cs.wisc.edu/~fredsala/

Senior Staff Research Scientist @Google DeepMind, former Chair Prof @Oxford Uni

Distinguished Professor of ECE and Feedzai Professor of machine learning @istecnico.bsky.social.

Professor, photographer, music lover, curious about almost everything.

San Diego Dec 2-7, 25 and Mexico City Nov 30-Dec 5, 25. Comments to this account are not monitored. Please send feedback to townhall@neurips.cc.

International Conference on Learning Representations https://iclr.cc/

Powered by American Family Insurance

Researcher at Google. Improving LLM factuality, RAG and multimodal alignment and evaluation. San Diego. he/him ☀️🌱🧗🏻🏐 Prev UCSD, MSR, UW, UIUC.

Searching for principles of neural representation | Neuro + AI @ enigmaproject.ai | Stanford | sophiasanborn.com