🚨🧪 Announcing our #ICLR2026 Workshop, Generative AI in Genomics (Gen2): Barriers and Frontiers! @iclr-conf.bsky.social

📣Call for: Full workshop papers (5-8 pages) and Tiny papers (2-4 pages)

📅Submission deadline: 7 February 2026 AoE

🌐Learn more: genai-in-genomics.github.io

(1/7)

12.01.2026 03:15 —

👍 4

🔁 3

💬 1

📌 0

Really nice work!

04.12.2025 11:08 —

👍 2

🔁 0

💬 0

📌 0

Really interesting paper indeed.

20.11.2025 10:15 —

👍 1

🔁 0

💬 0

📌 0

Student Researcher, 2026 — Google Careers

🔥 WANTED: Student Researcher to join me, @vdebortoli.bsky.social, Jiaxin Shi, Kevin Li and @arthurgretton.bsky.social in DeepMind London.

You'll be working on Multimodal Diffusions for science. Apply here google.com/about/career...

15.11.2025 17:23 —

👍 29

🔁 14

💬 0

📌 0

We figured out flow matching over states that change dimension. With "Branching Flows", the model decides how big things must be! This works wherever flow matching works, with discrete, continuous, and manifold states. We think this will unlock some genuinely new capabilities.

10.11.2025 09:09 —

👍 24

🔁 12

💬 4

📌 2

Really nice.

05.11.2025 09:44 —

👍 3

🔁 1

💬 0

📌 0

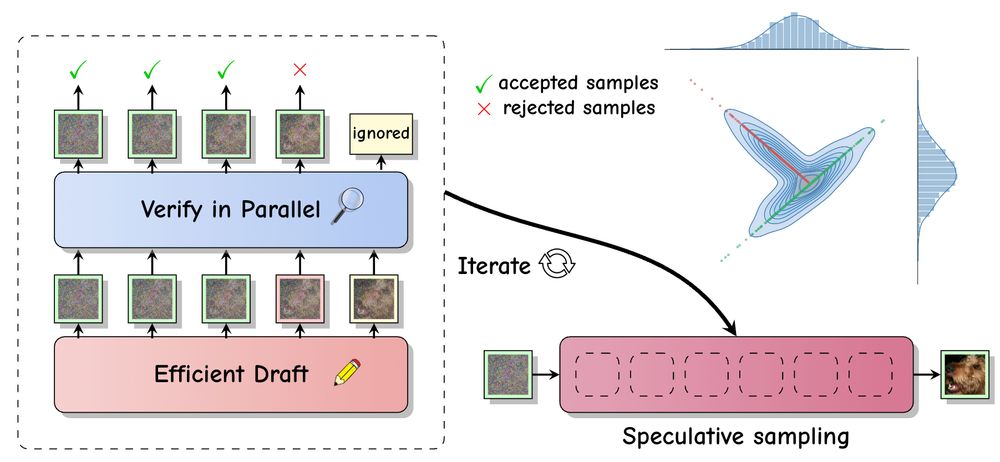

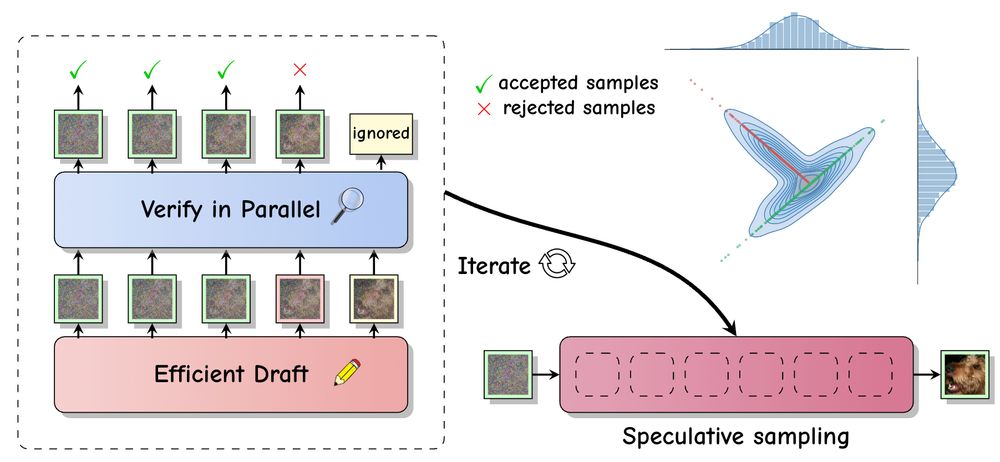

Very excited to share our preprint: Self-Speculative Masked Diffusions

We speed up sampling of masked diffusion models by ~2x by using speculative sampling and a hybrid non-causal / causal transformer

arxiv.org/abs/2510.03929

w/ @vdebortoli.bsky.social, Jiaxin Shi, @arnauddoucet.bsky.social

07.10.2025 22:09 —

👍 13

🔁 6

💬 0

📌 0

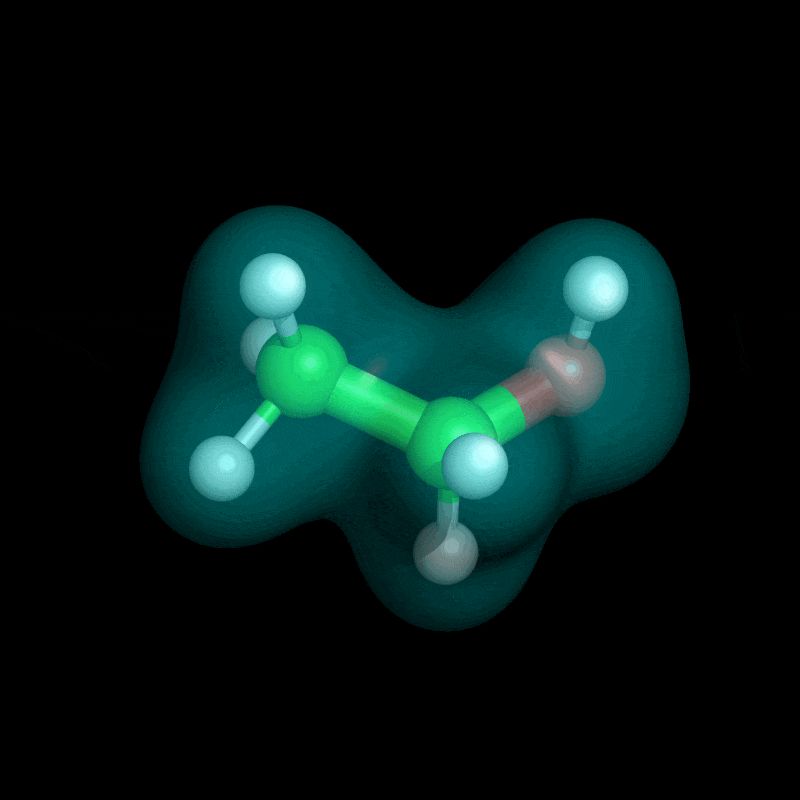

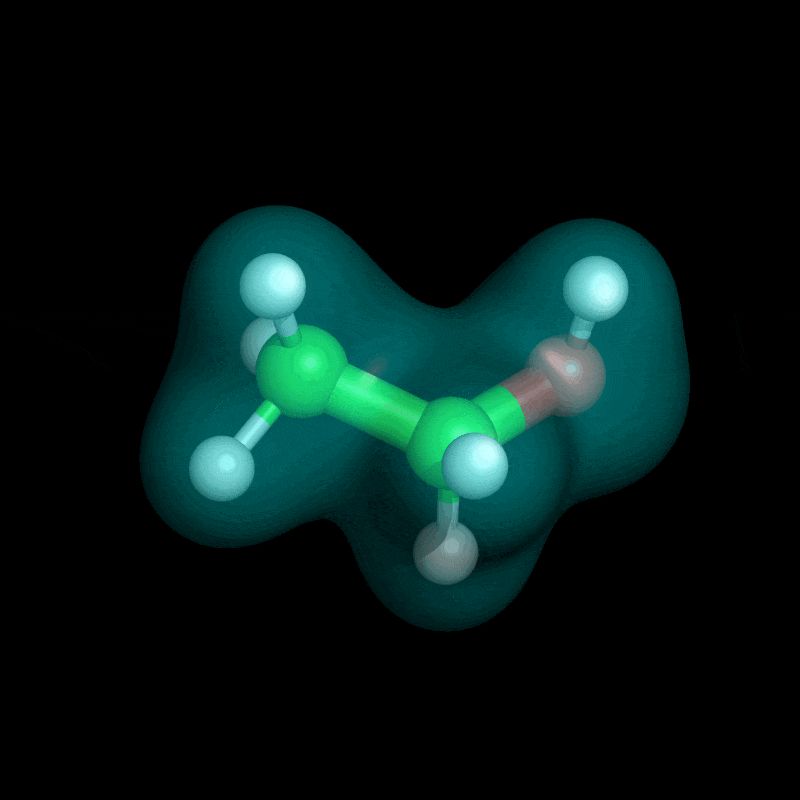

(1/n)🚨Train a model solving DFT for any geometry with almost no training data

Introducing Self-Refining Training for Amortized DFT: a variational method that predicts ground-state solutions across geometries and generates its own training data!

📜 arxiv.org/abs/2506.01225

💻 github.com/majhas/self-...

10.06.2025 19:49 —

👍 12

🔁 4

💬 1

📌 1

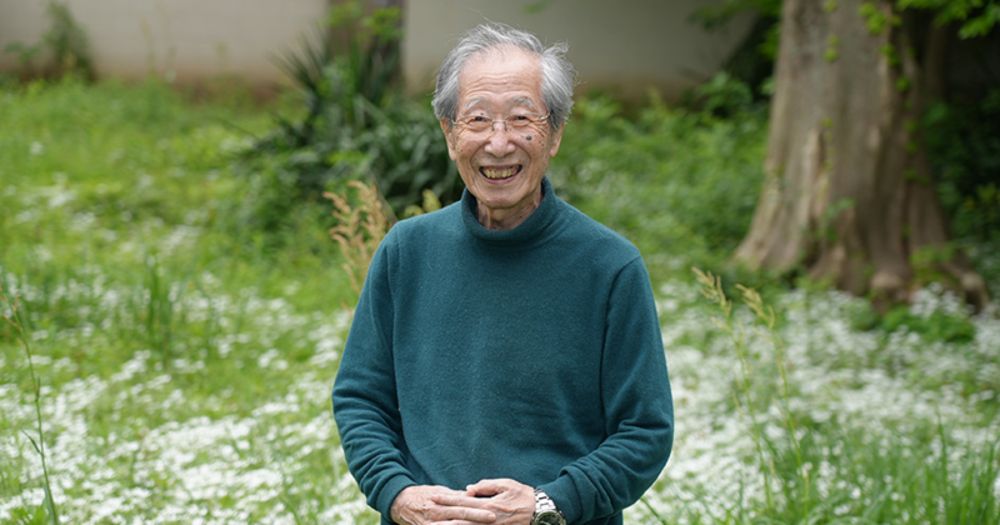

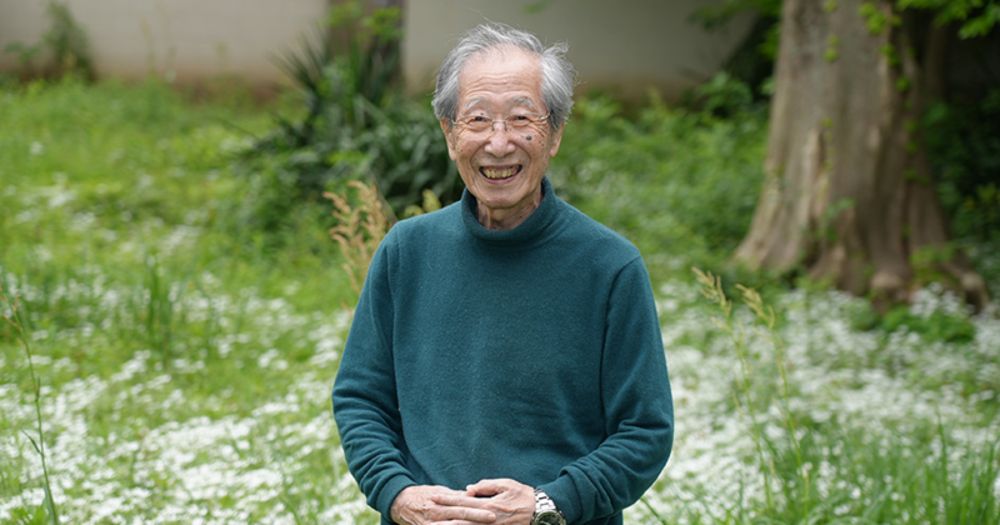

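Honorary Researcher Shunichi Amari receives the Kyoto Prize

Honorary Researcher Shunichi Amari (primary appointment: Specially Appointed Professor, Advanced Comprehensive Research Organization, Teikyo University) has received the 40th (2025) Kyoto Prize (Advanced Technology category; prize field: Information Science) in recognition of his pioneering research in artificial neural networks, machine learning, and information geometry.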

Shunichi Amari has been awarded the 40th (2025) Kyoto Prize in recognition of his pioneering research in the fields of artificial neural networks, machine learning, and information geometry

www.riken.jp/pr/news/2025...

20.06.2025 13:26 —

👍 35

🔁 12

💬 2

📌 0

LOGML 2025

London Geometry and Machine Learning Summer School, July 7-11 2025

🌟Applications open: LOGML 2025🌟

👥Mentor-led projects, expert talks, tutorials, socials, and a networking night

✍️Application form: logml.ai

🔬Projects: www.logml.ai/projects.html

📅Apply by 6th April 2025

✉️Questions? logml.committee@gmail.com

#MachineLearning #SummerSchool #LOGML #Geometry

11.03.2025 15:24 —

👍 20

🔁 9

💬 2

📌 1

Just write a short informal email. If the person needs a long-winded polite email to answer, then perhaps you don't want to have to interact with them.

09.03.2025 13:42 —

👍 1

🔁 0

💬 1

📌 0

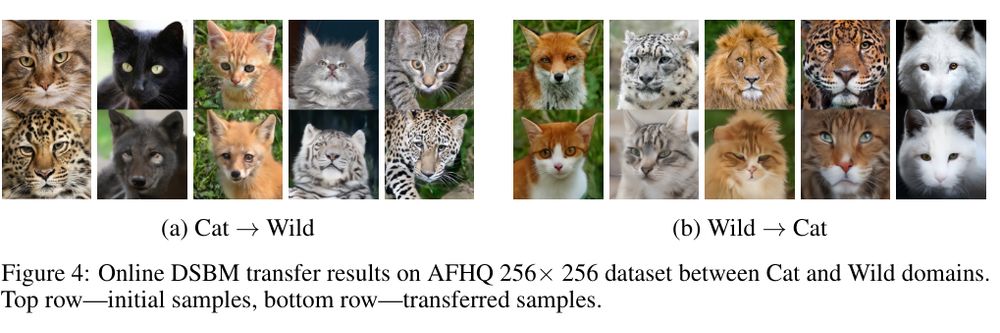

SuperDiff goes super big!

- Spotlight at #ICLR2025!🥳

- Stable Diffusion XL pipeline on HuggingFace huggingface.co/superdiff/su... made by Viktor Ohanesian

- New results for molecules in the camera-ready arxiv.org/abs/2412.17762

Let's celebrate with a prompt guessing game in the thread👇

06.03.2025 21:06 —

👍 14

🔁 4

💬 1

📌 1

Why academia is sleepwalking into self-destruction. My editorial @brain1878.bsky.social If you agree with the sentiments please repost. It's important for all our sakes to stop the madness

academic.oup.com/brain/articl...

06.03.2025 19:15 —

👍 539

🔁 309

💬 51

📌 104

Excited to see our paper “Computing Nonequilibrium Responses with Score-Shifted Stochastic Differential Equations” in Physical Review Letters this morning as an Editor’s Suggestion! We use ideas from generative modeling to unravel a rather technical problem. 🧵 journals.aps.org/prl/abstract...

04.03.2025 18:45 —

👍 10

🔁 2

💬 1

📌 0

Great intro to PAC-Bayes bounds. Highly recommended!

05.03.2025 09:55 —

👍 12

🔁 2

💬 0

📌 0

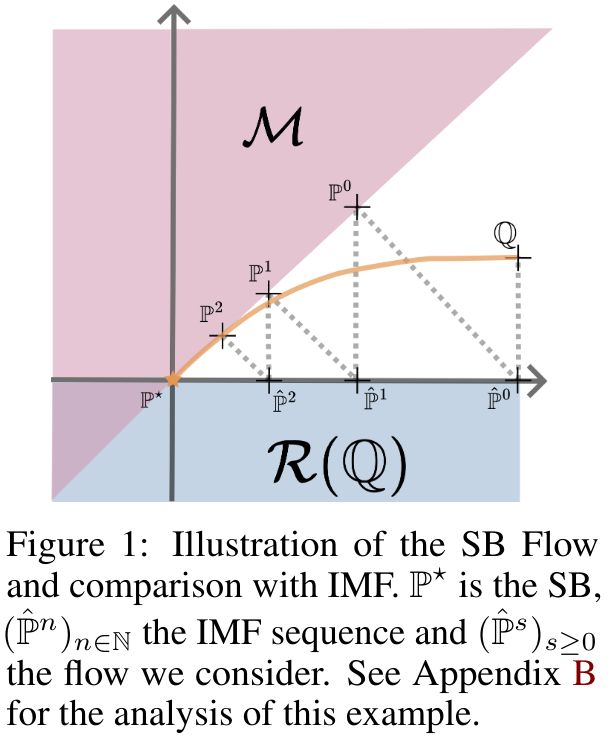

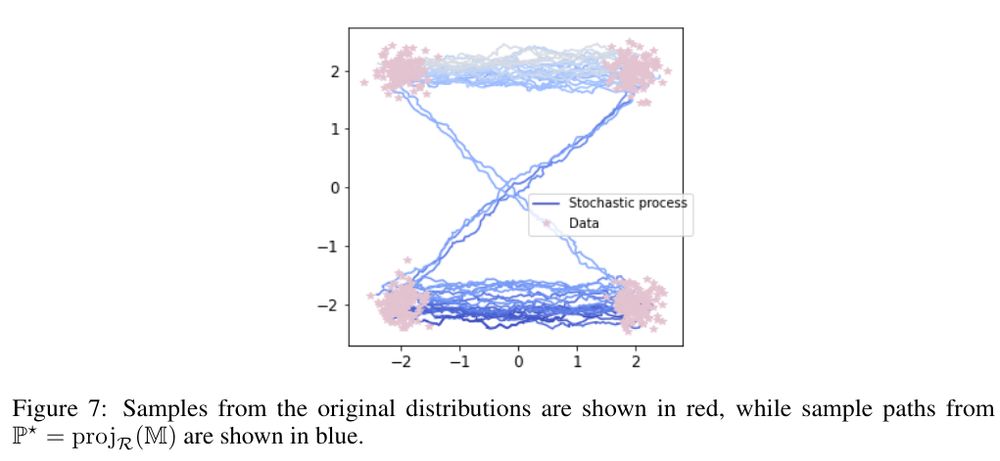

Well, you can do it, but we don't have any proof. We actually also ran alpha-DSBM with zero-variance noise, i.e. really an "alpha-rectified flow": experimentally it does "work", but we have no proof of convergence for the procedure.

08.02.2025 17:54 —

👍 3

🔁 0

💬 0

📌 0

Yes the trajectories are not quite smooth as they correspond to a Brownian bridge and, as the variance of the reference Brownian motion of your SB goes to zero, you get back to the deterministic and straight paths of OT.

08.02.2025 12:02 —

👍 4

🔁 1

💬 1

📌 0
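
A short worked formula may help with the reply above about Brownian-bridge trajectories. This is a sketch under assumed notation (endpoints $x_0$, $x_1$, reference noise scale $\sigma$, time $t \in [0,1]$), none of which appears in the post itself. Conditioned on its endpoints, a Brownian bridge has Gaussian marginals whose variance vanishes with $\sigma$, leaving the straight-line interpolation:

```latex
X_t \mid (X_0 = x_0,\, X_1 = x_1) \;\sim\; \mathcal{N}\!\big((1-t)\,x_0 + t\,x_1,\; \sigma^2\, t\,(1-t)\, I\big),
\qquad
\sigma \to 0 \;\Longrightarrow\; X_t \to (1-t)\,x_0 + t\,x_1 .
```

The formula only describes the path between fixed endpoints; which endpoints get paired is decided by the coupling, and that is where the link to OT mentioned in the post comes in.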

Better diffusions with scoring rules!

Fewer, larger denoising steps using distributional losses; learn the posterior distribution of clean samples given the noisy versions.

arxiv.org/pdf/2502.02483

@vdebortoli.bsky.social Galashov Guntupalli Zhou @sirbayes.bsky.social @arnauddoucet.bsky.social

05.02.2025 14:23 —

👍 29

🔁 8

💬 1

📌 3

On the Asymptotics of Importance Weighted Variational Inference

For complex latent variable models, the likelihood function is not available in closed form. In this context, a popular method to perform parameter estimation is Importance Weighted Variational Infere...

A standard ML approach for parameter estimation in latent variable models is to maximize the expectation of the logarithm of an importance sampling estimate of the intractable likelihood. We provide consistency/efficiency results for the resulting estimate: arxiv.org/abs/2501.08477

16.01.2025 17:43 —

👍 29

🔁 2

💬 0

📌 0
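
For readers unfamiliar with the objective in the post above, it can be sketched as follows. The notation is an assumption and is not taken from the post: $p_\theta(x, z)$ is the joint model, $q_\phi(z \mid x)$ the importance proposal, and $K$ the number of importance samples.

```latex
\mathcal{L}_K(\theta, \phi)
  = \mathbb{E}_{z_1, \dots, z_K \,\overset{\text{iid}}{\sim}\, q_\phi(\cdot \mid x)}
    \left[ \log \frac{1}{K} \sum_{k=1}^{K} \frac{p_\theta(x, z_k)}{q_\phi(z_k \mid x)} \right]
  \;\le\; \log p_\theta(x).
```

The inequality follows from Jensen's inequality, since the average inside the logarithm is an unbiased estimate of the intractable likelihood $p_\theta(x)$.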

Speculative sampling accelerates inference in LLMs by drafting future tokens which are verified in parallel. With @vdebortoli.bsky.social, A. Galashov & @arthurgretton.bsky.social, we extend this approach to (continuous-space) diffusion models: arxiv.org/abs/2501.05370

10.01.2025 16:30 —

👍 45

🔁 10

💬 0

📌 0
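
As a rough illustration of the draft-then-verify idea in the post above, here is a minimal sketch of the standard token-level accept/reject step for a single token over a finite vocabulary. It is not the continuous-space extension from the paper, and the names `speculative_step` and `empirical_distribution` are hypothetical helpers introduced only for this example.

```python
import random


def speculative_step(p, q, rng):
    """One draft/verify step over a finite vocabulary.

    p: target-model probabilities, q: draft-model probabilities
    (both lists summing to 1). Returns (token, accepted).
    """
    vocab = list(range(len(p)))
    # Draft a token from the cheap model q.
    x = rng.choices(vocab, weights=q)[0]
    # Verify: accept with probability min(1, p(x) / q(x)).
    if rng.random() < min(1.0, p[x] / q[x]):
        return x, True
    # On rejection, resample from the residual max(p - q, 0);
    # random.choices normalizes the weights internally.
    residual = [max(pi - qi, 0.0) for pi, qi in zip(p, q)]
    return rng.choices(vocab, weights=residual)[0], False


def empirical_distribution(p, q, n, seed=0):
    """Run n independent steps and return the observed token frequencies."""
    rng = random.Random(seed)
    counts = [0] * len(p)
    for _ in range(n):
        token, _ = speculative_step(p, q, rng)
        counts[token] += 1
    return [c / n for c in counts]
```

Calling `empirical_distribution([0.5, 0.3, 0.2], [0.2, 0.2, 0.6], 20000)` should give frequencies close to the target `p`, since the accept/reject rule leaves the target distribution unchanged whatever the draft is.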

I personally read at least a couple of hours per day. It is not particularly focused and I might "waste" time but I just enjoy it.

08.01.2025 08:05 —

👍 14

🔁 1

💬 1

📌 1

Very nice paper indeed. I like it.

27.12.2024 16:38 —

👍 2

🔁 0

💬 0

📌 0

🔊 Super excited to announce the first ever Frontiers of Probabilistic Inference: Learning meets Sampling workshop at #ICLR2025 @iclr-conf.bsky.social!

🔗 website: sites.google.com/view/fpiwork...

🔥 Call for papers: sites.google.com/view/fpiwork...

more details in thread below👇 🧵

18.12.2024 19:09 —

👍 84

🔁 19

💬 2

📌 3

BreimanLectureNeurIPS2024_Doucet.pdf

The slides of my NeurIPS lecture "From Diffusion Models to Schrödinger Bridges - Generative Modeling meets Optimal Transport" can be found here

drive.google.com/file/d/1eLa3...

15.12.2024 18:40 —

👍 327

🔁 67

💬 9

📌 5

One #postdoc position is still available at the National University of Singapore (NUS) to work on sampling, high-dimensional data-assimilation, and diffusion/flow models. Applications are open until the end of January. Details:

alexxthiery.github.io/jobs/2024_di...

15.12.2024 14:46 —

👍 41

🔁 18

💬 0

📌 0

I couldn't speak for the following 3 days :-)

14.12.2024 22:13 —

👍 2

🔁 0

💬 0

📌 0

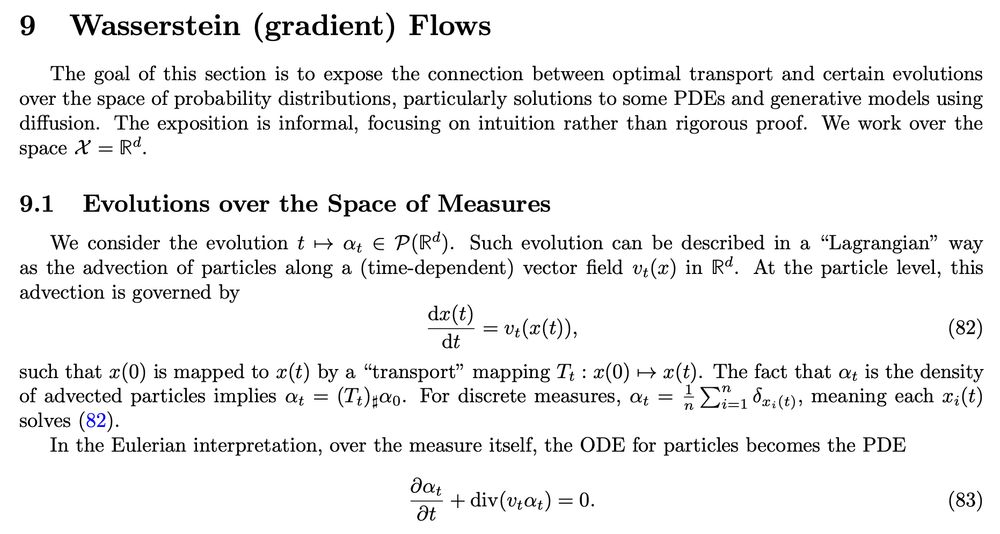

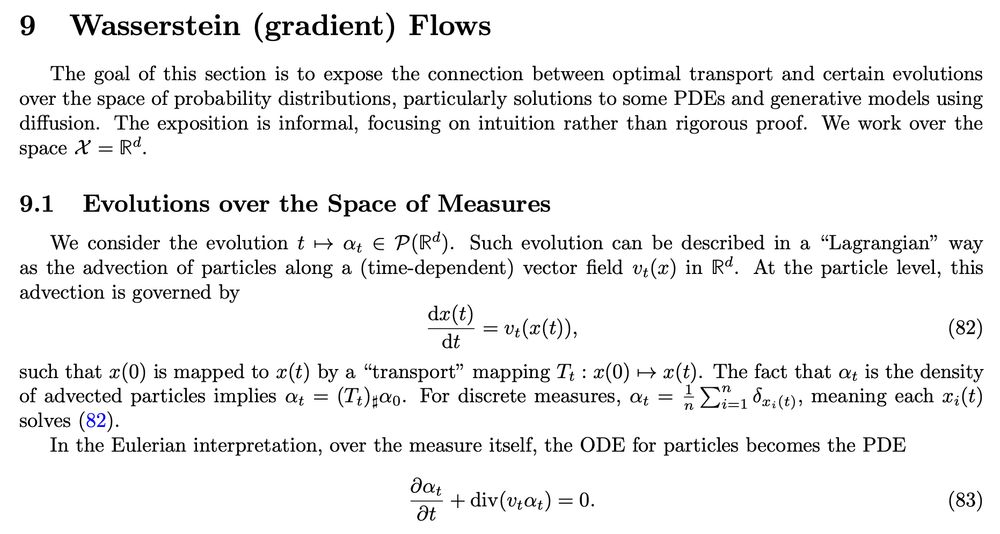

I have updated my course notes on Optimal Transport with a new Chapter 9 on Wasserstein flows. It includes 3 illustrative applications: training a 2-layer MLP, deep transformers, and flow-matching generative models. You can access it here: mathematical-tours.github.io/book-sources...

04.12.2024 08:11 —

👍 103

🔁 19

💬 2

📌 0

On the optimality of coin-betting for mean estimation

Confidence sequences are sequences of confidence sets that adapt to incoming data while maintaining validity. Recent advances have introduced an algorithmic formulation for constructing some of the ti...

exciting new work by my truly brilliant postdoc Eugenio Clerico on the optimality of coin-betting strategies for mean estimation!

for fans of: mean estimation, online learning with log loss, optimal portfolios, hypothesis testing with E-values, etc.

dig in:

arxiv.org/abs/2412.02640

04.12.2024 08:13 —

👍 41

🔁 8

💬 2

📌 0