Another great piece in @quantamagazine.bsky.social, this time on surprising new algorithms for shortest paths, with quotes from BARC head and shortest-path grand old man Mikkel Thorup.

09.08.2025 05:31

Call for workshops – FOCS 2025

The Call for Workshops for #FOCS2025 is up! Submit a proposal by September 5!

📋 focs.computer.org/2025/call-fo...

Workshop chairs: Mohsen Ghaffari and Dakshita Khurana

06.08.2025 02:19

Dara

DARA (Danish Advanced Research Academy) is a new initiative in which exceptional STEM candidates can get fully funded PhD scholarships at Danish universities. Those who are interested in applying should reach out to potential supervisors as soon as possible. daracademy.dk/fellowship/f...

28.06.2025 10:27

First European Workshop on Differential Privacy

September 17, 2026

At IST Austria

Finally, a workshop on Differential Privacy in Europe! It is organized by Monika Henzinger at IST Austria in September next year, so reserve the date! Monika’s STOC keynote, where the workshop was announced, was about the intersection of privacy and dynamic algorithms. Lots of questions in this space!

26.06.2025 11:57

Jonas Klausen hashing out the details at his PhD defense at BARC yesterday. Congratulations to Jonas for a successful defense!

13.06.2025 06:25

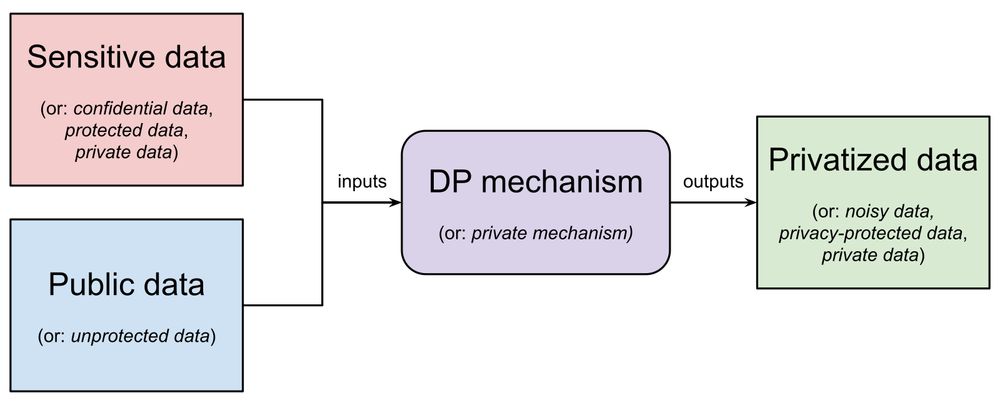

Comprehensive survey of correlated noise mechanisms and their application in private machine learning!

12.06.2025 06:03

Private Lossless Multiple Release

Koufogiannis et al. (2016) showed a $\textit{gradual release}$ result for Laplace noise-based differentially private mechanisms: given an $\varepsilon$-DP release, a new release with privacy parameter...

In an upcoming #icml paper with @jdandersson.net, @lukasretschmeier.de, and Boel Nelson we extend this line of work to more general settings, e.g. allowing releases to be made adaptively in arbitrary order and supporting new noise distributions. The paper is now on arXiv: arxiv.org/abs/2505.22449

30.05.2025 06:26

In 2015-2016 Koufogiannis, Han, and Pappas showed that multiple releases of Laplace and Gaussian mechanisms can be correlated such that having access to multiple releases exposes no more information than the least private release. In this sense we can create many releases with no loss in privacy!

30.05.2025 06:26
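Koufogiannis et al.'s construction for Laplace noise is more involved, but for Gaussian noise the idea of correlating releases can be sketched with a standard Gaussian conditioning (Brownian-motion style) step. This is a minimal sketch, not the algorithm from the ICML paper: it assumes a scalar query value `x` known to the curator and noise levels `sigma_prev > sigma_new`, and the helper names are hypothetical. The new, more accurate release is drawn conditionally on the old one, so the old release is distributed exactly as the new one plus independent noise, and the pair reveals no more than the more accurate release alone. Calibrating sigma to (ε, δ) and the query's sensitivity is left out.

```python
import math
import random


def gaussian_release(x, sigma, rng):
    """Gaussian mechanism for a scalar query value x: x + N(0, sigma^2)."""
    return x + rng.gauss(0.0, sigma)


def refine_release(x, y_prev, sigma_prev, sigma_new, rng):
    """Hypothetical helper: a more accurate release y_new = x + N(0, sigma_new^2),
    correlated with y_prev so that y_prev - y_new ~ N(0, sigma_prev^2 - sigma_new^2)
    independently of y_new. Then y_prev is post-processing of y_new."""
    if not 0 < sigma_new < sigma_prev:
        raise ValueError("need 0 < sigma_new < sigma_prev")
    z_prev = y_prev - x  # noise already contained in the earlier release
    ratio = sigma_new**2 / sigma_prev**2
    # If Z_prev = Z_new + W with Z_new ~ N(0, s_new^2) and W ~ N(0, s_prev^2 - s_new^2)
    # independent, then Z_new | Z_prev ~ N(ratio * Z_prev, s_new^2 * (1 - ratio)).
    mean = ratio * z_prev
    std = math.sqrt(sigma_new**2 * (1.0 - ratio))
    return x + mean + rng.gauss(0.0, std)
```

Releasing in the other direction (a noisier release after a more accurate one) needs no conditioning: adding fresh independent noise with variance `sigma_prev**2 - sigma_new**2` to the accurate release already gives the same joint distribution.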

Differential privacy usually deals with releasing information about a dataset at a fixed privacy level with as high utility as possible. In some settings there is a need to have *multiple* releases at different levels of privacy/trust, but how can this be done without degrading privacy?

30.05.2025 06:26

Back to Square Roots: An Optimal Bound on the Matrix Factorization Error for Multi-Epoch Differentially Private SGD

Nikita P. Kalinin, Ryan McKenna, Jalaj Upadhyay, Christoph H. Lampert

http://arxiv.org/abs/2505.12128

Matrix factorization mechanisms for differentially private training have emerged as a promising approach to improve model utility under privacy constraints. In practical settings, models are typically trained over multiple epochs, requiring matrix factorizations that account for repeated participation. Existing theoretical upper and lower bounds on multi-epoch factorization error leave a significant gap. In this work, we introduce a new explicit factorization method, Banded Inverse Square Root (BISR), which imposes a banded structure on the inverse correlation matrix. This factorization enables us to derive an explicit and tight characterization of the multi-epoch error. We further prove that BISR achieves asymptotically optimal error by matching the upper and lower bounds. Empirically, BISR performs on par with state-of-the-art factorization methods, while being simpler to implement, computationally efficient, and easier to analyze.

20.05.2025 03:50
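To make "a banded structure on the inverse correlation matrix" concrete, here is a rough single-epoch sketch under our own assumptions, not the paper's BISR definition or its multi-epoch analysis. It assumes the usual prefix-sum workload A (the lower-triangular all-ones matrix), starts from the classical square-root factorization B = C = A^{1/2}, keeps only the first `p` diagonals of C^{-1} = A^{-1/2}, and sets B = A C^{-1} so that A = BC holds exactly. Lower-triangular Toeplitz matrices are stored as their first column, so products and inverses become truncated power-series operations. The band width `p` and all function names are hypothetical.

```python
from math import comb


def inverse_sqrt_coeffs(n):
    """First column of A^{-1/2} for the n x n prefix-sum matrix A:
    the Taylor coefficients of (1 - x)^{1/2}."""
    return [1.0] + [-comb(2 * k, k) / ((2 * k - 1) * 4**k) for k in range(1, n)]


def series_mul(a, b):
    """Product of two lower-triangular Toeplitz matrices given by first columns
    (power-series multiplication truncated to len(a) terms)."""
    n = len(a)
    return [sum(a[i] * b[k - i] for i in range(k + 1)) for k in range(n)]


def series_inv(a):
    """Inverse of a lower-triangular Toeplitz matrix given by its first column."""
    n = len(a)
    inv = [0.0] * n
    inv[0] = 1.0 / a[0]
    for k in range(1, n):
        inv[k] = -sum(a[i] * inv[k - i] for i in range(1, k + 1)) / a[0]
    return inv


def banded_inverse_sqrt(n, p):
    """Hypothetical sketch: band C^{-1} = A^{-1/2} to its first p diagonals,
    then C = (banded C^{-1})^{-1} and B = A C^{-1}, so that A = B C."""
    c_inv = [r if k < p else 0.0 for k, r in enumerate(inverse_sqrt_coeffs(n))]
    c = series_inv(c_inv)
    a = [1.0] * n  # prefix-sum workload: all-ones lower triangle, i.e. 1 / (1 - x)
    b = series_mul(a, c_inv)
    return b, c
```

With `p = n` nothing is truncated and both B and C reduce to A^{1/2}, whose entries are `comb(2k, k) / 4**k`; with `p = 1`, C is the identity and B = A. The error analysis that is the paper's actual contribution is not reproduced here.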

I heard the research submitted to #neurips is so hot they need about 2000 ACs to manage it

19.05.2025 15:41

“Of course we're a little surprised. There's no hiding that,” says Axel Åhman of KAJ. Like being surprised by the outcome of a coin toss…

18.05.2025 09:28

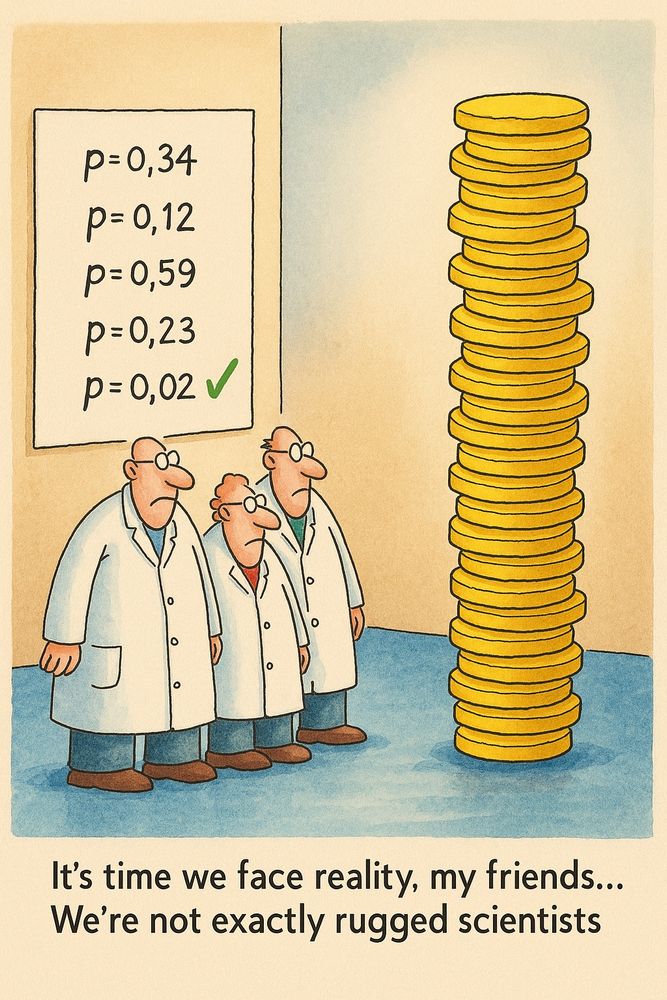

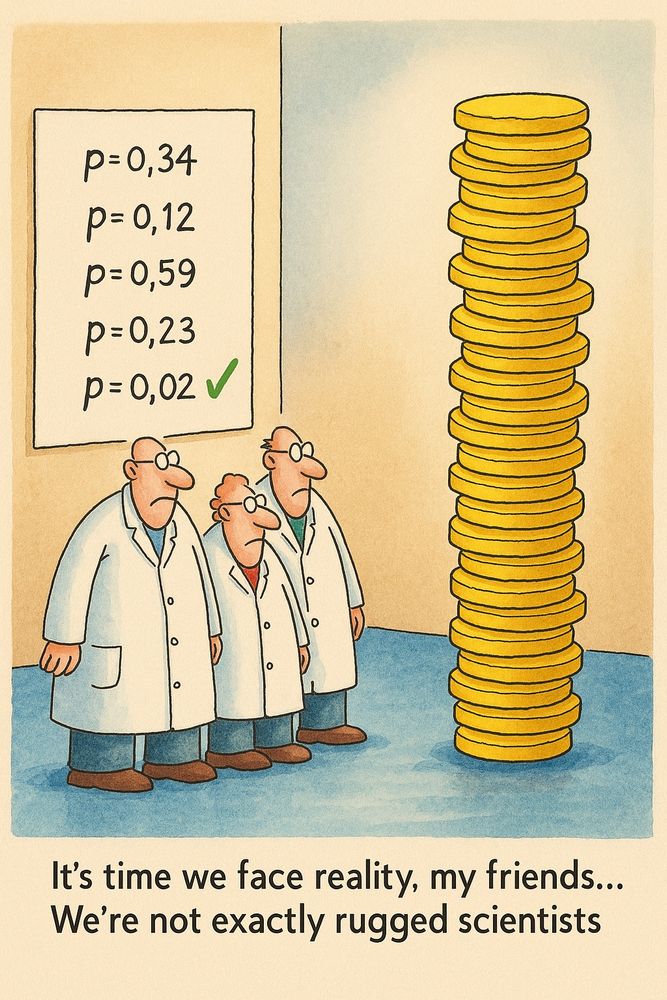

It’s a stack of experiments, I guess, repeated many times until they finally reject the null hypothesis

06.05.2025 08:17
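The joke rests on a simple calculation. Assuming the null hypothesis is true and the p-value is uniformly distributed, each independent repetition gives p < α with probability α, so the chance of at least one "significant" result in k tries is 1 − (1 − α)^k, and the number of tries until the first one is geometric with mean 1/α. A minimal sketch with hypothetical function names:

```python
def prob_at_least_one_rejection(k, alpha=0.05):
    """Chance that at least one of k independent tests of a true null has p < alpha."""
    return 1.0 - (1.0 - alpha) ** k


def expected_attempts_until_rejection(alpha=0.05):
    """Mean of the geometric number of repetitions until the first p < alpha."""
    return 1.0 / alpha
```

With α = 0.05, twenty repetitions already give about a 64% chance of a false positive, and on average it takes twenty tries to get one.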

Scientists standing in front of a bunch of p-values, most large but one smaller than 0.05.

Caption: It's time we face reality, my friends... We're not exactly rugged scientists.

With apologies to Gary Larson

05.05.2025 16:11

SODA 2026

The Call for Papers (CfP) for #SODA26 is out: www.siam.org/conferences-...

The submission server is open: soda26.hotcrp.com

Deadline: ⏰ Monday, July 14, AoE (July 15, 11:59am UTC)

30.04.2025 08:13
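The UTC time in parentheses follows from Anywhere on Earth (AoE) being the fixed offset UTC−12, so the last minute of Monday, July 14 in AoE is 11:59 UTC on July 15. A small sketch using the standard library's fixed-offset `timezone`; the helper name is hypothetical:

```python
from datetime import datetime, timedelta, timezone

AOE = timezone(timedelta(hours=-12), "AoE")  # Anywhere on Earth = UTC-12


def aoe_deadline_in_utc(year, month, day):
    """Return 23:59 AoE on the given day, converted to UTC."""
    deadline = datetime(year, month, day, 23, 59, tzinfo=AOE)
    return deadline.astimezone(timezone.utc)
```

For example, `aoe_deadline_in_utc(2025, 7, 14)` gives 2025-07-15 11:59 UTC, matching the time stated in the call.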

- Research is needed not just on core ML methods, but on AI in combination with cybersecurity, formal verification, human-computer interaction, and more.

The post explicitly does not address military use of AI, which would "require a deeper analysis"

22.04.2025 08:00

- AI has potential for harmful use but can also be used to defend against harm; the key is ensuring a playing field that gives defensive use an advantage

- Trying to limit the development of capability is likely to be counterproductive

- Regulation should address AI application, not capability

22.04.2025 08:00

Post authors @randomwalker.bsky.social and @sayash.bsky.social do a great job of articulating the view. Some takeaways:

- Many societal issues associated with AI are not new, but have arisen in other contexts

- We can build on existing approaches to mitigating risk of harm through regulation

22.04.2025 08:00

"AI as normal technology" captures a view that many of us hold but that has not been very visible in the debates around AI. I used Substack's text-to-audio function for the first time to listen to www.aisnakeoil.com/p/ai-as-norm... (skipping the last 30 minutes, which were just the reference list being read aloud...)

22.04.2025 08:00

ESA – ALGO2025

The European Symposium on Algorithms 2025 will be held in Warsaw in September, as part of ALGO 2025. Do you have great work on the design and analysis of algorithms? Submit it by April 23! algo-conference.org/2025/esa/

08.04.2025 14:45

Trump’s Latest Weapon Against Critics: Destroying Their Lawyers

When a president uses executive power to not just blacklist but effectively destroy a major law firm, solely for representing political opponents, it means he’s given up any pretense that he’s not …

There are a million horrible things happening all at once, but wanted to take a moment to focus on the exec order against Perkins Coie and how it's an attempt to make it difficult for anyone to use the legal process to challenge all this law breaking.

www.techdirt.com/2025/03/11/t...

11.03.2025 18:33

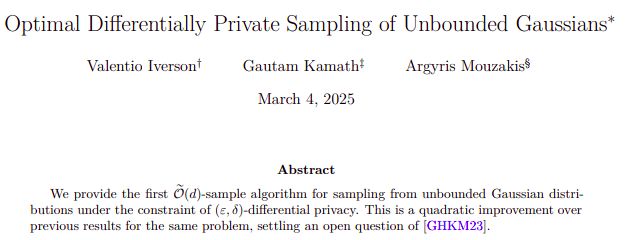

🧵New paper on arXiv: Optimal Differentially Private Sampling of Unbounded Gaussians.

With @uwcheritoncs.bsky.social undergrad Valentio Iverson and PhD student Argyris Mouzakis (@argymouz.bsky.social).

The first O(d) algorithm for privately sampling arbitrary Gaussians! arxiv.org/abs/2503.01766 1/n

04.03.2025 15:25

Mathematician at UCLA. My primary social media account is https://mathstodon.xyz/@tao . I also have a blog at https://terrytao.wordpress.com/ and a home page at https://www.math.ucla.edu/~tao/

Strengthening Europe's Leadership in AI through Research Excellence | ellis.eu

Journalist, Weekendavisen.

Winemaker, Sonoma, California.

Professor at ISTA (Institute of Science and Technology Austria), heading the Machine Learning and Computer Vision group. We work on Trustworthy ML (robustness, fairness, privacy) and transfer learning (continual, meta, lifelong). 🔗 https://cvml.ist.ac.at

Wants to understand how everything works, and preferably for you to understand it too. More curious than worried. Like everyone else, made of 60% water and 40% dirt. Also available as @avadeaux@mastodonsweden.se: https://bsky.app/profile/avadeaux.mastodonsweden.se.ap.brid.gy

Concerned world citizen and professor of mathematical statistics (in that order).

assistant prof | networks, data, decisions

https://aminrahimian.github.io/

https://sociotechnical.pitt.edu/

PhD student in differential privacy and mobility at the University of Padova. Master's in Theoretical Physics.

Love long hikes and music.

PhD candidate at University College London, CDT in Foundational AI, Turing Enrichment Scholar 2024/2025

Reinforcement Learning, Robustness, Differential Privacy

IEEE Conference on Secure and Trustworthy Machine Learning

March 2026 (Munich) • #SaTML2026

https://satml.org/

CS PhD at @sydney.edu.au. Working on Differential Privacy. Interested in TCS and maths, particularly learning, testing and statistics

The world's leading venue for collaborative research in theoretical computer science. Follow us at http://YouTube.com/SimonsInstitute.

Computer Science PhD Student at MIT

https://jusyc.github.io/