🚨 Got a great idea for an AI + Security competition?

@satml.org is now accepting proposals for its Competition Track! Showcase your challenge and engage the community.

👉 satml.org/call-for-com...

🗓️ Deadline: Aug 6

@satml.org.bsky.social

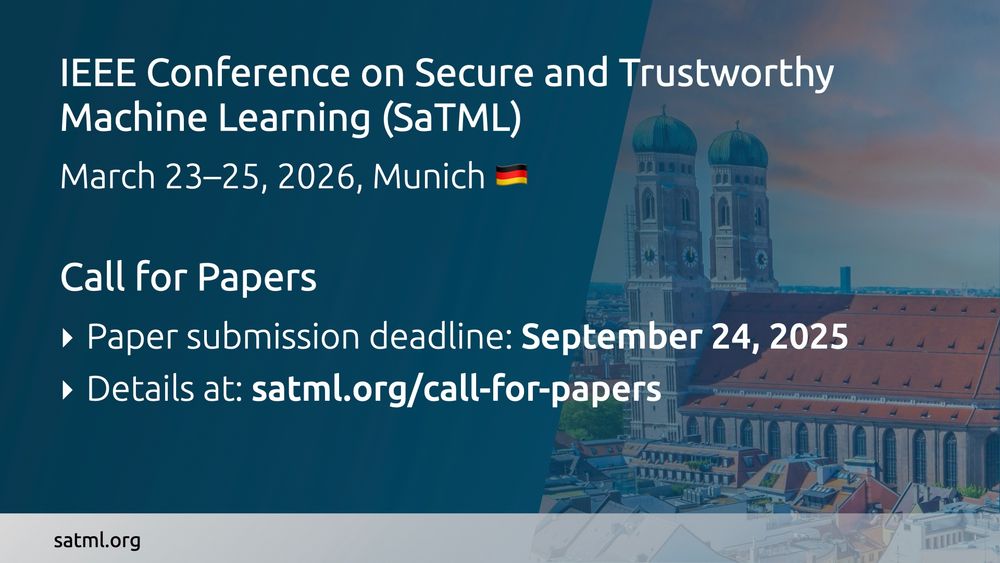

IEEE Conference on Secure and Trustworthy Machine Learning March 2026 (Munich) • #SaTML2026 https://satml.org/

Call for Competitions. Competition proposal deadline: August 6, 2025. Decision notification: August 27, 2025.

We’re happy to announce the Call for Competitions for @satml.org.

The competition track has been a highlight of SaTML, featuring exciting topics and strong participation. If you’d like to host one for SaTML 2026, visit:

👉 satml.org/call-for-com...

⏰ Deadline: Aug 6

IEEE Conference on Secure and Trustworthy Machine Learning (SaTML), March 23-25, 2026, Munich. Submission deadline: September 24, 2025.

We're excited to announce the Call for Papers for SaTML 2026, the premier conference on secure and trustworthy machine learning @satml.org

We seek papers on secure, private, and fair learning algorithms and systems.

👉 satml.org/call-for-pap...

⏰ Deadline: Sept 24

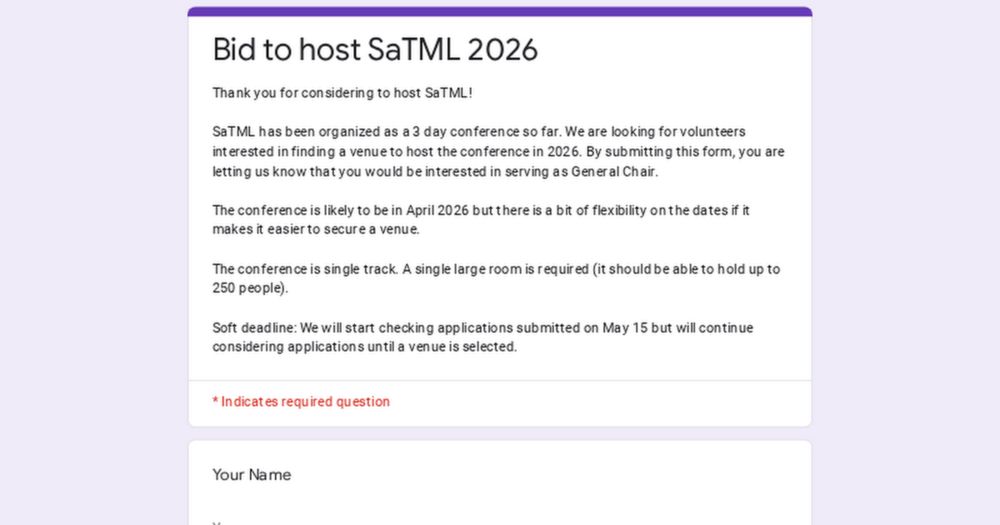

🌍 Help shape the future of SaTML!

We are on the hunt for a 2026 host city, and you could lead the way. Submit a bid to become General Chair of the conference:

forms.gle/vozsaXjCoPzc...

🚨 SaTML is searching for its 2026 home!

Interested in becoming General Chair and hosting the conference in your city or at your institution? We’d love to hear from you. Place a bid here:

👉 forms.gle/kbxtwZddpcLD...

🎤 That’s a wrap on #SaTML25! Huge thanks to the speakers, organizers, reviewers, and everyone who joined the conversation. See you next time!

11.04.2025 14:42

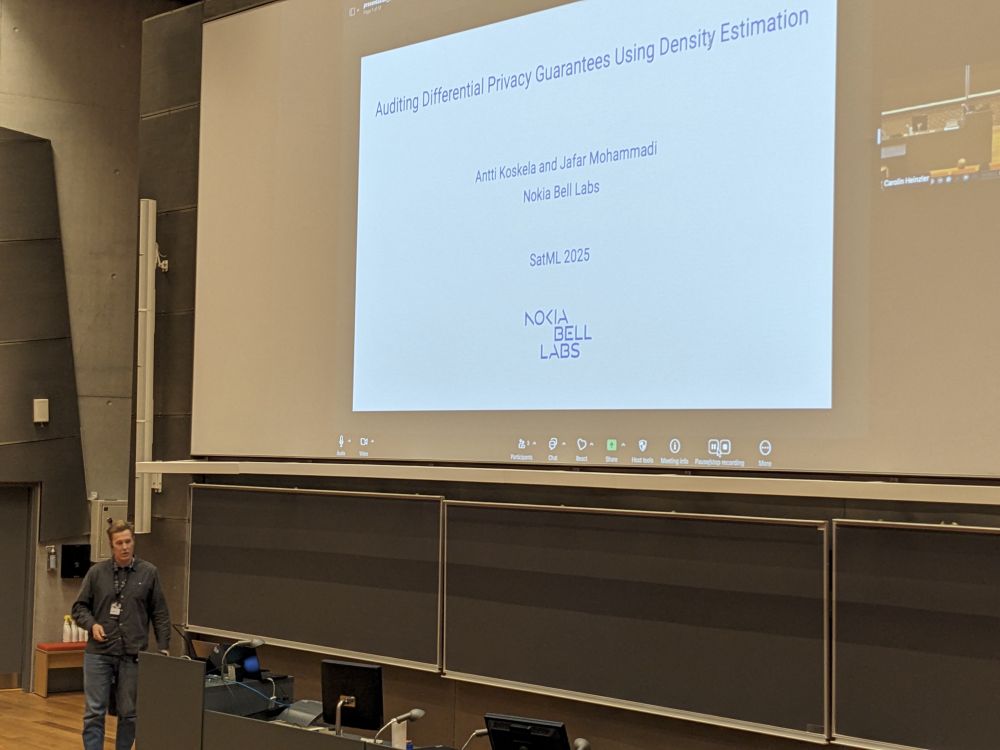

🔍 How private was that release? @a-h-koskela.bsky.social presents a method for auditing DP guarantees using density estimation. #SaTML25

11.04.2025 14:24

🧮 Getting the math right. @matt19234.bsky.social walks through common traps in privacy accounting and how to avoid them. #SaTML25

11.04.2025 14:12

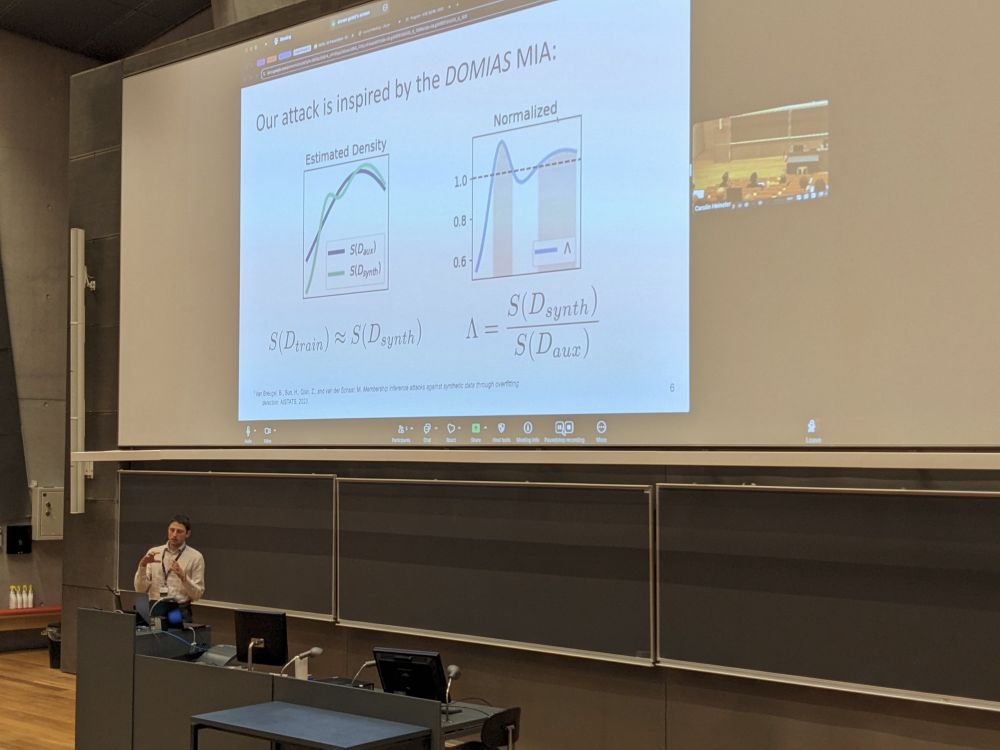

🧠 Marginals leak. Steven Golob shows how synthetic data built on marginals can still compromise privacy. Paper: arxiv.org/abs/2410.05506 #SaTML25

11.04.2025 14:03

📃🔐 Privacy and fairness? Khang Tran introduces FairDP, enabling fairness certification alongside differential privacy. Paper: arxiv.org/abs/2305.16474 #SaTML25

11.04.2025 13:43

📏 Wrapping up the talks with deep dives into differential privacy: Session 14 gets technical, from fairness to auditing.

11.04.2025 13:41

🖼️📡 Hide and seek. Luke Bauer presents a method for covert messaging with provable security via image diffusion. Paper: arxiv.org/abs/2503.10063 #SaTML25

11.04.2025 13:11

💣 Still work to do. Yigitcan Kaya makes the case that ML-based behavioral malware detection is fragile and far from solved. Paper: arxiv.org/abs/2405.06124 #SaTML25

11.04.2025 12:53

🕵️‍♂️ From detection to covert messaging, Session 13 explores the gray areas of ML security. #SaTML25

11.04.2025 12:50

💻 What can you learn privately when compute is tight? Zachary Charles tackles user-level privacy under realistic constraints. #SaTML25

11.04.2025 12:19

📊 Not all public datasets are equal. Xin Gu proposes a new metric—gradient subspace distance—to guide private learning choices. Paper: arxiv.org/abs/2303.01256 #SaTML25

11.04.2025 12:03

📚🔒 Choose wisely. Kristian Schwethelm presents a method to balance data utility and privacy in active learning. Paper: arxiv.org/abs/2410.00542 #SaTML25

11.04.2025 11:49

⚖️ Privacy isn’t always fair. Kai Yao breaks down the mechanisms that can introduce unfairness into private learning. Paper: arxiv.org/abs/2501.14414 #SaTML25

11.04.2025 11:32

🔐 Starting the final afternoon at #SaTML25 with Session 12, covering private learning from all angles: fairness, dataset selection, active learning, and budget-aware privacy.

11.04.2025 11:30

🌲💀 Even decision trees aren’t safe. Lorenzo Cazzaro shows how to poison tree-based models. Paper: arxiv.org/abs/2410.00862 #SaTML25

11.04.2025 09:59

🚗🔦 How robust are LiDAR detectors? Alexandra Arzberger presents Hi-ALPS, benchmarking six systems used in autonomous vehicles. Paper: arxiv.org/abs/2503.17168 #SaTML25

11.04.2025 09:41

🎯 Robustness meets domain adaptation. Natalia Ponomareva introduces DART, a principled method for adapting without labels—and withstanding attacks. #SaTML25

11.04.2025 09:24

🛡️🌍 Session 11 at #SaTML25 is all about making models that hold up across domains and sensors, and even against sneaky poisoning of tree-based models.

11.04.2025 09:22

🔍 A fairness reality check. Claire Zhang surveys the landscape of fair clustering—what works, what doesn’t, and what’s next. #SaTML25

11.04.2025 08:55

🎯 Adversarial incentives meet fairness. Emily Diana presents a minimax approach to fairness when users can game the system. #SaTML25

11.04.2025 08:38

🌀 Trying to be fair… and failing? Natasa Krco argues that efforts to reduce bias can themselves be arbitrary—or even unfair. #SaTML25

11.04.2025 08:24

🌍 No central authority, no problem? Sayan Biswas explores fairness challenges and solutions in decentralized learning systems. Paper: arxiv.org/abs/2410.02541 #SaTML25

11.04.2025 08:10

⚖️ In Session 10, #SaTML25 takes a hard look at fairness: decentralized setups, strategic behavior, and when fairness efforts might backfire.

11.04.2025 08:01

☀️ Kicking off the final day of #SaTML25 with a big question: Should you trust artificial intelligence? Matt Turek takes the stage for this morning’s keynote on the path toward trustworthy AI.

11.04.2025 07:08

🌈 Can machines see color like we do? Ming-Chang Chiu presents ColorSense, exploring color perception in machine vision. Paper: arxiv.org/abs/2212.08650 #SaTML25

10.04.2025 15:35