On March 4th, I’ll be in Philadelphia for the final day of #AAAI workshops, presenting our work on Sequence Reinforcement Learning at not one, but TWO workshops!

Morning: I will be presenting a poster at GenPlan.

Evening: I will be giving a talk on the same work at PRL.

If you’ll be at AAAI, let’s connect!

25.02.2025 16:46

Office Hours | Devdhar Patel

I’m holding open office hours for anyone who needs help with Python programming. They run in parallel with UMass CS 119, which I am teaching this semester. More details here: www.devdharpatel.com/office-hours

Does anyone you know need Python help? Share this! #Python #LearnToCode #OpenOfficeHours

27.01.2025 18:14

As an aside, @iclr-conf.bsky.social, how are top reviewers identified? I’d love to nominate two of mine!

24.01.2025 19:43

Comments from the Program Chair on our NeurIPS submission:

- We ran only 1 seed (incorrect; even ChatGPT got this right!)

- No code submitted (they searched the paper for "GitHub" instead of checking the supplemental materials)

While we may have been below the bar for NeurIPS, the reasons cited were outright false.

24.01.2025 19:43

📝 Sharing my contrasting experiences with the #ICLR and #NeurIPS review processes:

At #ICLR, our paper’s average score jumped from 4.2 to 7 thanks to thoughtful reviewer correspondence during the rebuttal/discussion phase.

In contrast, the #NeurIPS feedback we received felt... less constructive.

24.01.2025 19:43

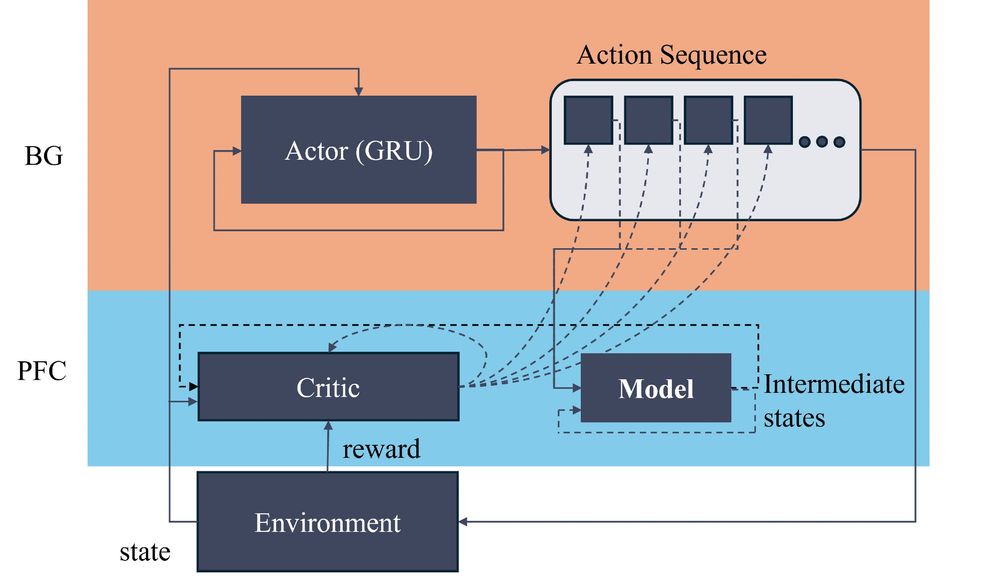

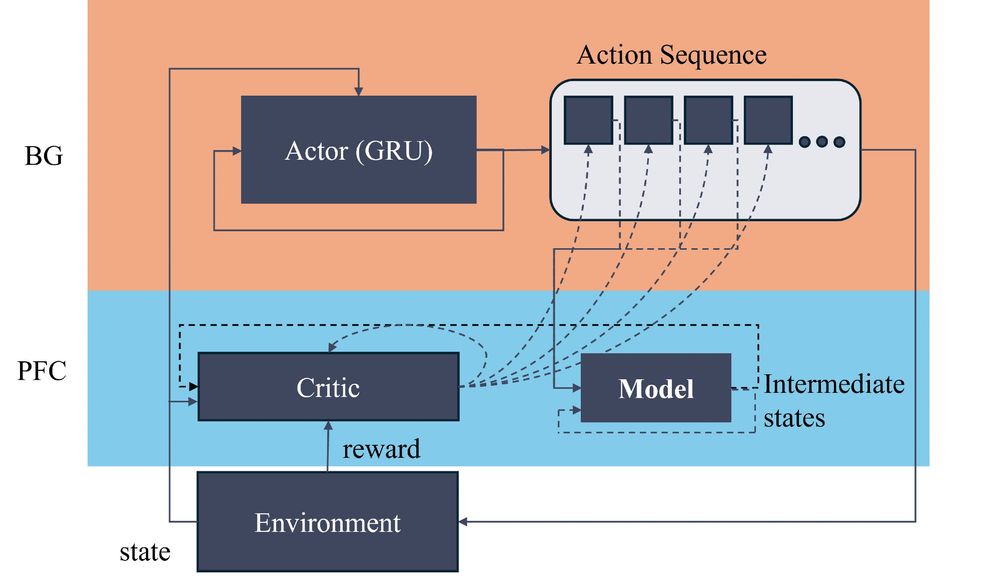

Sequence RL model

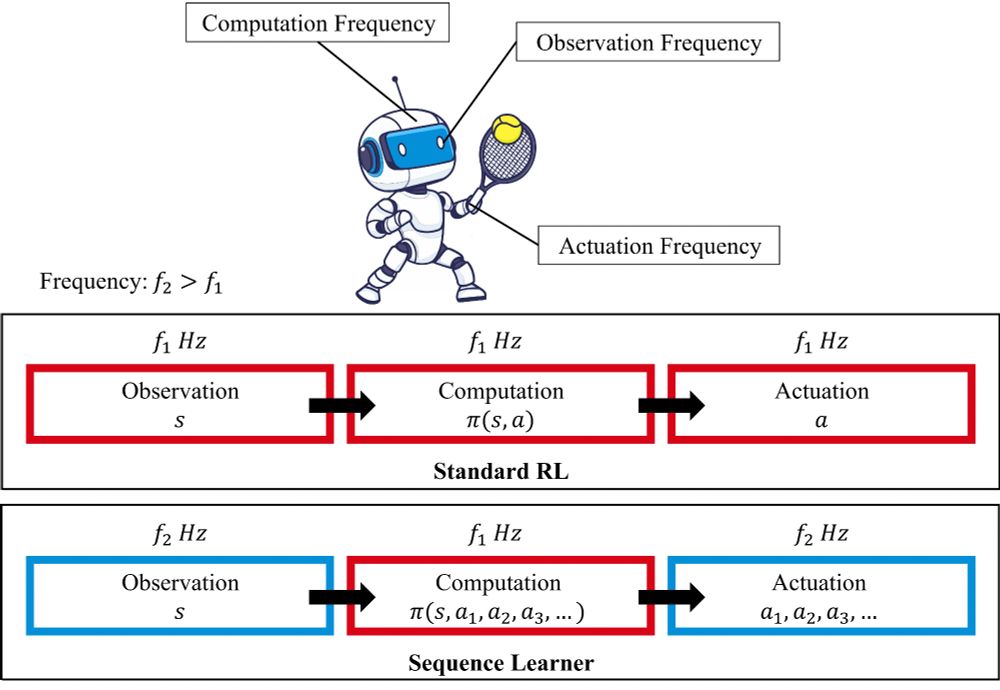

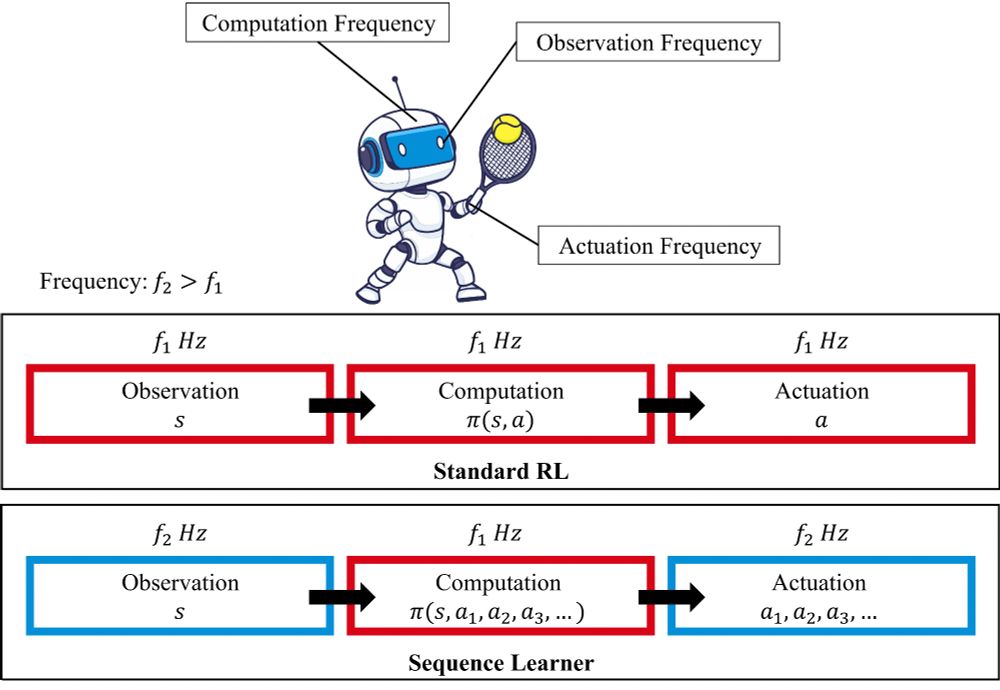

Difference between Standard RL and Sequence RL

Excited to share that we have two papers accepted to #ICLR2025 #ICLR!

One of them:

🧠 "Overcoming Slow Decision Frequencies in Continuous Control"

✨ Brain-inspired, model-based Sequence RL achieving SOTA performance at human-level decision speeds!

#ReinforcementLearning #AI

24.01.2025 19:08

This poor quality of assessment not only demotivates researchers but also calls into question any claims of fairness and accountability made by NeurIPS. (4/4)

14.12.2024 19:08

Lastly, the PC did not check the supplementary materials for our code. Instead, they searched our paper for a GitHub link (in a double-blind review process!) and said we had made a spurious claim about including our code. (3/4)

14.12.2024 19:08

For example, although we cite many neuroscience papers, the PC claims that none of our work is supported by neuroscience or behavioral data. We clearly present results with error bars, averaged over 5 seeds (explicitly stated in the paper), yet the PC claimed we presented only 1 seed. (2/4)

14.12.2024 19:08
