We’ve discovered a literal miracle with almost unlimited potential and it’s being scrapped for *no reason whatsoever*. This isn’t even nihilism, it’s outright worship of death and human suffering.

05.08.2025 23:09 — 👍 10488 🔁 3350 💬 50 📌 167

Attention Is Off By One

Let’s fix these pesky Transformer outliers using Softmax One and QuietAttention.

Really great pointer from Hao Zhang on the other site in relation to GPT OSS use of attention sinks.

If I were to guess, the attention sink is what allows them to omit QK-Norm which has become otherwise standard.

www.evanmiller.org/attention-is...

06.08.2025 12:48 — 👍 0 🔁 0 💬 0 📌 0
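
For readers who haven't seen the linked post, here is a minimal sketch of the "Softmax One" idea it names, assuming the usual definition exp(x_i) / (1 + sum_j exp(x_j)). The function names are illustrative and not taken from any library. The extra 1 in the denominator acts like an always-present slot with logit 0 whose weight is discarded, so a head can assign almost no attention anywhere when every score is very negative.

```python
import math

def softmax(scores):
    """Standard softmax: the weights always sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_one(scores):
    """Softmax with +1 in the denominator: the weights can sum to less than 1."""
    # Shift by max(max(scores), 0) so the implicit zero logit is also stabilized.
    m = max(max(scores), 0.0)
    exps = [math.exp(s - m) for s in scores]
    total = math.exp(-m) + sum(exps)  # exp(-m) is the shifted "+1" term
    return [e / total for e in exps]

if __name__ == "__main__":
    quiet = [-10.0] * 4
    print(sum(softmax(quiet)))      # forced to attend somewhere
    print(sum(softmax_one(quiet)))  # free to attend (almost) nowhere
```

When one score is large, the two functions give nearly identical weights; they differ only when all scores are low.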

Alt Text:

Conference schedule for July 28th (Monday) and July 29th (Tuesday), listing talk titles, locations, times, and authors:

July 28th, Monday:

1. Attacking Vision-Language Computer Agents via Pop-ups

Location: Hall 4/5, Time: 11:00–12:30

Authors: Yanzhe Zhang, Tao Yu, Diyi Yang

2. SPHERE: An Evaluation Card for Human-AI Systems

Location: Hall 4/5, Time: 18:00–19:30

Authors: Dora Zhao*, Qianou Ma*, Xinran Zhao, Chenglei Si, Chenyang Yang, Ryan Louie, Ehud Reiter, Diyi Yang*, Tongshuang Wu*

(asterisk denotes equal contribution)

July 29th, Tuesday:

1. SynthesizeMe! Inducing Persona-Guided Prompts for Personalized Reward Models in LLMs

Location: Hall 4/5, Time: 10:30–12:00

Authors: Michael J Ryan, Omar Shaikh, Aditri Bhagirath, Daniel Frees, William Barr Held, Diyi Yang

2. Distilling an End-to-End Voice Assistant Without Instruction Training Data

Location: Room 1.61, Time: 14:12 (Second Talk)

Authors: William Barr Held, Yanzhe Zhang, Weiyan Shi, Minzhi Li, Michael J Ryan, Diyi Yang

3. Mind the Gap: Static and Interactive Evaluations of Large Audio Models

Location: Room 1.61 (implied), follows previous talk

Authors: Minzhi Li*, William Barr Held*, Michael J Ryan, Kunat Pipatanakul, Potsawee Manakul, Hao Zhu, Diyi Yang

(asterisk denotes equal contribution)

4. EgoNormia: Benchmarking Physical Social Norm Understanding

Location: Hall 4/5, Time: 16:00–17:30

Authors: MohammadHossein Rezaei*, Yicheng Fu*, Phil Cuvin*, Caleb Ziems, Yanzhe Zhang, Hao Zhu, Diyi Yang

(asterisk denotes equal contribution)

The SALT Lab is at #ACL2025 with our genius leader @diyiyang.bsky.social.

Come see work from

@yanzhe.bsky.social,

@dorazhao.bsky.social @oshaikh.bsky.social,

@michaelryan207.bsky.social, and myself at any of the talks and posters below!

28.07.2025 07:45 — 👍 2 🔁 0 💬 0 📌 0

Paper: aclanthology.org/2025.acl-lon...

28.07.2025 04:25 — 👍 0 🔁 0 💬 0 📌 0

I'm in Vienna for #ACL2025!

My work is all presented tomorrow, but today you'll find me at the poster session from 11-12:30 evangelizing

my labmate Yanzhe Zhang's work on his behalf.

If you're interested in the risks traditional pop-up attacks present for AI agents, come chat!

28.07.2025 04:24 — 👍 4 🔁 0 💬 1 📌 0

It seems (at a minimum) like they post-trained on the virulently racist content from this thread. Musk framed this as a request for training data... and the top post is eugenics. Seems unlikely to be coincidence that the post uses the same phrasing as the prompt they later removed...

10.07.2025 05:20 — 👍 2 🔁 0 💬 0 📌 0

Btw, all of this is very nice for something that was a quick 15 line addition to Levanter.

github.com/stanford-crf...

03.07.2025 15:14 — 👍 0 🔁 0 💬 0 📌 0

Adding an Optimizer for Speedrun - Marin Documentation

Documentation for the Marin project

Have an optimizer you want to prove works better than AdamC/Muon/etc?

Submit a speedrun to Marin! marin.readthedocs.io/en/latest/tu...

For PRs with promising results, we're lucky to be able to help test at scale on compute generously provided by the TPU Research Cloud!

03.07.2025 15:14 — 👍 0 🔁 0 💬 1 📌 0

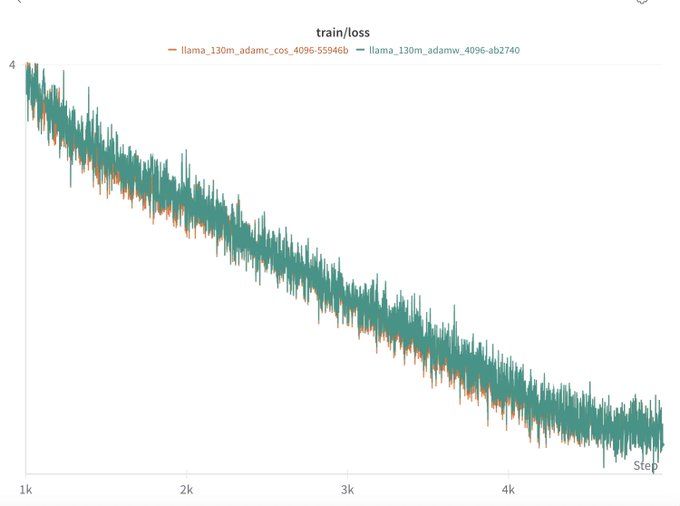

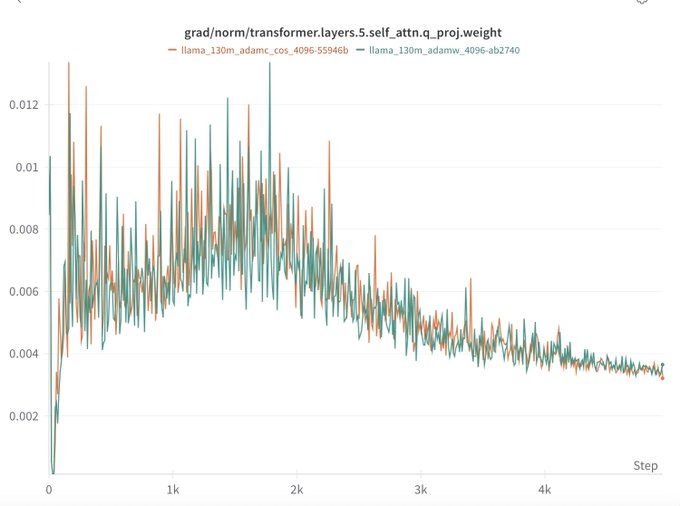

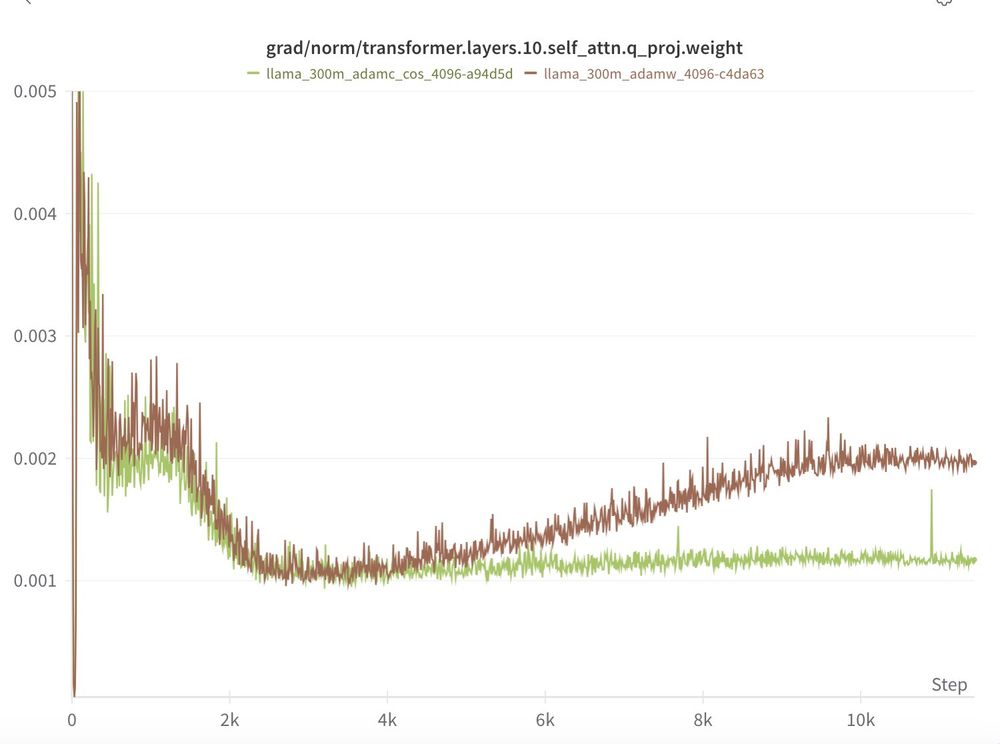

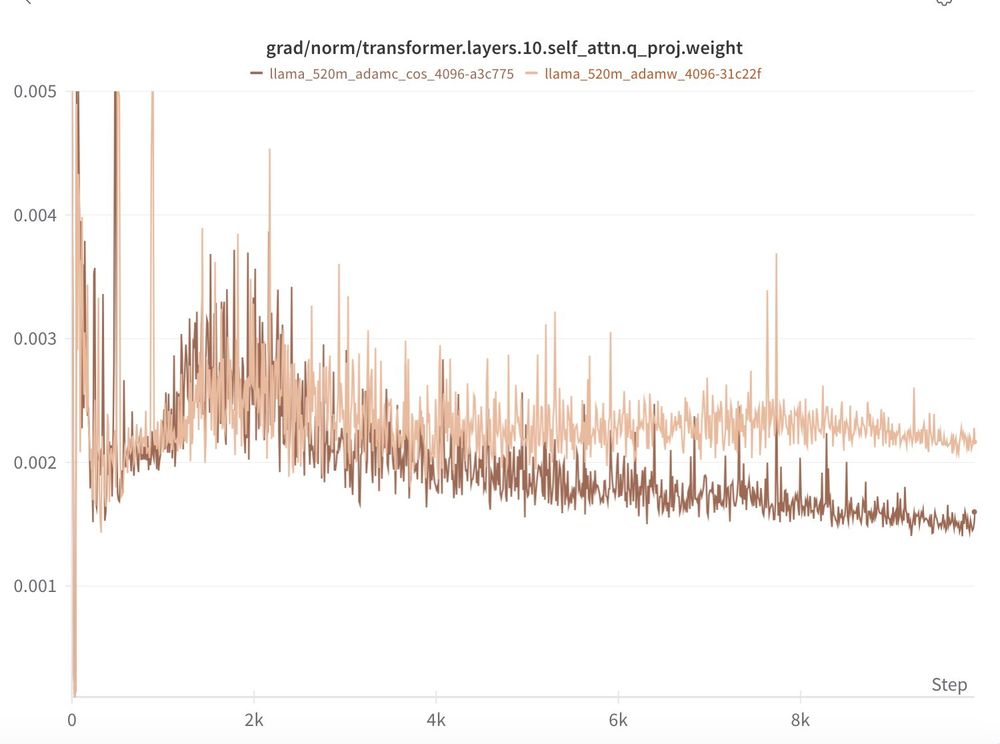

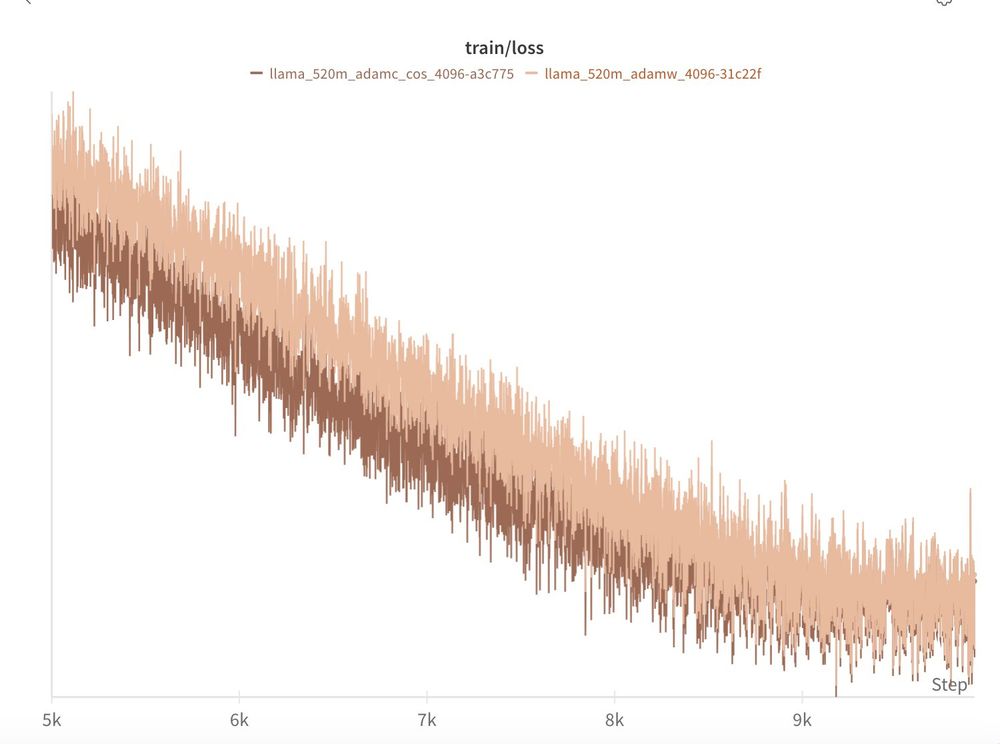

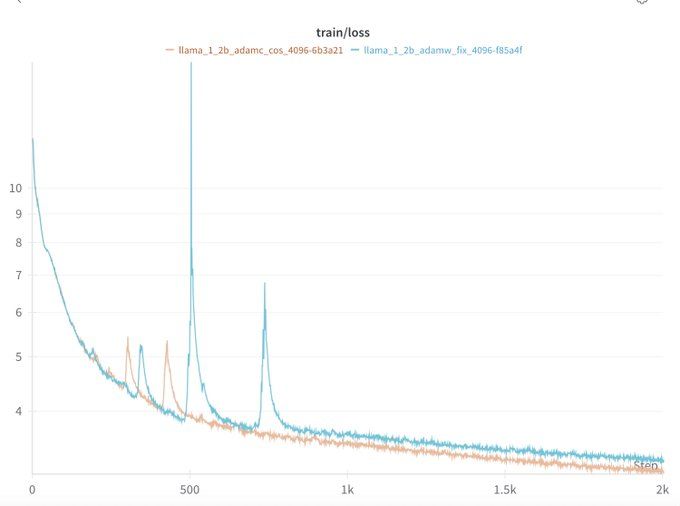

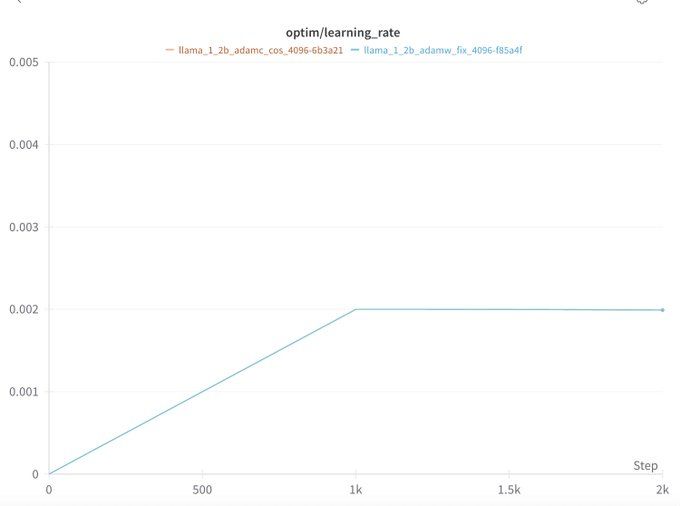

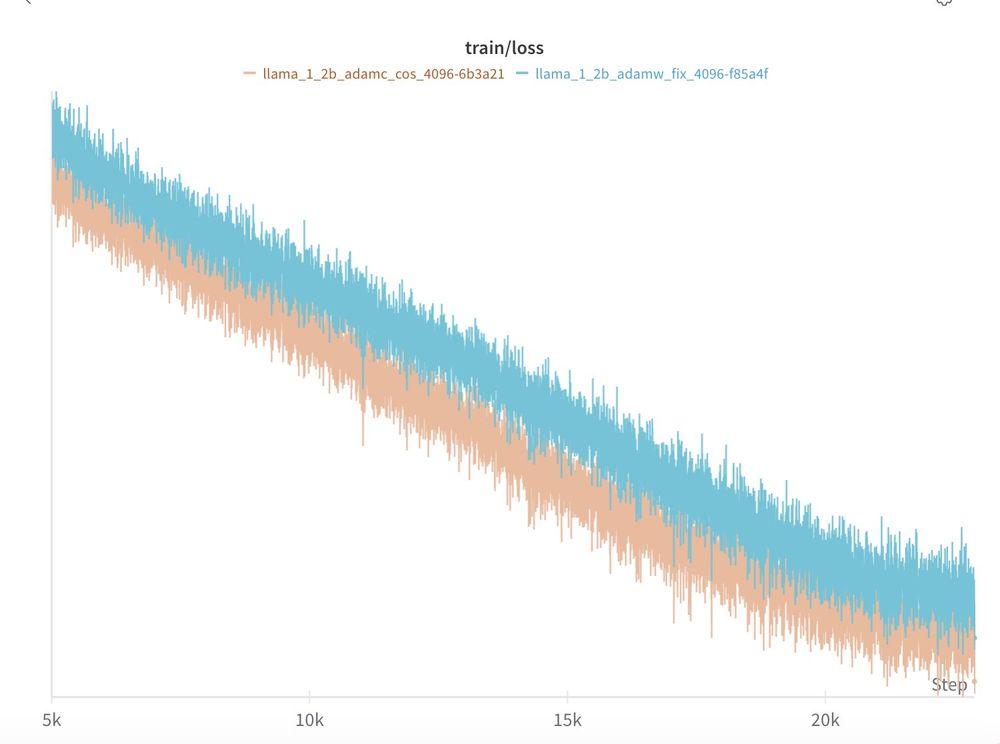

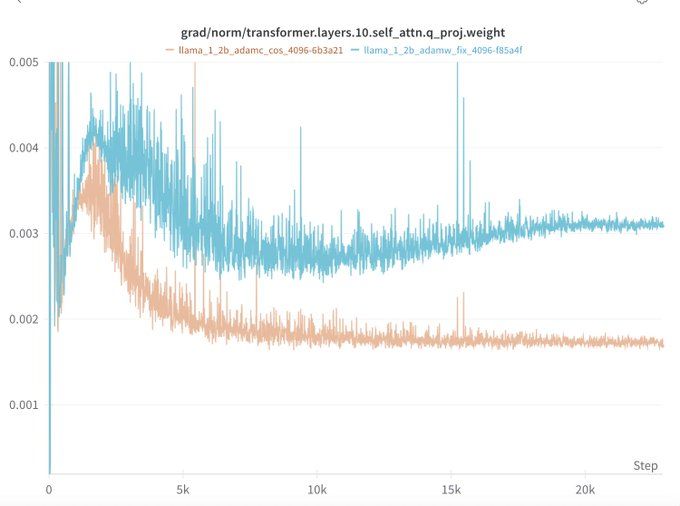

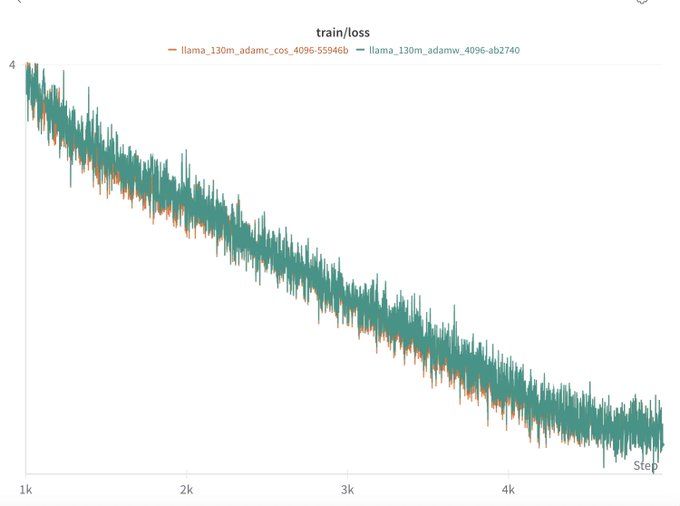

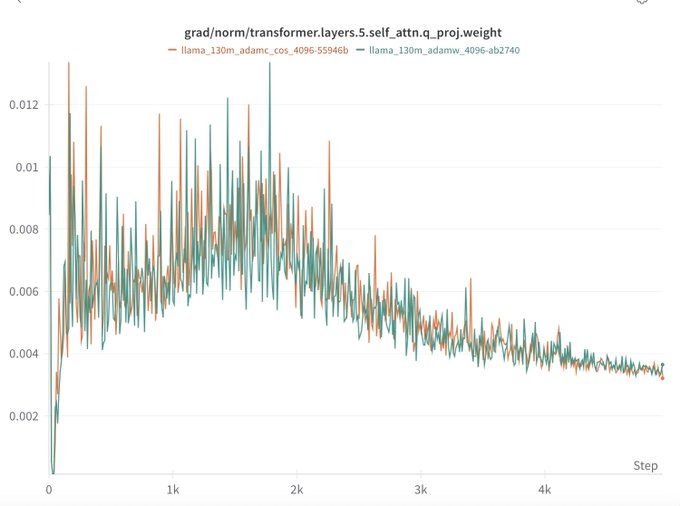

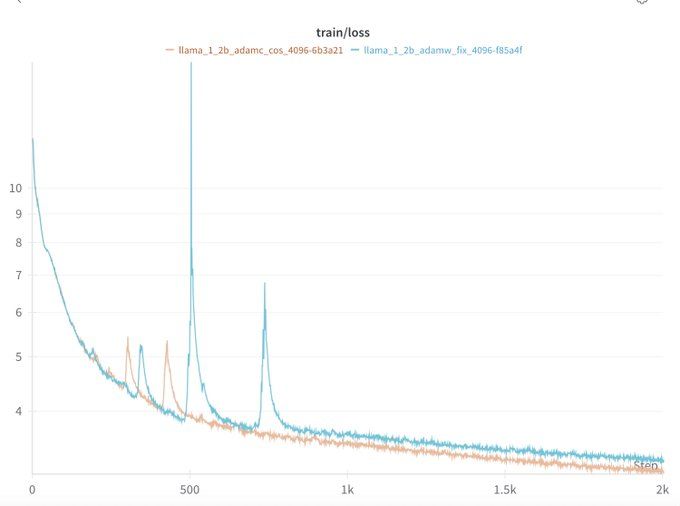

In our most similar setting to the original work (130M model), we don't see AdamC's benefits, but:

- We use a smaller WD (0.01), identified from sweeps, vs. what is used in the paper (0.05).

- We only train to Chinchilla optimal (2B tokens), whereas the original paper was at 200B.

03.07.2025 15:14 — 👍 0 🔁 0 💬 1 📌 0

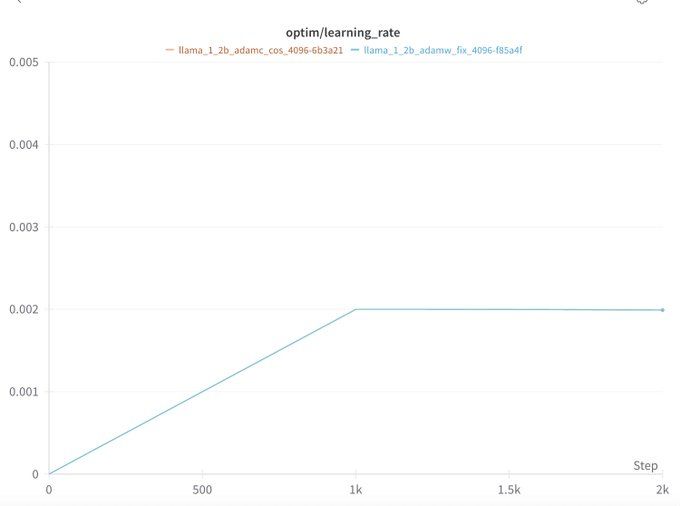

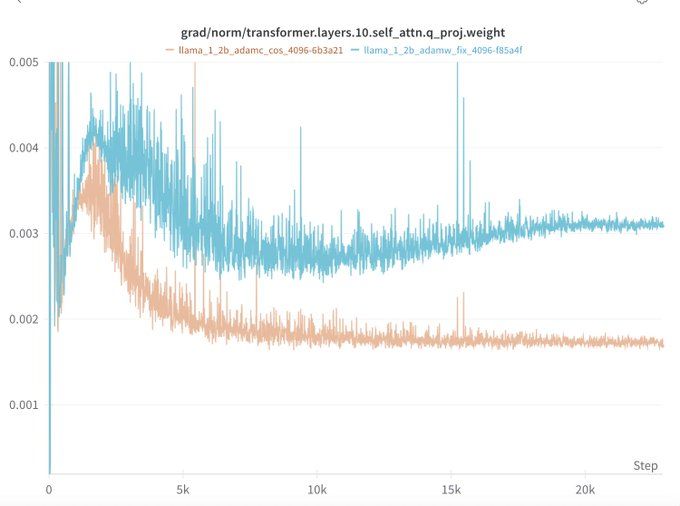

As a side note, Kaiyue Wen found that weight decay also causes slower loss decrease at the start of training in wandb.ai/marin-commun...

Similar to the end of training, this is likely because LR warmup also impacts the LR/WD ratio.

AdamC seems to mitigate this too.

03.07.2025 15:14 — 👍 0 🔁 0 💬 1 📌 0

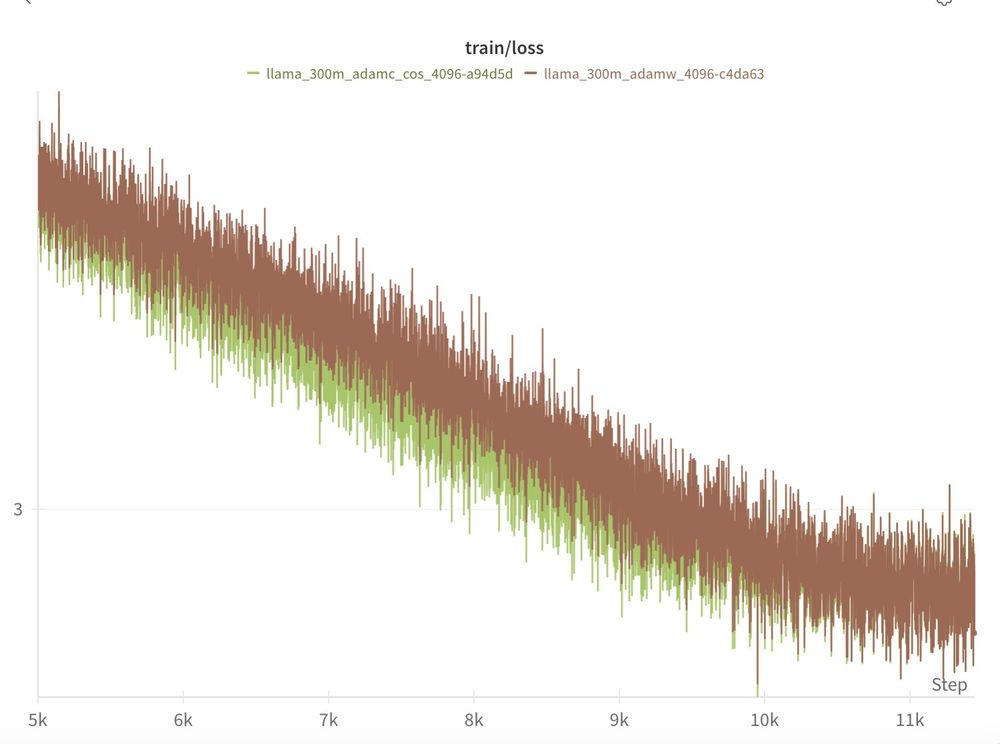

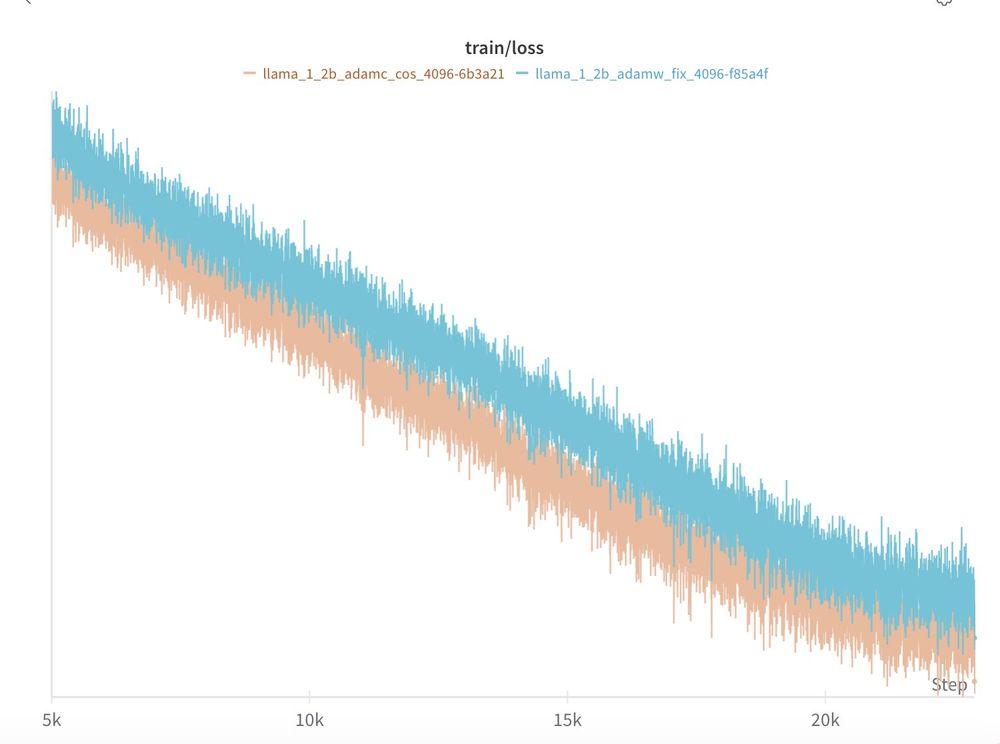

TL;DR: At 3 of our 4 scales, the AdamC results reproduce out of the box!

When compared to AdamW with all other factors held constant, AdamC mitigates the gradient increase at the end of training and leads to an overall lower loss (-0.04)!

03.07.2025 15:14 — 👍 0 🔁 0 💬 1 📌 0
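
A minimal sketch of why the schedule matters in this comparison. It assumes the AdamC-style correction amounts to scaling the weight decay coefficient in proportion to the current learning rate (`wd_t = wd * lr_t / peak_lr`); that is my reading of the method, not something stated in the thread. The schedule shape, hyperparameter values, and function names are all hypothetical.

```python
import math

def lr_at(step, total_steps, peak_lr=3e-3, min_lr=3e-4, warmup_steps=100):
    """Linear warmup, then cosine decay to min_lr (hypothetical schedule)."""
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / (total_steps - warmup_steps)
    return min_lr + 0.5 * (peak_lr - min_lr) * (1 + math.cos(math.pi * progress))

def fixed_wd(lr_t, peak_lr, wd):
    """AdamW-style: the weight decay coefficient ignores the schedule."""
    return wd

def scaled_wd(lr_t, peak_lr, wd):
    """Assumed AdamC-style correction: the coefficient tracks the learning rate."""
    return wd * lr_t / peak_lr

if __name__ == "__main__":
    total, peak, wd = 1000, 3e-3, 0.01
    for step in (0, 99, 500, 999):
        lr_t = lr_at(step, total, peak_lr=peak)
        # Columns: step, WD/LR with a fixed coefficient, WD/LR with the scaled one.
        print(step, fixed_wd(lr_t, peak, wd) / lr_t, scaled_wd(lr_t, peak, wd) / lr_t)
```

With a fixed coefficient, the ratio between weight decay and learning rate swings during both warmup and cooldown; with the scaled coefficient it stays constant across the whole schedule.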

A while ago I mentioned that for the marin.community project, this gradient increase led to problematic loss ascent which we patched with Z-loss.

I was curious, does AdamC just work?

So over the weekend, I ran 4 experiments—130M to 1.4B params—all at ~compute-optimal token counts...🧵

03.07.2025 15:14 — 👍 4 🔁 1 💬 1 📌 0

Unmute by Kyutai

Make LLMs listen and speak.

kyutai.org/next/unmute has built-in turn detection on the ASR and full I/O streaming for the TTS. Solves the latency issues that I think are 90% of why people use end-to-end speech models in the first place!

From the details, you can tell @kyutai-labs.bsky.social is focused on real-world utility.

03.07.2025 15:05 — 👍 2 🔁 0 💬 0 📌 0

Flattered and shocked for our paper to receive the #facct2025 best paper award.

21.06.2025 01:16 — 👍 11 🔁 3 💬 1 📌 0

As far as I can tell, the models aren't good enough right now that they can replace VFX at any high quality commercial scale.

They are exactly good enough to generate fake viral videos for ad revenue on TikTok/Instagram & spread misinformation. Is there any serious argument for their safe release??

17.06.2025 01:32 — 👍 0 🔁 0 💬 0 📌 0

I don't really see an argument for releasing such models with photorealistic generation capabilities.

What valid & frequent business use case is there for photorealistic video & voice generation like Veo 3 offers?

17.06.2025 01:25 — 👍 1 🔁 0 💬 1 📌 0

I've only seen Veo 3 (or any other video generation model) used to produce viral videos. The fake videos seem to successfully trick the majority of commenters and have no visible watermark or disclosure of AI use.

17.06.2025 01:24 — 👍 1 🔁 0 💬 1 📌 0

What would you say if you saw it in another country? A senator from a coequal branch of government dragged away by security from asking a question of a Cabinet official

12.06.2025 18:33 — 👍 490 🔁 143 💬 27 📌 6

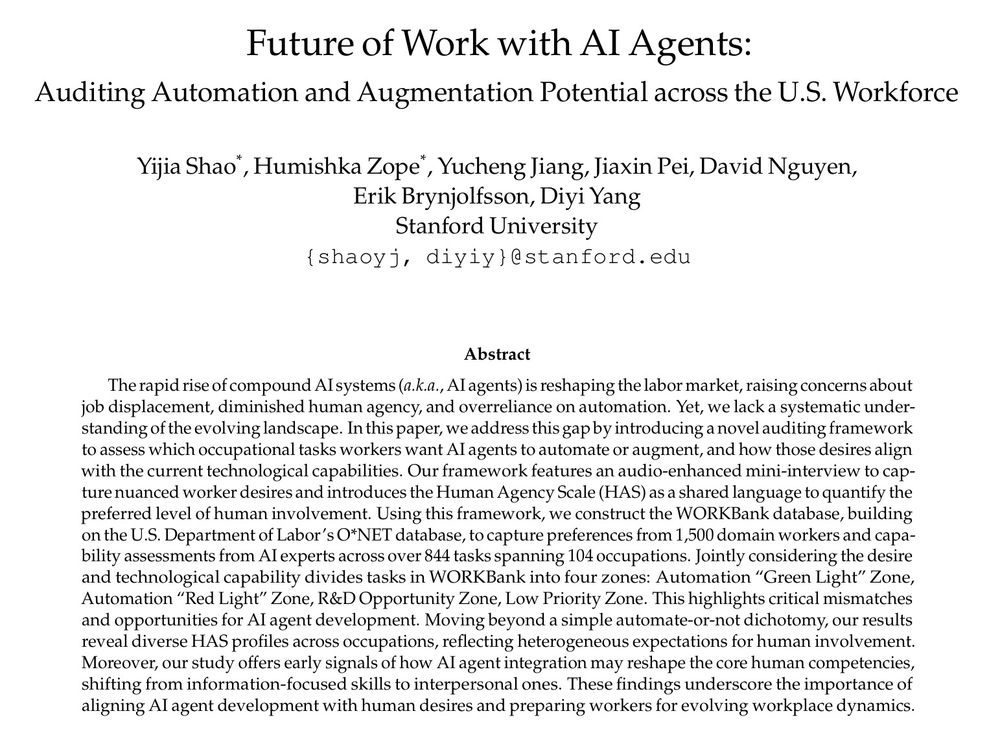

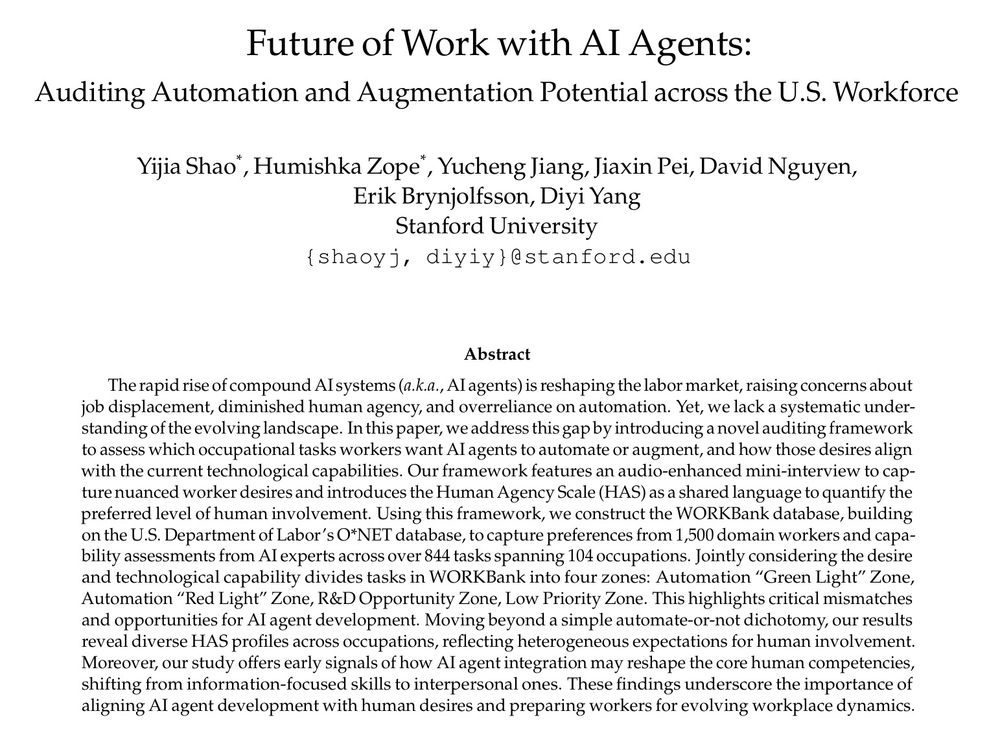

🚨 70 million US workers are about to face their biggest workplace transition due to AI agents. But nobody’s asking them what they want.

While AI R&D races to automate everything, we took a different approach: auditing what workers want vs. what AI can deliver across the US workforce.🧵

12.06.2025 16:33 — 👍 22 🔁 7 💬 1 📌 0

Really cool to see theory connect to practice! We observed this phenomenon when trying to do deeper WSD cooldowns of our 8B model in the marin.community project!

We Z-Lossed our way through the pain, but cool to see some stronger theory: marin.readthedocs.io/en/latest/re...

06.06.2025 01:27 — 👍 10 🔁 1 💬 0 📌 0

Now, I wouldn't do research on LLMs if I thought that was true in the long term!

But I think it's reasonable for skeptics to question whether advances in inference efficiency, hardware efficiency, and even core energy infrastructure will happen soon enough for current companies to capitalize.

05.06.2025 02:26 — 👍 0 🔁 0 💬 0 📌 0

The Subprime AI Crisis

None of what I write in this newsletter is about sowing doubt or "hating," but a sober evaluation of where we are today and where we may end up on the current path. I believe that the artificial intel...

The underlying assumption being that they can (a la Uber/Lyft) eventually increase prices once the core customers are fundamentally reliant on AI.

The real question then is "what is demand once you start charging the true unit costs?". Personally, I found this article sobering but well reasoned.

05.06.2025 02:12 — 👍 1 🔁 0 💬 1 📌 0

Without knowing all the model details or having transparent financials, it's hard to say, but I would naively suspect most AI companies are in the red both on a cost-per-query basis (for API services) and on a cost-per-user basis (for subscription services).

05.06.2025 02:09 — 👍 0 🔁 0 💬 1 📌 0

I haven't seen people mocking the revenue forecasts, but I agree with your take w.r.t. demand. The bigger question is whether demand is the constraint?

Unlike standard software or even manufacturing businesses, I'm not sure the economies of scale look great if you factor in cost per query.

05.06.2025 02:05 — 👍 1 🔁 0 💬 1 📌 0

Based on current administration policies, China is about to have an influx of returning talent and an accelerated advantage in research investments.

You need to be both sinophobic and irrational to expect the US to continue as the global scientific powerhouse with these policy own-goals.

02.06.2025 02:59 — 👍 3 🔁 0 💬 1 📌 0

Given that they published the same work in both the ICLR workshop and ACL... I am skeptical of the claim that "The current version of Zochi represents a substantial advancement over our earlier systems that published workshop papers at ICLR 2025" 😂

29.05.2025 04:11 — 👍 1 🔁 0 💬 1 📌 0

“From time-to-time instances will arise in which the society, or segments of it, threaten the very mission of the university & its values... In such a crisis, it becomes the obligation of the university as an institution to oppose such measures & actively to defend its interests and its values.”

25.05.2025 15:07 — 👍 280 🔁 73 💬 4 📌 0

https://kyutai.org/ Open-Science AI Research Lab based in Paris

Helena Vasconcelos' research account. Email helenav@cs.stanford.edu.

https://helenavasc.com

Professor of HCII and LTI at Carnegie Mellon School of Computer Science.

jeffreybigham.com

Assistant Professor at @cs.ubc.ca and @vectorinstitute.ai working on Natural Language Processing. Book: https://lostinautomatictranslation.com/

CS PhD student at UT Austin in #NLP

Interested in language, reasoning, semantics and cognitive science. One day we'll have more efficient, interpretable and robust models!

Other interests: math, philosophy, cinema

https://www.juandiego-rodriguez.com/

Civil rights lawyer; democracy warrior, dedicated to racial justice & equality. Fmr President & Director-Counsel, NAACP Legal Defense & Educational Fund, Inc.

https://najoung.kim

language

phd candidate at stanford

We're an AI safety and research company that builds reliable, interpretable, and steerable AI systems. Talk to our AI assistant Claude at Claude.ai.

Stanford Professor of Linguistics and, by courtesy, of Computer Science, and member of @stanfordnlp.bsky.social and The Stanford AI Lab. He/Him/His. https://web.stanford.edu/~cgpotts/

Faculty fellow at NYU CDS. Previously: PhD @ BIU NLP.

So far I have not found the science, but the numbers keep on circling me.

Views my own, unfortunately.

PhD student @mainlp.bsky.social (@cislmu.bsky.social, LMU Munich). Interested in language variation & change, currently working on NLP for dialects and low-resource languages.

verenablaschke.github.io

AmericasNLP 2025 will be co-located with NAACL in Albuquerque, USA. We’re looking forward to seeing you all there! 🌎

turing.iimas.unam.mx/americasnlp/

Applied Scientist @ Amazon

(Posts are my own opinion)

#NLP #AI #ML

I am especially interested in making our NLP advances accessible to the Indigenous languages of the Americas

Assistant professor of CS at UC Berkeley, core faculty in Computational Precision Health. Developing ML methods to study health and inequality. "On the whole, though, I take the side of amazement."

https://people.eecs.berkeley.edu/~emmapierson/

Pre-doc @ai2.bsky.social

davidheineman.com

NYT bestselling author of EMPIRE OF AI: empireofai.com. ai reporter. national magazine award & american humanist media award winner. words in The Atlantic. formerly WSJ, MIT Tech Review, KSJ@MIT. email: http://karendhao.com/contact.