Amazing work by @mxeddie.bsky.social

04.08.2025 09:44 — 👍 0 🔁 0 💬 0 📌 0

@bsavoldi.bsky.social taking us back in time at #GITT2025 ⌚⏳ focusing on the first discussions of gender bias in language technology as a socio-technical issue. No, the problem hasn't been fixed yet. But what has happened?

23.06.2025 07:22 — 👍 7 🔁 3 💬 6 📌 0

Qualtrics Survey | Qualtrics Experience Management

Survey of researchers in academia, industry and third sector who deploy LLMs as part of their work (e.g. because they evaluate LLMs, finetune LLMs, use LLMs to summarise data etc).

I've created a survey on ethics resources. Anyone in the UK who does research with LLMs, in academia or industry, whether building models or using them as tools, is eligible. It takes 10 minutes, and there's a chance to win a £100 voucher.

Survey: lnkd.in/eFRsqpT2

(V1 of the guide is here arxiv.org/abs/2410.19812)

23.05.2025 15:25 — 👍 4 🔁 5 💬 0 📌 0

My latest paper "A decade of gender bias in machine translation" with @bsavoldi.bsky.social @luisabentivogli.bsky.social and Eva Vanmassenhove is out. 😀↘️ #NLProc #NLP #MT

02.05.2025 18:33 — 👍 50 🔁 14 💬 1 📌 1

@evavnmssnhv.bsky.social

02.05.2025 18:34 — 👍 1 🔁 0 💬 0 📌 0

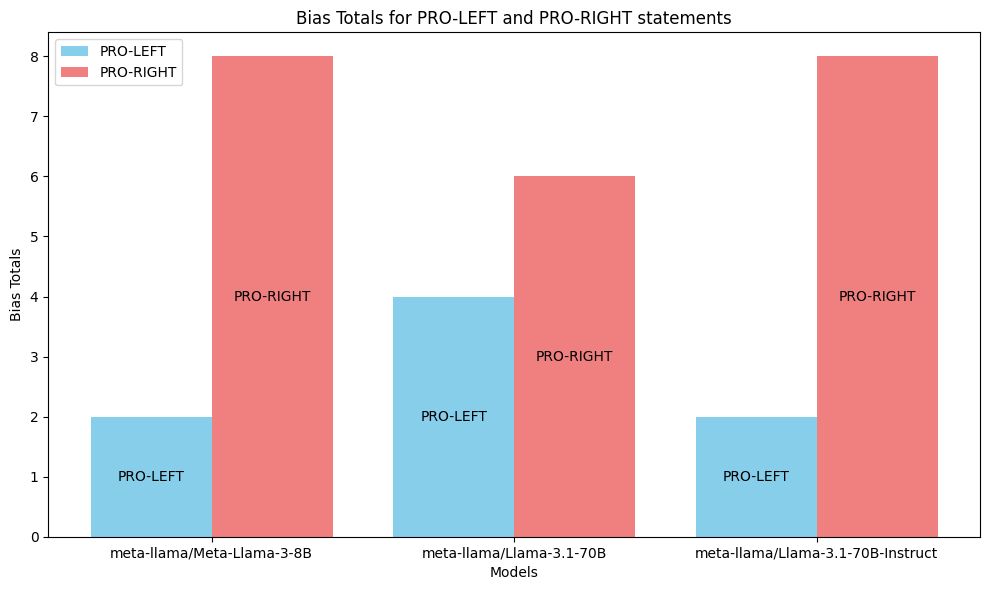

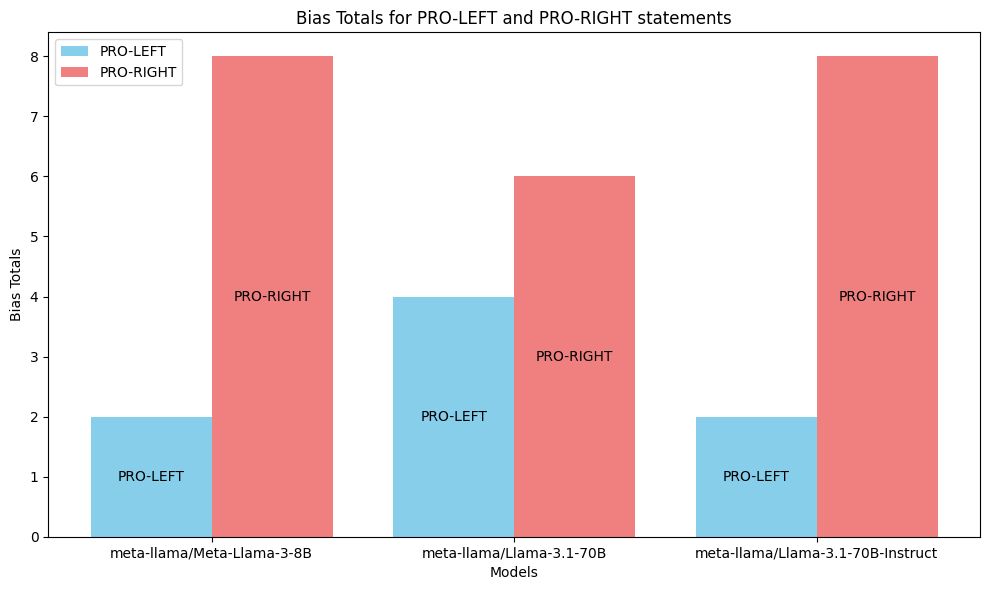

Bar chart showing meta-llama/Meta-Llama-3-8B, meta-llama/Llama-3.1-70B, and meta-llama/Llama-3.1-70B-Instruct. Each model has two bars, a blue one labeled PRO-LEFT and a red one labeled PRO-RIGHT. The PRO-RIGHT bar is higher than the PRO-LEFT bar for every model.

👉 👈 Meta announced that they're changing their models to reduce "left-leaning [political] bias", which means leaning them toward the political "right". Lots to unpack about what that might mean. So I ran a quick "shot in the dark" study...and found a *political right* bias in Meta models. Some notes.🧵

22.04.2025 00:39 — 👍 711 🔁 276 💬 28 📌 37

This is a really good piece on why the UK supreme court ruling is so problematic.

22.04.2025 12:55 — 👍 1 🔁 0 💬 0 📌 0

Reserved topic scholarships | Doctoral Program - Information Engineering and Computer Science

📢 Come and join our group!

We offer a fully funded 3-year PhD position:

📔 Automatic translation with large multimodal models: iecs.unitn.it/education/ad...

📍Full details for application: iecs.unitn.it/education/ad...

📅 Deadline May 12, 2025

#NLProc #FBK

22.04.2025 10:14 — 👍 10 🔁 10 💬 1 📌 0

On Fake Hannah Arendt Quotations

This article discusses a quote misattributed to Hannah Arendt that is circulating on social media, exploring why such alterations occur and their potential consequences. Berkowitz argues that while simplifie...

I heard another fake Hannah Arendt quote on the radio. Since we might hear more of her in these times as people try to make sense of what is going on, here is a nice piece on what she actually said about lies and people no longer believing anything: hac.bard.edu/amor-mundi/o...

12.01.2025 10:57 — 👍 8 🔁 0 💬 2 📌 0

My heartfelt condolences to everyone who knew and loved Felix. He was such a bright thinker. Such a loss.

03.01.2025 22:03 — 👍 3 🔁 0 💬 0 📌 0

We scaled training data attribution (TDA) methods ~1000x to find influential pretraining examples for thousands of queries in an 8B-parameter LLM over the entire 160B-token C4 corpus!

medium.com/people-ai-re...

13.12.2024 18:57 — 👍 36 🔁 8 💬 2 📌 5

Screenshot of the paper.

Even as an interpretable ML researcher, I wasn't sure what to make of Mechanistic Interpretability, which seemed to come out of nowhere not too long ago.

But then I found the paper "Mechanistic?" by @nsaphra.bsky.social and @sarah-nlp.bsky.social, which clarified things.

20.11.2024 08:00 — 👍 232 🔁 28 💬 8 📌 2

How do LLMs learn to reason from data? Are they ~retrieving the answers from parametric knowledge🦜? In our new preprint, we look at the pretraining data and find evidence against this:

Procedural knowledge in pretraining drives LLM reasoning ⚙️🔢

🧵⬇️

20.11.2024 16:31 — 👍 861 🔁 141 💬 38 📌 24

#PhD researcher at #ANU Cybernetics, investigating bias in #speech and #voice #tech, with a focus on #data used for #ML. Contracts at @mozilla.org #CommonVoice

Ex-NVIDIA, Mycroft AI, @linuxaustralia.bsky.social, Deakin Uni.

https://linktr.ee/kathyreid

PhD student in NLP @ UZH | Researching online harms, hate speech & related topics | https://jagol.github.io/

PhD student in NLP at the University of Edinburgh, working on online abuse detection 👩🏻‍💻 | ex Intern @MetaAI @Snap | Intersectional feminist 🌻 | (she/her)

Current: Co-Executive Director @AINowInstitute | Former: Senior Advisor on AI @FTC | She/her

associate director of ainowinstitute.org

Reader in Computational Social Science at the University of Edinburgh. he/him

PhD student at @tuda.bsky.social | Computational Literary Studies | Comparative Literature

Sentence processing modeling | Computational psycholinguistics | 1st year PhD student at LLF, CNRS, Université Paris Cité | Currently visiting COLT, Universitat Pompeu Fabra, Barcelona, Spain

https://ninanusb.github.io/

Host of Local News International

Subscribe for free: http://LNI.media

Support us: http://LNI.media/membership

PhD candidate @ University of Amsterdam

evgeniia.tokarch.uk

PhD candidate - Centre for Cognitive Science at TU Darmstadt | explanations for AI, sequential decision-making, problem solving

PhD candidate @Technion | NLP

Assistant Professor at @cs.ubc.ca and @vectorinstitute.ai working on Natural Language Processing. Book: https://lostinautomatictranslation.com/

Associate Professor and Computational Linguist @ University of Augsburg, Germany

I do philosophy for a living at the Institute for Information Law (UvA) and research online manipulation.

Investigative journalist, posting in a personal capacity | A Groninger in Amsterdam, or is it an Amsterdammer in Groningen?

Assistant professor of computer science at Technion

https://belinkov.com/

The largest workshop on analysing and interpreting neural networks for NLP.

BlackboxNLP will be held at EMNLP 2025 in Suzhou, China

blackboxnlp.github.io

Deputy Tech Editor for Business Insider