🤔What happens when LLM agents must choose between achieving their goals and avoiding harm to humans in realistic management scenarios? Are LLMs pragmatic, or do they prefer to avoid harming humans?

🚀 New paper out: ManagerBench: Evaluating the Safety-Pragmatism Trade-off in Autonomous LLMs🚀🧵

08.10.2025 15:14 — 👍 8 🔁 2 💬 1 📌 2

Reach out if you'd like to discuss anything related to model interpretability and controllability, robustness, multi-agent communication, biological LMs, etc.

Also happy to talk about PhD and Post-doc opportunities!

07.10.2025 13:40 — 👍 2 🔁 0 💬 0 📌 0

At the #Interplay25 workshop on Friday at ~11:30, I'll give a talk on measuring *parametric* CoT faithfulness on behalf of @mtutek.bsky.social, who couldn't travel:

bsky.app/profile/mtut...

Later that day, we'll have a poster on predicting the success of model editing by Yanay Soker, who also couldn't travel.

07.10.2025 13:40 — 👍 4 🔁 1 💬 1 📌 1

@zorikgekhman.bsky.social will present a poster on hidden factual knowledge in LMs on Wednesday.

bsky.app/profile/zori...

07.10.2025 13:40 — 👍 2 🔁 0 💬 1 📌 0

@itay-itzhak.bsky.social is presenting a spotlight talk and a poster on the origin of cognitive biases in LLMs this morning.

bsky.app/profile/itay...

07.10.2025 13:40 — 👍 3 🔁 0 💬 1 📌 0

Traveling to #COLM2025 this week, and here's some work from our group and collaborators:

Cognitive biases, hidden knowledge, CoT faithfulness, model editing, and LM4Science

See the thread for details and reach out if you'd like to discuss more!

07.10.2025 13:40 — 👍 6 🔁 1 💬 1 📌 0

What's the right unit of analysis for understanding LLM internals? We explore this question in our mech interp survey (a major update of our 2024 manuscript).

We’ve added more recent work and more immediately actionable directions for future work. Now published in Computational Linguistics!

01.10.2025 14:03 — 👍 40 🔁 14 💬 2 📌 2

Opportunities to join my group in fall 2026:

* PhD applications direct or via ELLIS @ellis.eu (ellis.eu/news/ellis-p...)

* Post-doc applications direct or via Azrieli (azrielifoundation.org/fellows/inte...) or Zuckerman (zuckermanstem.org/ourprograms/...)

01.10.2025 13:44 — 👍 3 🔁 1 💬 0 📌 0

Excited to join @KempnerInst this year!

Get in touch if you're in the Boston area and want to chat about anything related to AI interpretability, robustness, interventions, safety, multi-modality, protein/DNA LMs, new architectures, multi-agent communication, or anything else you're excited about!

22.09.2025 18:41 — 👍 2 🔁 0 💬 0 📌 0

Thrilled that FUR was accepted to @emnlpmeeting.bsky.social Main🎉

In case you can’t wait that long to hear about it in person, it will also be presented as an oral at @interplay-workshop.bsky.social at @colmweb.org 🥳

FUR is a parametric test assessing whether CoTs faithfully verbalize latent reasoning.

21.08.2025 15:21 — 👍 13 🔁 3 💬 1 📌 1

BlackboxNLP is the workshop on interpreting and analyzing NLP models (including LLMs, VLMs, etc). We accept full (archival) papers and extended abstracts.

The workshop is highly attended and offers great exposure for finished work, as well as feedback on work in progress.

#emnlp2025 in Suzhou, China!

12.08.2025 19:16 — 👍 5 🔁 1 💬 0 📌 0

Join our Discord for discussions and a bunch of simple submission ideas you can try!

discord.gg/n5uwjQcxPR

Participants will have the option to write a system description paper that gets published.

13.07.2025 17:44 — 👍 2 🔁 0 💬 0 📌 0

Have you started working on your submission for the MIB shared task yet? Tell us what you’re exploring!

New featurization methods?

Circuit pruning?

Better feature attribution?

We'd love to hear about it 👇

09.07.2025 07:15 — 👍 2 🔁 1 💬 0 📌 1

Working on feature attribution, circuit discovery, feature alignment, or sparse coding?

Consider submitting your work to the MIB Shared Task, part of this year’s #BlackboxNLP

We welcome submissions of both existing methods and new or experimental POCs!

08.07.2025 09:35 — 👍 5 🔁 3 💬 1 📌 0

VLMs perform better when answering questions about text than when answering the same questions about images. But why? And how can we fix it?

In a new project led by Yaniv (@YNikankin on the other app), we investigate this gap from a mechanistic perspective and use our findings to close a third of it! 🧵

26.06.2025 10:40 — 👍 6 🔁 4 💬 1 📌 0

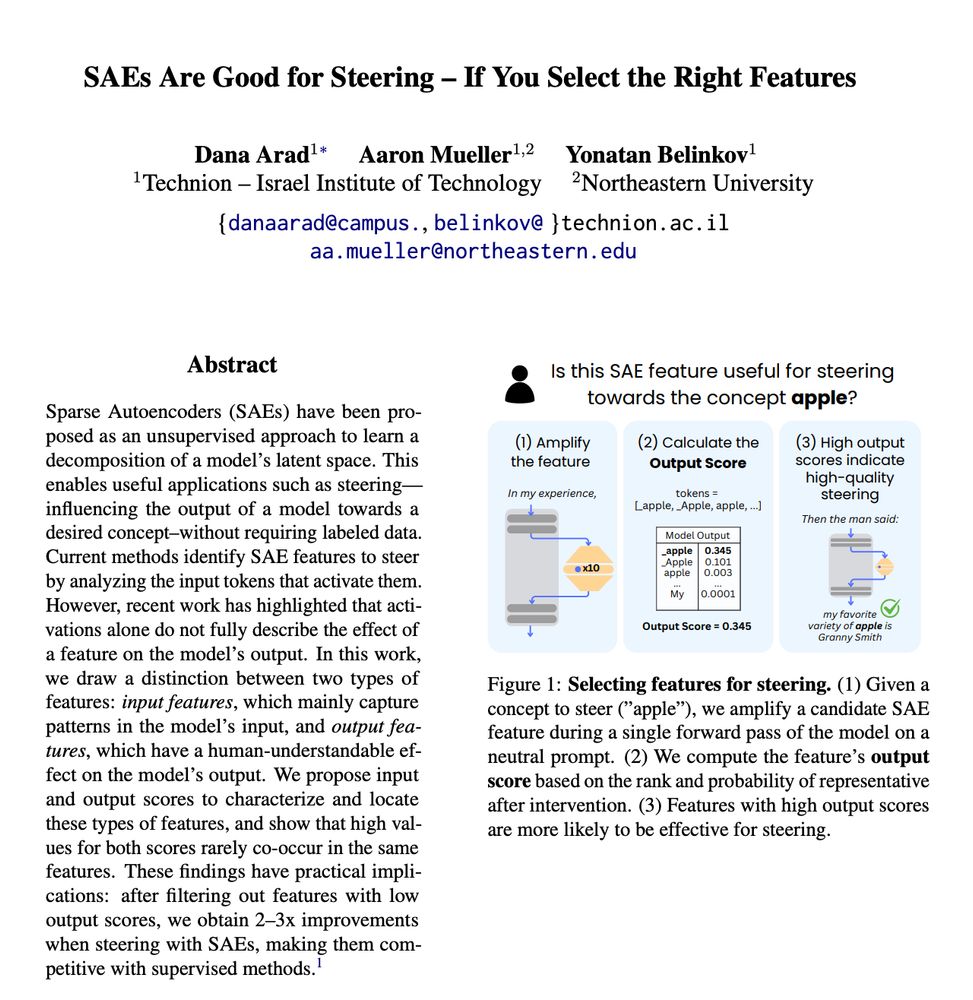

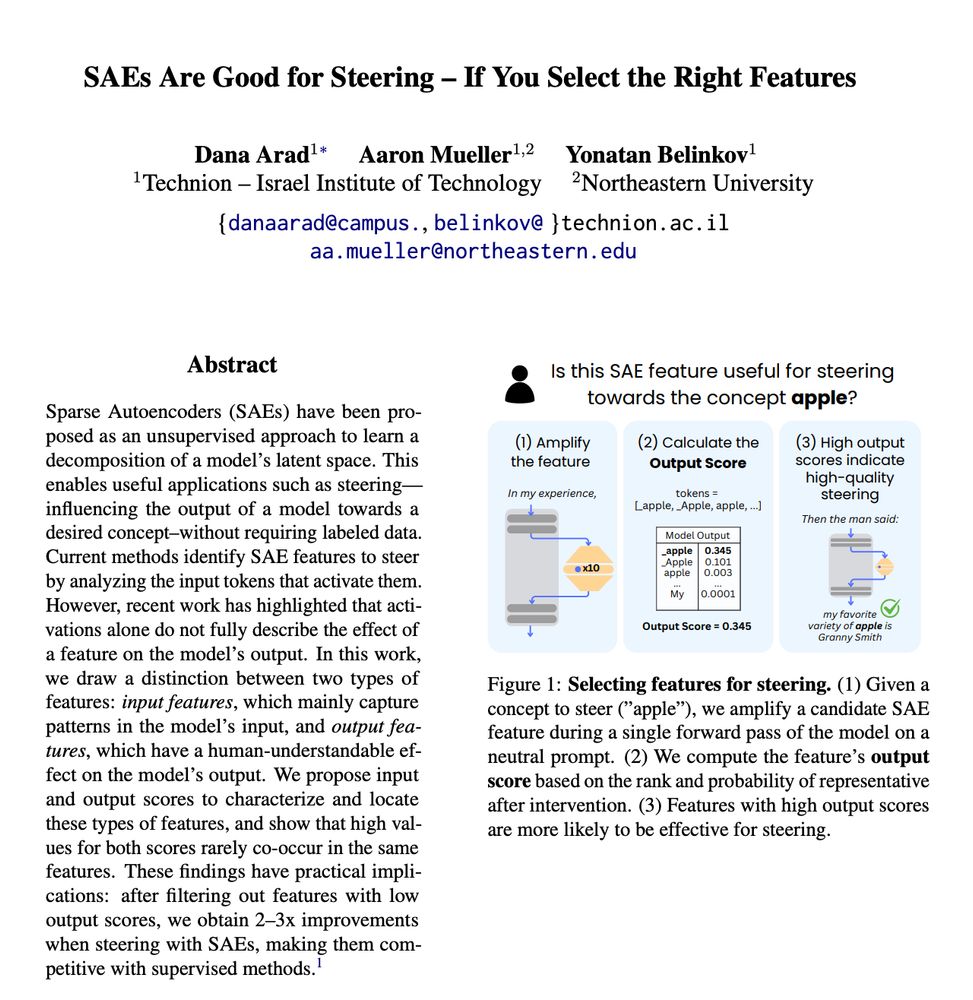

Tried steering with SAEs and found that not all features behave as expected?

Check out our new preprint - "SAEs Are Good for Steering - If You Select the Right Features" 🧵

27.05.2025 16:06 — 👍 17 🔁 6 💬 2 📌 3

🚨New paper at #ACL2025 Findings!

REVS: Unlearning Sensitive Information in LMs via Rank Editing in the Vocabulary Space.

LMs memorize and leak sensitive data from their training sets: emails, SSNs, URLs.

We propose a surgical method to unlearn it.

🧵👇w/ @boknilev.bsky.social @mtutek.bsky.social

1/8

27.05.2025 08:19 — 👍 6 🔁 2 💬 1 📌 1

Interested in mechanistic interpretability and care about evaluation? Please consider submitting to our shared task at #blackboxNLP this year!

15.05.2025 09:57 — 👍 5 🔁 1 💬 0 📌 0

Slides available here: docs.google.com/presentation...

04.05.2025 18:02 — 👍 25 🔁 5 💬 1 📌 0

I think a scalable open-source implementation would have many uses! Let’s say I can’t run on all the pretraining data because of cost, so I run on a subset and get influential examples. What would that tell me about what I’m missing?

25.04.2025 11:59 — 👍 1 🔁 0 💬 1 📌 0

Looks great! What would it take to run this on another model and dataset?

25.04.2025 00:31 — 👍 1 🔁 0 💬 1 📌 0

This has been a huge team effort with many talented contributors. Very thankful for everyone’s contributions!

See the list here:

bsky.app/profile/amuu...

24.04.2025 02:20 — 👍 1 🔁 0 💬 0 📌 0

Excited about the release of MIB, a mechanistic interpretability benchmark!

Come talk to us at #iclr2025 and consider submitting to the leaderboard.

We’re also planning a shared task around it at #blackboxNLP this year, co-located with #emnlp2025.

24.04.2025 02:20 — 👍 4 🔁 0 💬 1 📌 0

MIB – Project Page

We release many public resources, including:

🌐 Website: mib-bench.github.io

📄 Data: huggingface.co/collections/...

💻 Code: github.com/aaronmueller...

📊 Leaderboard: Coming very soon!

23.04.2025 18:15 — 👍 3 🔁 1 💬 1 📌 0

Hope to see you there!

14.04.2025 18:34 — 👍 1 🔁 0 💬 0 📌 0

1/13 LLM circuits tell us where the computation happens inside the model, but the computation varies by token position, a key detail that is often ignored!

We propose a method to automatically find position-aware circuits, improving faithfulness while keeping circuits compact. 🧵👇

06.03.2025 22:15 — 👍 25 🔁 7 💬 1 📌 1

Wondering about the mech interp passage where you say cognitive science can offer normative theories for ToM. Why is it important for a model to match a specific cog sci theory before we can say that it has ToM? Couldn't it implement a different mechanism for ToM than people do?

06.03.2025 19:05 — 👍 1 🔁 0 💬 0 📌 0

Cornell Tech professor (information science, AI-mediated Communication, trustworthiness of our information ecosystem). New York City. Taller in person. Opinions my own.

NLP PhD @ USC

Previously at AI2, Harvard

mattf1n.github.io

The 2025 Conference on Language Modeling will take place at the Palais des Congrès in Montreal, Canada from October 7-10, 2025

Postdoc @vectorinstitute.ai | organizer @queerinai.com | previously MIT, CMU LTI | 🐀 rodent enthusiast | she/they

🌐 https://ryskina.github.io/

The Kempner Institute for the Study of Natural and Artificial Intelligence at Harvard University.

The largest workshop on analysing and interpreting neural networks for NLP.

BlackboxNLP will be held at EMNLP 2025 in Suzhou, China

blackboxnlp.github.io

PhD Student at Northeastern, working to make LLMs interpretable

The NLP group at Technion's CS department. @boknilev.bsky.social

ML researcher, building interpretable models at Guide Labs (guidelabs.bsky.social).

NLP. NMT. Main author of Marian NMT. Research Scientist at Microsoft Translator.

https://marian-nmt.github.io

Prof. https://ehu.eus Head of HiTZ research center @hitz-zentroa.bsky.social, Spanish Informatics Research Prize 2021. ACL fellow @aclmeeting.bsky.social. Elected member https://jakiunde.eus

I post in Basque, Spanish and English

https://hitz.eus/eneko

👩‍💻 Postdoc @ Technion, interested in Interpretability in IR 🔎 and NLP 💬

NLP | Interpretability | PhD student at the Technion

Typography & Typology, Exploring the convergence of Arabic & Hebrew. Developing an iOS Keyboard for mixed writing - join the beta!

https://forms.gle/wuTQs1ksnPMNPzBX8

Associate Professor, Department of Psychology, Harvard University. Computation, cognition, development.

Postdoc @ TakeLab, UniZG | previously: Technion; TU Darmstadt | PhD @ TakeLab, UniZG

Faithful explainability, controllability & safety of LLMs.

🔎 On the academic job market 🔎

https://mttk.github.io/

Assistant professor at the Hebrew University.

Let's build AIs we can trust!

NLProc, deep learning, and machine learning. Ph.D. student @ Technion and The Hebrew University.

https://itay1itzhak.github.io/

NLProc and machine learning. Ph.D. student at Technion