Congrats to Pingjun, @beiduo.bsky.social, Siyao, Marie, and @barbaraplank.bsky.social for receiving the SAC Highlights award!

13.11.2025 18:02 — 👍 5 🔁 1 💬 0 📌 0

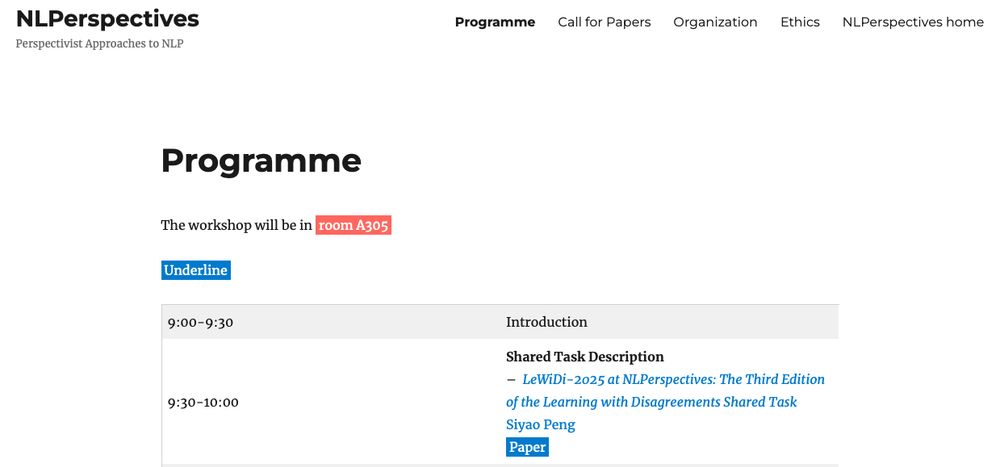

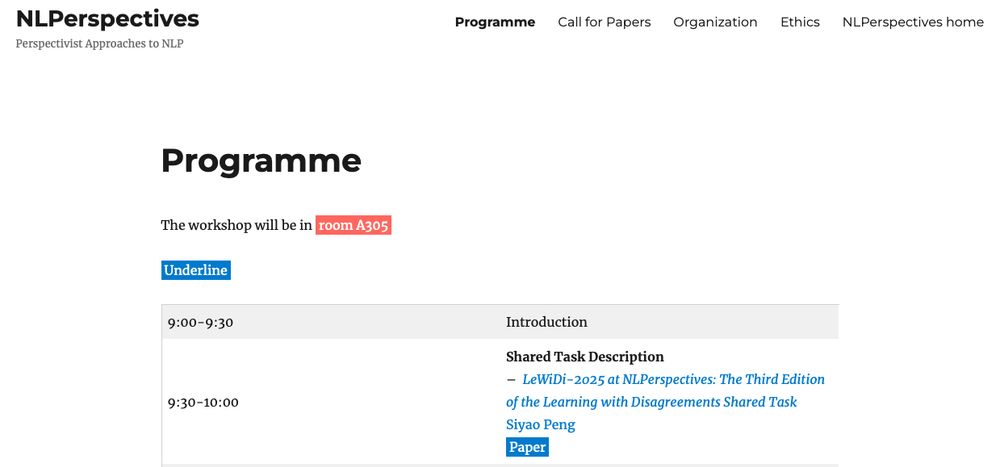

Detailed programme now up on website. Looking forward to 14 research papers, results of the 3rd Shared Task on Learning with Disagreements (LeWiDi), a talk from @camachocollados.bsky.social, and a panel discussion feat. Jose, Eve Fleisig, and @beiduo.bsky.social. See you in Room A305 or online!

06.11.2025 19:00 — 👍 2 🔁 1 💬 0 📌 2

Our paper: arxiv.org/pdf/2505.23368

Our code: github.com/mainlp/CoT2EL

Thank you to my wonderful co-authors, @janetlauyeung.bsky.social, Anna Korhonen, and @barbaraplank.bsky.social. Thanks also to @mainlp.bsky.social, @cislmu.bsky.social, and @munichcenterml.bsky.social.

See you in Suzhou!

#NLP #EMNLP2025

24.10.2025 13:42 — 👍 3 🔁 0 💬 0 📌 0

Matching exact probabilities for human label variation (HLV) is unstable, so we propose a more robust rank-based evaluation that checks preference order. Our combined method outperforms baselines on three datasets that exhibit HLV, showing that it aligns better with diverse human perspectives.
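
To make the idea of checking preference order concrete, here is a minimal sketch. It is not the metric from the paper: the function name `pairwise_order_agreement` and the toy distributions are assumptions made up for illustration. It only shows how two label distributions can agree on ranking while differing in exact probabilities.

```python
from itertools import combinations


def pairwise_order_agreement(human, model):
    """Fraction of label pairs that both distributions order the same way.

    Hypothetical helper for illustration; not the paper's evaluation.
    Both arguments map each label to a probability.
    """
    pairs = list(combinations(sorted(human), 2))
    agree = 0
    for a, b in pairs:
        # Sign of the difference: 1 if a is preferred, -1 if b is, 0 if tied.
        h = (human[a] > human[b]) - (human[a] < human[b])
        m = (model[a] > model[b]) - (model[a] < model[b])
        agree += h == m
    return agree / len(pairs)


# Toy NLI distributions (invented numbers).
human = {"entailment": 0.6, "neutral": 0.3, "contradiction": 0.1}
model_a = {"entailment": 0.8, "neutral": 0.15, "contradiction": 0.05}
model_b = {"entailment": 0.3, "neutral": 0.6, "contradiction": 0.1}

score_a = pairwise_order_agreement(human, model_a)  # same ranking as humans
score_b = pairwise_order_agreement(human, model_b)  # top two labels swapped
```

In this toy case `model_a` gets a perfect score although none of its probabilities match the human ones, while `model_b` is penalised for swapping the two most preferred labels.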

24.10.2025 13:37 — 👍 0 🔁 0 💬 1 📌 0

Instead of unnatural post-hoc explanations, we look forward. A model's CoT already contains rationales for all options. We introduce CoT2EL, a pipeline that uses linguistic discourse segmenters to extract these high-quality, faithful units to explore human label variation.

24.10.2025 13:37 — 👍 0 🔁 0 💬 1 📌 0

📑 Our CoT2EL paper will be presented as an oral at #EMNLP2025 in Suzhou!

Humans often disagree on labels. Can a model's own reasoning (CoT) help us understand why? We developed a new method to extract these insights. Come join us!

🗓️ Friday, Nov 7, 14:00 - 15:30

📍 Room: A110

24.10.2025 13:36 — 👍 8 🔁 2 💬 1 📌 0

🧠 What’s this about?

Human annotations often disagree. Instead of collapsing disagreement into a single label, we model Human Judgment Distributions — how likely humans are to choose each label in NLI tasks.

Capturing this is crucial for interpretability and uncertainty in NLP.
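
As a rough illustration of what a human judgment distribution looks like, the sketch below turns raw annotator labels for one NLI item into label probabilities instead of a single majority label. The helper name `judgment_distribution` and the example votes are assumptions for illustration, not code or data from the paper.

```python
from collections import Counter

NLI_LABELS = ("entailment", "neutral", "contradiction")


def judgment_distribution(annotations, labels=NLI_LABELS):
    """Normalise annotator votes into a probability for each label.

    Hypothetical helper for illustration only.
    """
    counts = Counter(annotations)
    total = sum(counts[label] for label in labels)
    if total == 0:
        raise ValueError("no annotations for the given labels")
    return {label: counts[label] / total for label in labels}


# Ten invented annotator votes for a single premise-hypothesis pair.
votes = ["entailment"] * 6 + ["neutral"] * 3 + ["contradiction"]
dist = judgment_distribution(votes)

# A majority vote would keep only this label and discard the disagreement.
majority = max(dist, key=dist.get)
```

Keeping `dist` rather than `majority` preserves the information that four of the ten annotators chose a different label.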

15.07.2025 14:50 — 👍 1 🔁 0 💬 0 📌 0

🚨 Can LLMs generate explanations that are as useful as human ones for modeling label distributions in NLI? 🌹 "A Rose by Any Other Name" shows that they can.

💬 We explore scalable, explanation-based annotation via LLMs.

📍Come find us in Vienna 🇦🇹! (July 28, 18:00-19:30, Hall 4/5) #ACL2025NLP #acl2025

15.07.2025 14:46 — 👍 5 🔁 1 💬 3 📌 0

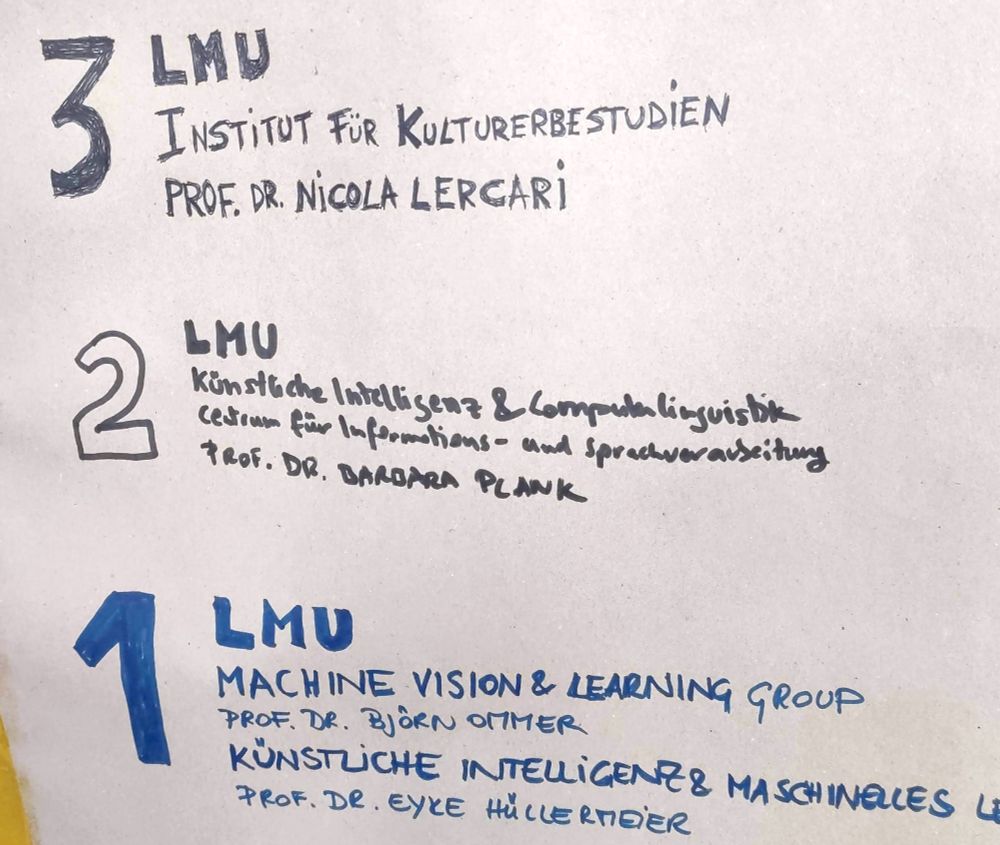

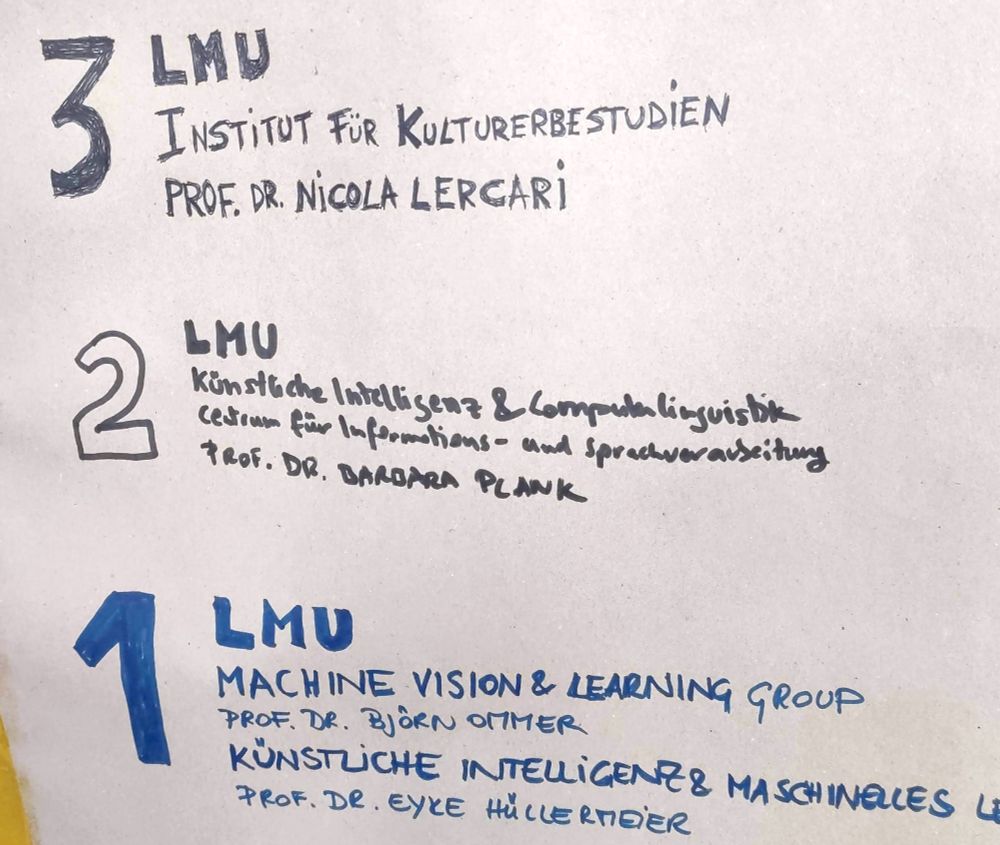

The hand-drawn sign from three years ago.

🎉MaiNLP is turning 3 today!🎂🥳 We’ve grown a lot since @barbaraplank.bsky.social started this group with nothing but three aspiring researchers and a hand-drawn sign on the door. Huge thanks to all the amazing people who have joined or visited us since. Here’s to many more years of exciting research!🚀

01.04.2025 10:40 — 👍 20 🔁 9 💬 1 📌 2

natural language things @ https://sites.google.com/site/hwinteractionlab/

gavinabercrombie.github.io

Euskaldun berria (new Basque speaker)

Tha beagan Gàidhlig agam (I have a little Gaelic)

linguist turned NLP researcher, PhD student @cambridgenlp

Just a passionate dev, learning from this community daily.

✨ Sharing the entire journey - bugs, breakthroughs, and banter. 🚀

PhD student, NLP Researcher at @cislmu.bsky.social | Prev. Intern @Adobe.com

Ph.D. candidate in Artificial Intelligence at University of Liverpool | MSc in Advanced Computer Science from Swansea University | Lecturer at University of Bisha

PhD student in computational linguistics & NLP at CIS, LMU Munich, junior member at MCML, previously at SJTU.

ercong21.github.io

PhD student @ TU Munich, Human-centered AI, Computational Social Science

https://sxu3.github.io/

Post-doctoral Researcher at BIFOLD / TU Berlin interested in interpretability and analysis of language models. Guest researcher at DFKI Berlin. https://nfelnlp.github.io/

PhD student at Utrecht University, @cs-nlp-uu.bsky.social

PhD student at @gesis.org & @hhu.de, computational linguist, researching linguistic factors in (annotation) disagreement and language model behavior.

Prof, Chair for AI & Computational Linguistics,

Head of MaiNLP lab @mainlp.bsky.social, LMU Munich

Co-director CIS @cislmu.bsky.social

Visiting Prof ITU Copenhagen @itu.dk

ELLIS Fellow @ellis.eu

Vice-President ACL

PI MCML @munichcenterml.bsky.social

Prof of #NLP in School of Computing and Communications @LancasterUni, word boffin, ex-CLARIN Ambassador, Director of @ucrelnlp.bsky.social #NLProc #CorpusLinguistics #DigitalHumanities, Doctor Who & stuff. Ravenclaw.

https://www.linkedin.com/in/perayson/

PhD student in HRI/HCI/ML at ELLIS, University of Augsburg, Germany.

Research on Human-Centered Artificial Intelligence, focusing on Trust in Robotics and Social Robots.

🎓 M.Sc. in Computer Science and Psychology (Ulm University).

📧 chang.zhou@uni-a.de

MaiNLP research lab at CIS, LMU Munich directed by Barbara Plank @barbaraplank.bsky.social

Natural Language Processing | Artificial Intelligence | Computational Linguistics | Human-centric NLP

PhD candidate at LMU Munich. Representations, model and data attribution, training dynamics.

Strong opinions on coffee and tea ☕

https://florian-eichin.com

🏫 asst. prof. of compling at university of pittsburgh

past:

🛎️ postdoc @mainlp.bsky.social, LMU Munich

🤠 PhD in CompLing from Georgetown

🕺🏻 x2 intern @Spotify @SpotifyResearch

https://janetlauyeung.github.io/

ELLIS PhD Student at MaiNLP

@ellis.eu @mainlp.bsky.social @munichcenterml.bsky.social

Semi-serious runner for Berlin Track Club and my sanity

PhD student @MaiNLP (Munich AI & NLP lab), @LMU.

Working on reasoning in large language models.

Postdoc AI Researcher (NLP) @ ITU Copenhagen

🧭 https://mxij.me

Postdoc in NLP @milanlp.bsky.social (Milan) and @nlpnorth.bsky.social (Copenhagen) | affiliated @aicentre.dk | past @mainlp.bsky.social, Amazon Alexa

🔗 elisabassignana.github.io