@elevenlabs.io.bsky.social

Our mission is to make content universally accessible in any language and voice. https://elevenlabs.io

Find our detailed @expo tutorial here: go.thor.bio/eleven-expo-...

06.08.2025 14:08 — 👍 1 🔁 0 💬 0 📌 0

Find the docs here: go.thor.bio/eleven-react...

06.08.2025 14:08 — 👍 1 🔁 0 💬 1 📌 0

Introducing the ElevenLabs React Native SDK

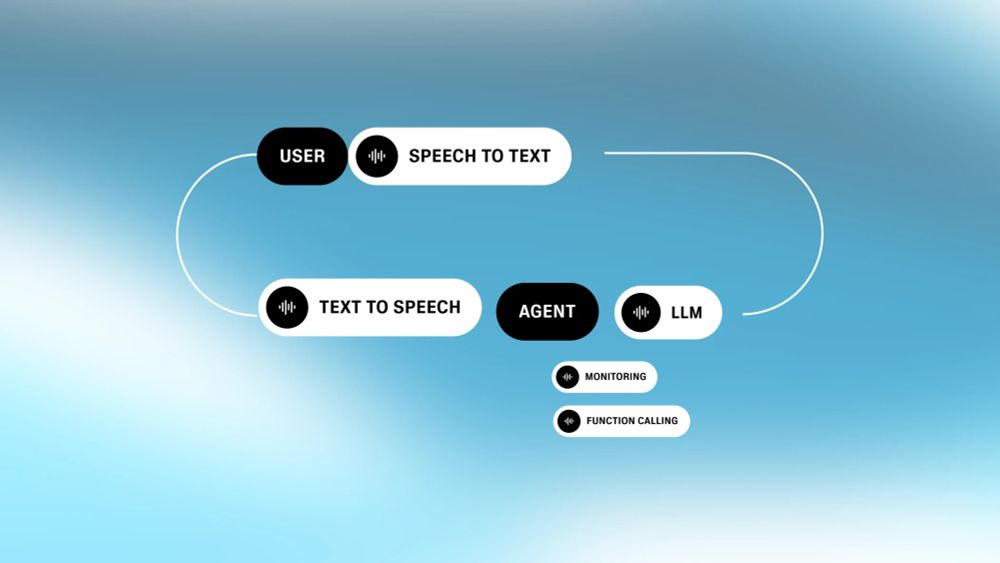

Build cross-platform conversational AI agents for iOS and Android in minutes. WebRTC and first-class @expo.dev support built in.

Docs links below:

ElevenLabs Swift SDK docs: elevenlabs.io/docs/convers...

29.07.2025 14:33 — 👍 1 🔁 0 💬 0 📌 0

Introducing the ElevenLabs Swift SDK 2.0 & Voice UI Starter Kit

Add Conversational AI to your visionOS, macOS, and iOS apps in minutes.

Built with:

• ElevenLabs Swift SDK 2.0 (powered by WebRTC)

• SwiftUI + drop-in Xcode template / components

Docs and repo links below:

The CLI makes conversational agents programmable, enabling reproducibility, traceability, and automation across your stack.

Get started with npm install @elevenlabs/convai-cli, and read the docs here: elevenlabs.io/docs/convers...

Coding agents now have a local scratchpad they can use to reason about your conversational agents and update them programmatically.

Try using @anthropic.com's Claude Code or Lovable with our CLI.

Agents are now version-controlled with Git.

Roll back to previous states, audit every change, and deploy updates through your CI/CD pipelines.

We’ve built a new CLI for managing conversational agents as code.

It brings version control, programmability, and deeper integration into your existing workflows.

www.youtube.com/watch?v=TNOq...

I'm really looking forward to joining @revenuecat.com's Vibe Coding Catfe in Tokyo next week.

Next Tuesday, please also join us for the @elevenlabs.io conversational AI workshop and the #Shipaton 2025 kickoff party.

See you there!

It's the final day of hackathon.dev and inspiration finally hit ⚡️

Presenting GeoGuesser AI, the #voice powered location guessing game 🗺️✨

Built with @elevenlabs.io and Google Maps street view, vibe coded by @bolt.new!

How we code has changed drastically in just the last year, which also means we need to adapt our developer experience to work for both humans and machines!

Join me at nyc.devrelcon.dev where I'll be sharing my learnings from my time at stripe.com, @supabase.com, and @elevenlabs.io!

To get started with 11ai:

1. Sign up at 11.ai

2. Pick from 5,000 voices (or use your own)

3. Connect your tools and MCP servers

4. Talk through your day and start taking action

It's free for the coming weeks.

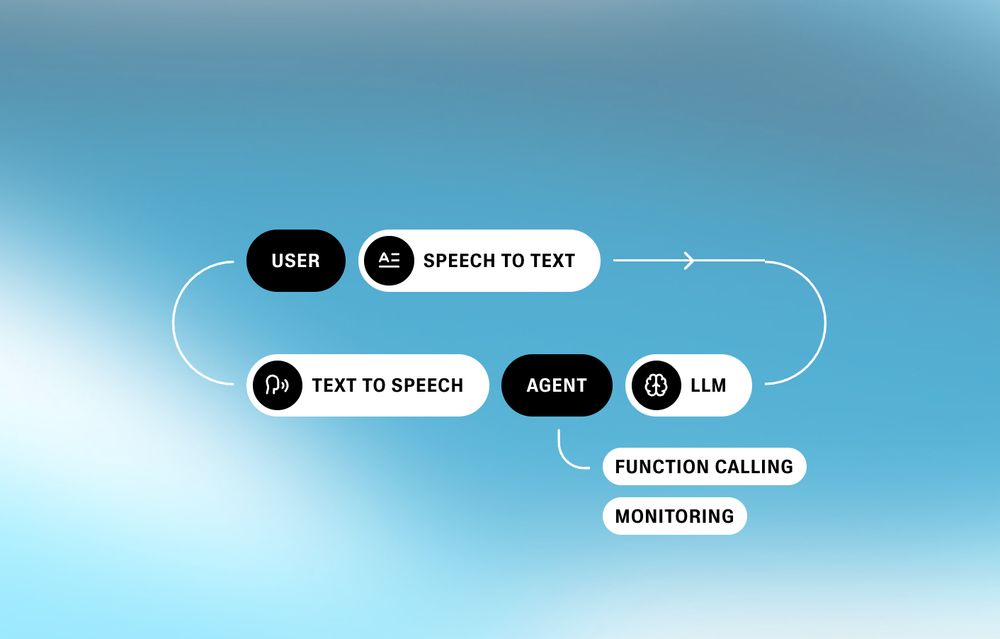

11ai is built on Conversational AI, our low-latency platform for scalable voice agents.

Conversational AI supports voice & text, integrated RAG, language detection, and more.

11ai provides out-of-the-box integrations for Perplexity, Linear, Slack, and more.

Or you can connect your own MCP servers.

youtu.be/rVtpxrwAusA

Introducing 11ai - the AI personal assistant that's voice-first and supports MCP.

This is an experiment to show the potential of Conversational AI:

1. Plan your day and add your tasks to Notion

2. Use Perplexity to research a customer

3. Search and create Linear issues

youtu.be/HOg8jPLTwLI

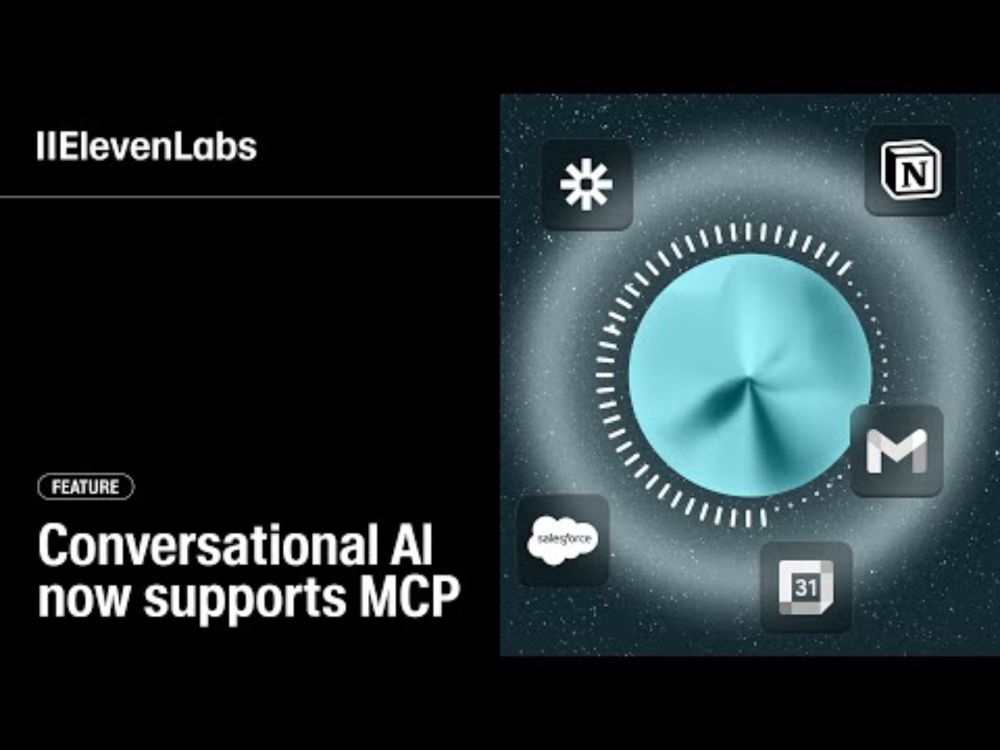

Build agents that book, summarize, search, or automate anything using natural language.

Try it now:

elevenlabs.io/conversation...

Read the MCP docs: elevenlabs.io/docs/convers...

ElevenLabs Conversational AI now supports MCP — letting AI agents connect to services like Salesforce, HubSpot, Gmail and more instantly.

No more manual tool definitions. No more setup friction.

youtu.be/7WLfKp7FpD8

Honored to be part of the incredible panel of judges for the World's Largest Hackathon ✨ Excited to see all the amazing submissions for the @elevenlabs.io #Voice #AI Challenge! 🔥

Don’t forget to check out:

→ Prompting Guide

→ Conversational Voice Design Tips

#worldslargesthackathon @bolt.new

Built for creators and developers building media tools.

If you’re working on videos, audiobooks, or media tools - v3 unlocks a new level of expressiveness. Learn how to get the most out of it with our prompting guide: elevenlabs.io/docs/best-pr...

Introducing Eleven v3 (alpha) - the most expressive Text to Speech model ever.

Supporting 70+ languages, multi-speaker dialogue, and audio tags such as [excited], [sighs], [laughing], and [whispers].

Now in public alpha and 80% off in June.

Hear more about why they chose Scribe: elevenlabs.io/blog/jamie

19.05.2025 16:20 — 👍 0 🔁 0 💬 0 📌 0

In February, we launched Scribe — the most accurate Speech to Text model.

Jamie switched to Scribe to power their AI meeting notetaker and achieved a 3× faster transcription pipeline, with measurable gains in product quality and latency.

still thinking about what to build at the @elevenlabs.io & @expo.dev meetup next friday in taipei. will try to make it as much fun as possible.

if you are around taipei next week, join us with the legendary @thorweb.dev

Need an easy way to build and deploy custom tools for your conversational AI agents?

@buildship.com just launched buildship.tools, a platform that helps you do exactly that.

E.g. connect your agent to Linear to generate conversational changelogs. go.thor.bio/buildship-to...

Learn more about our MCP server: github.com/elevenlabs/e...

09.05.2025 06:10 — 👍 2 🔁 0 💬 0 📌 0

Give wind.surf a voice using the ElevenLabs MCP server.

@asjes.dev walks you through configuring and using our MCP server inside Windsurf.