[6] It's now undeniable that, with a little bit of creativity, improving scaling is not only approachable but also particularly rewarding. And while I'm obviously excited by convolution-attention Hyena hybrids due to their balance of efficiency and quality across domains, there's a lot more to do!

14.11.2024 20:33

[5] We have seen time and again that various classes of computational units outperform others in different modalities, on different tasks, in different regimes. We've seen this in scaling laws, on synthetic tasks, and at inference.

14.11.2024 20:32

[4] There has been a flurry of work over the last couple of years (from the great people at HazyResearch and elsewhere) on developing bespoke model designs as "proofs of existence" to challenge the Transformer orthodoxy, at a time when model design was considered "partially solved."

14.11.2024 20:32

[3] We continue to push the scale of what's possible with "beyond Transformer" models applied to biology, in what could be among the most computationally intensive fully open (weights, data, pretraining infrastructure) sets of pretrained models across AI as a whole.

14.11.2024 20:31

[2] A lot has happened since the first release of Evo. We have made public the original pretraining dataset (OpenGenome)—links below—and will soon release the entire pretraining infrastructure and model code.

14.11.2024 20:31

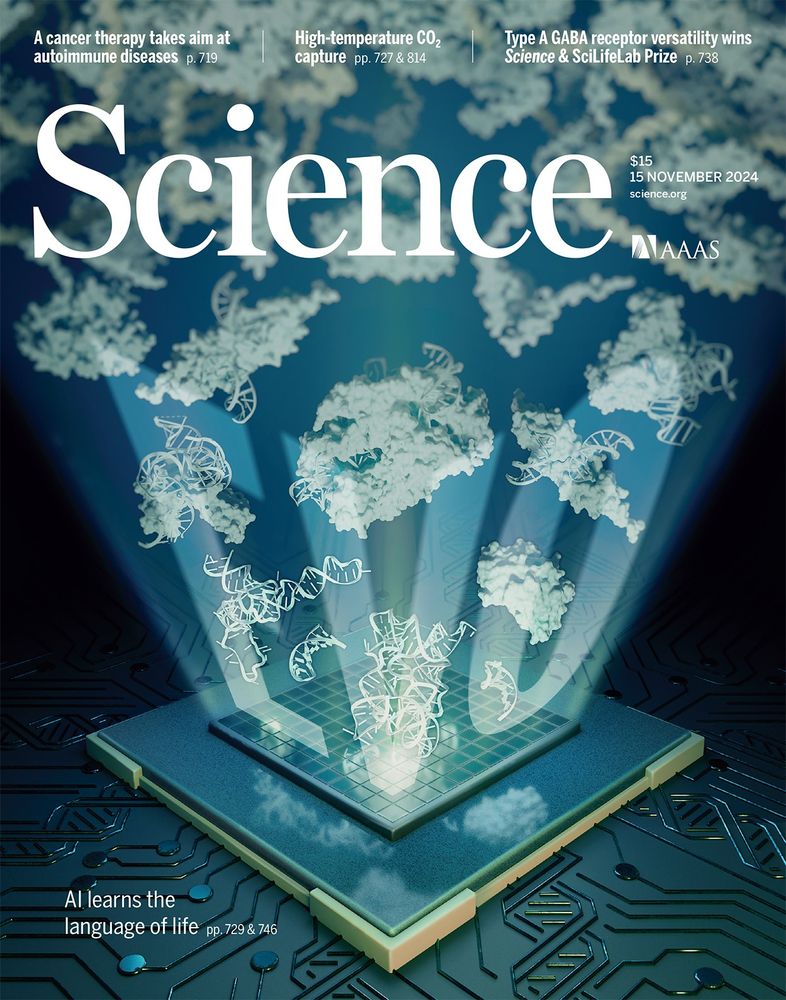

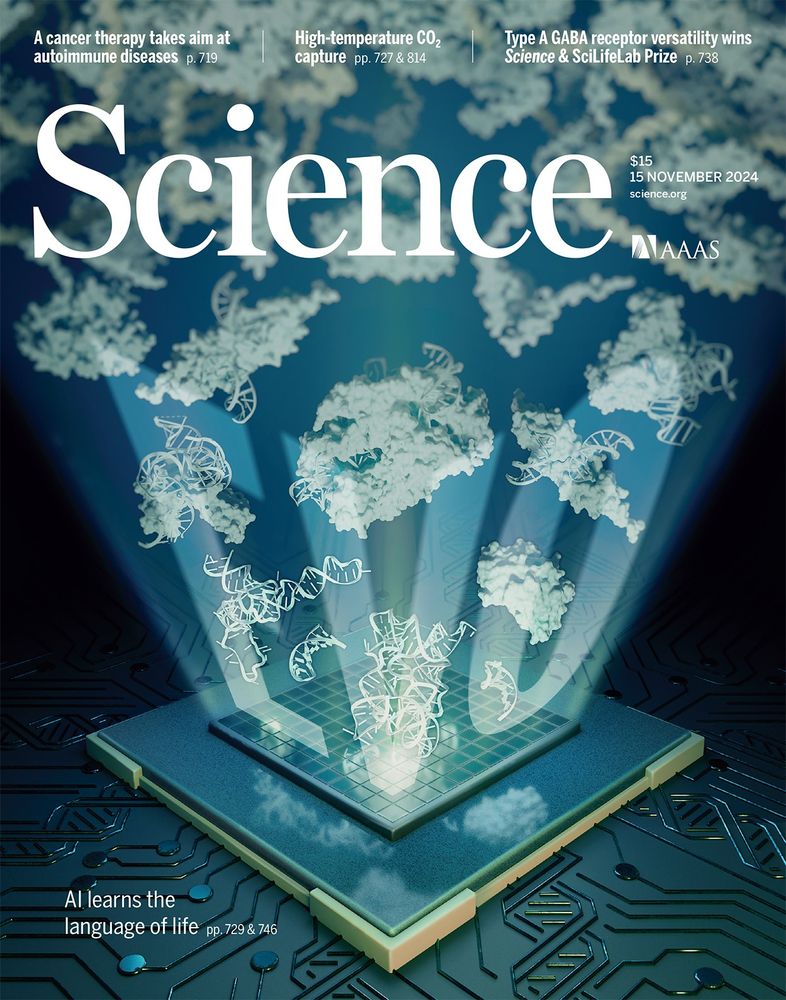

An absolute privilege to see our work on Evo 🧬 highlighted on the cover of the latest issue of Science. Thank you to all the friends and collaborators at Stanford and the Arc Institute.

14.11.2024 20:30
