Excited to be part of this vision!

11.07.2025 20:54 — 👍 7 🔁 2 💬 0 📌 0

... and @davidduvenaud.bsky.social, @josephgubbels.bsky.social, @sydneylevine.bsky.social, @samuelemarro.bsky.social, @morgansutherland.bsky.social, @joelbot3000.bsky.social, @tobyshorin.bsky.social, among many many others :)

special thanks to: @edelwax.bsky.social, @xuanalogue.bsky.social, @klingefjord.bsky.social, @matijafranklin.bsky.social, @atoosakz.bsky.social, @atrisha.bsky.social, @mbakker.bsky.social, @ryanothnielkearns.bsky.social, @fbarez.bsky.social, @vinnylarouge.bsky.social ...

If you're excited about these ideas, drop me a line!! We're looking for researchers to collaborate with -- send an email to research@meaningalignment.org.

It's gonna be fun.

✌️

This is a huge project. We'll need lots of help.

But if we succeed, the future could be more beautiful than we can possibly imagine today.

Examples of TMV include: resource-rational contractualism by @sydneymlevine et al, self-other overlap by @MarcCarauleanu et al, and our previous work on moral graph elicitation.

It's an emerging field, but we think early research is very promising!!

Instead, we call for a new paradigm — "Thick models of value" (TMV).

TMV is a broad class of structured approaches to modeling values and norms that:

1. are more robust against distortions

2. have better treatment of collective values and norms

3. have better generalization

It's like programming in a completely dynamic language without any type system. Kinda sketch if we design all of our institutions around unstructured text and hope LLMs interpret them in the way we intended.

11.07.2025 18:56 — 👍 1 🔁 0 💬 1 📌 0

In principle, unstructured text could be an improvement; after all, language is how humans naturally express values.

But this lack of internal structure becomes a critical weakness when we need *reliability* across contexts and institutions.

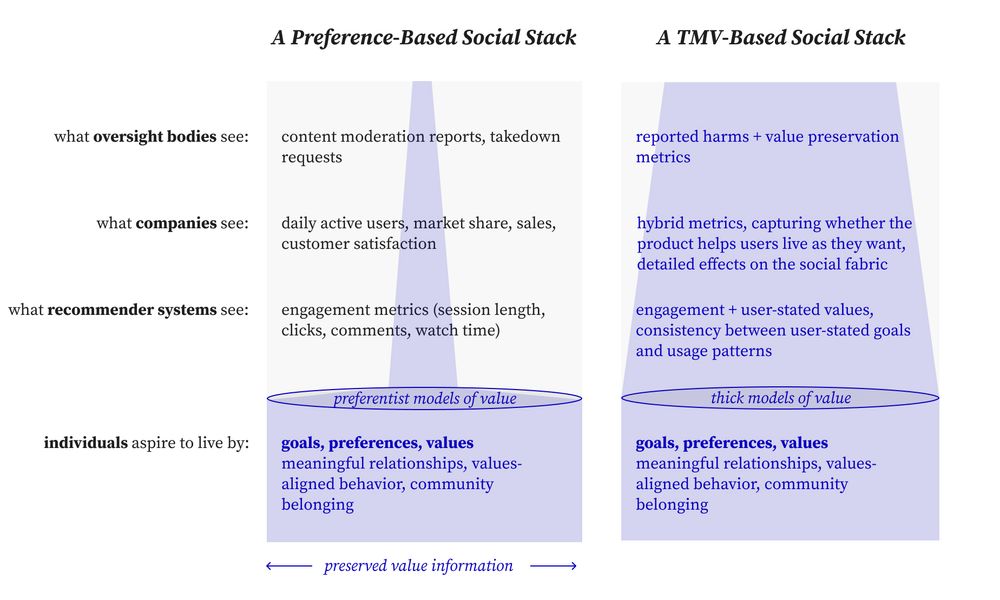

In practice this looks like: a desire for "meaningful connection" becomes "engagement metrics" to recommender systems, which becomes "daily active users" to companies, and "quarterly revenue" in markets.

11.07.2025 18:56 — 👍 1 🔁 0 💬 1 📌 0

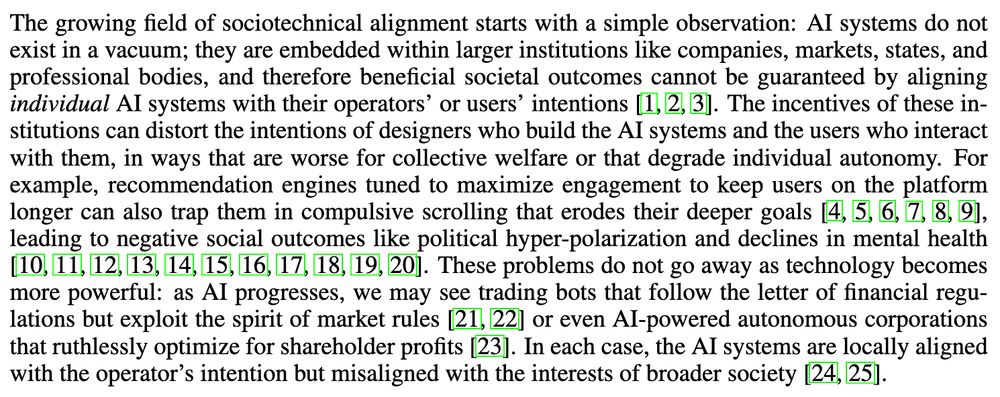

PMV in particular (the dominant paradigm in microeconomics, game theory, mechanism design, social choice theory, etc.) fails to capture the richness of human motivation, because preferences bundle all kinds of signals into a flattened ordering.

11.07.2025 18:56 — 👍 4 🔁 0 💬 1 📌 0

Current approaches tend to fall into what we call "Preferentist models of Value" (PMV), or "Values-as-text" (VAT). Both struggle to preserve the richness of what people care about as value information propagates up the "societal stack".

11.07.2025 18:56 — 👍 2 🔁 0 💬 1 📌 0

Okay, so this seems extremely hard. How do we do it?

We think we've identified an important piece of the puzzle: we need better *representations* of values and norms that are legible to AI, markets and democracies.

Important to say: the goal of full-stack alignment is pluralistic. We don't impose a singular vision of human flourishing.

Rather, we argue that we need better institutional tools to improve our capacity for individual and collective self-determination.

So, full-stack alignment is our way of saying that we need AI and institutions that "fit" us, and that help us live the lives we want to live.

11.07.2025 18:56 — 👍 4 🔁 1 💬 1 📌 0

We have markets that favor products that are addictive and isolating, and our democratic institutions are hyper-polarizing us.

Things will get worse as AI displaces workers and outpaces regulation. In the near-term future, we risk losing both our economic and democratic agency.

Why do we need to co-align AI *and* institutions?

AI systems don't exist in a vacuum. They are embedded within institutions whose incentives shape their deployment.

Often, institutional incentives are not aligned with what's in our best interest.

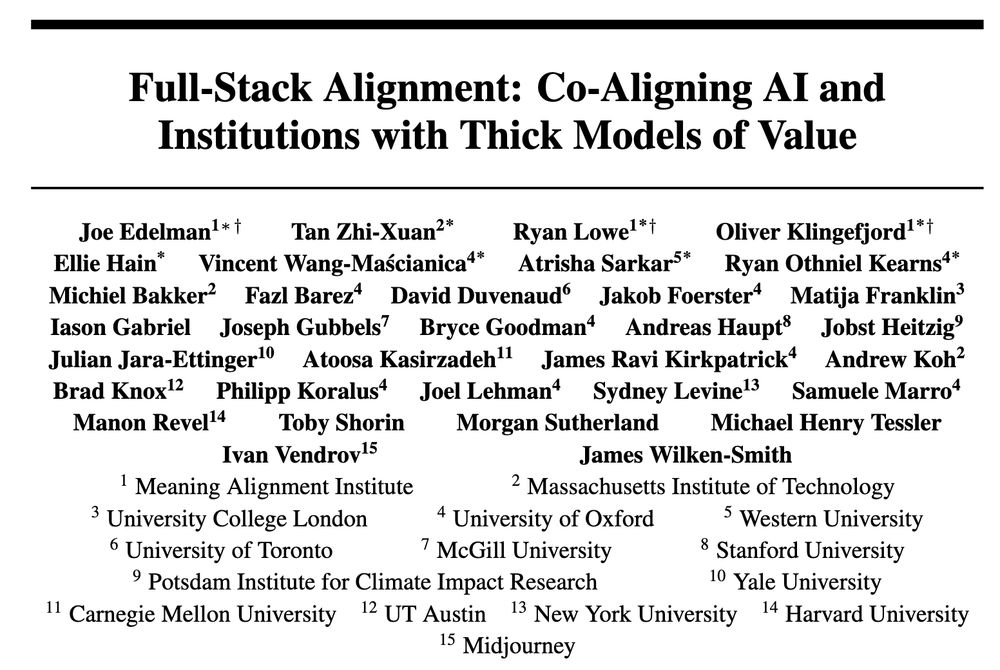

This is in collaboration with a huge list of really really amazing researchers (see the list of authors!)

11.07.2025 18:56 — 👍 2 🔁 0 💬 1 📌 0

Today we're launching:

- A position paper that articulates the conceptual foundations of FSA (jytmawd4y4kxsznl.public.blob.vercel-storage.com/Full_Stack_...)

- A website which will be the homepage of FSA going forward (www.full-stack-alignment.ai/)

Introducing: Full-Stack Alignment 🥞

A research program dedicated to co-aligning AI systems *and* institutions with what people value.

It's the most ambitious project I've ever undertaken.

Here's what we're doing: 🧵

And: I'm hiring a post-doc! I'm looking for someone with a strong computational background who can start in the summer (or sooner). Details here: apply.interfolio.com/165122

Feel free to reach out with questions (email is best: smlevine@mit.edu) and please share widely!