Results tl;dr:

1. Performance drops >70% from 128 to 8K tokens

2. Query expansion helps marginally, but can't solve the core issue

3. When literal matches fail in long context, semantic alternatives fail harder

4. Position bias: needles at the start or end perform better than those in the middle

07.03.2025 09:29

Embedding models become "blind" beyond 4K tokens in context length. Building on the NoLIMA paper, our experiments show that for needle-in-a-haystack tasks, performance of embedding models drops to near-random chance with long contexts—even with exact keyword matches 🤔 🧵

07.03.2025 09:28
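To probe the position bias in point 4, one can build test documents that place the same needle at different relative depths in a haystack. This is a minimal sketch that assumes sentence-level insertion. `insert_needle`, the filler text and the needle are hypothetical, not the harness behind these experiments, and scoring with an actual embedding model is left out.

```python
def insert_needle(haystack: list[str], needle: str, depth: float) -> list[str]:
    """Return a copy of `haystack` (a list of sentences) with `needle` inserted.

    depth=0.0 puts the needle first, 1.0 puts it last, 0.5 roughly in the middle.
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    position = round(depth * len(haystack))
    return haystack[:position] + [needle] + haystack[position:]


# Hypothetical filler; a real haystack would be sized to the target token length.
filler = [f"Filler sentence number {i}." for i in range(10)]
needle = "The secret ingredient is cardamom."
variants = {depth: insert_needle(filler, needle, depth) for depth in (0.0, 0.5, 1.0)}
```

Each variant would then be embedded and scored against the same query. Comparing the scores across depths is what would expose a start/end vs. middle gap.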

Query Expansion with LLMs: Searching Better by Saying More

Search has changed a lot since embedding models were introduced. Is there still a role for lexical techniques like query expansion in AI? We think so.

I applied LLMs to query expansion, and we wrote this article:

It seems to work out of the box and generally boosts the performance of embedding models. However, it adds latency. It would be interesting to see more work on this.

📃: jina.ai/news/query-e...

🛠️: github.com/jina-ai/llm-...

18.02.2025 08:29
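The basic mechanic of LLM query expansion is to ask a model for related terms and append them to the query before embedding it. This is a rough sketch only. `generate` is a hypothetical stand-in for any LLM call, and the prompt wording is an assumption, not the prompt from the article or the linked repo.

```python
from collections.abc import Callable


def expand_query(query: str, generate: Callable[[str], str], max_terms: int = 10) -> str:
    """Append LLM-suggested related terms to `query` before it is embedded."""
    # Hypothetical prompt wording; not the prompt used in the article or repo.
    prompt = (
        f"List up to {max_terms} search terms related to the query below, "
        f"one per line, without explanations.\nQuery: {query}"
    )
    seen = {query.lower()}
    terms: list[str] = []
    for line in generate(prompt).splitlines():
        term = line.strip(" -*\t")  # drop bullet markers and surrounding whitespace
        if term and term.lower() not in seen:
            seen.add(term.lower())
            terms.append(term)
    return " ".join([query, *terms[:max_terms]])


def fake_llm(prompt: str) -> str:
    # Stand-in for a real LLM call, which is where the extra latency comes from.
    return "- neural search\n- dense retrieval\n\n- neural search\n"


expanded = expand_query("embedding models", fake_llm)
```

The extra generation step runs once per query, which is why expansion trades latency for retrieval quality.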

An obvious pivot would be to sell unique data assets as IP, but a far more future-proof proposition is to sell data flywheels - the more enterprises you onboard, the more differentiated and indispensable your software gets.

16.02.2025 08:31

It's time for enterprise SaaS companies to seriously rethink what their customers were paying them for, and what they will pay for now. It's no longer enough to operate a walled-garden service (rule-based ER mgmt) when "comprehension" of multimodal data is being commoditized by large models.

16.02.2025 08:27

The business value that still remains to be cracked was, and is, in inference, not training! Even if we accept the take that skipping SFT and going directly to RL meant huge savings in training costs, you're only ever going to train it once! Where are you gonna get 30 H100s to run it?

27.01.2025 20:07

I don't get it - are influencers really writing obituaries for NVDA because there's a really good new model?? Make it make sense. It's obvs great that the R1 training recipe is kinda open (which is charitable btw, cuz it's not even that open: there's nothing in there about training data)

27.01.2025 20:03

47th EUROPEAN CONFERENCE ON INFORMATION RETRIEVAL

Our submission to ECIR 2025 on jina-embeddings-v3 has been accepted! 🎉

At the ECIR Industry Day my colleague @str-saba.bsky.social presents how we train the latest version of our text embedding model.

More details on ECIR: ecir2025.eu

More details about the model: arxiv.org/abs/2409.10173

16.12.2024 16:18

A lot of people prefer getting into the weeds of what agents are, instead of this very important point about the introspection at the heart of it. I appreciate this 🤘🏽

14.12.2024 16:58

There's no free lunch, though: for slightly complex vulnerability audits or refactoring, Claude works best with multiple subtask-oriented chats. O1-p, while rarely complaining about context bloat, literally just stops trying to be helpful after a point (which you just have to sense from vibes).

12.12.2024 06:43

After setting up Claude with the Brave and knowledge-graph MCPs, I tbh haven't felt the need to open ChatGPT or ppl in a while.

12.12.2024 06:40

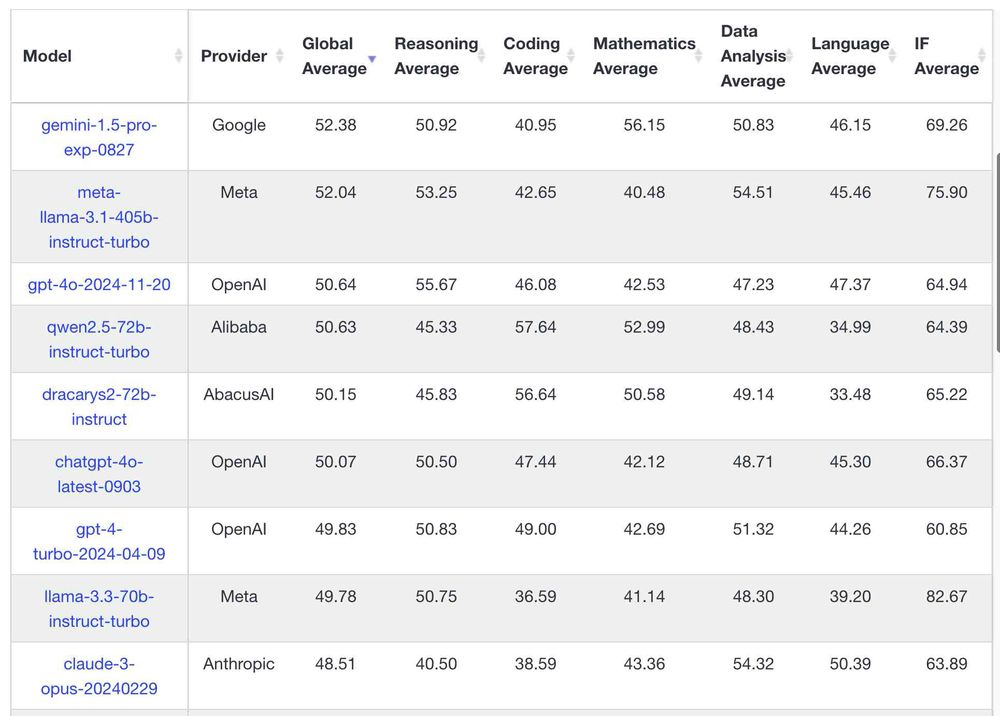

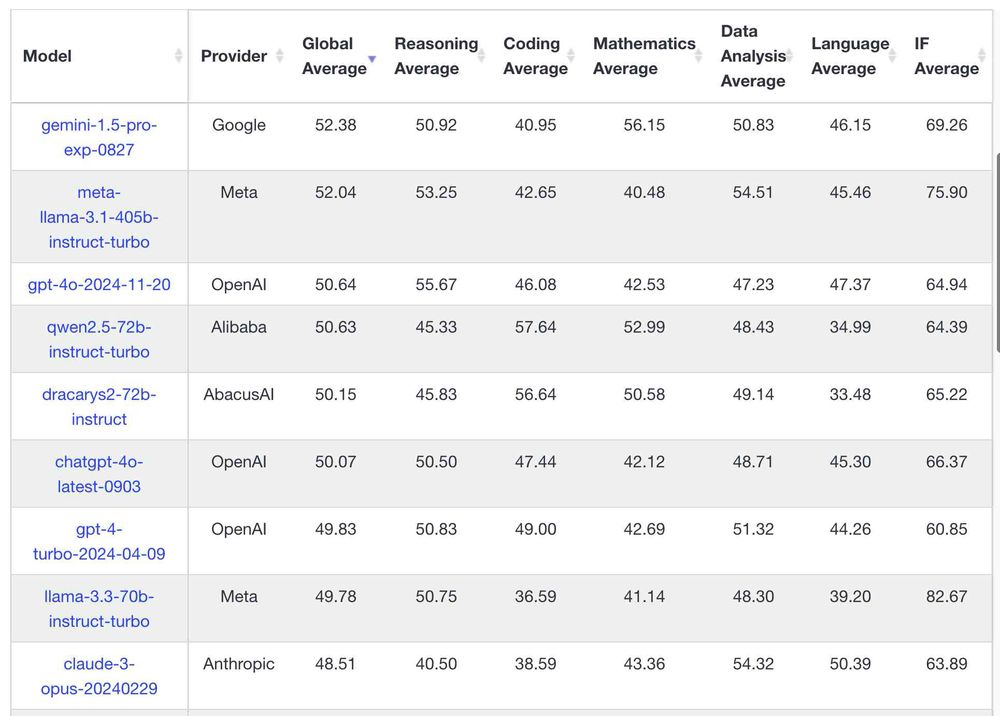

I can now run a GPT-4 class model on my laptop

Meta’s new Llama 3.3 70B is a genuinely GPT-4 class Large Language Model that runs on my laptop. Just 20 months ago I was amazed to see something that felt …


(The exact same laptop that could just about run a GPT-3 class model 20 months ago)

The new Llama 3.3 70B is a striking example of the huge efficiency gains we've seen in the last two years

simonwillison.net/2024/Dec/9/l...

09.12.2024 15:19

https://jina.ai/news/still-need-chunking-when-long-context-models-can-do-it-all/

One year ago, we released the first open-source embedding model with an 8192-token context. Many suspected it would not be useful and that chunking would be better than a single vector. I ran many experiments to explain when to use which, and we summarized the findings in this article:

t.co/BLC3WTU3LP

05.12.2024 08:49
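The trade-off discussed in the article is between many chunk-level vectors and one long-context vector per document. This sketch shows fixed-size chunking with overlap. Whitespace splitting stands in for a real tokenizer, and the chunk sizes other than 8192 are illustrative. It is not the article's code and does not reproduce its findings.

```python
def chunk_tokens(tokens: list[str], chunk_size: int, overlap: int = 0) -> list[list[str]]:
    """Split a token list into fixed-size windows that overlap by `overlap` tokens."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # the last window already reaches the end of the document
    return chunks


# Whitespace splitting stands in for a real tokenizer here.
doc = "a b c d e f g h i j".split()
small_chunks = chunk_tokens(doc, chunk_size=4, overlap=1)  # one vector per chunk
single = chunk_tokens(doc, chunk_size=8192)  # whole document fits: one vector
```

With an 8192-token window, any document up to that length collapses to a single chunk, so the choice reduces to embedding `small_chunks` separately or embedding `single` once.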

Screenshot of vscode showing python code calling OpenAI client with Jina AI URL for creating embeddings object.

Thanks for reminding me, xAI, because I had forgotten to tell everyone that Jina Embeddings API is also OpenAI compatible 🤭

04.12.2024 14:00
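"OpenAI compatible" here means the API accepts the same request shape as OpenAI's embeddings endpoint: a POST to `/embeddings` with `model` and `input`. This stdlib-only sketch builds such a request without sending it. The base URL `https://api.jina.ai/v1` is an assumption to check against the current API docs, and `YOUR_API_KEY` is a placeholder.

```python
import json
import urllib.request

# Assumption: Jina's OpenAI-compatible base URL; verify against the current docs.
JINA_BASE_URL = "https://api.jina.ai/v1"


def build_embeddings_request(texts: list[str], model: str, api_key: str,
                             base_url: str = JINA_BASE_URL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style embeddings request."""
    body = json.dumps({"model": model, "input": texts}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/embeddings",
        data=body,
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


req = build_embeddings_request(["hello world"], model="jina-embeddings-v3", api_key="YOUR_API_KEY")
# urllib.request.urlopen(req) would send it; not done here to keep the sketch offline.
```

With the official `openai` Python package, the equivalent is creating `OpenAI(api_key=..., base_url=...)` and calling `client.embeddings.create(model=..., input=...)`, which is what the screenshot describes.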

So, it seems that, unlike text tasks, where pre-trained MLM models do form a generally good backbone for downstream tasks (I'm now not even sure of this?), when it comes to images, one needs to pay careful attention to whether the downstream task is a multimodal or a unimodal image one.

03.12.2024 14:16

I didn't find any studies pitting both models head-to-head for vision-language, or even just vision tasks, but there is this one study that shows that for small scale datasets, MAE-trained vision encoders at least do improve CLIP model performance arxiv.org/abs/2301.07836

03.12.2024 14:15

...except language alignment, where EVA-02's distillation method arguably performs better on multimodal tasks: its masked-image-modelling targets are language-aligned vision features, which gives it an edge over pure image reconstruction.

03.12.2024 14:14
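The difference described above comes down to the regression target. This toy sketch uses plain lists instead of tensors. MAE regresses the raw pixels of masked patches with mean squared error, while EVA-02 regresses features from a language-aligned (CLIP) vision teacher at masked positions. Writing the feature loss as `1 - cosine` is an assumption for illustration, and all inputs are placeholders.

```python
import math


def mae_pixel_loss(pred_patches, target_patches, mask):
    """MAE-style loss: per-patch mean squared error, averaged over masked patches only."""
    total, count = 0.0, 0
    for pred, target, m in zip(pred_patches, target_patches, mask):
        if m:  # 1 = patch was masked, so it contributes to the loss
            total += sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
            count += 1
    return total / count


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def feature_distillation_loss(pred_features, teacher_features, mask):
    """EVA-02-style (assumed form): 1 - cosine to language-aligned teacher features at masked positions."""
    losses = [1.0 - cosine(p, t) for p, t, m in zip(pred_features, teacher_features, mask) if m]
    return sum(losses) / len(losses)
```

Only the target changes between the two functions: pixels carry no language signal, while CLIP-teacher features already encode image-text alignment.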

Even with as much as 75% of the image patches masked, this MAE technique performs exceedingly well on almost all downstream vision tasks, incl. classification, semantic segmentation, etc. ALMOST all of them ...

03.12.2024 14:14

Masked Autoencoders Are Scalable Vision Learners

This paper shows that masked autoencoders (MAE) are scalable self-supervised learners for computer vision. Our MAE approach is simple: we mask random patches of the input image and reconstruct the mis...

@bowang0911.bsky.social showed me this cool paper I'd never read before, about Masked Autoencoders (MAE) for images. The idea: an image encoder encodes only the non-masked patches, and a decoder takes those encoded patches plus mask tokens for the masked positions and regenerates the original image arxiv.org/abs/2111.06377

03.12.2024 14:13
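The masking step can be sketched in a few lines. A random permutation of patch indices selects the 25% the encoder sees. The decoder input is then rebuilt by appending a shared mask token and unshuffling back to the original patch order. This is a plain-Python sketch of that idea, not the paper's code. The 196 patches (a 14x14 grid) and the string "embeddings" are illustrative stand-ins.

```python
import random


def random_masking(num_patches: int, mask_ratio: float, rng: random.Random):
    """Pick which patches the encoder sees; return kept ids, inverse permutation and mask."""
    len_keep = int(num_patches * (1 - mask_ratio))
    noise = [rng.random() for _ in range(num_patches)]
    ids_shuffle = sorted(range(num_patches), key=noise.__getitem__)  # random permutation
    ids_restore = sorted(range(num_patches), key=ids_shuffle.__getitem__)  # its inverse
    ids_keep = ids_shuffle[:len_keep]
    mask = [1] * num_patches  # 1 = masked (hidden from the encoder)
    for i in ids_keep:
        mask[i] = 0
    return ids_keep, ids_restore, mask


def decoder_input(encoded_visible, mask_token, ids_restore):
    """Append mask tokens to the encoded visible patches, then restore original patch order."""
    num_masked = len(ids_restore) - len(encoded_visible)
    shuffled = list(encoded_visible) + [mask_token] * num_masked
    return [shuffled[ids_restore[i]] for i in range(len(ids_restore))]


rng = random.Random(0)
ids_keep, ids_restore, mask = random_masking(196, mask_ratio=0.75, rng=rng)
encoded = [f"enc{i}" for i in ids_keep]  # stand-in for the encoder's outputs
full = decoder_input(encoded, "[MASK]", ids_restore)
```

Because the encoder only processes the kept quarter of the patches, most of the compute during pre-training is saved.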

EVA-02: A Visual Representation for Neon Genesis

We launch EVA-02, a next-generation Transformer-based visual representation pre-trained to reconstruct strong and robust language-aligned vision features via masked image modeling. With an updated pla...

Went down a bit of a rabbit hole of image modelling after talking to a colleague last week. Jina CLIP models employ EVA-02 as their image tower, and I wanted to know if there's an equivalent of masked "patch prediction" for images, like MLM in pre-trained text models arxiv.org/abs/2303.11331 (1/n)

03.12.2024 14:12

I've had a funny year, really. For most of it, weeks flew past me so quickly because, well, AI, and it's 2024, but at least weekends were slow, spent inside or at a playground. But ever since I started actively seeing friends again after the birth of my second child, the weekends seem to whizz right by too.

01.12.2024 20:33

Transgender YouTubers had their videos grabbed to train facial recognition software

In the race to train AI, researchers are taking data first and asking questions later

And the idea that "is it public" is all that matters and not what you DO with people's content, is absurd.

Like you cannot possibly suggest with a straight face that this example of using transgender YouTubers' videos to train facial recognition is 100% fine.

www.theverge.com/2017/8/22/16...

27.11.2024 15:57

A few things I'd add to it:

1. VLMs for maintaining document-structure integrity, with equally good, if not better, outcomes.

2. ColBERT models for improving the explainability of results, before diving into fine-tuning.

3. Fine-tuning reranker models

28.11.2024 13:23
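Point 2 rests on how ColBERT-style (late-interaction) models score documents. Each query-token embedding is matched to its most similar document-token embedding (MaxSim), and those maxima are summed, so the per-token matches double as an explanation of the score. ColPali-family models apply the same scoring to image-patch embeddings. This toy sketch uses hand-made 2-D vectors in place of real token embeddings. The function name and the explanation format are hypothetical.

```python
import math


def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def maxsim_with_explanation(query_vecs, doc_vecs, doc_tokens):
    """Late-interaction score plus, for each query token, the document token it matched."""
    q = [normalize(v) for v in query_vecs]
    d = [normalize(v) for v in doc_vecs]
    score, matches = 0.0, []
    for qi, qv in enumerate(q):
        sims = [sum(a * b for a, b in zip(qv, dv)) for dv in d]  # cosine similarities
        best = max(range(len(sims)), key=sims.__getitem__)
        score += sims[best]
        matches.append((qi, doc_tokens[best], sims[best]))
    return score, matches


score, matches = maxsim_with_explanation(
    query_vecs=[[1.0, 0.0], [0.0, 1.0]],
    doc_vecs=[[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]],
    doc_tokens=["apple", "pie", "tart"],
)
```

Inspecting `matches` shows which document token drove each part of the score, something a single pooled vector cannot show.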

RAG's Biggest Problems & How to Fix It (ft. Synthetic Data) | S2 E16

Had a lot of fun recording this one with @nicolay.fyi. All things considered, in the time between recording it and now I haven't seen many left-field developments that have changed my mind about evals in RAG.

open.spotify.com/episode/5bzb...

28.11.2024 13:17

AI is good at pricing: when GPT-4 was asked to help merchants maximize profits, it did exactly that, by secretly coordinating with other AIs to keep prices high!

So... aligned for whom? Merchants? Consumers? Society? The results we get depend on how we define 'help' arxiv.org/abs/2404.00806

28.11.2024 05:15

The authors of ColPali trained a retrieval model based on SmolVLM 🤠 TLDR;

- ColSmolVLM performs better than ColPali and DSE-Qwen2 on all English tasks

- ColSmolVLM is more memory efficient than ColQwen2 💗

Find the model here huggingface.co/vidore/colsm...

27.11.2024 14:10

IMO, the source matters even more than whether the information was good or not (for you). If you consider a piece of information "good", you're more likely to propagate it.

27.11.2024 14:46

Subscribe to my tech and online culture newsletter UserMag.co

Listen/watch Power User podcast on all platforms!!

Support my work on Patreon: https://www.patreon.com/c/taylorlorenz

Ezra Klein’s tweets, articles, clips and podcasts on Bluesky.

We amplify voices on the issues that matter to you. Read on: https://www.nytimes.com/section/opinion

Professor at a University in the Fens.

Berkeley professor, former Secretary of Labor. Co-founder of @inequalitymedia.bsky.social and @imcivicaction.bsky.social.

Substack: http://robertreich.substack.com

Buy my new book: https://sites.prh.com/reich

Visit my website: https://rbreich.com/

Google Chief Scientist, Gemini Lead. Opinions stated here are my own, not those of Google. Gemini, TensorFlow, MapReduce, Bigtable, Spanner, ML things, ...

Professor of Marketing at NYU Stern School of Business, serial entrepreneur, and host of the Prof G and Pivot Podcasts.

Host of Vector Podcast, product manager, search guy

https://youtube.com/@vectorpodcast

The Search Juggler: expert consultant in search & AI, conference host & speaker, author - get my help at www.thesearchjuggler.com

Founder/engineer; I build infrastructure for search. When I'm not writing Rust or Nix, I'm bloviating on business. Austin, TX.

Hardcore backend stuff, python, cloud and ml

Author of Python behind the scenes

Building dstack.ai

My blog: http://tenthousandmeters.com

Other projects: https://github.com/r4victor

I'm a newsletter (and sometimes other stuff) about the internet (and sometimes other stuff)

Chilling online. I write a newsletter called Garbage Day.

Assistant Professor of CS at CU Boulder. I research statistical & geospatial machine learning.