OSF

Huge thanks to Surya and Nathan for their help 😊

Open access preprint: osf.io/preprints/so...

All materials and data: osf.io/54vms/

Feel free to reach out to me in case of any questions! 9/9

05.12.2024 12:03 — 👍 2 🔁 0 💬 0 📌 0

(2) Observers can flexibly prioritize one sense over the other, in anticipation of modality-specific interference, and use only the most informative sensory modality to guide behavior, while nearly ignoring other modalities (even when they convey substantial information). 8/9

05.12.2024 12:03 — 👍 1 🔁 0 💬 1 📌 0

From these four experiments, we conclude that (1) observers use both hearing and vision when localizing static objects, but use only unisensory input when localizing moving objects and predicting motion under occlusion. 7/9

05.12.2024 12:03 — 👍 1 🔁 0 💬 1 📌 0

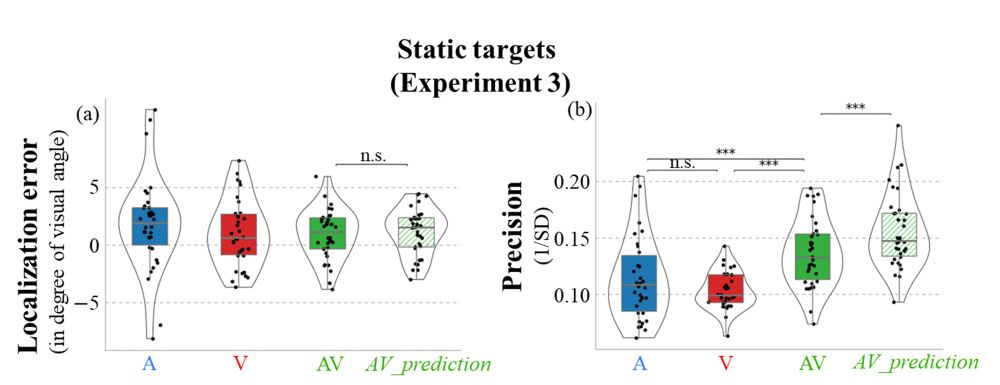

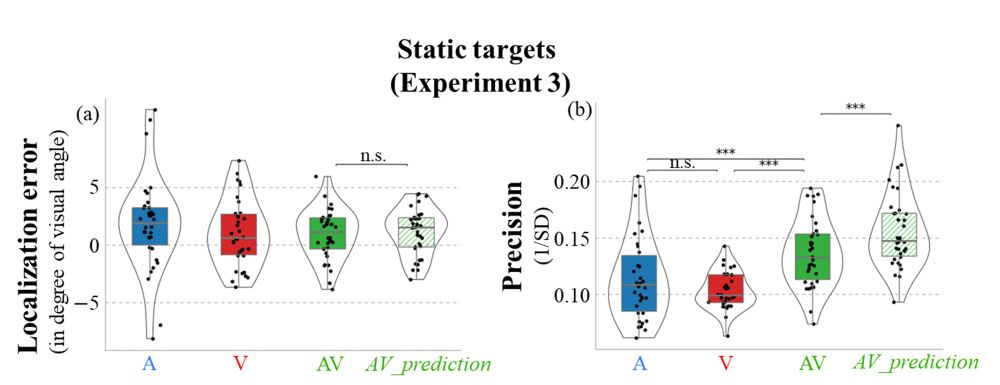

In Exp. 3, the target did not move; it only briefly appeared as a static stimulus at the exact same endpoints as in Exp. 1 and 2. Here, a substantial multisensory benefit was found when participants localized static audiovisual targets, showing near-optimal (MLE) integration. 6/9

05.12.2024 12:03 — 👍 1 🔁 0 💬 1 📌 0

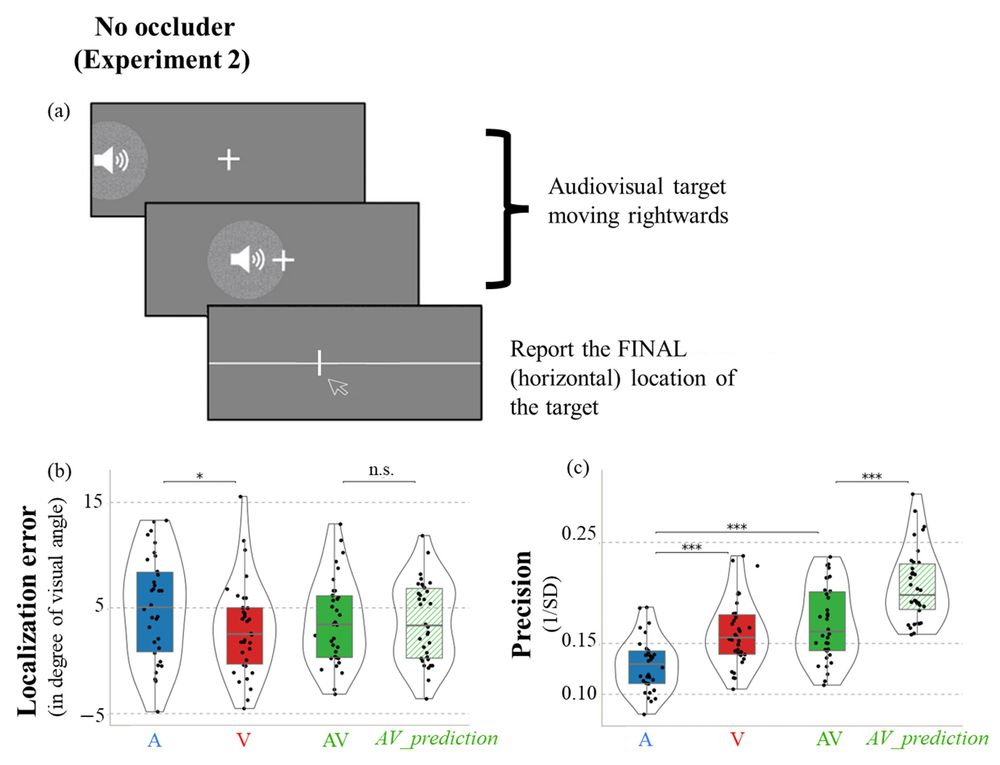

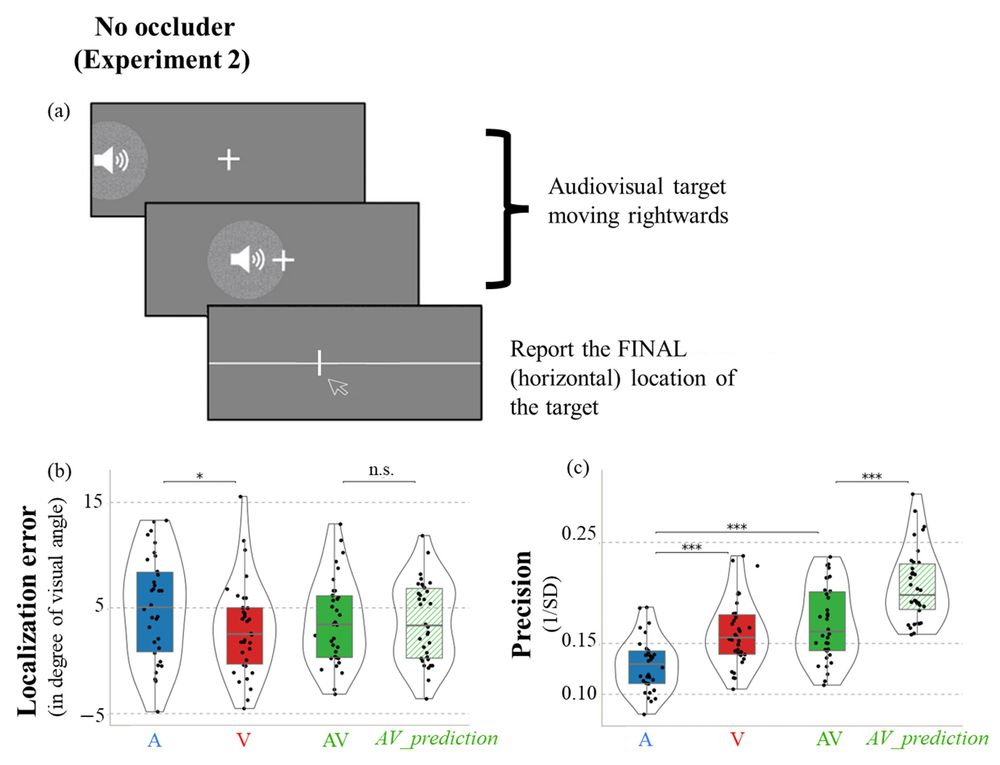

In Exp. 2, there was no occluder, so participants simply reported where the moving target disappeared from the screen. Here, although localization estimates were in line with MLE predictions, no multisensory precision benefit was found either. 5/9

05.12.2024 12:03 — 👍 1 🔁 0 💬 1 📌 0

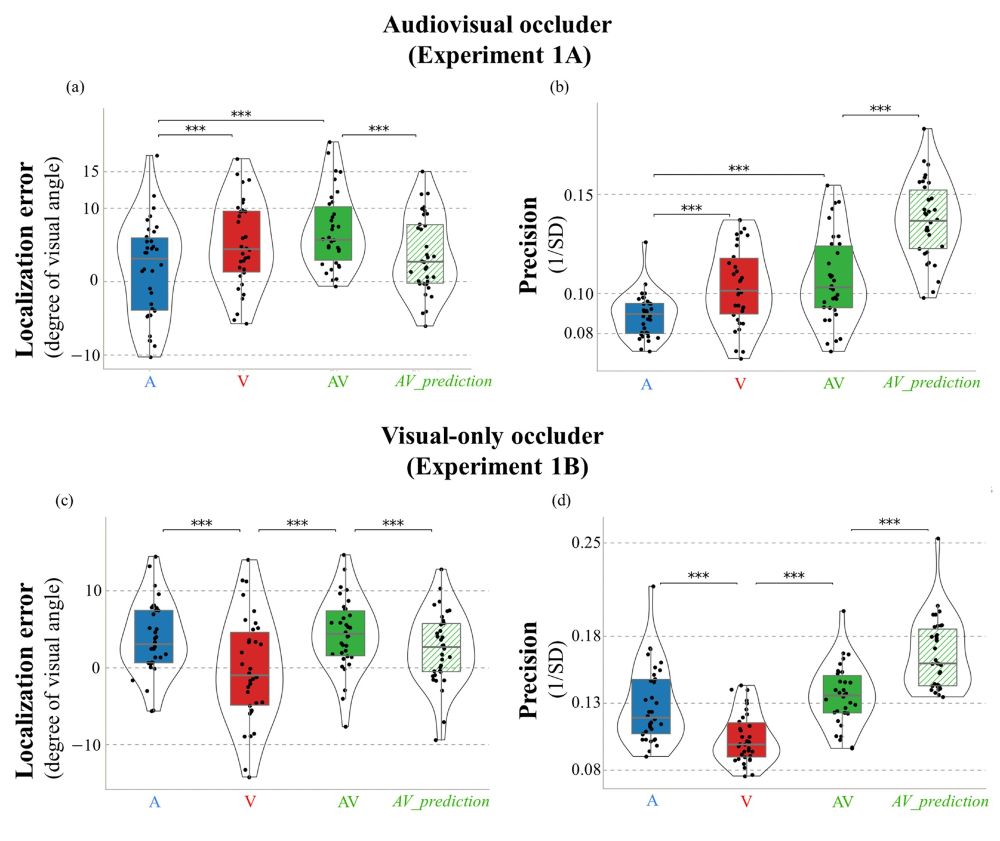

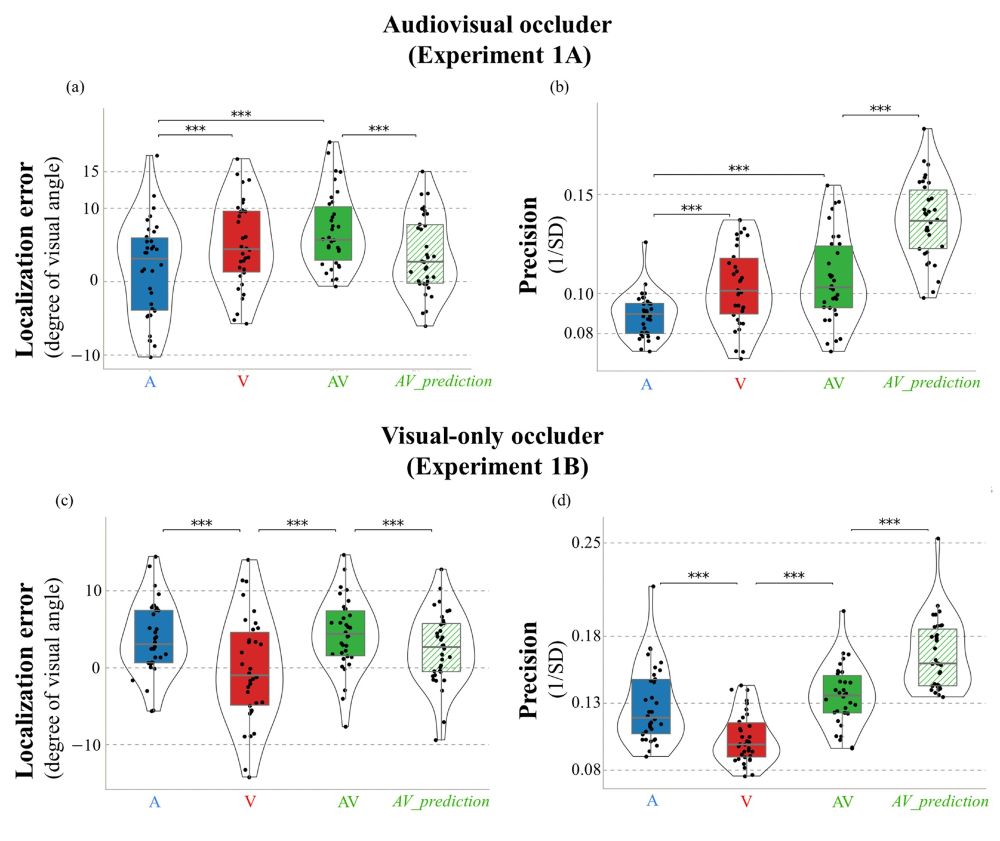

In these two experiments, we showed that participants do not seem to benefit from audiovisual information when tracking occluded objects. Instead, they flexibly prioritize one sense (vision in Exp. 1A, audition in Exp. 1B) over the other, in anticipation of modality-specific interference. 4/9

05.12.2024 12:03 — 👍 1 🔁 0 💬 1 📌 0

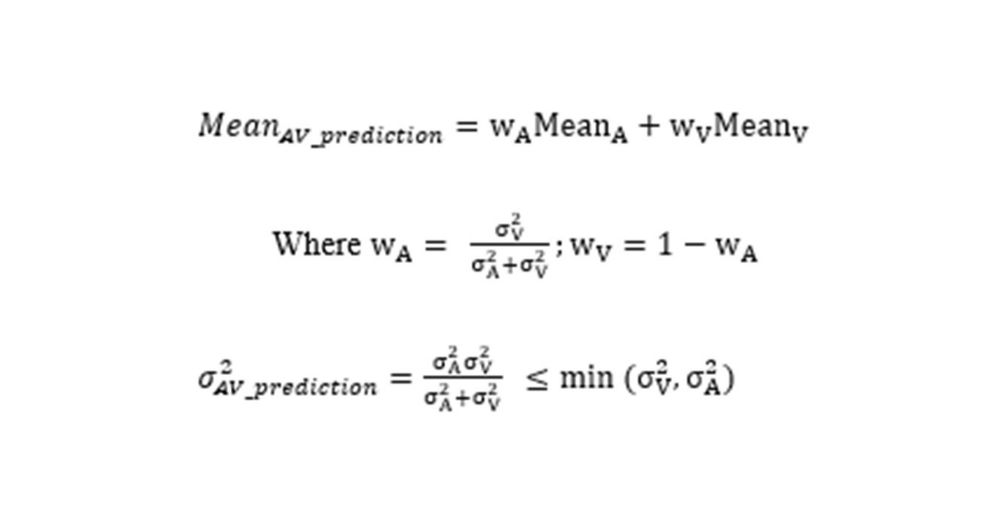

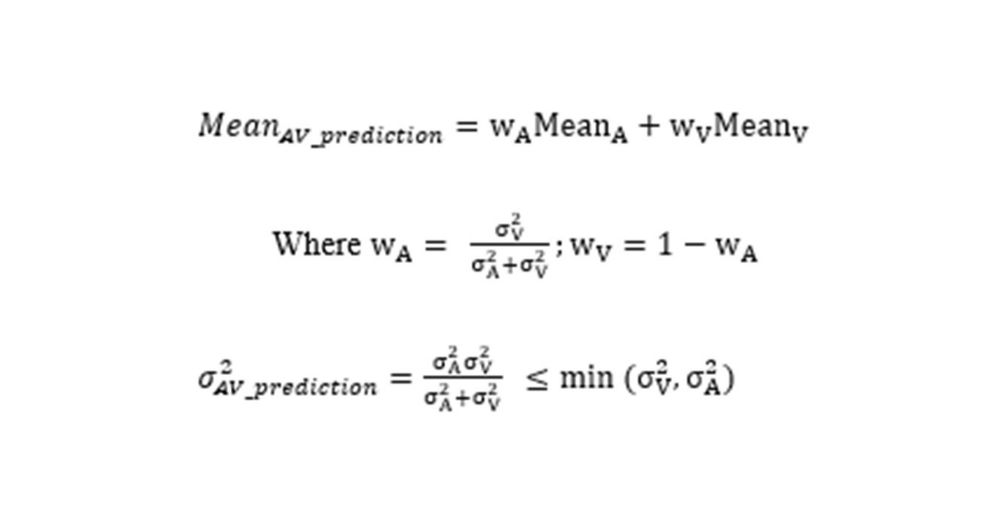

We asked whether observers optimally weigh the auditory & visual components of audiovisual stimuli. We therefore compared the observed data to the predictions of a maximum likelihood estimation (MLE) model, which weighs each unisensory input inversely to its uncertainty (variance). 3/9

05.12.2024 12:03 — 👍 1 🔁 0 💬 1 📌 0
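For readers unfamiliar with the MLE model mentioned in 3/9, here is a minimal sketch of the standard textbook cue-combination rule, assuming independent Gaussian noise in each modality. It is not the analysis code from the paper (that is in the OSF materials); the function name `mle_combine` and the example numbers are hypothetical and chosen only for illustration.

```python
def mle_combine(mu_a, var_a, mu_v, var_v):
    """Textbook MLE combination of an auditory and a visual location estimate.

    mu_a, mu_v   : unisensory location estimates (hypothetical units, e.g. degrees)
    var_a, var_v : variances (uncertainty) of those estimates, both > 0
    Assumes independent Gaussian noise in the two modalities.
    """
    # Each cue is weighted by its reliability (inverse variance), normalized to sum to 1.
    rel_a = 1.0 / var_a
    rel_v = 1.0 / var_v
    w_a = rel_a / (rel_a + rel_v)
    w_v = 1.0 - w_a

    # Predicted audiovisual estimate: reliability-weighted average of the two cues.
    mu_av = w_a * mu_a + w_v * mu_v

    # Predicted audiovisual variance: never larger than the smaller unisensory variance.
    var_av = (var_a * var_v) / (var_a + var_v)
    return mu_av, var_av, w_a, w_v


# Illustrative (made-up) numbers: vision is four times more reliable than audition.
mu_av, var_av, w_a, w_v = mle_combine(mu_a=10.0, var_a=4.0, mu_v=0.0, var_v=1.0)
print(f"weights: A={w_a:.2f}, V={w_v:.2f}; predicted mean={mu_av:.2f}, variance={var_av:.2f}")
```

Under this rule, a "multisensory precision benefit" means the observed audiovisual variance drops below the best unisensory variance, approaching `var_av`. Relying on a single modality would instead leave the variance at the level of that modality alone.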

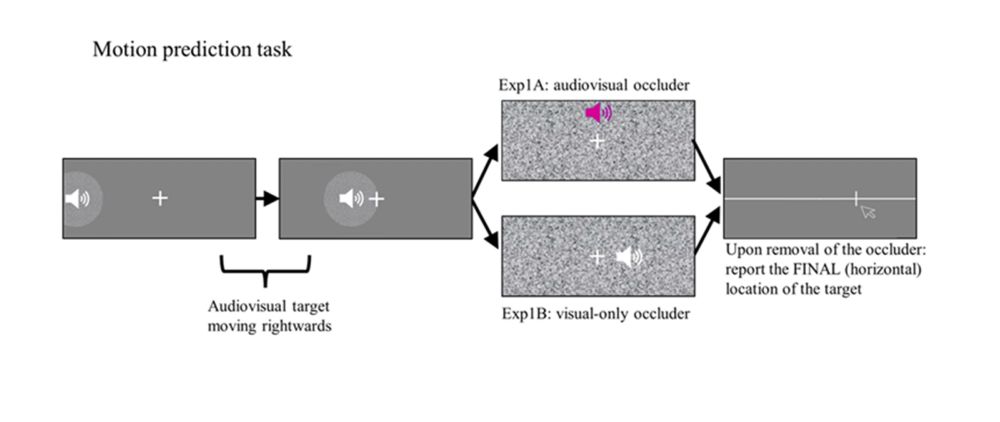

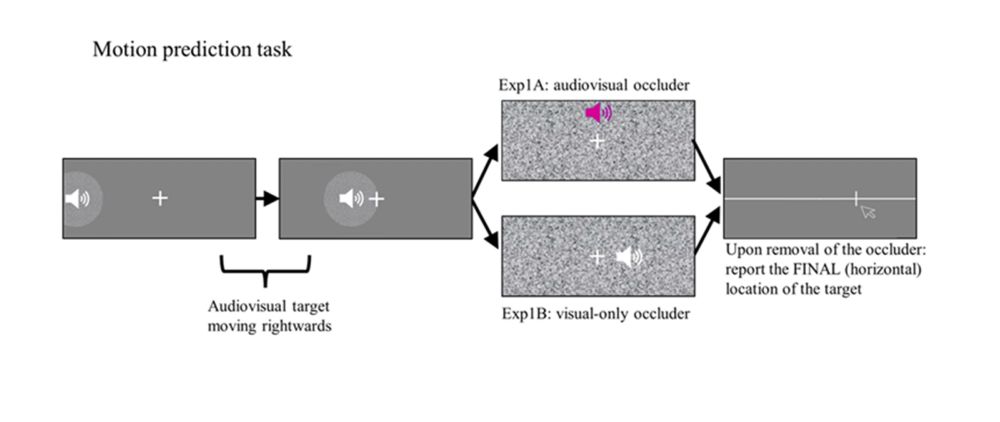

In Exp. 1A, moving targets (auditory, visual or audiovisual) were occluded by an audiovisual occluder, and their final locations had to be inferred from target speed and occlusion duration. Exp. 1B was identical to Exp. 1A, except that a visual-only occluder was used. 2/9

05.12.2024 12:03 — 👍 1 🔁 0 💬 1 📌 0


New paper accepted in JEP-General (preprint: osf.io/preprints/socarxiv/uvzdh) with @suryagayet.bsky.social & Nathan van der Stoep. We show that observers use both hearing & vision for localizing static objects, but rely on a single modality to report & predict the location of moving objects. 1/9

05.12.2024 12:03 — 👍 18 🔁 2 💬 1 📌 2
