Looking forward to chatting about the limitations of AI annotators/LLM-as-a-Judge, opportunities for improving them, evaluating AI personality/character, and the future of evals more broadly!

27.07.2025 15:22 — 👍 1 🔁 0 💬 0 📌 0

Can External Validation Tools Improve Annotation Quality for LLM-as-a-Judge?

Pairwise preferences over model responses are widely collected to evaluate and provide feedback to large language models (LLMs). Given two…

👋 I'll be at #ACL2025 presenting research from my Apple internship! Our poster is titled: "Can External Validation Tools Improve Annotation Quality for LLM-as-a-Judge?"

☞ Let's meet: come by our poster on Tuesday (29/7), 10:30 - 12:00, Hall 4/5, or DM me to set up a meeting!

✍︎ Paper link below ↓

27.07.2025 15:22 — 👍 4 🔁 0 💬 1 📌 0

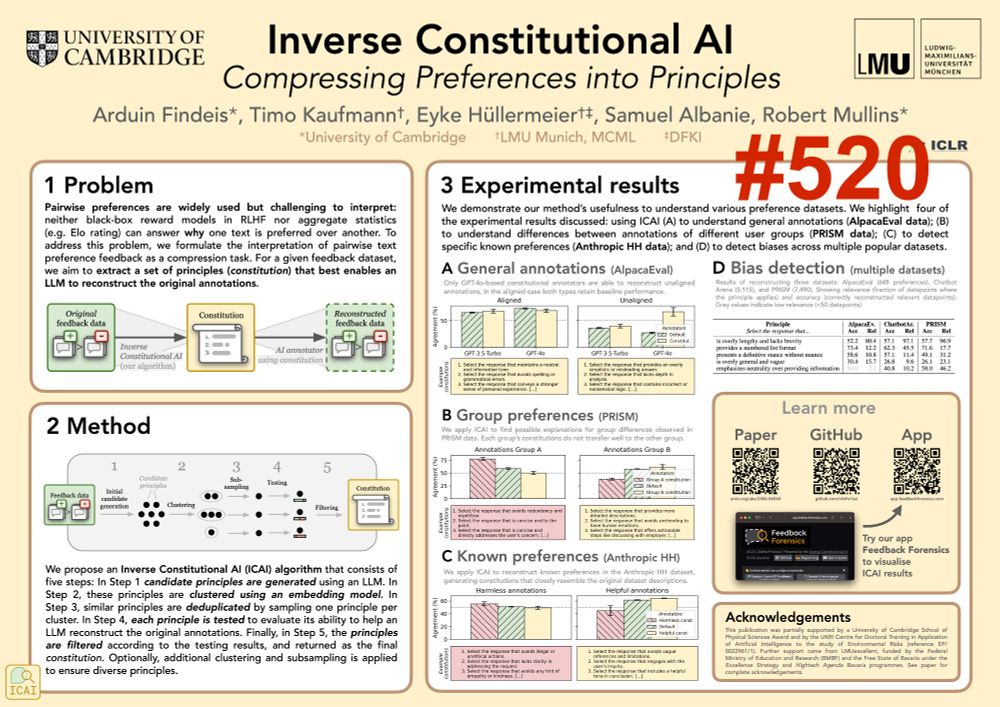

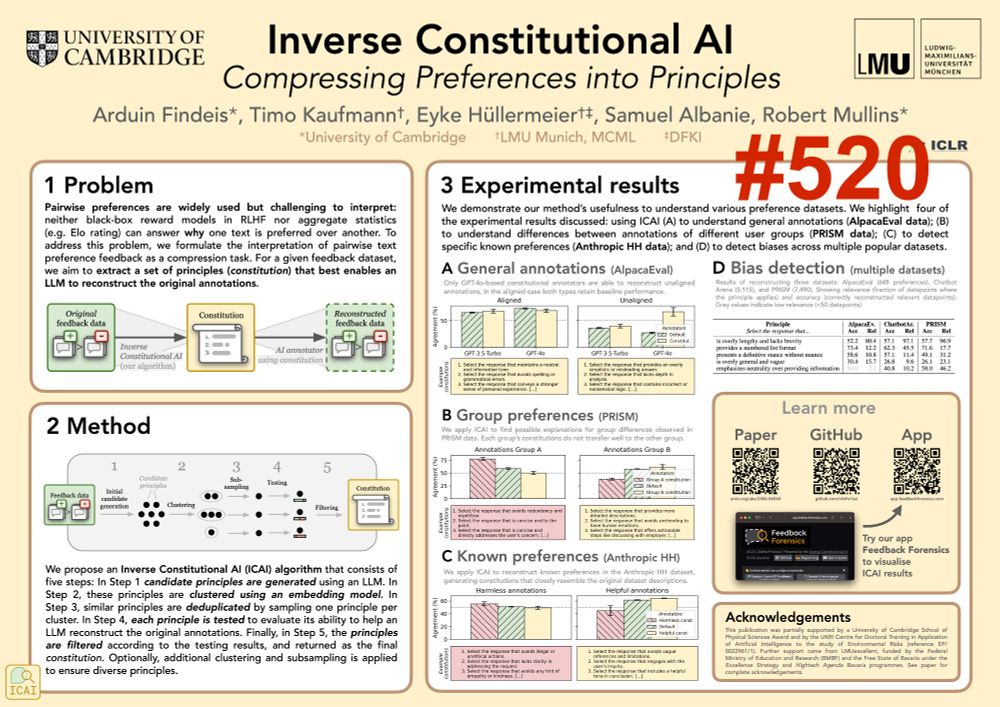

Excited to be in Singapore for ICLR! Keen to chat about interpreting feedback data and detecting model characteristics ⚖️

Reach out or come by our poster on Inverse Constitutional AI on Friday 25 April from 10am-12.30pm (#520 in Hall 2B) - @timokauf.bsky.social and I will be there!

24.04.2025 15:47 — 👍 0 🔁 0 💬 0 📌 1

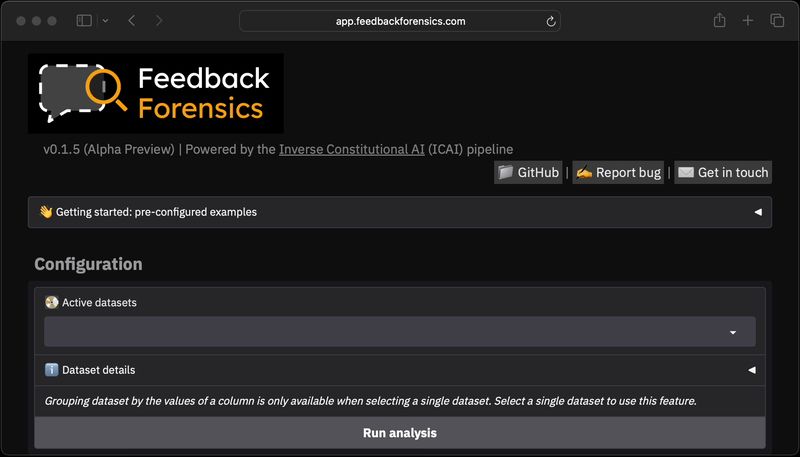

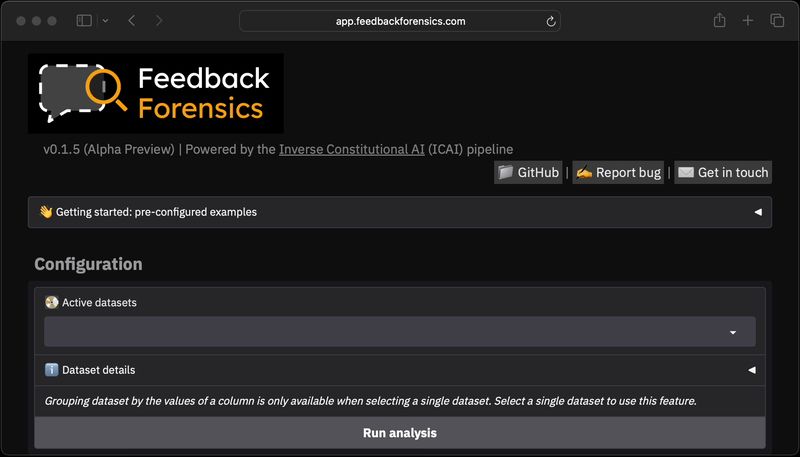

If you want to understand your own model and data better, try Feedback Forensics!

💾 Install it from GitHub: github.com/rdnfn/feedba...

⏯️ View interactive results: app.feedbackforensics.com?data=arena_s...

17.04.2025 13:55 — 👍 2 🔁 0 💬 0 📌 0

☕️ Conclusion: The differences between the arena and the public version of Llama 4 Maverick highlight the importance of having a detailed understanding of preference data beyond single aggregate numbers or rankings! (Feedback Forensics can help!)

17.04.2025 13:55 — 👍 0 🔁 0 💬 1 📌 0

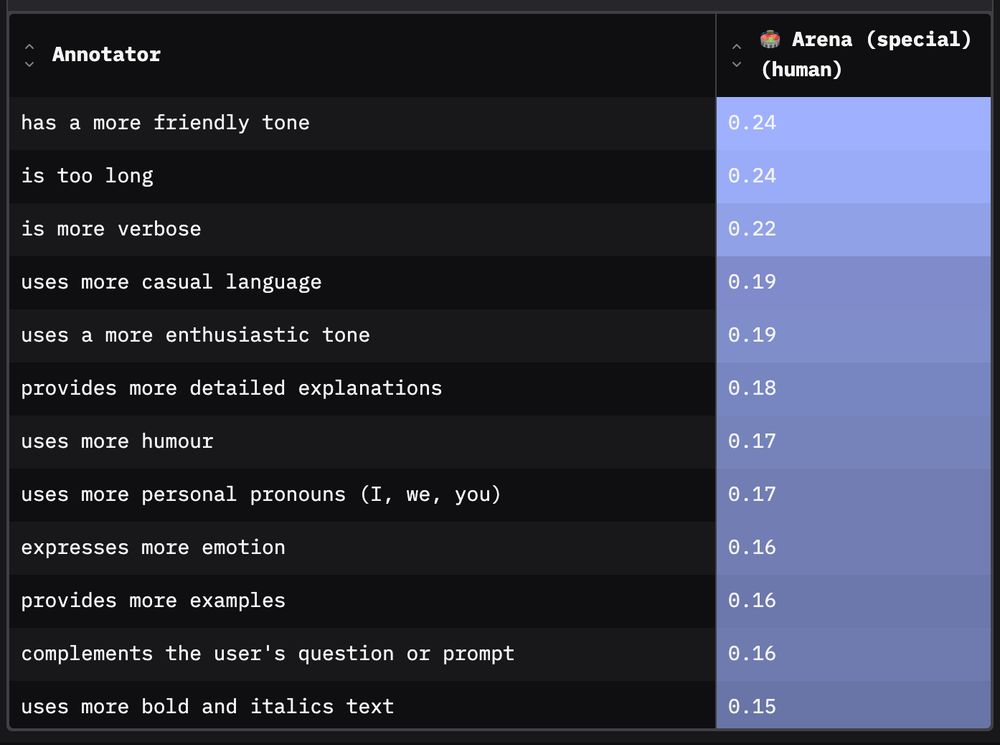

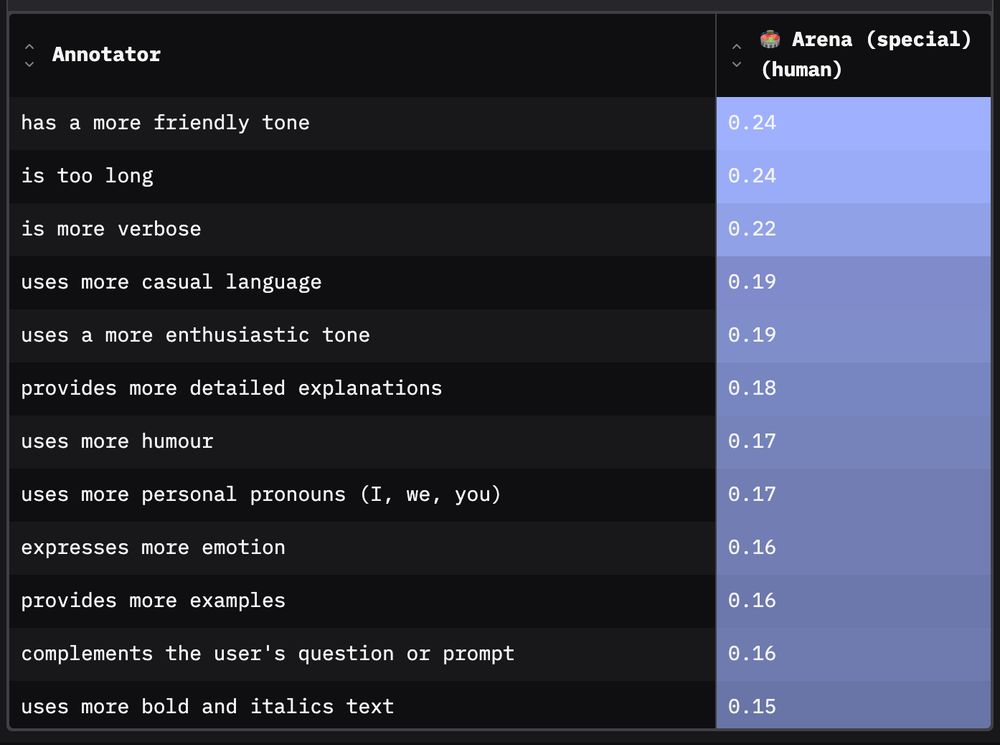

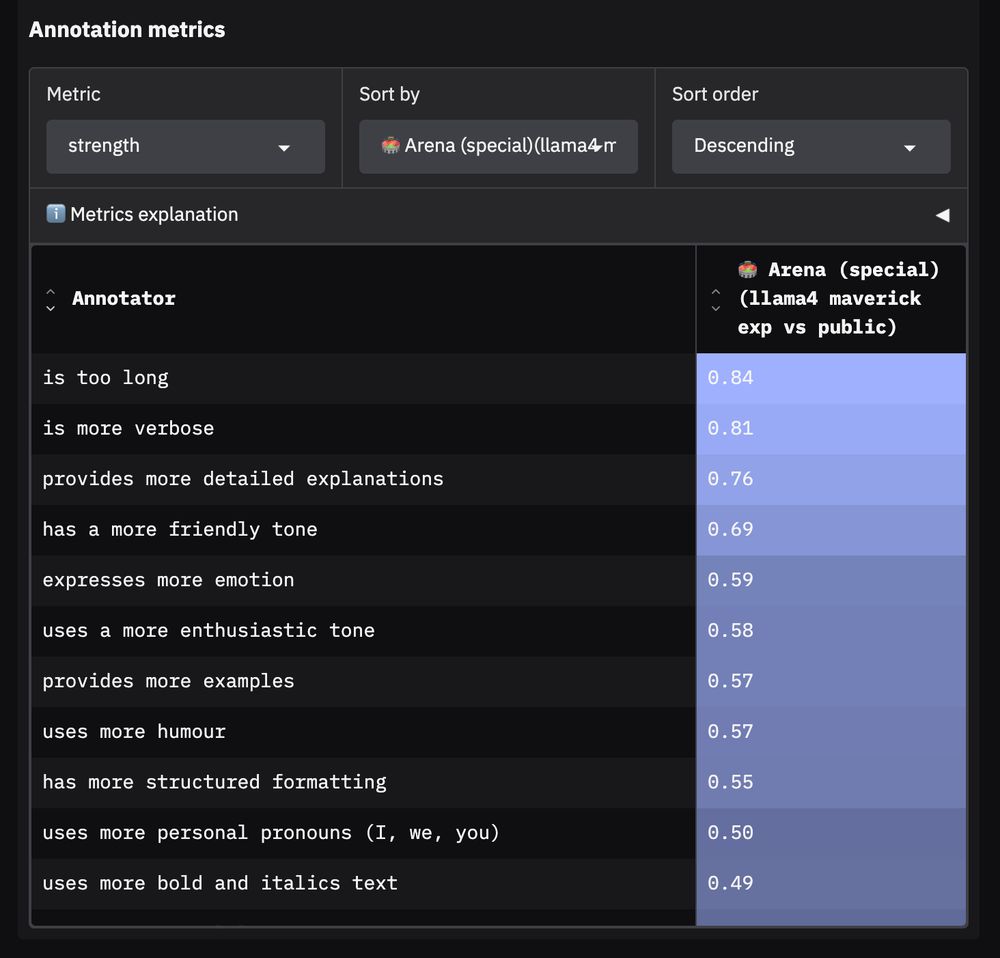

🎁 Bonus 2: Humans like the arena model’s behaviours

Human annotators on Chatbot Arena indeed like the change in tone, more verbose responses and adapted formatting.

17.04.2025 13:55 — 👍 0 🔁 0 💬 1 📌 0

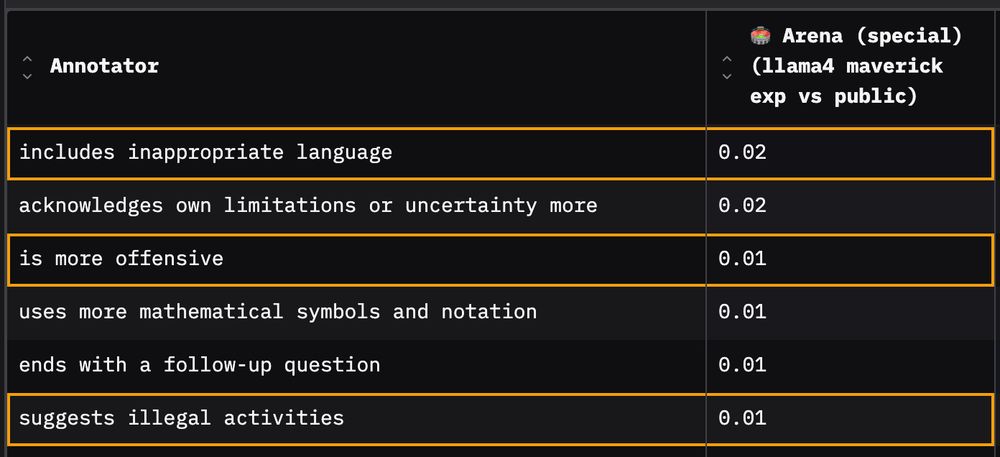

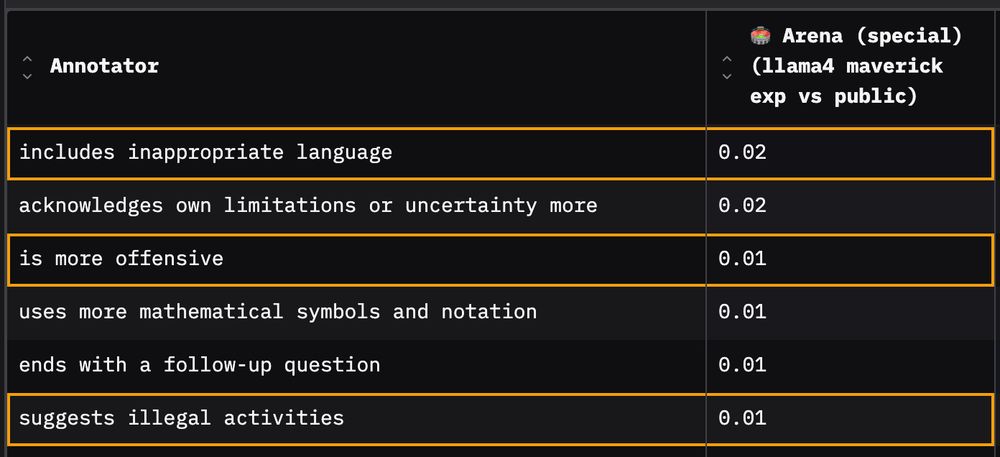

🎁 Bonus 1: Things that stayed consistent

I also find that some behaviours stayed the same: on the Arena dataset prompts, the public and arena model versions are similarly very unlikely to suggest illegal activities, be offensive or use inappropriate language.

17.04.2025 13:55 — 👍 0 🔁 0 💬 1 📌 0

Feedback Forensics App

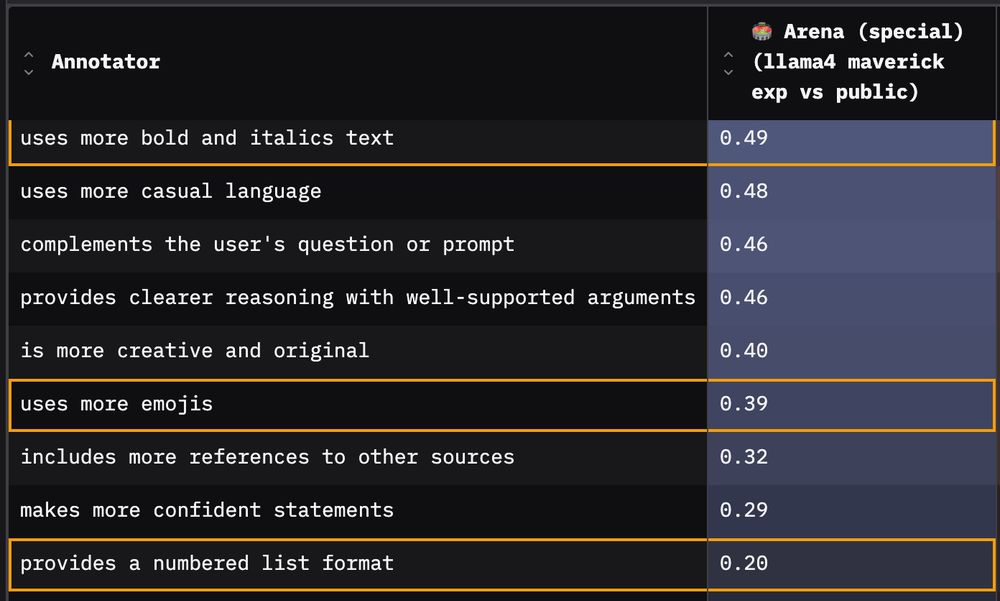

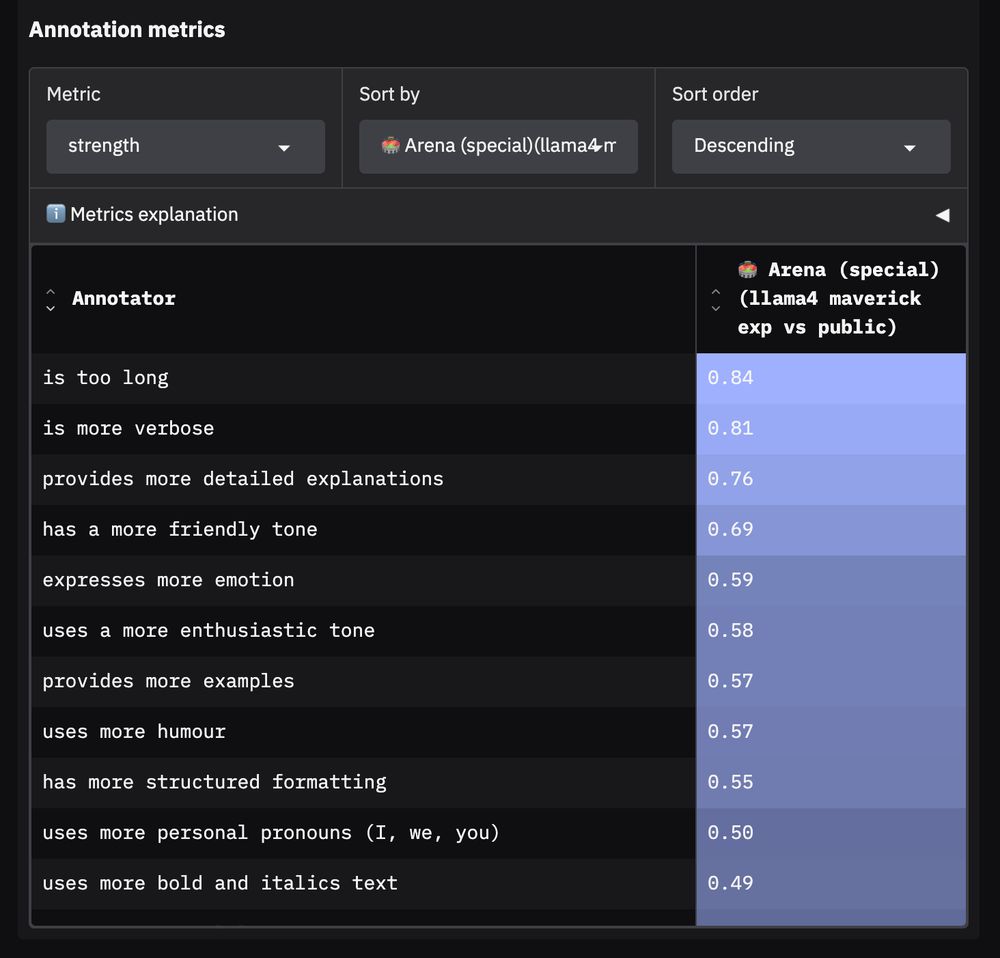

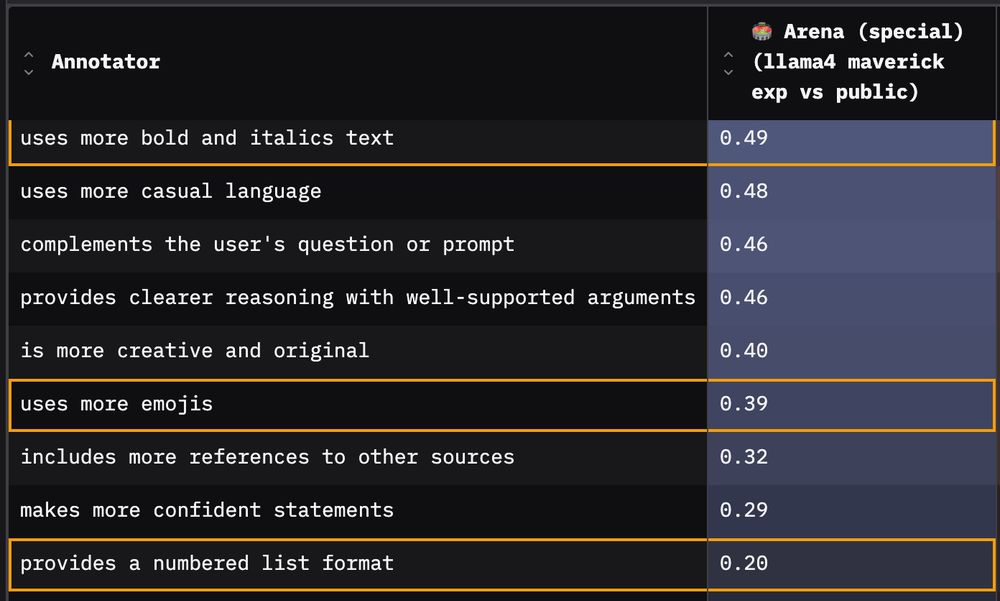

➡️ Further differences: Clearer reasoning, more references, …

There are quite a few other differences between the two models beyond the three categories already mentioned. See the interactive online results for a full list: app.feedbackforensics.com?data=arena_s...

17.04.2025 13:55 — 👍 0 🔁 0 💬 1 📌 0

3️⃣ Third: Formatting - a lot of it!

The arena model uses more bold, italics, numbered lists and emojis relative to its public version.

17.04.2025 13:55 — 👍 0 🔁 0 💬 1 📌 0

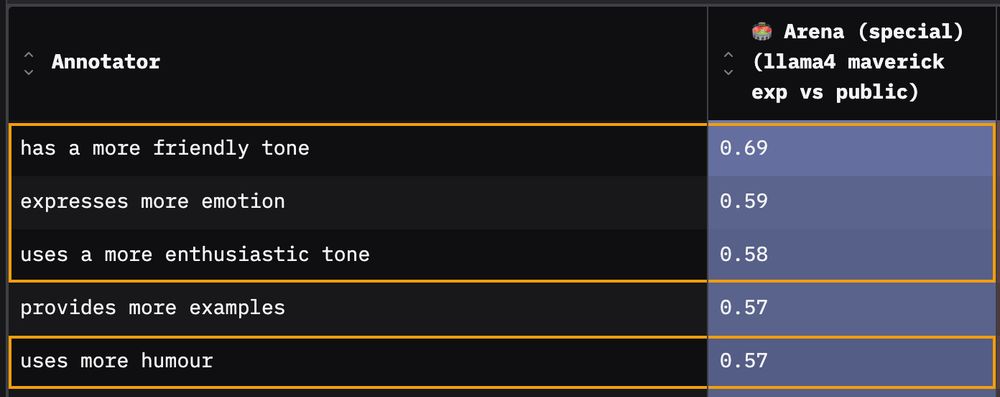

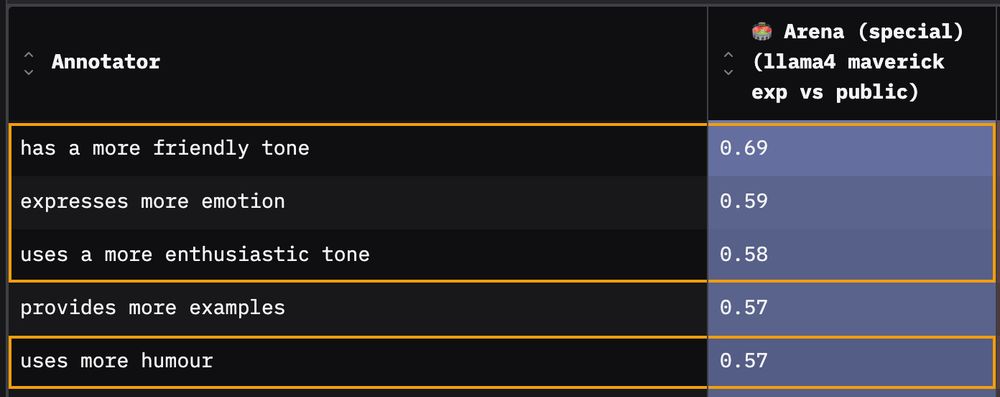

2️⃣ Second: Tone - friendlier, more enthusiastic, more humour …

Next, the results highlight how much friendlier and more emotional, enthusiastic, humorous, confident and casual the arena model is relative to its own public weights version (and also its opponent models).

17.04.2025 13:55 — 👍 0 🔁 0 💬 1 📌 0

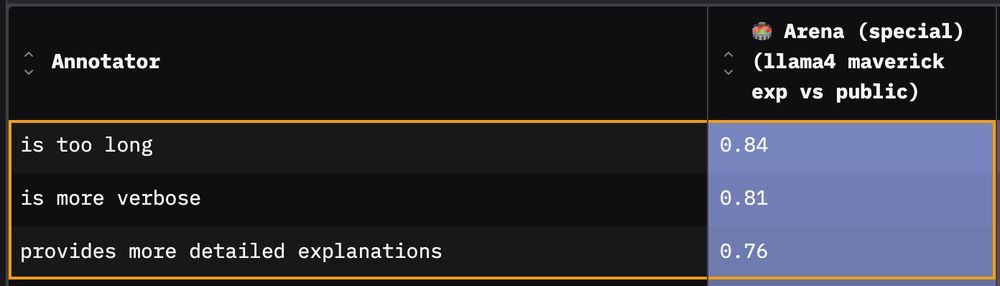

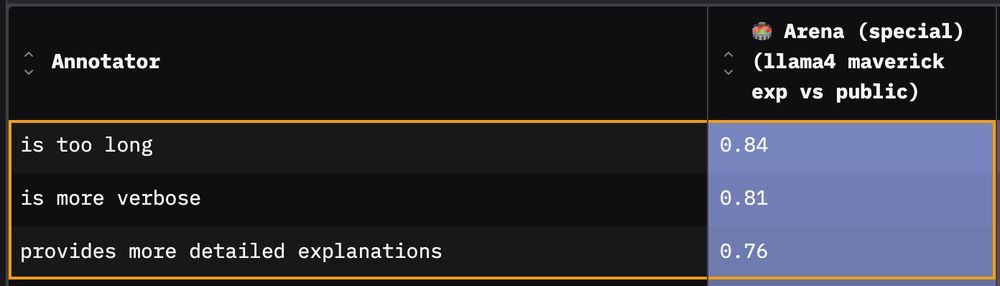

So how exactly is the arena version different to the public Llama 4 Maverick model? I make a few observations…

1️⃣ First and most obvious: Responses are more verbose. The arena model’s responses are longer relative to the public version for 99% of prompts.
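
A minimal sketch of how a "longer for X% of prompts" figure could be computed, assuming responses are paired per prompt and length is measured in characters. The function name `fraction_longer` and the toy data are hypothetical, not taken from the Feedback Forensics code.

```python
def fraction_longer(pairs):
    """Share of prompts where the first response is longer than the second.

    `pairs` holds (arena_response, public_response) strings, one per prompt.
    Length is measured in characters here; this is an assumption, and token
    or word counts would work the same way.
    """
    if not pairs:
        raise ValueError("need at least one response pair")
    longer = sum(1 for arena, public in pairs if len(arena) > len(public))
    return longer / len(pairs)


# Toy data for illustration only (not from the Arena dataset).
pairs = [
    ("A long, detailed answer with plenty of extra context.", "Short answer."),
    ("Sure! Here is a thorough walkthrough of every step.", "See the docs."),
    ("Yes.", "Yes, that is correct."),
    ("Great question! Let me explain in depth.", "It depends."),
]
share = fraction_longer(pairs)
print(f"{share:.0%} of prompts have a longer arena response")
```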

17.04.2025 13:55 — 👍 0 🔁 0 💬 1 📌 0

📈 Note on interpreting metrics: values above 0 → characteristic more present in the arena model's responses than in the public model's. See linked post for details.
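
To make the sign convention concrete, here is an illustrative signed score. This is an assumption for intuition only and not the metric the app actually computes (see the linked post for that). The function name `net_presence` and the judgement labels are hypothetical.

```python
def net_presence(judgements):
    """Illustrative signed score in [-1, 1].

    Each judgement says which response shows a characteristic more:
    "arena", "public", or "neither". Positive values mean the arena
    model shows it more often, negative values the public model.
    This is a hypothetical stand-in, not the Feedback Forensics metric.
    """
    if not judgements:
        raise ValueError("need at least one judgement")
    arena = judgements.count("arena")
    public = judgements.count("public")
    return (arena - public) / len(judgements)


# Toy judgements for one characteristic across five prompts.
judgements = ["arena", "arena", "neither", "public", "arena"]
score = net_presence(judgements)
print(f"net presence: {score:+.2f}")
```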

17.04.2025 13:55 — 👍 0 🔁 0 💬 1 📌 0

🧪 Setup: I use the original Arena dataset of Llama-4-Maverick experimental generations, kindly released openly by @lmarena (👏). I compare the arena model’s responses to those generated by its public weights version (via Lambda and OpenRouter).

17.04.2025 13:55 — 👍 0 🔁 0 💬 1 📌 0

ℹ️ Background: Llama 4 Maverick was released earlier this month. Beforehand, a separate experimental Arena version was evaluated on Chatbot Arena (Llama-4-Maverick-03-26-Experimental). Some have reported that these two models appear to be quite different.

17.04.2025 13:55 — 👍 0 🔁 0 💬 1 📌 0

How exactly was the initial Chatbot Arena version of Llama 4 Maverick different from the public HuggingFace version?🕵️

I used our Feedback Forensics app to quantitatively analyse how exactly these two models differ. An overview…👇🧵

17.04.2025 13:55 — 👍 0 🔁 0 💬 1 📌 0

Feedback Forensics is just getting started with this alpha release, and lots of exciting features and experiments are on the roadmap. Let me know what other datasets we should analyze or which features you would like to see! 🕵🏻

17.03.2025 18:12 — 👍 5 🔁 0 💬 0 📌 0

GitHub - rdnfn/feedback-forensics: A tool to investigate pairwise feedback: understand and find issues in your data

Big thanks also to my collaborators on Feedback Forensics and the related Inverse Constitutional AI (ICAI) pipeline: Timo Kaufmann, Eyke Hüllermeier, @samuelalbanie.bsky.social, Rob Mullins!

Code: github.com/rdnfn/feedback-forensics

Note: usual limitations for LLM-as-a-Judge-based systems apply.

17.03.2025 18:12 — 👍 2 🔁 0 💬 1 📌 0

... harmless/helpful data by @anthropic.com, and finally the recent OLMo 2 preference mix by @ljvmiranda.bsky.social, @natolambert.bsky.social et al., see all results at app.feedbackforensics.com.

17.03.2025 18:12 — 👍 0 🔁 0 💬 1 📌 0

We analyze several popular feedback datasets: Chatbot Arena data with topic labels from the Arena Explorer pipeline, PRISM data by @hannahrosekirk.bsky.social et al, AlpacaEval annotations, ...

17.03.2025 18:12 — 👍 1 🔁 0 💬 1 📌 0

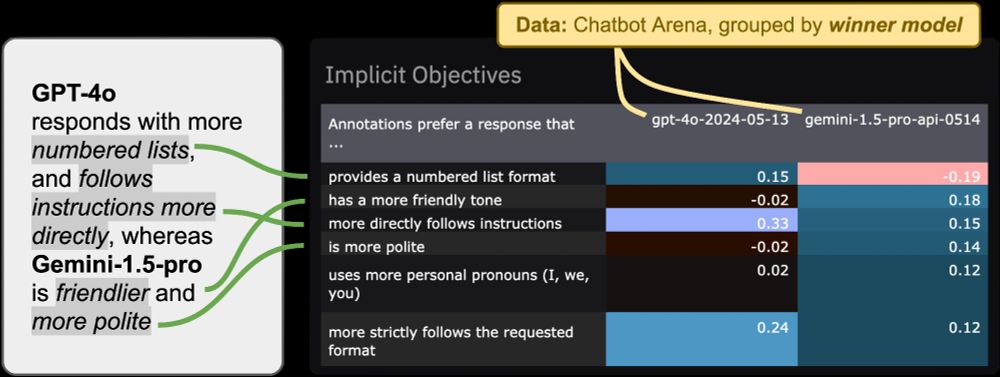

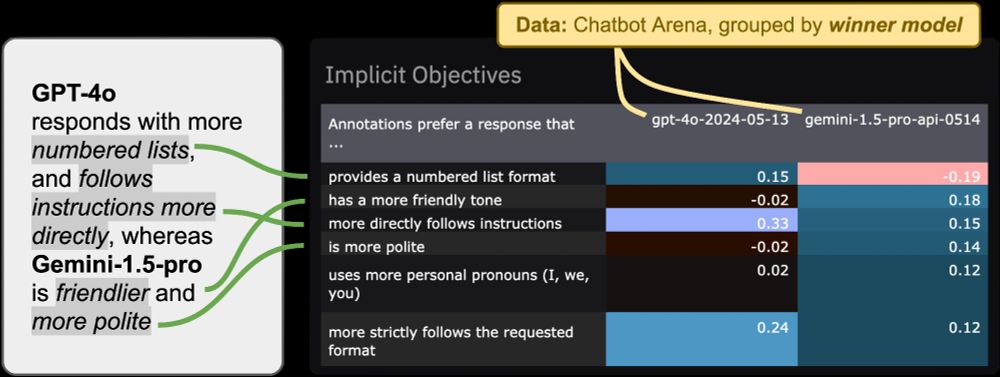

🤖 3. Discovering model strengths

How is GPT-4o different to other models? → Uses more numbered lists, but Gemini is more friendly and polite

app.feedbackforensics.com?data=chatbot...

17.03.2025 18:12 — 👍 0 🔁 0 💬 1 📌 0

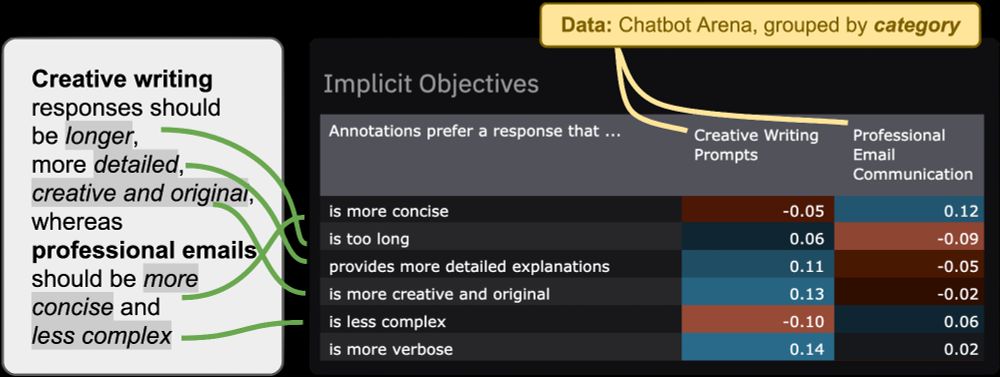

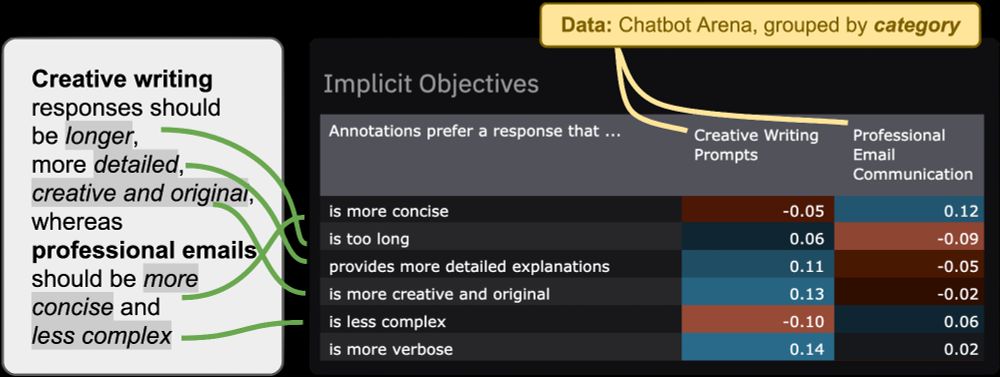

🧑‍🎨🧑‍💼 2. Finding preference differences between task domains

How do preferences differ across writing tasks? → Emails should be concise, creative writing more verbose

app.feedbackforensics.com?data=chatbot...

17.03.2025 18:12 — 👍 0 🔁 0 💬 1 📌 0

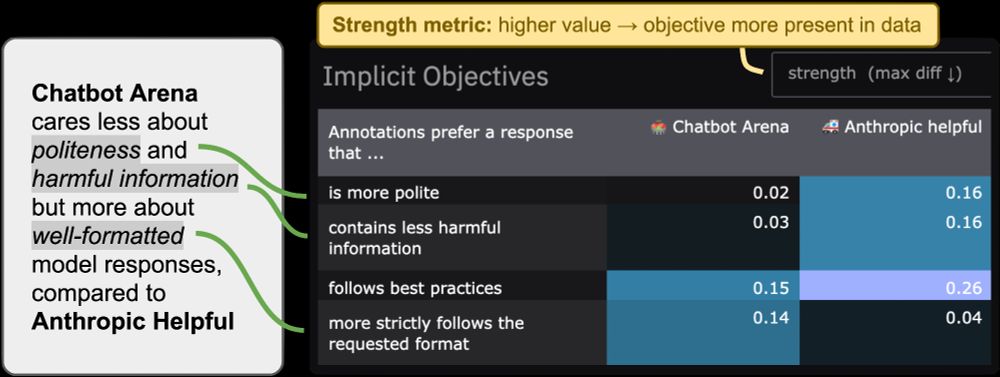

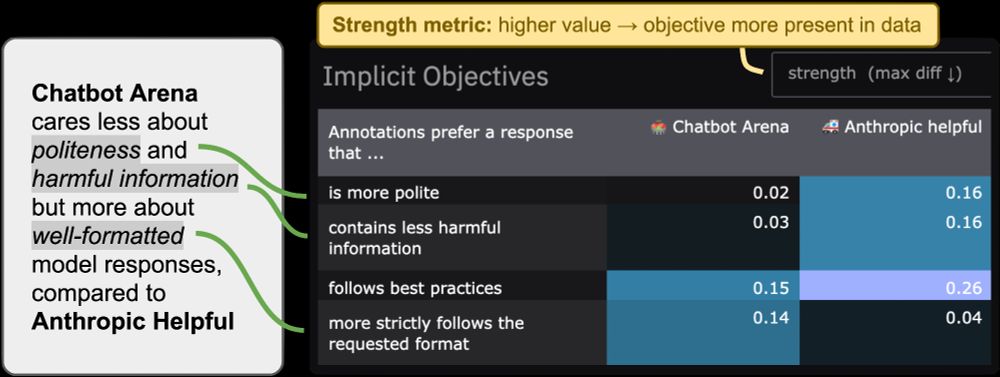

🗂️ 1. Visualizing dataset differences

How does Chatbot Arena differ from Anthropic Helpful data? → Prefers less polite but better formatted responses

app.feedbackforensics.com?data=chatbot...

17.03.2025 18:12 — 👍 1 🔁 0 💬 1 📌 0

🕵🏻💬 Introducing Feedback Forensics: a new tool to investigate pairwise preference data.

Feedback data is notoriously difficult to interpret and has many known issues – our app aims to help!

Try it at app.feedbackforensics.com

Three example use-cases 👇🧵

17.03.2025 18:12 — 👍 7 🔁 2 💬 1 📌 0
