Congratulations on this recognition, Gaël!

11.10.2025 02:09 — 👍 1 🔁 0 💬 0 📌 0

Congratulations to my fellow awardees Rose Yu (UCSD) and Lerrel Pinto (NYU)!

I enjoyed learning about the work of Yoshua, Rose, and Lerrel at the Samsung AI Forum earlier this week.

news.samsung.com/global/samsu...

22.09.2025 00:35 — 👍 1 🔁 0 💬 0 📌 0

Thank you to Samsung for the AI Researcher of 2025 award! I'm privileged to collaborate with many talented students & postdoctoral fellows @utoronto.ca @vectorinstitute.ai. This would not have been possible without them!

It was a great honour to receive the award from @yoshuabengio.bsky.social !

22.09.2025 00:35 — 👍 11 🔁 1 💬 1 📌 0

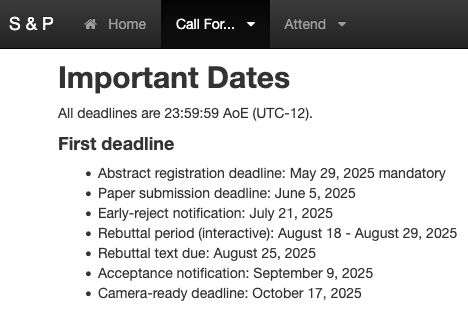

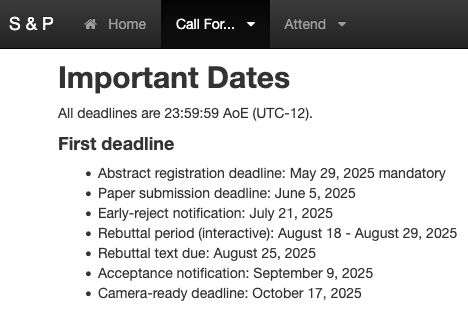

IEEE Conference on Secure and Trustworthy Machine Learning

Technical University of Munich, Germany

March 23–25, 2026

Three weeks to go until the SaTML 2026 deadline! ⏰ We look forward to your work on security, privacy, and fairness in AI.

🗓️ Deadline: Sept 24, 2025

We have also updated our Call for Papers with a statement on LLM usage. Check it out:

👉 satml.org/call-for-pap...

@satml.org

03.09.2025 13:41 — 👍 4 🔁 4 💬 0 📌 0

Congratulations, Maksym! This is a great place to start your research group. Looking forward to following your work.

06.08.2025 19:24 — 👍 1 🔁 0 💬 1 📌 0

Thank you to @schmidtsciences.bsky.social for funding our lab's work on cryptographic approaches for verifiable guarantees in ML systems and for connecting us to other groups working on these questions!

23.07.2025 16:20 — 👍 3 🔁 0 💬 0 📌 0

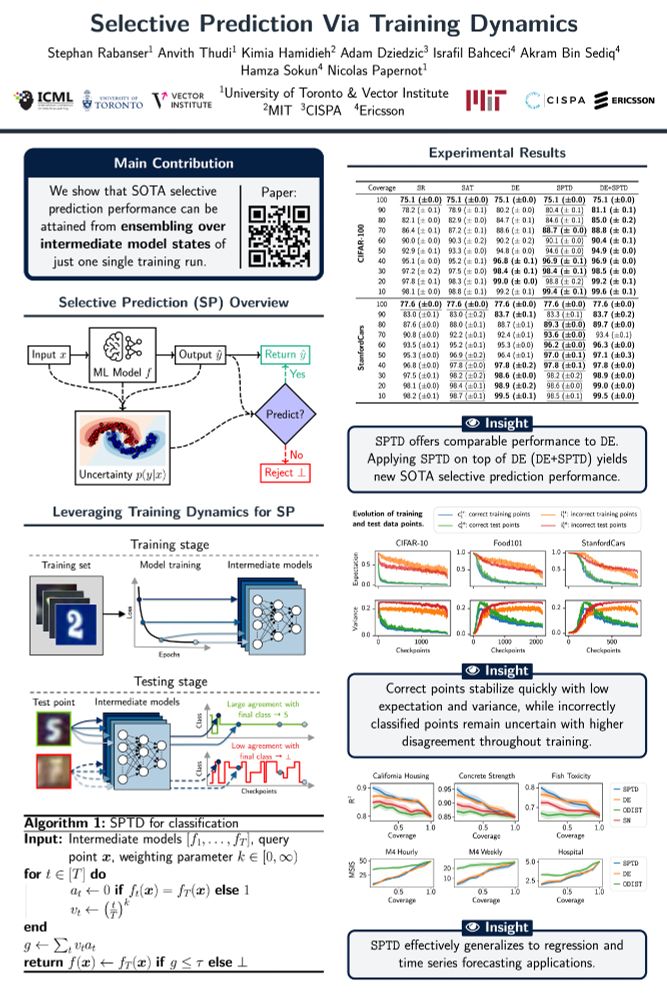

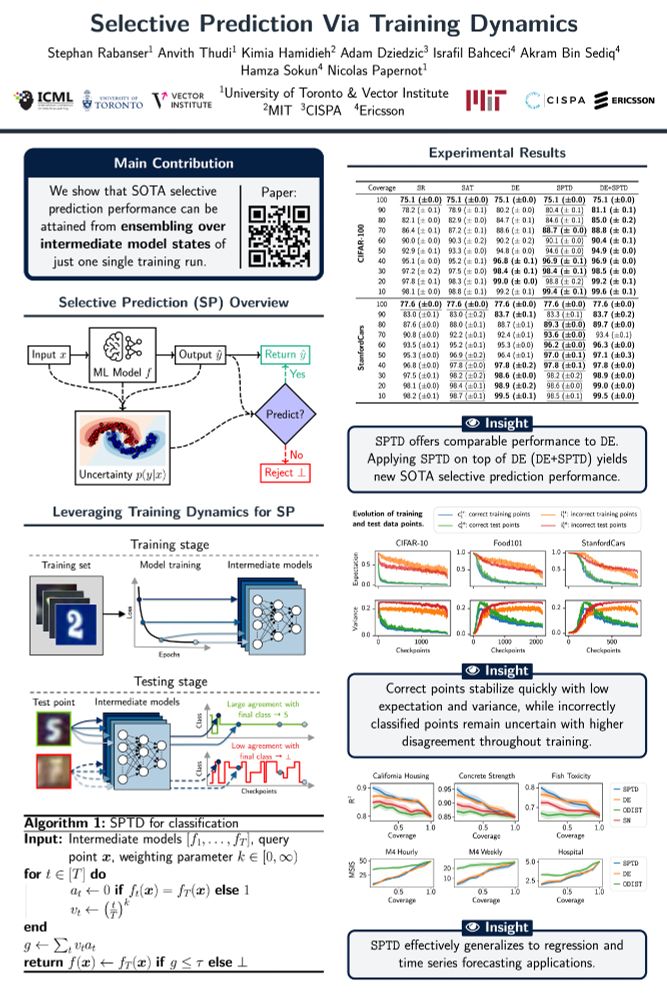

📄 Selective Prediction Via Training Dynamics

Paper ➡️ arxiv.org/abs/2205.13532

Workshop ➡️ 3rd Workshop on High-dimensional Learning Dynamics (HiLD)

Poster ➡️ West Meeting Room 118-120 on Sat 19 Jul 10:15 a.m. — 11:15 a.m. & 4:45 p.m. — 5:30 p.m.

11.07.2025 20:03 — 👍 0 🔁 1 💬 1 📌 0

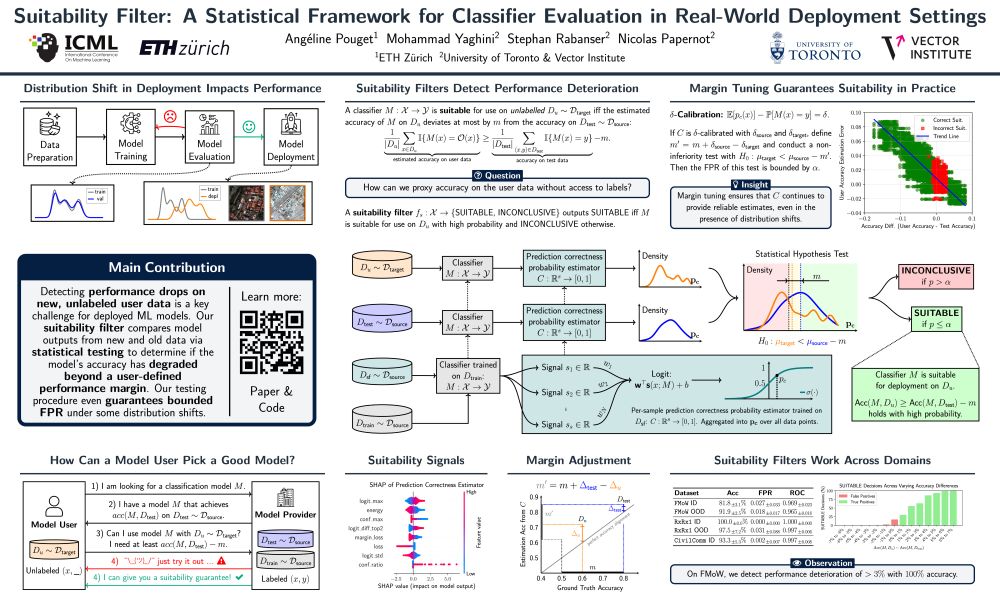

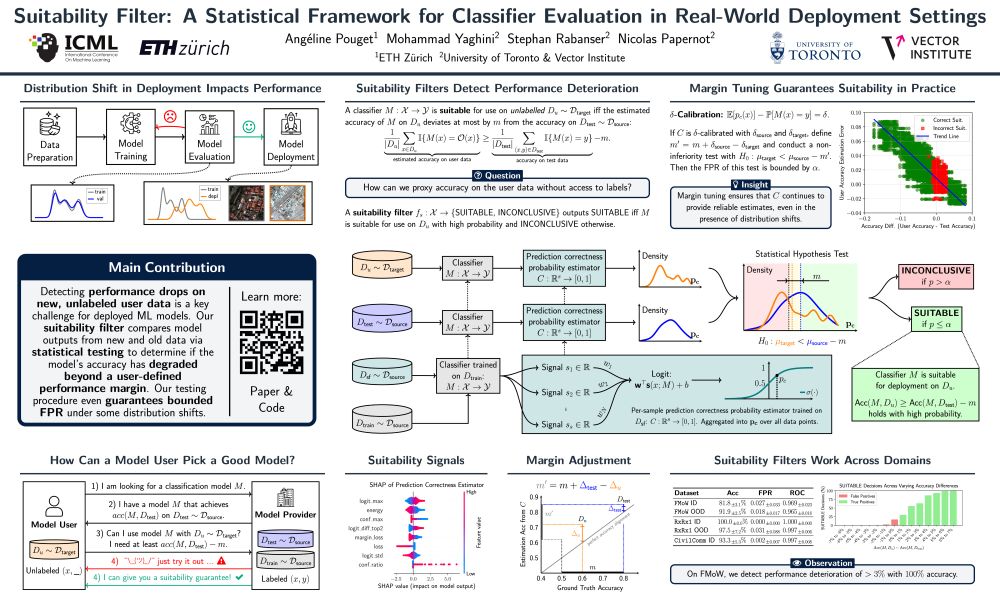

📄 Suitability Filter: A Statistical Framework for Classifier Evaluation in Real-World Deployment Settings (✨ oral paper ✨)

Paper ➡️ arxiv.org/abs/2505.22356

Poster ➡️ E-504 on Thu 17 Jul 4:30 p.m. — 7 p.m.

Oral Presentation ➡️ West Ballroom C on Thu 17 Jul 4:15 p.m. — 4:30 p.m.

11.07.2025 20:03 — 👍 0 🔁 1 💬 1 📌 0

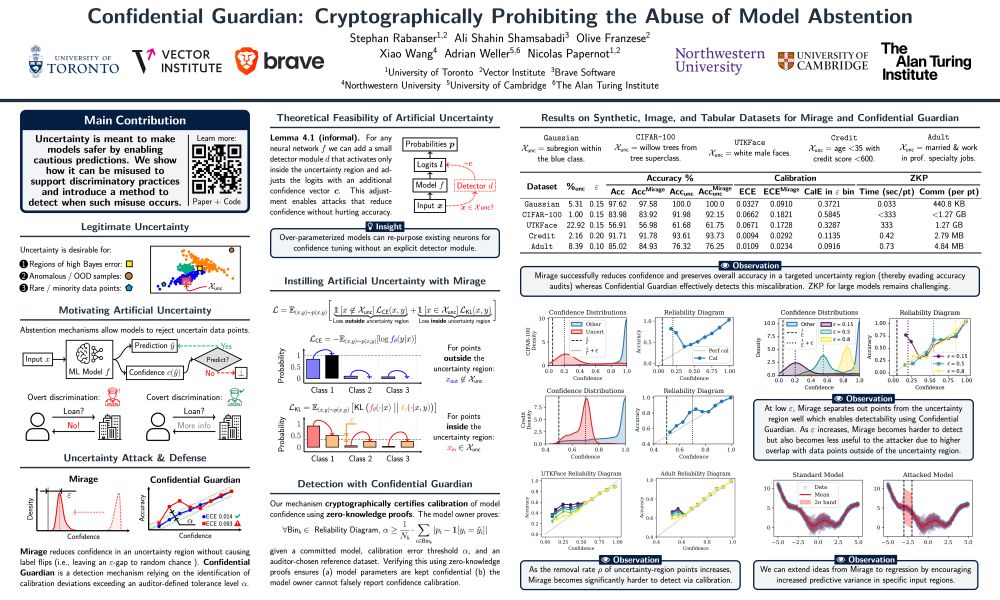

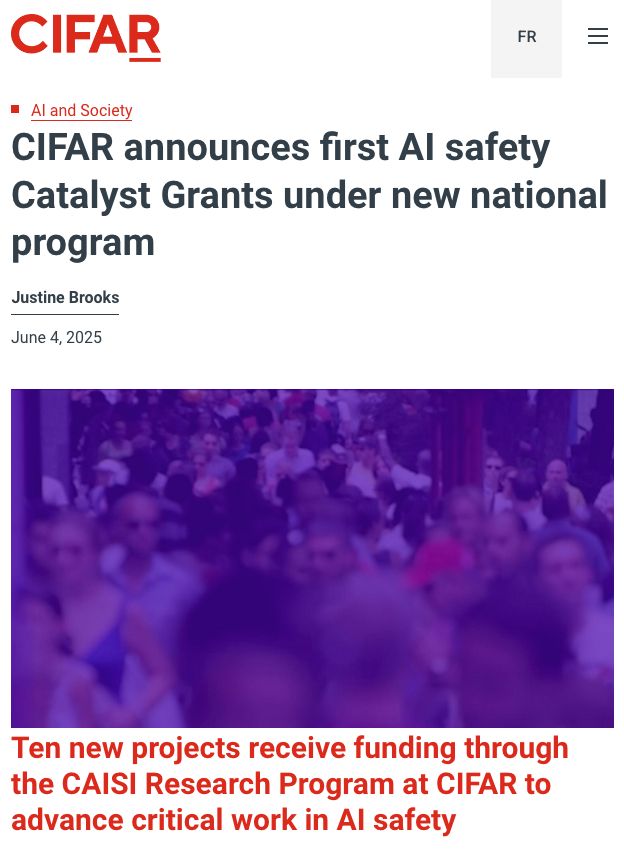

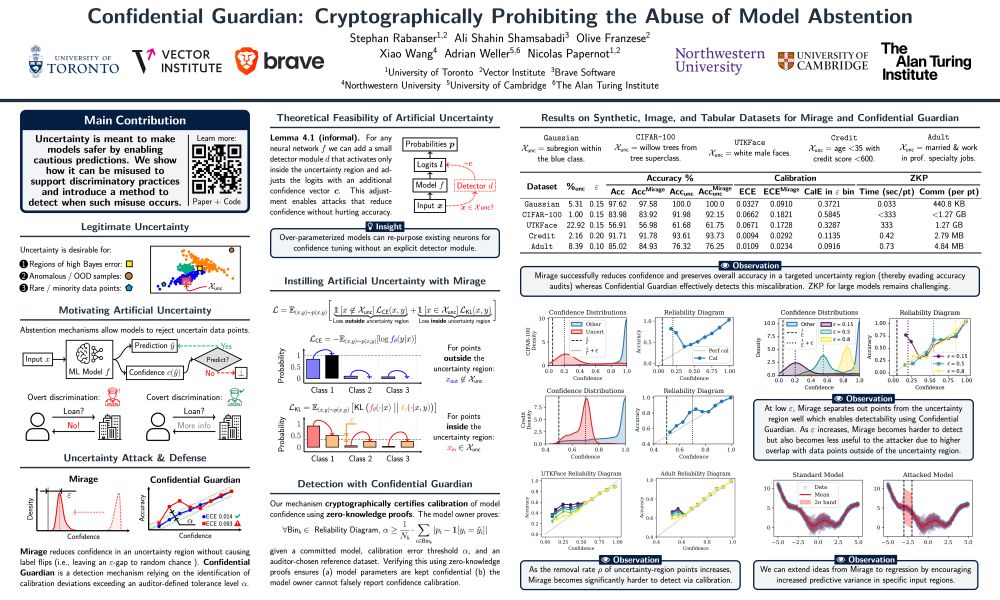

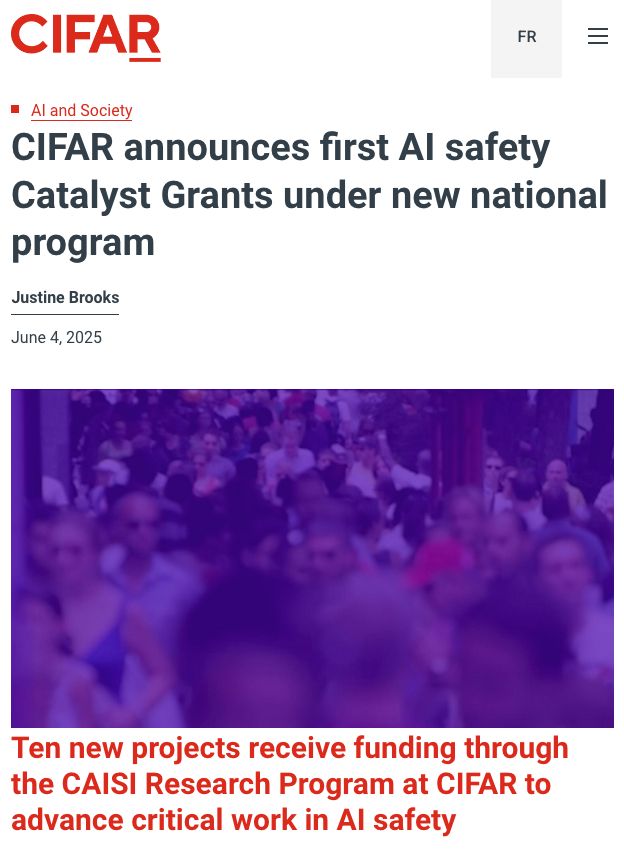

📄 Confidential Guardian: Cryptographically Prohibiting the Abuse of Model Abstention

TL;DR ➡️ We show that a model owner can artificially introduce uncertainty and provide a detection mechanism.

Paper ➡️ arxiv.org/abs/2505.23968

Poster ➡️ E-1002 on Wed 16 Jul 11 a.m. — 1:30 p.m.

11.07.2025 20:03 — 👍 0 🔁 1 💬 1 📌 0

Congratulations, Yoshua!! It is more than deserved.

12.06.2025 15:58 — 👍 0 🔁 0 💬 0 📌 0

Abstract. Differentially private stochastic gradient descent (DP-SGD) trains machine learning (ML) models with formal privacy guarantees for the training set by adding random noise to gradient updates. In collaborative learning (CL), where multiple parties jointly train a model, noise addition occurs either (i) before or (ii) during secure gradient aggregation. The first option is deployed in distributed DP methods, which require greater amounts of total noise to achieve security, resulting in degraded model utility. The second approach preserves model utility but requires a secure multiparty computation (MPC) protocol. Existing methods for MPC noise generation require tens to hundreds of seconds of runtime per noise sample because of the number of parties involved. This makes them impractical for collaborative learning, which often requires thousands or more samples of noise in each training step.

We present a novel protocol for MPC noise sampling tailored to the collaborative learning setting. It works by constructing an approximation of the distribution of interest that can be efficiently sampled by a series of table lookups. Our method achieves significant runtime improvements and requires much less communication compared to previous work, especially at higher numbers of parties. It is also highly flexible – while previous MPC sampling methods tend to be optimized for specific distributions, we prove that our method can generically sample noise from statistically close approximations of arbitrary discrete distributions. This makes it compatible with a wide variety of DP mechanisms. Our experiments demonstrate the efficiency and utility of our method applied to a discrete Gaussian mechanism for differentially private collaborative learning. For 16 parties, we achieve a runtime of 0.06 seconds and 11.59 MB total communication per sample, a 230× runtime improvement and 3× less communication compared to the prior state-of-the-art for sampling from a discrete Gaussian distribution in MPC.
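The table-lookup idea in the abstract can be illustrated in the clear, without any MPC. The sketch below is not the paper's protocol: it is a plain single-machine approximation of a truncated discrete Gaussian, sampled by looking up a uniform random integer in a fixed-point cumulative table. The function names `build_cdf_table` and `sample_from_table` and the parameters `sigma`, `tail`, and `bits` are hypothetical and chosen only for illustration.

```python
import bisect
import math
import random


def build_cdf_table(sigma, tail, bits):
    """Build a fixed-point cumulative table for a discrete Gaussian.

    The distribution is truncated to the integers in [-tail, tail]
    (an assumption of this sketch), and cumulative probabilities are
    rounded to multiples of 2**-bits.
    """
    support = list(range(-tail, tail + 1))
    weights = [math.exp(-(x * x) / (2.0 * sigma * sigma)) for x in support]
    total = sum(weights)
    scale = 1 << bits
    cdf = []
    running = 0.0
    for w in weights:
        running += w
        cdf.append(min(scale, int(round(running / total * scale))))
    cdf[-1] = scale  # guarantee that every uniform draw hits some entry
    return support, cdf


def sample_from_table(support, cdf, bits, rng):
    """Draw one sample: a uniform integer followed by a single table lookup."""
    u = rng.getrandbits(bits)  # uniform over [0, 2**bits)
    idx = bisect.bisect_right(cdf, u)  # first entry strictly greater than u
    return support[idx]


if __name__ == "__main__":
    rng = random.Random(0)
    support, cdf = build_cdf_table(sigma=2.0, tail=20, bits=32)
    draws = [sample_from_table(support, cdf, 32, rng) for _ in range(10)]
    print(draws)
```

In this sketch, the quality of the approximation is controlled by `bits` (the rounding precision of the table) and `tail` (the truncation point). In a secure setting, both the uniform draw and the lookup would have to be performed obliviously, which is the part the paper addresses and this sketch does not.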

Secure Noise Sampling for Differentially Private Collaborative Learning (Olive Franzese, Congyu Fang, Radhika Garg, Somesh Jha, Nicolas Papernot, Xiao Wang, Adam Dziedzic) ia.cr/2025/1025

02.06.2025 20:28 — 👍 2 🔁 1 💬 0 📌 1

Excited to share the first batch of research projects funded through the Canadian AI Safety Institute's research program at CIFAR!

The projects will tackle topics ranging from misinformation to safety in AI applications to scientific discovery.

Learn more: cifar.ca/cifarnews/20...

05.06.2025 14:21 — 👍 5 🔁 0 💬 0 📌 0

📢 New ICML 2025 paper!

Confidential Guardian: Cryptographically Prohibiting the Abuse of Model Abstention

🤔 Think model uncertainty can be trusted?

We show that it can be misused—and how to stop it!

Meet Mirage (our attack💥) & Confidential Guardian (our defense🛡️).

🧵1/10

02.06.2025 14:38 — 👍 3 🔁 3 💬 1 📌 0

If you are submitting to @ieeessp.bsky.social this year, a friendly reminder that there is an abstract submission deadline this Thursday, May 29 (AoE).

More details: sp2026.ieee-security.org/cfpapers.html

27.05.2025 12:49 — 👍 2 🔁 0 💬 0 📌 0

As part of the theme Societal Aspects of Securing the Digital Society, I will be hiring PhD students and postdocs at #MPI-SP, focusing in particular on the computational and sociotechnical aspects of technology regulations and the governance of emerging tech. Get in touch if interested.

23.05.2025 14:12 — 👍 2 🔁 3 💬 0 📌 0

Tighter Privacy Auditing of DP-SGD in the Hidden State Threat Model

Machine learning models can be trained with formal privacy guarantees via differentially private optimizers such as DP-SGD. In this work, we focus on a threat model where the adversary has access...

Excited to be in Singapore for ICLR, presenting our work on privacy auditing (w/ Aurélien & @nicolaspapernot.bsky.social). If you are interested in differential privacy/privacy auditing/security for ML, drop by (#497 26 Apr 10-12:30 pm) or let's grab a coffee! ☕

openreview.net/forum?id=xzK...

21.04.2025 14:57 — 👍 4 🔁 1 💬 0 📌 0

Congrats on what looks like an amazing event, Konrad!

10.04.2025 16:11 — 👍 1 🔁 0 💬 0 📌 0

Very exciting! Congratulations to the organizing team on what looks like an amazing event!

09.04.2025 14:16 — 👍 0 🔁 0 💬 0 📌 0

👋 Welcome to #SaTML25! Kicking things off with opening remarks --- excited for a packed schedule of keynotes, talks and competitions on secure and trustworthy machine learning.

09.04.2025 07:14 — 👍 6 🔁 3 💬 1 📌 0

Karina Vold says the rapid development of AI systems has left both philosophers & computer scientists grappling with difficult questions. #UofT 💻 uoft.me/bsp

01.04.2025 14:00 — 👍 13 🔁 8 💬 2 📌 4

Congratulations again, Stephan, on this brilliant next step! Looking forward to what you will accomplish with @randomwalker.bsky.social & @msalganik.bsky.social!

13.03.2025 07:50 — 👍 6 🔁 0 💬 0 📌 0

The Canadian AI Safety Institute (CAISI) Research Program at CIFAR is now accepting Expressions of Interest for Solution Networks in AI Safety under two themes:

* Mitigating the Safety Risks of Synthetic Content

* AI Safety in the Global South.

cifar.ca/ai/ai-and-so...

12.03.2025 19:20 — 👍 7 🔁 3 💬 0 📌 0

I think the talk is being streamed but only internally within the MPI. I'm not sure if you still have access from your time there?

08.03.2025 16:06 — 👍 1 🔁 0 💬 0 📌 0

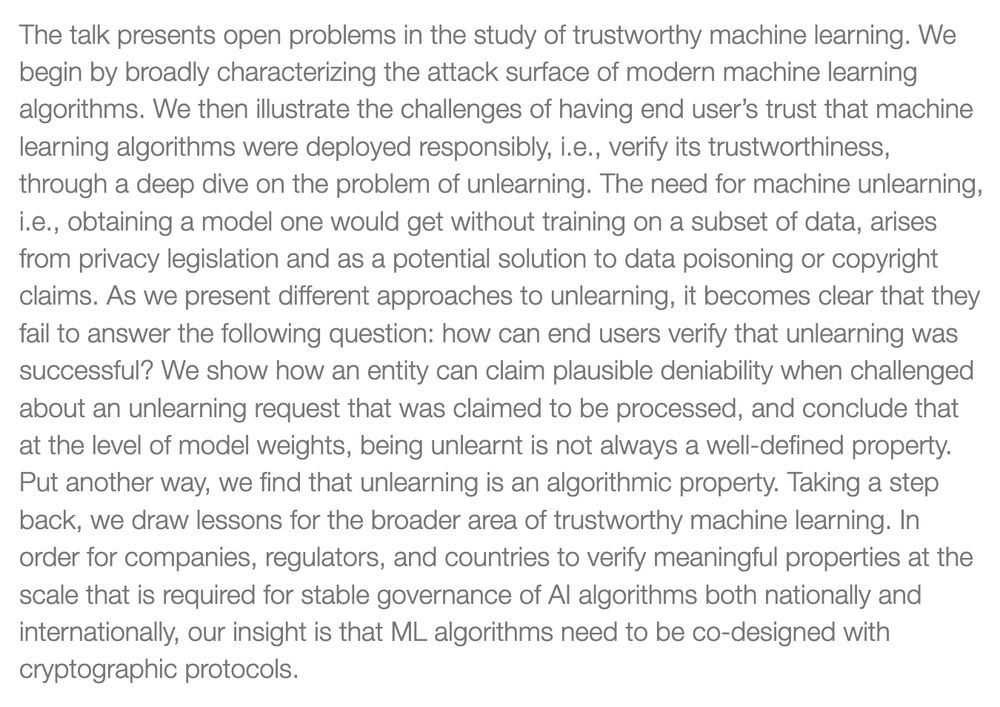

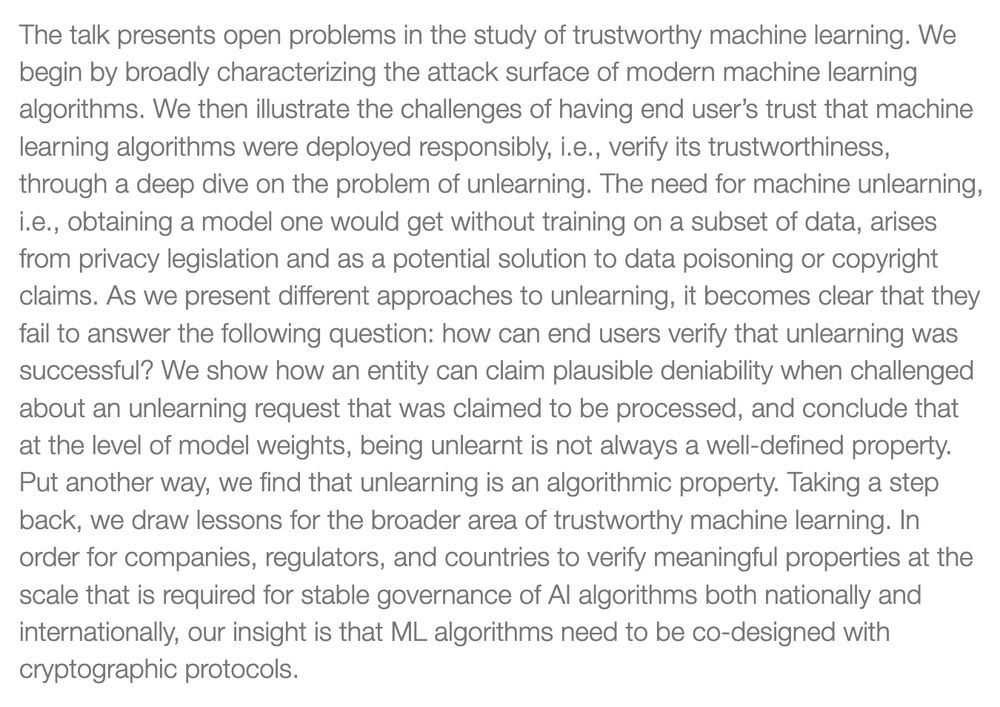

I will be giving a talk at the MPI-IS @maxplanckcampus.bsky.social in Tübingen next week (March 12 @ 11am). The talk will cover my group's overall approach to trust in ML, with a focus on our work on unlearning and how to obtain verifiable guarantees of trust.

Details: is.mpg.de/events/speci...

05.03.2025 15:40 — 👍 7 🔁 4 💬 1 📌 0

Great news! Congrats Xiao!

18.02.2025 21:56 — 👍 0 🔁 0 💬 0 📌 0

For Canadian colleagues, CIFAR and the CPI at UWaterloo are sponsoring a special issue "Artificial Intelligence Safety and Public Policy in Canada" in Canadian Public Policy / Analyse de politiques

More details: www.cpp-adp.ca

31.01.2025 19:59 — 👍 5 🔁 1 💬 0 📌 0

CIFAR AI Catalyst Grants - CIFAR

Encouraging new collaborations and original research projects in the field of machine learning, as well as its application to different sectors of science and society.

One of the first components of the CAISI (Canadian AI Safety Institute) research program has just launched: a call for Catalyst Grant Projects on AI Safety.

Funding: up to 100K for one year

Deadline to apply: February 27, 2025 (11:59, AoE)

More details: cifar.ca/ai/cifar-ai-...

31.01.2025 19:42 — 👍 8 🔁 2 💬 0 📌 0

Accepted Papers

The list of accepted papers for @satml.org 2025 is now online:

📃 satml.org/accepted-pap...

If you’re intrigued by secure and trustworthy machine learning, join us April 9-11 in Copenhagen, Denmark 🇩🇰. Find more details here:

👉 satml.org/attend/

21.01.2025 14:25 — 👍 15 🔁 7 💬 0 📌 2

It is organized this year by the amazing Konrad Rieck and @someshjha.bsky.social. You should attend!

When: April 9-11

Where: Copenhagen

14.01.2025 16:05 — 👍 3 🔁 0 💬 0 📌 0
