✈️ Headed to @iclr-conf.bsky.social — whether you’ll be there in person or tuning in remotely, I’d love to connect!

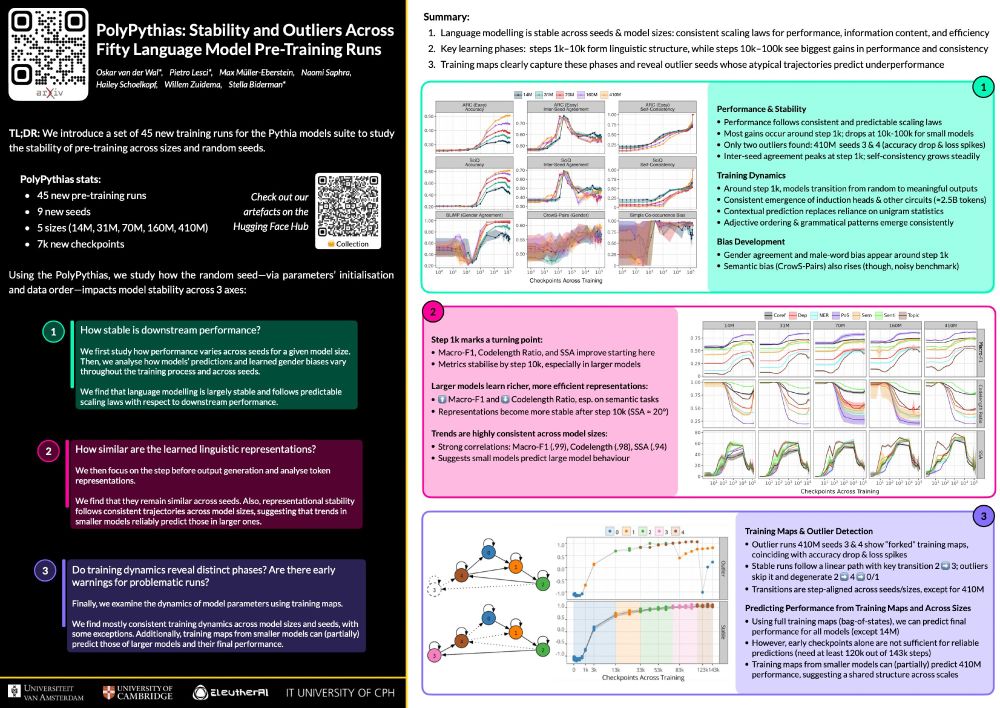

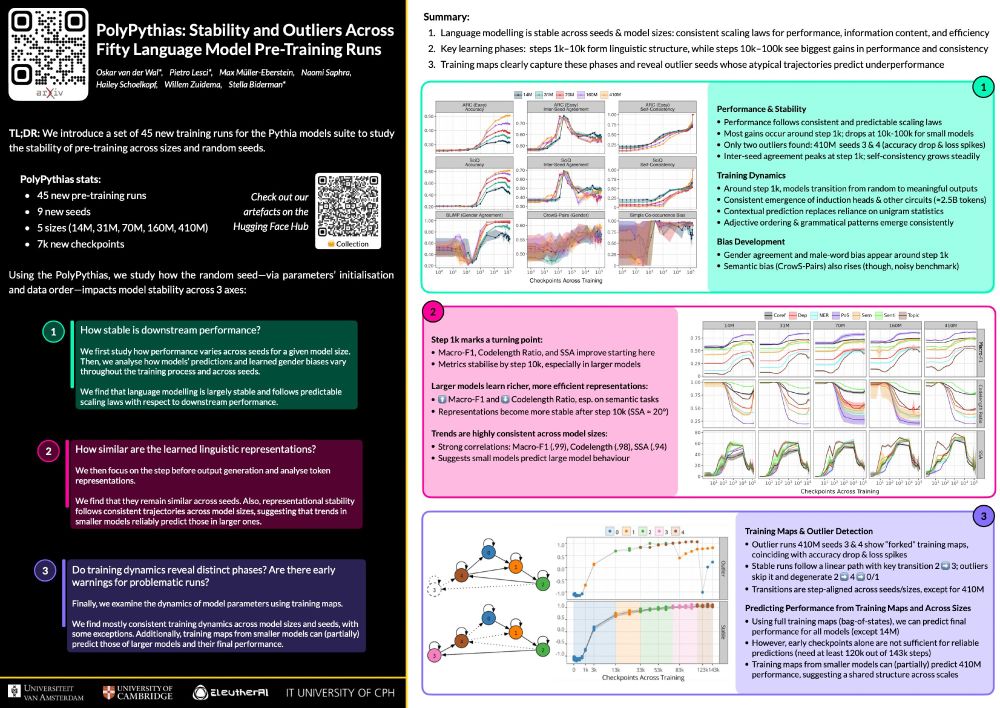

We’ll be presenting our paper on pre-training stability in language models and the PolyPythias 🧵

🔗 ArXiv: arxiv.org/abs/2503.09543

🤗 PolyPythias: huggingface.co/collections/...

22.04.2025 11:02 — 👍 5 🔁 3 💬 1 📌 0

Work in progress -- suggestions for NLP-ers based in the EU/Europe & already on Bluesky very welcome!

go.bsky.app/NZDc31B

10.11.2024 17:24 — 👍 70 🔁 20 💬 51 📌 0

I would like to be added! 😄

19.11.2024 19:30 — 👍 1 🔁 0 💬 0 📌 0

Hi, I'd like to be part of this!

18.11.2024 16:15 — 👍 1 🔁 0 💬 0 📌 0

👋

16.11.2024 16:19 — 👍 2 🔁 0 💬 0 📌 0

A photo of the panel discussion.

💬Panel discussion with Sally Haslanger and Marjolein Lanzing: A philosophical perspective on algorithmic discrimination

Is discrimination the right way to frame the issues of lang tech? Or should we answer deeper rooted questions? And how does tech fit in systems of oppression?

15.11.2024 16:36 — 👍 0 🔁 0 💬 0 📌 0

Screenshot of a slide discussing how to improve how we communicate bias scores on Model Cards.

📄Undesirable Biases in NLP: Addressing Challenges of Measurement

We also presented our own work on strategies for testing the validity and reliability of LM bias measures:

www.jair.org/index.php/ja...

15.11.2024 16:36 — 👍 0 🔁 0 💬 1 📌 0

Photo of the presentation. The slide shows an image of Frankenstein's monster.

🔑Keynote @zeerak.bsky.social: On the promise of equitable machine learning technologies

Can we create equitable ML technologies? Can statistical models faithfully express human language? Or are tokenizers "tokenizing" people—creating a Frankenstein monster of lived experiences?

15.11.2024 16:36 — 👍 6 🔁 2 💬 1 📌 0

Photo of the presentation.

📄A Capabilities Approach to Studying Bias and Harm in Language Technologies

@hellinanigatu.bsky.social introduced us to the Capabilities Approach and how it can help us better understand the social impact of language technologies—with case studies of failing tech in the Majority World.

15.11.2024 16:36 — 👍 1 🔁 0 💬 1 📌 1

Photo of the presentation.

📄Angry Men, Sad Women: Large Language Models Reflect Gendered Stereotypes in Emotion Attribution

Flor Plaza discussed the importance of studying gendered emotional stereotypes in LLMs, and how collaborating with philosophers benefits work on bias evaluation greatly.

15.11.2024 16:36 — 👍 1 🔁 0 💬 1 📌 0

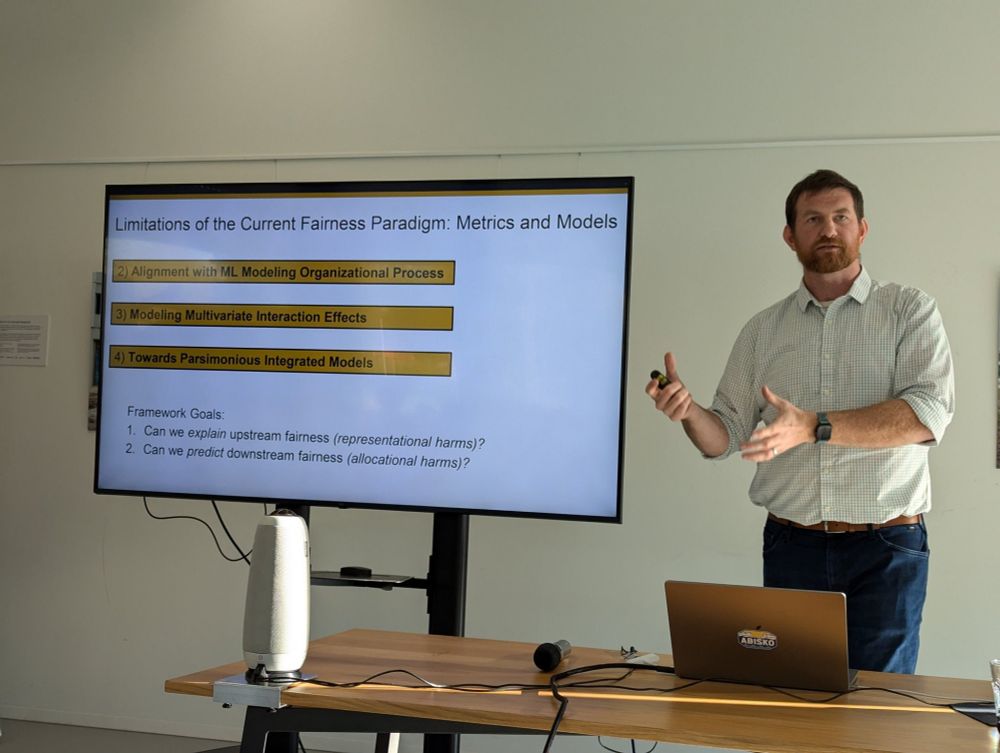

Photo of the presentation.

🔑Keynote by John Lalor: Should Fairness be a Metric or a Model?

While fairness is often viewed as a metric, using integrated models instead can help with explaining upstream bias, predicting downstream fairness, and capturing intersectional bias.

15.11.2024 16:36 — 👍 0 🔁 0 💬 1 📌 0

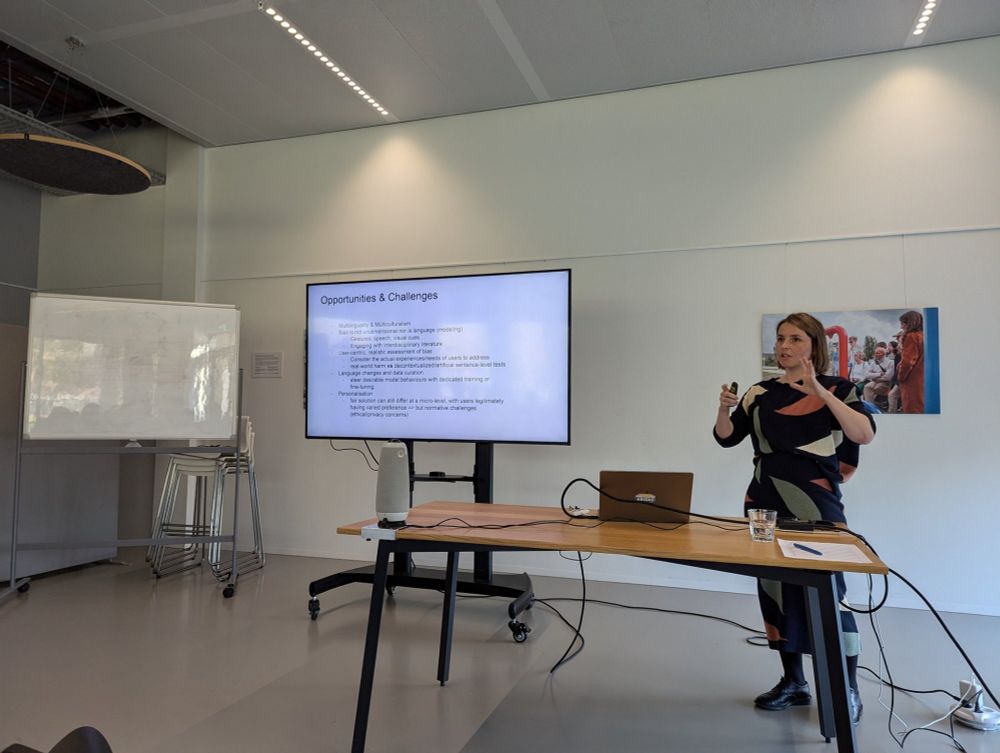

Photo of the presentation.

📄A Decade of Gender Bias in Machine Translation

Eva Vanmassenhove: how has research on gender bias in MT developed over the years? Important issues, like non-binary gender bias, now fortunately get more attention. Yet, fundamental problems (that initially seemed trivial) remain unsolved.

15.11.2024 16:36 — 👍 2 🔁 0 💬 1 📌 0

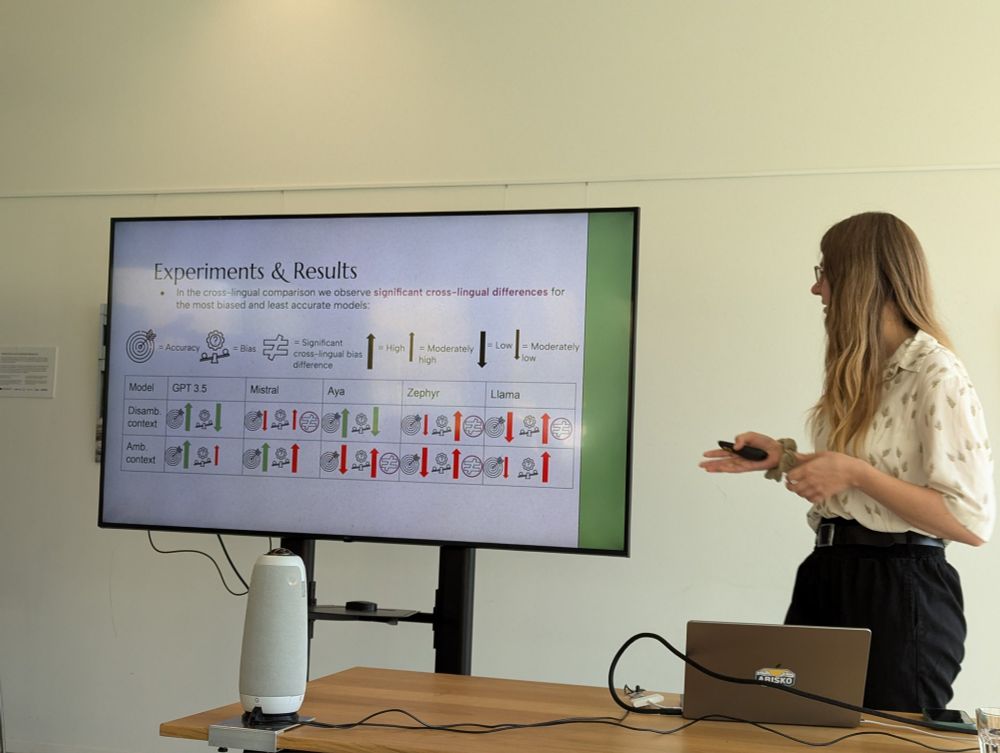

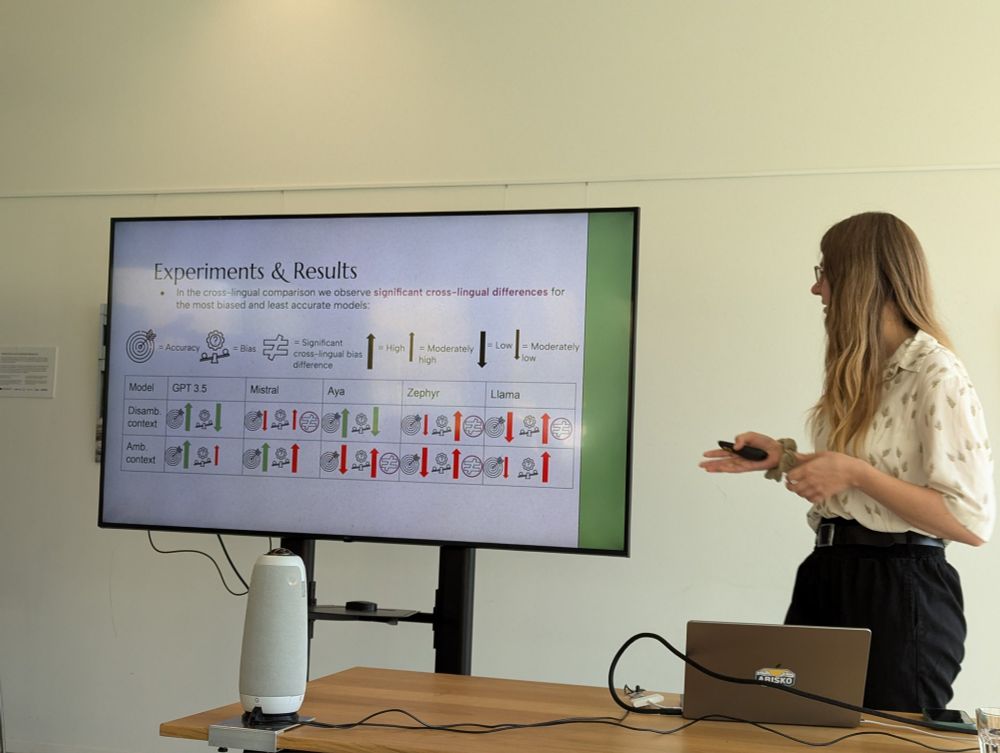

Photo of the presentation.

📄MBBQ: A Dataset for Cross-Lingual Comparison of Stereotypes in Generative LLMs

Vera Neplenbroek presented a multilingual extension of the BBQ bias benchmark to study bias across English, Dutch, Spanish, and Turkish.

"Multilingual LLMs are not necessarily multicultural!"

15.11.2024 16:36 — 👍 0 🔁 0 💬 1 📌 0

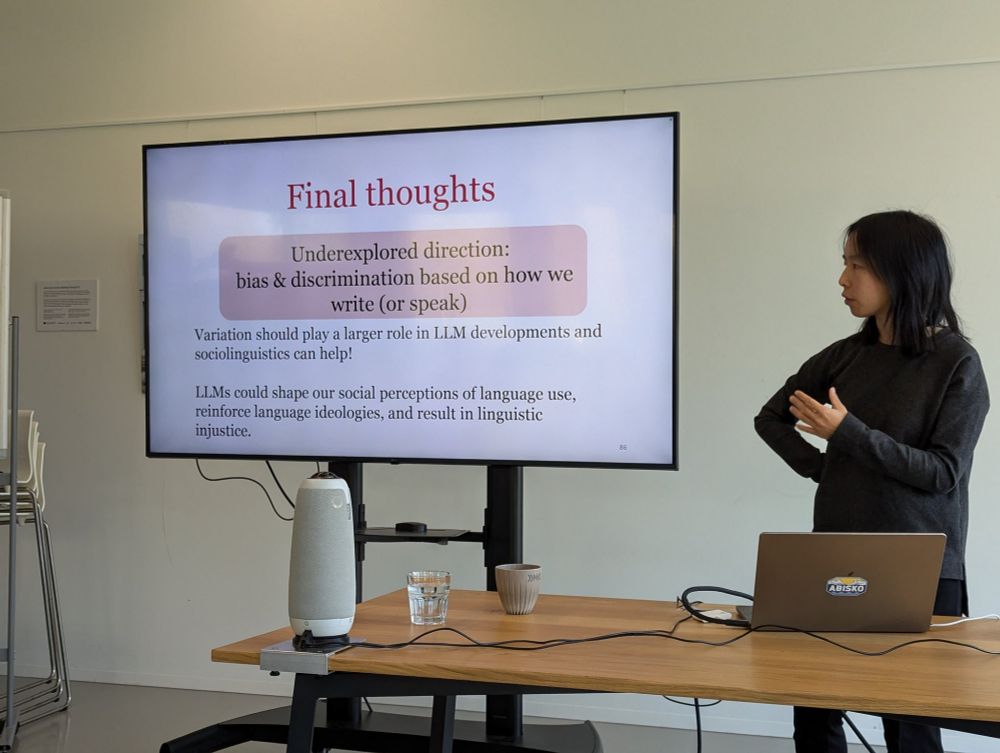

Photo of the presentation.

🔑Keynote by Dong Nguyen: When LLMs meet language variation: Taking stock and looking forward

Non-standard language is often seen as noisy/incorrect data, but this ignores the reality of language. Variation should play a larger role in LLM developments and sociolinguistics can help!

15.11.2024 16:36 — 👍 0 🔁 0 💬 1 📌 0

Last week, we organized the workshop "New Perspectives on Bias and Discrimination in Language Technology" 🤖 @uvahumanities.bsky.social @amsterdamnlp.bsky.social

We're looking back at two inspiring days of talks, posters, and discussions—thanks to everyone who participated!

wai-amsterdam.github.io

15.11.2024 16:36 — 👍 11 🔁 1 💬 1 📌 0

This is a friendly reminder that there are 7 days left for submitting your extended abstract to this workshop!

(Since the workshop is non-archival, previously published work is welcome too. So consider submitting previous/future work to join the discussion in Amsterdam!)

08.09.2024 16:45 — 👍 0 🔁 1 💬 0 📌 0

Workshop: New Perspectives on Bias and Discrimination in Language Technology.

This workshop is organized by University of Amsterdam researchers Katrin Schulz, Leendert van Maanen, @wzuidema.bsky.social, Dominik Bachmann, and myself.

More information on the workshop can be found on the website, which will be updated regularly.

wai-amsterdam.github.io

07.08.2024 14:27 — 👍 1 🔁 0 💬 0 📌 0

🌟The goal of this workshop is to bring together researchers from different fields to discuss the state of the art on bias measurement and mitigation in language technology and to explore new avenues of approach.

07.08.2024 14:25 — 👍 1 🔁 0 💬 1 📌 0

One of the central issues discussed in the context of the societal impact of language technology is that ML systems can contribute to discrimination. Despite efforts to address these issues, we are far from solving them.

07.08.2024 14:25 — 👍 1 🔁 0 💬 1 📌 0

We're super excited to host Dong Nguyen, John Lalor, @zeerak.bsky.social and @azjacobs.bsky.social as invited speakers at this workshop! Submit an extended abstract to join the discussions, either in a 20-minute talk or a poster session.

📝Deadline Call for Abstracts: 15 Sep, 2024

07.08.2024 14:24 — 👍 2 🔁 1 💬 1 📌 0

Workshop: New Perspectives on Bias and Discrimination in Language Technology.

Working on #bias & #discrimination in #NLP? Passionate about integrating insights from different disciplines? And do you want to discuss current limitations of #LLM bias mitigation work? 🤖

👋Join the workshop New Perspectives on Bias and Discrimination in Language Technology 4&5 Nov in #Amsterdam!

07.08.2024 14:21 — 👍 6 🔁 4 💬 1 📌 2

OLMo: Open Language Model

A State-Of-The-Art, Truly Open LLM and Framework

release day release day 🥳 OLMo 1b +7b out today and 65b soon...

OLMo accelerates the study of LMs. We release *everything*, from toolkit for creating data (Dolma) to train/inf code

blog blog.allenai.org/olmo-open-la...

olmo paper allenai.org/olmo/olmo-pa...

dolma paper allenai.org/olmo/dolma-p...

01.02.2024 19:33 — 👍 29 🔁 14 💬 1 📌 2

But exciting to see more work dedicated to sharing models, checkpoints, and training data to the (research) community!

01.02.2024 19:51 — 👍 1 🔁 0 💬 0 📌 0

I look forward to debates in the philosophy of (techno)science by people more knowledgeable. I'd say we have some philosophical basis that people are capable of such tasks. But there is also sufficient reason to believe LLM≠human, so any trust in one does not automatically transfer to the other.

28.01.2024 19:07 — 👍 0 🔁 0 💬 1 📌 0

That is not to say I am categorically against using LLMs as epistemic tools, but from my own experience as a bias and interpretability researcher I think we should be careful of potential biases/failure modes. If we are transparent about their use and potential issues, I could see LLMs being useful.

28.01.2024 18:35 — 👍 1 🔁 0 💬 1 📌 0

I think it all boils down to the reliability+validity of the approach. We don't have good methodologies (yet) to assess these qualities for LLMs compared to simpler more interpretable techniques. And intuitively I think we have more reasons to trust (expert) human annotators—see also psychometrics.

28.01.2024 18:24 — 👍 0 🔁 0 💬 2 📌 0

A 🧵thread about strategies for improving social bias evaluations of LMs. #blueskAI 🤖

bsky.app/profile/ovdw...

24.01.2024 09:55 — 👍 4 🔁 1 💬 0 📌 0

Special thanks go to Dominik Bachmann (shared first-author) whose insights from the perspective of psychometrics not only helped shape this paper, but also my views of current AI fairness practices more broadly.

24.01.2024 09:44 — 👍 0 🔁 0 💬 0 📌 0

ML researcher, MSR + Stanford postdoc, future Yale professor

https://afedercooper.info

Post-doc @ VU Amsterdam, prev University of Edinburgh.

Neurosymbolic Machine Learning, Generative Models, commonsense reasoning

https://www.emilevankrieken.com/

Postdoc @ai2.bsky.social & @uwnlp.bsky.social

PhD student at NYU. NLP & training data.

michahu.github.io

PhD Candidate @University of Amsterdam. Working on understanding language generation from visual events—particularly from a "creative" POV!

🔗 akskuchi.github.io

Cognitive and perceptual psychologist, industrial designer, & electrical engineer. Assistant Professor of Industrial Design at University of Illinois Urbana-Champaign. I make neurally plausible bio-inspired computational process models of visual cognition.

Professor for natural language processing, head of BamNLP @bamnlp.de

📍 Duisburg, Stuttgart, Bamberg

#NLProc #emotion #sentiment #factchecking #argumentmining #informationextraction #bionlp

Professor of Language Technology at the University of Helsinki @helsinki.fi

Head of Helsinki-NLP @helsinki-nlp.bsky.social

Member of the Ellis unit Helsinki @ellisfinland.bsky.social

Senior Data Scientist at Financial Times.

Python/R, ML, NLP, but also the occasional local politics.

Dad of two girls.

Opinions are my own.

PhD candidate at the University of Amsterdam

PhD student at ILLC - University of Amsterdam 🌷

Interested in linguistics and interpretability.

Workshop on Gender Bias for Natural Language Processing @ ACL 2025 in Vienna, Austria

🌐 https://gebnlp-workshop.github.io/

Top news and commentary for technology's leaders, from all around the web.

This account shares top-level Techmeme headlines. Visit https://techmeme.com/ for full context.

Making invisible peer review contributions visible 🌟 Tracking 2,970 exceptional ARR reviewers across 1,073 institutions | Open source | arrgreatreviewers.org

studying the minds on our computers | https://kyobrien.io

PhD student at the University of Amsterdam working on vision-language models and cognitive computational neuroscience

The largest workshop on analysing and interpreting neural networks for NLP.

BlackboxNLP will be held at EMNLP 2025 in Suzhou, China

blackboxnlp.github.io

The Milan Natural Language Processing Group #NLProc #AI

milanlproc.github.io

Senior Lecturer (Associate Professor) in Natural Language Processing, Queen's University Belfast. NLProc • Cognitive Science • Semantics • Health Analytics.