Introducing TiRex - xLSTM based time series model | NXAI

TiRex model at the top 🦖

We are proud of TiRex - our first time series model based on #xLSTM technology.

Key takeaways:

🥇 Ranked #1 on official international leaderboards

➡️ Outperforms models ...

TiRex 🦖 time series xLSTM model ranked #1 on all leaderboards.

➡️ Outperforms models by Amazon, Google, Datadog, Salesforce, Alibaba

➡️ industrial applications

➡️ limited data

➡️ embedded AI and edge devices

➡️ Europe is leading

Code: lnkd.in/eHXb-XwZ

Paper: lnkd.in/e8e7xnri

shorturl.at/jcQeq

02.06.2025 12:11 — 👍 5 🔁 5 💬 0 📌 0

Happy to introduce 🔥LaM-SLidE🔥!

We show how trajectories of spatial dynamical systems can be modeled in latent space by

--> leveraging IDENTIFIERS.

📚Paper: arxiv.org/abs/2502.12128

💻Code: github.com/ml-jku/LaM-S...

📝Blog: ml-jku.github.io/LaM-SLidE/

1/n

22.05.2025 12:24 — 👍 7 🔁 8 💬 1 📌 1

1/11 Excited to present our latest work "Scalable Discrete Diffusion Samplers: Combinatorial Optimization and Statistical Physics" at #ICLR2025 on Fri 25 Apr at 10 am!

#CombinatorialOptimization #StatisticalPhysics #DiffusionModels

24.04.2025 08:57 — 👍 16 🔁 7 💬 1 📌 0

⚠️ Beware: Your AI assistant could be hijacked just by encountering a malicious image online!

Our latest research exposes critical security risks in AI assistants. An attacker can hijack them by simply posting an image on social media and waiting for it to be captured. [1/6] 🧵

18.03.2025 18:25 — 👍 8 🔁 8 💬 1 📌 3

X-IL: Exploring the Design Space of Imitation Learning Policies

Designing modern imitation learning (IL) policies requires making numerous decisions, including the selection of feature encoding, architecture, policy representation, and more. As the field rapidly a...

Exploring imitation learning architectures: Transformer, Mamba, xLSTM: arxiv.org/abs/2502.12330

*LIBERO: “xLSTM shows great potential”

*RoboCasa: “xLSTM models, we achieved success rate of 53.6%, compared to 40.0% of BC-Transformer”

*Point Clouds: “xLSTM model achieves a 60.9% success rate”

19.02.2025 19:43 — 👍 6 🔁 3 💬 0 📌 0

Ever wondered why presenting more facts can sometimes *worsen* disagreements, even among rational people? 🤔

It turns out, Bayesian reasoning has some surprising answers - no cognitive biases needed! Let's explore this fascinating paradox quickly ☺️

07.01.2025 22:25 — 👍 234 🔁 78 💬 8 📌 2

Often LLMs hallucinate because of semantic uncertainty due to missing factual training data. We propose a method to detect such uncertainties using only one generated output sequence. Super efficient method to detect hallucination in LLMs.

20.12.2024 12:52 — 👍 15 🔁 3 💬 0 📌 2

🔊 Super excited to announce the first ever Frontiers of Probabilistic Inference: Learning meets Sampling workshop at #ICLR2025 @iclr-conf.bsky.social!

🔗 website: sites.google.com/view/fpiwork...

🔥 Call for papers: sites.google.com/view/fpiwork...

more details in thread below👇 🧵

18.12.2024 19:09 — 👍 84 🔁 19 💬 2 📌 3

Just 10 days after o1's public debut, we’re thrilled to unveil the open-source version of the technique behind its success: scaling test-time compute

By giving models more "time to think," Llama 1B outperforms Llama 8B in math—beating a model 8x its size. The full recipe is open-source!

16.12.2024 21:42 — 👍 83 🔁 18 💬 4 📌 2

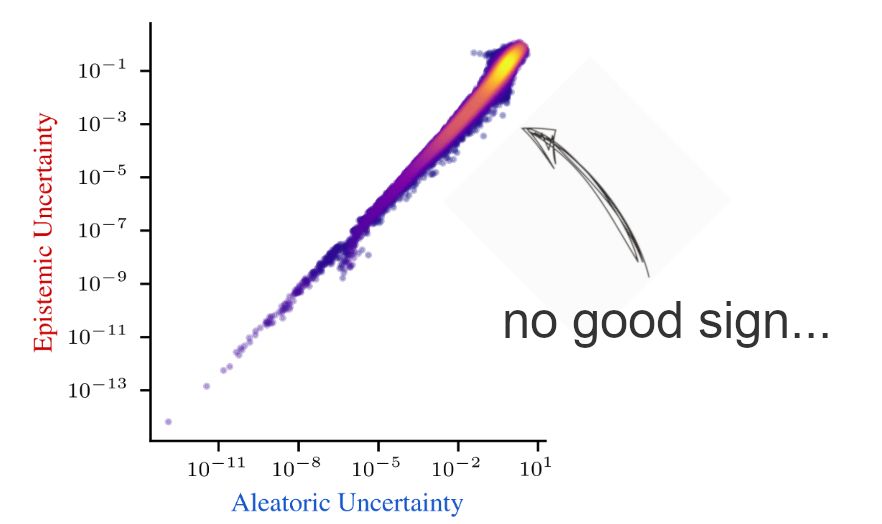

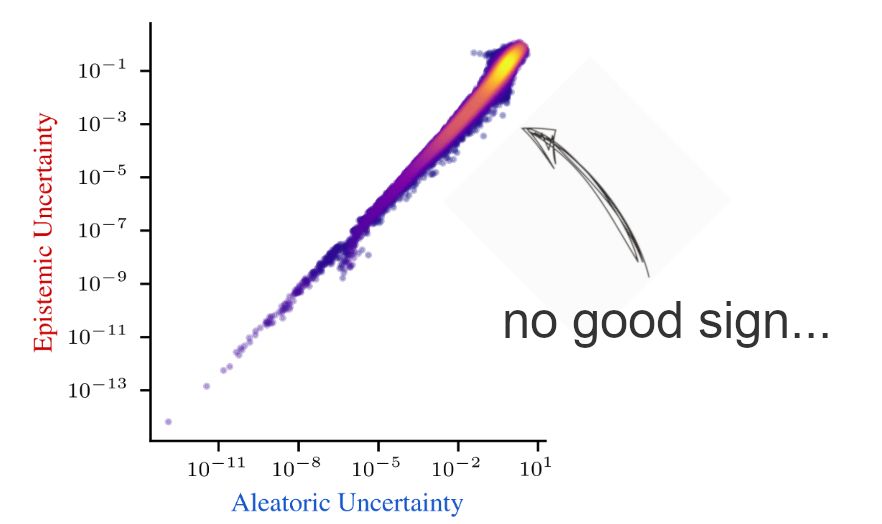

Proud to announce our NeurIPS spotlight, which was in the works for over a year now :) We dig into why decomposing aleatoric and epistemic uncertainty is hard, and what this means for the future of uncertainty quantification.

📖 arxiv.org/abs/2402.19460 🧵1/10

03.12.2024 09:45 — 👍 74 🔁 12 💬 3 📌 2

Cool work!

03.12.2024 15:29 — 👍 0 🔁 0 💬 0 📌 0

Thrilled to share our NeurIPS spotlight on uncertainty disentanglement! ✨ We study how well existing methods disentangle different sources of uncertainty, like epistemic and aleatoric. While all tested methods fail at this task, there are promising avenues ahead. 🧵 👇 1/7

📖: arxiv.org/abs/2402.19460

03.12.2024 13:38 — 👍 56 🔁 6 💬 4 📌 1

ML for molecules and materials in the era of LLMs [ML4Molecules]

ELLIS workshop, HYBRID, December 6, 2024

The Machine Learning for Molecules workshop 2024 will take place THIS FRIDAY, December 6.

Tickets for in-person participation are "SOLD" OUT.

We still have a few free tickets for online/virtual participation!

Registration link here: moleculediscovery.github.io/workshop2024/

03.12.2024 12:35 — 👍 19 🔁 14 💬 0 📌 0

🙌

29.11.2024 12:15 — 👍 0 🔁 0 💬 0 📌 0

Would love to join, working on Bayesian ML

27.11.2024 14:43 — 👍 1 🔁 0 💬 0 📌 0

Very cool Michael, congrats! 😄

23.11.2024 07:45 — 👍 0 🔁 0 💬 1 📌 0

Assistant professor at Princeton CS working on reinforcement learning and AI/ML.

Site: https://ben-eysenbach.github.io/

Lab: https://princeton-rl.github.io/

The world's leading venue for collaborative research in theoretical computer science. Follow us at http://YouTube.com/SimonsInstitute.

Computer Scientist at SRI. Machine Learning. Uncertainty Quantification.

Studying genomics, machine learning, and fruit. My code is like our genomes -- most of it is junk.

Assistant Professor UMass Chan

Previously IMP Vienna, Stanford Genetics, UW CSE.

PhD student at MIT. Machine learning, computer vision, ecology, climate. Previously: Co-founder, CTO Ai.Fish; Researcher at Caltech; UC Berkeley. justinkay.github.io

Machine Learning Researcher. PhD in Machine Learning.

✨Researching Reinforcement Learning.

Been at @UCL @GoogleDeepmind @UCBerkeley @ucberkeleyofficial.bsky.social @ucl.ac.uk

Website: https://ezgikorkmaz.github.io/

PhD student 🎓 in Statistics at Bielefeld University interested in doubly stochastic processes and their application to ecology 🦅, sports 🏈, and finance 📈.

Website: https://janolefi.github.io

GitHub: https://github.com/janolefi

https://ellis-jena.eu is developing+applying #AI #ML in #earth system, #climate & #environmental research.

Partner: @uni-jena.de, https://bgc-jena.mpg.de/en, @dlr-spaceagency.bsky.social, @carlzeissstiftung.bsky.social, https://aiforgood.itu.int

ai research @ thinking machines. realtime video+voice. i like trains and bikes. sometimes I climb rocks and throw pottery.

Professor for "Machine Learning in Science", University of Tübingen.

Artificial Intelligence as a source of inspiration in Science.

https://mariokrenn.wordpress.com/

Assistant prof at TU Graz, formerly assistant prof at TU Eindhoven, Marie-Curie Fellow at University of Cambridge. Probabilistic Machine Learning.

Probabilistic machine learning and its applications in AI, health, user interaction.

@ellisinstitute.fi, @ellis.eu, fcai.fi, @aifunmcr.bsky.social

Assistant Professor at the University of Alberta. Amii Fellow, Canada CIFAR AI chair. Machine learning researcher. All things reinforcement learning.

📍 Edmonton, Canada 🇨🇦

🔗 https://webdocs.cs.ualberta.ca/~machado/

🗓️ Joined November, 2024

Researcher Machine Learning & Data Mining, Prof. Computational Data Analytics @jkulinz.bsky.social, Austria.

I am a physicist working on neural networks (artificial and biological). Find me on dmitrykrotov.com.

AI Prof at TU Darmstadt, Founding Co-Director Hessian.AI, DFKI, AAAI/EurAI/AAIA/ELLIS Fellow, AAAI24 Ass. PC CoChair, Fmr. PC CoChair UAI, ECML PKDD, Invest. @Aleph__Alpha, Fmr. AI Column German Newspaper Welt (am Sonntag)

Probabilistic {Machine,Reinforcement} Learning and more at SDU

ELLIS PhD student in Machine Learning @Aalto University, Finland

Working on Bayesian experimental design, causality, human-in-the-loop models

Maldivian

nazaal.com