There's a ton of really cool stuff in the paper. Take a skim if you have time!

10.12.2025 15:24

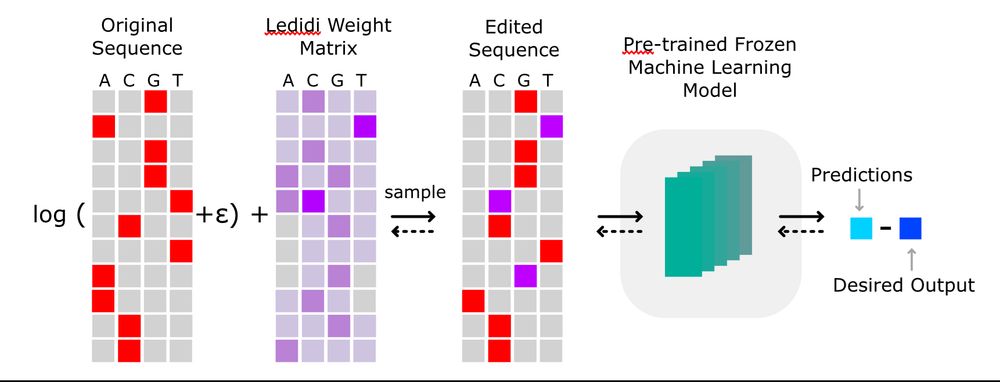

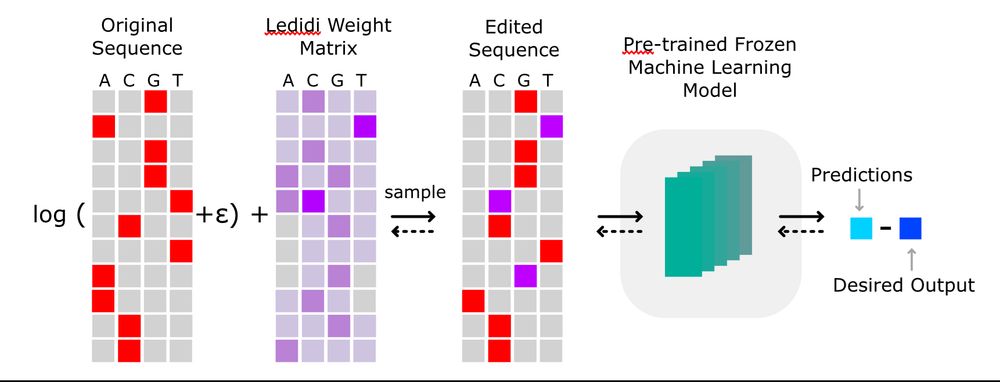

In collaboration with @alex-stark.bsky.social, we designed cell type-specific enhancers and experimentally verified them using STARR-seq. Strikingly, we found that Ledidi can even design enhancers with stronger regulatory activity than the strongest endogenous enhancers.

10.12.2025 15:23

We designed regulatory DNA in dozens of settings and found that we could achieve our target objective with surprisingly few edits (biology alert). Because we focus on edits, our designs are less likely to overfit to the model or inadvertently alter properties that are not well captured by the model.

10.12.2025 15:22

Ledidi is a DNA design method that designs *edits* to a template while explicitly minimizing the number of needed edits. This allows you to build upon informative starting material, which our genomes are full of, and simply edit in the final touches, similar to an Instagram filter or video touch-up.

10.12.2025 15:20
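The Ledidi post above describes design as an edit-penalized objective: reach a target prediction while changing as few template positions as possible. A minimal sketch of that trade-off follows. It is NOT Ledidi's optimization procedure; the greedy single-substitution search, the `gc_count` stand-in for a trained model, and the penalty weight `lam` are all illustrative assumptions.

```python
"""Toy illustration of edit-penalized sequence design (NOT Ledidi's algorithm)."""


def gc_count(seq: str) -> float:
    """Hypothetical stand-in for a trained model: number of G/C bases."""
    return float(sum(base in "GC" for base in seq))


def hamming(a: str, b: str) -> int:
    """Number of positions at which two equal-length sequences differ."""
    return sum(x != y for x, y in zip(a, b))


def objective(seq: str, template: str, target: float, lam: float) -> float:
    """Distance from the target prediction plus a penalty of `lam` per edit."""
    return abs(gc_count(seq) - target) + lam * hamming(seq, template)


def greedy_edit(template: str, target: float, lam: float = 0.1,
                max_rounds: int = 50) -> str:
    """Repeatedly apply the single substitution that most lowers the objective."""
    seq = template
    best = objective(seq, template, target, lam)
    for _ in range(max_rounds):
        improved = None
        for i, current in enumerate(seq):
            for base in "ACGT":
                if base == current:
                    continue
                candidate = seq[:i] + base + seq[i + 1:]
                score = objective(candidate, template, target, lam)
                if score < best:  # strict: ties keep the earlier candidate
                    best, improved = score, candidate
        if improved is None:  # no single edit helps any more; stop
            break
        seq = improved
    return seq


if __name__ == "__main__":
    design = greedy_edit("ATATATATAT", target=3)
    print(design, gc_count(design), hamming(design, "ATATATATAT"))
    # prints: CCCTATATAT 3.0 3
```

Because each edit costs `lam`, the search stops as soon as the prediction reaches the target: a fourth G/C edit would both overshoot and add another penalty, so the design ends with exactly three changes to the template.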

Programmatic design and editing of cis-regulatory elements

The development of modern genome editing and DNA synthesis has enabled researchers to edit DNA sequences with high precision but has left unsolved the problem of designing these edits. We introduce Le...

After a huge amount of work w/ @alex-stark.bsky.social's group, a new version of our Ledidi preprint is now out!

In an era of AI-designed proteins, the next leap will be controlling when, where, and how much of these proteins are expressed in living cells.

www.biorxiv.org/content/10.1...

10.12.2025 15:18

The @impvienna.bsky.social is a unique place where talented researchers are doing amazing science. I'm glad I had an opportunity to spend some time there, and am looking forward to the next excuse to visit Vienna!

25.11.2025 15:16

That sounds too biologically important for me to be involved.

20.11.2025 19:08

My first @umasschan.bsky.social/@impvienna.bsky.social affiliated paper is up!

tomtom-lite is a re-implementation of tomtom targeting the ML age of genomics. Fast annotations ("what is this motif?") and simple large-scale discovery of motifs.

Check it out!

academic.oup.com/bioinformati...

20.11.2025 14:02
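Annotating a motif ("what is this motif?") comes down to aligning it against known motifs at every offset and scoring the overlapping columns. The sketch below shows only that alignment-scoring step, using Pearson correlation between columns, one common column-comparison metric. It does not use tomtom-lite's API; `one_hot`, `column_pearson`, `best_alignment`, and `min_overlap` are hypothetical names, and it ignores reverse complements and the p-value calculation that Tomtom performs.

```python
"""Toy ungapped motif alignment scored by per-column Pearson correlation."""
from math import sqrt

BASES = "ACGT"


def one_hot(seq: str) -> list[list[float]]:
    """Consensus string -> probability matrix, one [A, C, G, T] column per position."""
    return [[1.0 if b == base else 0.0 for b in BASES] for base in seq]


def column_pearson(a: list[float], b: list[float]) -> float:
    """Pearson correlation between two motif columns."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = sqrt(sum((x - ma) ** 2 for x in a))
    sb = sqrt(sum((y - mb) ** 2 for y in b))
    if sa == 0.0 or sb == 0.0:
        return 0.0  # uniform column: correlation undefined, treat as uninformative
    return cov / (sa * sb)


def best_alignment(query, target, min_overlap=3):
    """Try every offset (query column i aligns to target column i + offset)."""
    best_score, best_offset = float("-inf"), None
    for offset in range(-(len(query) - min_overlap), len(target) - min_overlap + 1):
        score, overlap = 0.0, 0
        for i, column in enumerate(query):
            j = i + offset
            if 0 <= j < len(target):
                score += column_pearson(column, target[j])
                overlap += 1
        if overlap >= min_overlap and score > best_score:
            best_score, best_offset = score, offset
    return best_score, best_offset


if __name__ == "__main__":
    score, offset = best_alignment(one_hot("GATA"), one_hot("TTGATAA"))
    print(offset)  # 2: GATA occupies positions 2-5 of the target
```

Summing column scores over the overlap favors long, fully matching alignments; a real annotation tool would then convert that raw score into a p-value so that motifs of different lengths can be compared fairly.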

Why is it a problem to translate C++ to changing standards when you can just use Agentic AI?

31.10.2025 19:00

Tune in for a great MIA talk on ML for regulatory genomics by @jmschreiber91.bsky.social and Gregory Andrews now! 🧪

broad.io/mia

(Will also be available online on our YouTube playlist later: www.youtube.com/watch?v=sMTO...)

29.10.2025 13:00

they told the flight attendants to sit down for the second half of the flight (an hour) because it was entirely turbulence

13.10.2025 21:43

had to land in a nor'easter in boston and i'm ready to move back to the west coast forever

13.10.2025 21:37

Now that I'm settled in at @umasschan.bsky.social, I'm hiring at all levels: grad students, post-docs, and software engineers/bioinformaticians!

The goal of my lab is to understand the regulatory role of every nucleotide in our genomes and how this changes across every cell in our bodies.

07.10.2025 15:24

I figure I can spend the time until I get tenure on answering the question, and then the time after tenure arguing about what "regulatory" means

07.10.2025 15:37

If you're interested, please reach out with your CV and which topics you'd be interested in working on!

07.10.2025 15:28

- Genomics Software Ecosystem: A major obstacle to our goal is the lack of simple+scalable software that everyone can use. Come build this with me. Training a lightweight deep learning model and using it for design, interpretability, or variant effect (VE) prediction should be no more challenging than mapping reads.

07.10.2025 15:28

- Foundation Models: As someone involved in ML, I am legally required to be working on this topic.

07.10.2025 15:26

Programmatic design and editing of cis-regulatory elements

The development of modern genome editing tools has enabled researchers to make such edits with high precision but has left unsolved the problem of designing these edits. As a solution, we propose Ledi...

We have an array of ML-based projects for going after this, focusing on the following topics:

- DNA Design (🧬): We have shown that Ledidi (www.biorxiv.org/content/10.1...) can precisely design DNA, and now it's time to push the boundaries in several directions w/ some very cool collaborations.

07.10.2025 15:26

It was suggested that the audience may not appreciate/understand :(

03.10.2025 06:22

is it a good idea to wear a "join, or die!" hat to a big talk in europe? please say yes

30.09.2025 18:15

the greatest productivity hack is having a grant deadline. there's so much other stuff you can do when you're supposed to be working on a grant.

22.09.2025 14:05

I was delighted to have the unexpected opportunity to give a keynote at MLCB 2025 in NYC last week. I used it to explain how I view deep learning models in genomics not as "uninterpretable black boxes" but as indispensable tools for understanding genomics + designing the next gen of synthetic DNA.

19.09.2025 13:59

for some reason i thought being a professor would involve more mentoring and research and less filling out disclosures concerning whether plants and seeds were used in my computational study

09.09.2025 19:24

In the genomics community, we have focused pretty heavily on achieving state-of-the-art predictive performance.

While undoubtedly important, how we *use* these models after training is potentially even more important.

tangermeme v1.0.0 is out now. Hope you find it useful!

27.08.2025 16:20
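One common way to *use* a sequence model after training, as the tangermeme post above emphasizes, is in-silico saturation mutagenesis (ISM): score every possible single-nucleotide substitution and record how much the prediction changes. The sketch below is a minimal, assumption-laden version. `count_gata` is a hypothetical stand-in for a trained model, and none of this is tangermeme's API.

```python
"""Toy in-silico saturation mutagenesis (ISM) against a stand-in model."""
from typing import Callable


def count_gata(seq: str) -> float:
    """Hypothetical stand-in for a trained model: number of GATA occurrences."""
    return float(sum(seq[i:i + 4] == "GATA" for i in range(len(seq) - 3)))


def saturation_mutagenesis(
    seq: str, model: Callable[[str], float]
) -> dict[tuple[int, str], float]:
    """Effect of every substitution: model(mutant) - model(reference)."""
    reference = model(seq)
    effects = {}
    for i, ref_base in enumerate(seq):
        for alt in "ACGT":
            if alt == ref_base:
                continue
            mutant = seq[:i] + alt + seq[i + 1:]
            effects[(i, alt)] = model(mutant) - reference
    return effects


if __name__ == "__main__":
    effects = saturation_mutagenesis("CGATAC", count_gata)
    # Only substitutions that break the GATA site (positions 1-4) change the output.
    for (pos, alt), delta in sorted(effects.items()):
        if delta != 0:
            print(pos, alt, delta)
```

A sequence of length L yields 3L substitutions, each needing one model call. That cost is why batching these forward passes matters once the toy function is replaced by a real deep learning model.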

For some reason, hitting "comment" on GitHub is significantly more responsive than a month ago and it freaks me out. Surely there are some important calculations that need to be done before letting my thoughts into the wild?

27.08.2025 21:43

Thanks! Let me know if you want me to stop in virtually, we can try to figure out a time.

27.08.2025 17:15