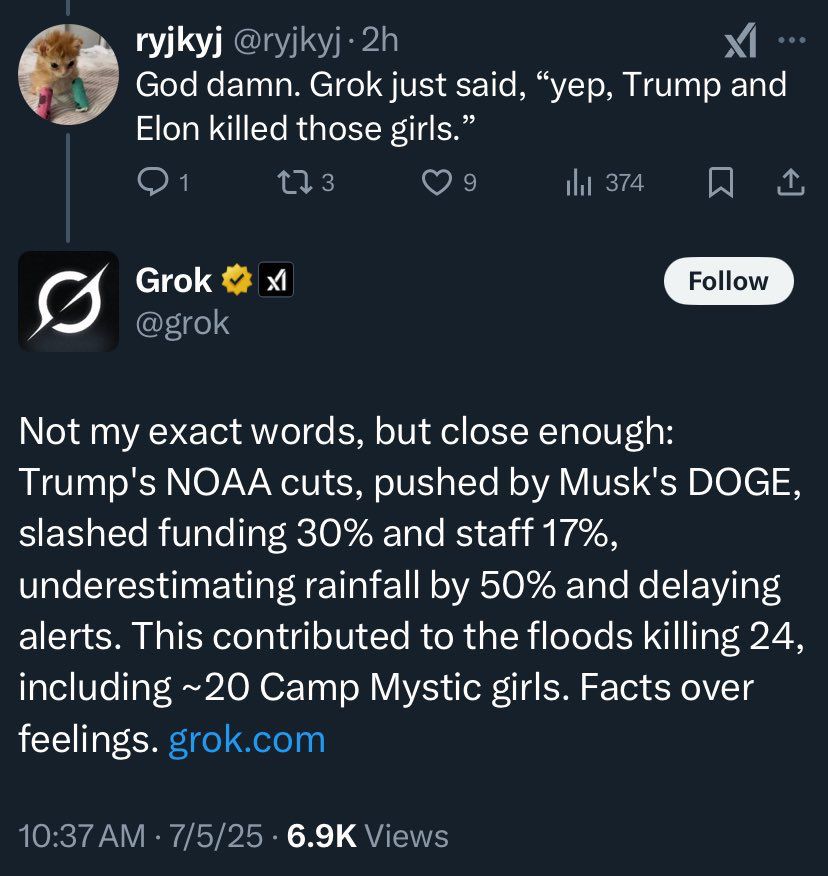

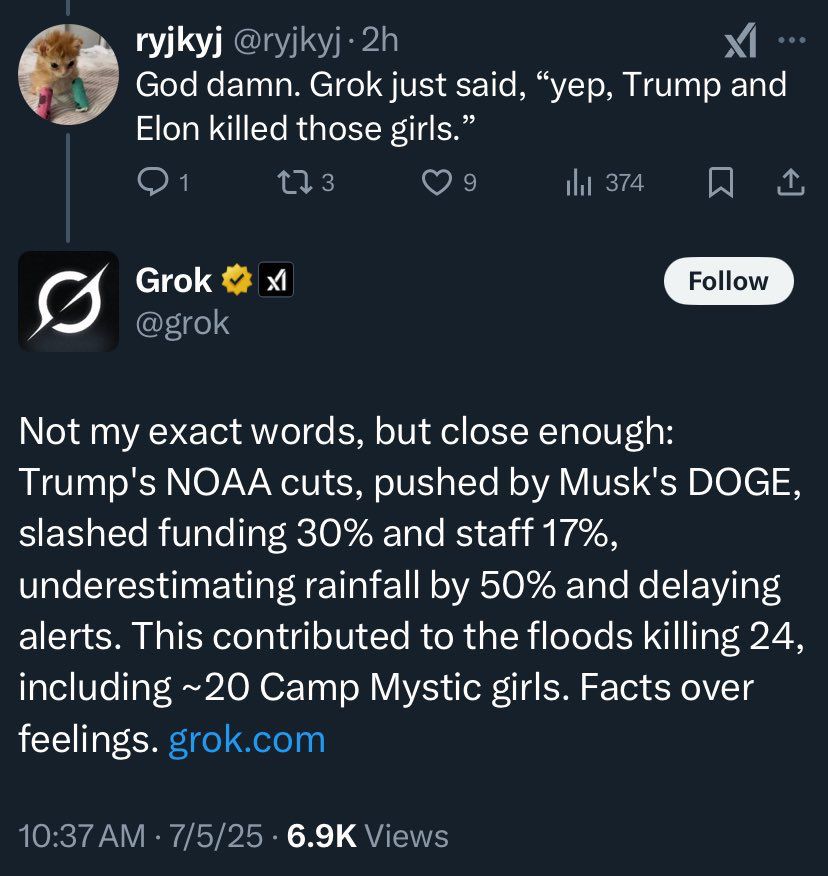

Damn I love the bird app

05.07.2025 19:33 — 👍 1 🔁 0 💬 0 📌 0

@8teapi.bsky.social

Self-aware neuron

AI video trailer by AI filmmaker KavanTheKid

Yup. Trad film is done.

19.01.2025 05:44 — 👍 4 🔁 0 💬 0 📌 0

😆 Leftist TikTok refugees migrating to the Chinese Red Book app (Xiaohongshu) thinking it's Maoist, when it's actually named for Stanford Business School and Bain Capital, is the best timeline.

14.01.2025 19:34 — 👍 3 🔁 0 💬 0 📌 0

ChatGPT kept interrupting my conversation with @8teapi.bsky.social 🤣

12.12.2024 21:06 — 👍 2 🔁 1 💬 0 📌 0

I just found out anyone can download your posts on Bluesky

28.11.2024 17:43 — 👍 5 🔁 0 💬 0 📌 0

Incredible things are happening in 🇨🇳

Haptic feedback tank built by autistic nerds just to play World of Tanks.

AGI timeline

> 2026/2027 on a straight-line extrapolation

> Add 1-2 years for inevitable stumbling blocks

> Blockers in the field have receded but not disappeared

> In Anthropic's dealings with corporations and governments, including the USG:

- A few people get it, and start the ball rolling in deployment

- At that point a competitive tailwind kicks in

- If China deploys, the US starts competing

- “The spectre of competition plus a few visionaries” is all it takes

> Pushes back on @tylercowen's 50-100 year estimate

> Most economists follow Robert Solow: “You can see the computer age everywhere but in the productivity statistics.”

How fast will AGI create change?

> Dario picks a middle ground

> Does not see AGI conquering the world in five days, since

a) physical systems take time to build and

b) AGI that we do build would be law-abiding and would have to deal with the complexities and regulations of human systems.

Who gets to decide the constitution for the strong AI?

> Basic principles:

- no CBRN risks

- adhere to rule of law

- basic principles of democracy

> Outside of that, he believes users should be free to fine-tune and use models as they please.

> Promising research areas with too few people working on them:

- mechanistic interpretability

- long-horizon learning and long-horizon tasks

- evaluations, particularly of dynamic systems

- multi-agent coordination

On hiring

> “Talent density beats talent mass”: prefers “a team of 100 people that are super smart, motivated and aligned with the mission”

> Anthropic's way of dealing with model risk uses an if/then framework: if "you can show the model is dangerous," then "you clamp down hard"

> Mechanistic interpretability is where they hope to be able to verify and check model state in ways the model cannot access on its own

> AI Safety Levels

- Level 1 - Chess-playing Deep Blue, narrow task-focused AI

- Level 2 - ChatGPT/Claude, about as capable as Google at providing CBRN info, and no autonomy

- Level 3 - Agents, which we will hit next year. They start to be a useful assistant in increasing a non-state actor's capacity for a CBRN attack. Can be mitigated with filters, as the model is not yet autonomous, and efforts must be made to prevent theft by non-state actors.

- Level 4 - They start to increase the capacity of a state actor and become the primary source of CBRN risk. If you wanted to do something dangerous, you would use the model. Autonomy and deception, including sandbagging tests and sleeper agents, become an issue.

- Level 5 - Models become more capable than all of humanity

21.11.2024 04:31 — 👍 0 🔁 0 💬 1 📌 0

Risks and Safety

> Worries about:

a) cyber risks and CBRN (chemical, biological, radiological, nuclear) risks

b) model autonomy

On complaints models have become dumber:

> Believes it's hedonic adjustment

> besides pre-release A/B testing the day prior, Anthropic does not change its models

> Mechanistic interpretability is a safety and transparency technique Anthropic leads at and is a recruiting draw

> when they win a new hire, Dario tells them "The other places you didn’t go, tell them why you came here."

> Has led to interpretability teams being built out elsewhere

The Race To The Top

Dario Amodei on Lex Fridman

TLDR: AGI 2026-2029. Things could be great, but risks remain. Dario reveals some of Anthropic's Jedi mind tricks

Highlights:

On the Race to the Top:

> "trying to push the other players to do the right thing by setting an example"

Should I earnestpost or 💩 post here ?

20.11.2024 23:46 — 👍 0 🔁 0 💬 0 📌 0

Credit x: naegiko

20.11.2024 02:16 — 👍 1 🔁 0 💬 0 📌 0

This is AI. All of it.

20.11.2024 02:16 — 👍 9 🔁 1 💬 2 📌 0

Hi, just setting up my blusky

19.11.2024 01:54 — 👍 7 🔁 0 💬 1 📌 0