Cengiz Pehlevan

@cpehlevan.bsky.social

theory of neural networks for natural and artificial intelligence https://pehlevan.seas.harvard.edu/

Applying to do a postdoc or PhD in theoretical ML or neuroscience this year? Consider joining my group (starting next Fall) at UT Austin!

POD Postdoc: oden.utexas.edu/programs-and...
CSEM PhD: oden.utexas.edu/academics/pr...

William Qian, Cengiz Pehlevan: Discovering alternative solutions beyond the simplicity bias in recurrent neural networks https://arxiv.org/abs/2509.21504 https://arxiv.org/pdf/2509.21504 https://arxiv.org/html/2509.21504

29.09.2025 06:50 — 👍 7 🔁 3 💬 0 📌 0

⏳ Less than 1 day left until the Brain & Mind Workshop submission deadline!

🔍 Submit to our Findings or Tutorials track on OpenReview.

Findings track submission: openreview.net/group?id=Neu...

Tutorial track submission: openreview.net/group?id=Neu...

More info: data-brain-mind.github.io

Thank you so much for the kind shoutout! Grateful to be part of such a fantastic team.

07.09.2025 16:45 — 👍 1 🔁 0 💬 0 📌 0

Since I'm back on Bluesky: with @frostedblakess.bsky.social and @cpehlevan.bsky.social, we wrote a brief perspective on how ideas about summary statistics from the statistical physics of learning could potentially help inform neural data analysis... (1/2)

04.09.2025 18:30 — 👍 33 🔁 10 💬 1 📌 0

Excited to share new computational work, led by @jzv.bsky.social, driven by Juan Carlos Fernandez del Castillo + contribution from Farhad Pashakanloo. We recover 3 core motifs in the olfactory system of evolutionarily distant animals using a biophysically-grounded model + efficient coding ideas!

04.09.2025 16:51 — 👍 24 🔁 12 💬 0 📌 1

Great to have this video about my @darpa.mil Artificial Intelligence Quantified (AIQ) program out! Very exciting program with absolutely fantastic teams. Stay tuned for some jaw dropping announcements!

www.youtube.com/watch?v=KVRF...

I am extremely grateful to be awarded the National University of Singapore (NUS) Development Grant, and to be a Young NUS Fellow! I look forward to collaborating with the Yong Loo Lin School of Medicine on exciting projects. This is my first grant, and hopefully the first of many! #NUS #NeuroAI

27.08.2025 14:31 — 👍 8 🔁 1 💬 1 📌 0

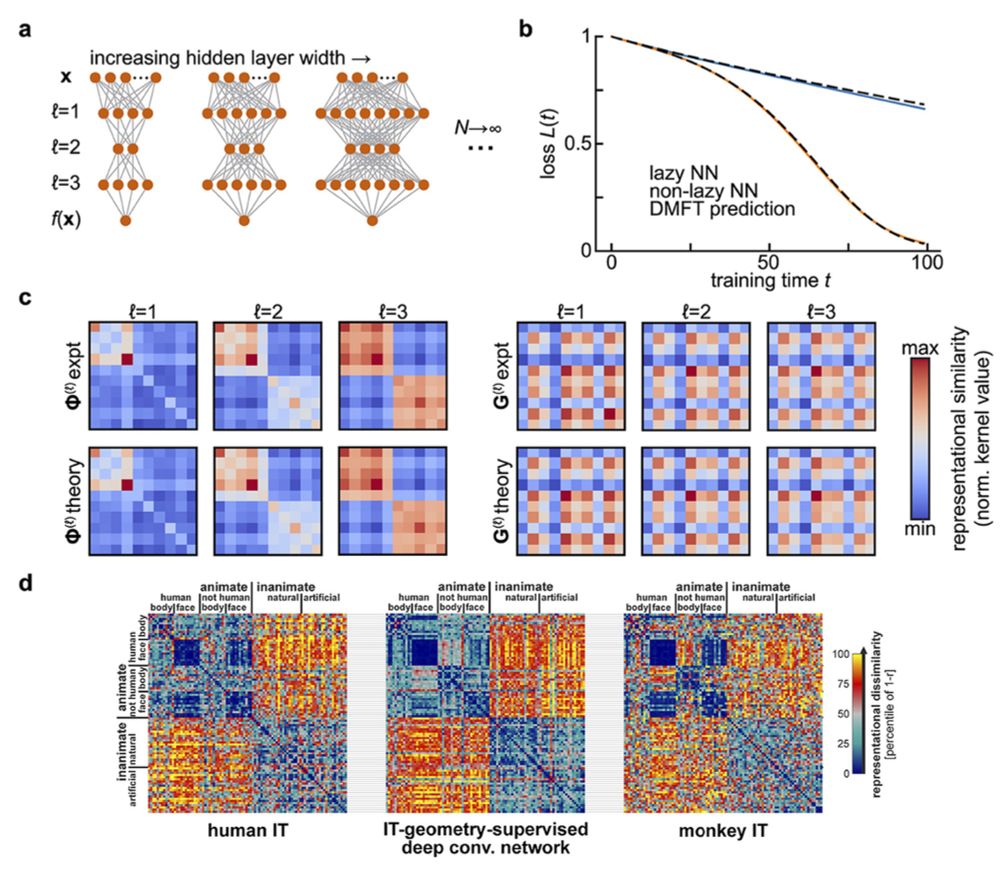

Our new Simons Collaboration on the Physics of Learning and Neural Computation will develop powerful tools from #physics, #math, computer science and theoretical #neuroscience to understand how large neural networks learn, compute, scale, reason and imagine: www.simonsfoundation.org/2025/08/18/s...

19.08.2025 14:43 — 👍 21 🔁 5 💬 0 📌 3

If you work on artificial or natural intelligence and are finishing your PhD, consider applying for a Kempner research fellowship at Harvard:

kempnerinstitute.harvard.edu/kempner-inst...

Congratulations to #KempnerInstitute associate faculty member @cpehlevan.bsky.social for joining the new @simonsfoundation.org Simons Collaboration on the Physics of Learning and Neural Computation!

www.simonsfoundation.org/2025/08/18/s...

#AI #neuroscience #NeuroAI #physics #ANNs

Very excited to lead this new @simonsfoundation.org collaboration on the physics of learning and neural computation to develop powerful tools from physics, math, CS, stats, neuro and more to elucidate the scientific principles underlying AI. See our website for more: www.physicsoflearning.org

18.08.2025 17:48 — 👍 92 🔁 14 💬 4 📌 1

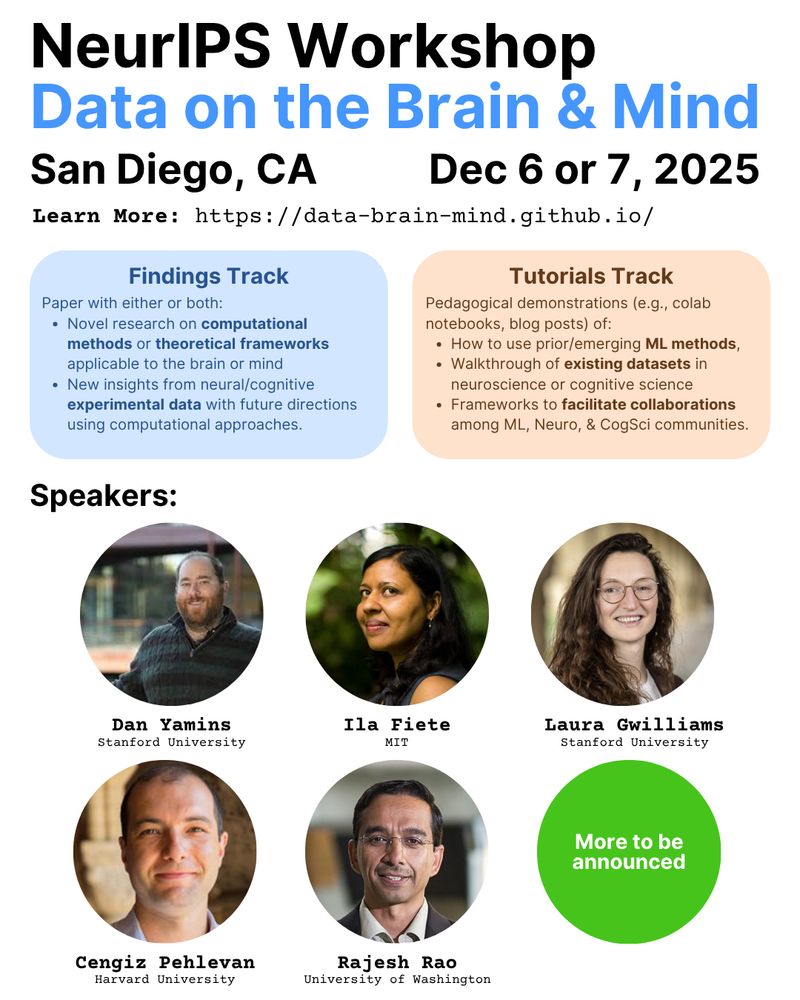

🚨 Excited to announce our #NeurIPS2025 Workshop: Data on the Brain & Mind

📣 Call for: Findings (4- or 8-page) + Tutorials tracks

🎙️ Speakers include @dyamins.bsky.social @lauragwilliams.bsky.social @cpehlevan.bsky.social

🌐 Learn more: data-brain-mind.github.io

The post is based on a paper written with Yue M. Lu, @jzv.bsky.social, Anindita Maiti and @cpehlevan.bsky.social.

Check it out now at PNAS:

doi.org/10.1073/pnas...

(2/2)

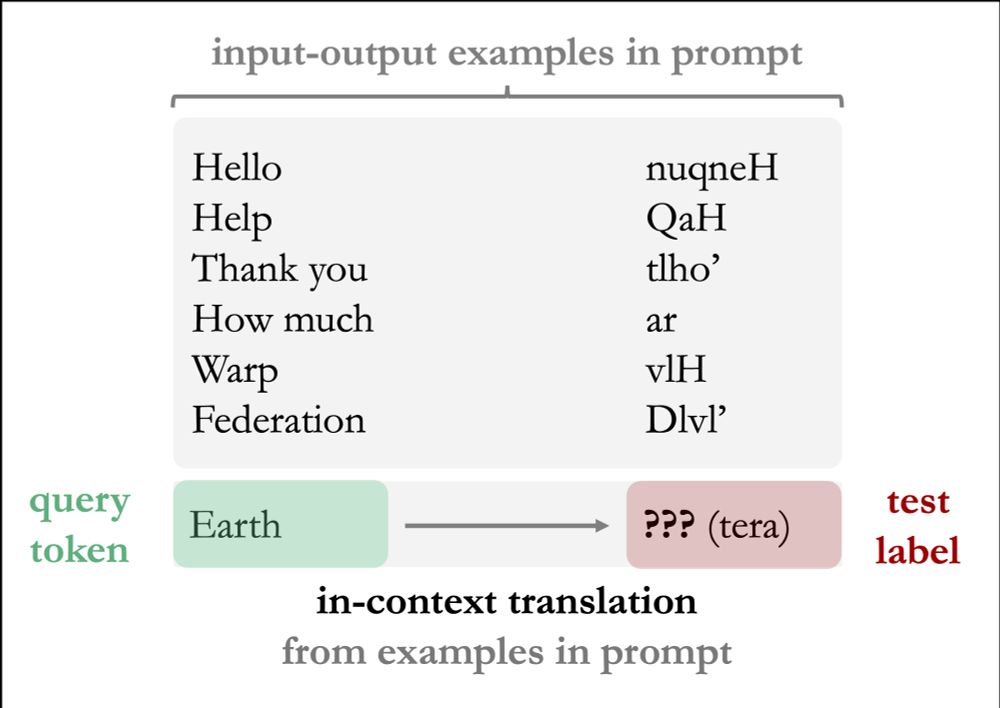

New in the #DeeperLearningBlog: the #KempnerInstitute's Mary Letey presents work recently published in PNAS that offers generalizable insights into in-context learning (ICL) in an analytically solvable model architecture.

bit.ly/4lPK15p

#AI @pnas.org

(1/2)

At #ICML2025, presenting work done at @flatironinstitute.org w Matt Smart and @albertobietti.bsky.social on in-context denoising (arxiv.org/abs/2502.05164). Come to Matt’s oral, Thursday, 4:15-4:30 PM, West Ballroom A, and see us right after at poster #E-3207, 4:30-7:00 PM, East Exhibition Hall A-B.

16.07.2025 18:37 — 👍 5 🔁 3 💬 0 📌 0

Great to see this one finally out in PNAS! Asymptotic theory of in-context learning by linear attention www.pnas.org/doi/10.1073/... Many thanks to my amazing co-authors Yue Lu, Mary Letey, Jacob Zavatone-Veth @jzv.bsky.social and Anindita Maiti!

11.07.2025 07:33 — 👍 21 🔁 5 💬 1 📌 0

#eNeuro: Obeid and Miller identify distinct neural computations in the primary visual cortex that explain how surrounding context suppresses perception of visual figures and features. @harvardseas.bsky.social

vist.ly/3n6tfb2

📣 Grad students and postdocs in computational and theoretical neuroscience: please consider applying for the 2025 Flatiron Institute Junior Theoretical Neuroscience Workshop! All expenses are covered. Apply by April 14. jtnworkshop2025.flatironinstitute.org

09.04.2025 16:11 — 👍 21 🔁 16 💬 0 📌 0

New preprint! We trained an RNN using RL to solve a decision-making task used to characterize suboptimal decision making in patients with schizophrenia. This is my first project exploring comp psych models; thanks to @adam-manoogian.bsky.social @shawnrhoadsphd.bsky.social @bqian.bsky.social @cpehlevan.bsky.social

27.03.2025 17:00 — 👍 10 🔁 5 💬 1 📌 0

Congratulations!

19.02.2025 01:12 — 👍 1 🔁 0 💬 0 📌 0

Honoured to have been selected as a #SloanFellow

Thankful for all the support from family, mentors, collaborators, colleagues and students along the way!

@sloanfoundation.bsky.social

(1/30) New preprint! "Symmetries and continuous attractors in disordered neural circuits" with Larry Abbott and Haim Sompolinsky

bioRxiv: www.biorxiv.org/content/10.1...

Theory in neuroscience, you say? How about this preprint by @david-g-clark.bsky.social, with a couple of others you might recognize? :-) #neuroscience

27.01.2025 00:23 — 👍 35 🔁 9 💬 0 📌 1

Our preprint with @frostedblakess.bsky.social, @jzv.bsky.social, @cpehlevan.bsky.social is out!

We develop a simple reinforcement learning model that recapitulates 3 disparate hippocampal dynamics. Ablation studies show that these representations improve the speed and flexibility of policy learning.

The official ad for this postdoc position is now (finally) live, see academicpositions.harvard.edu/postings/14486!

16.12.2024 14:42 — 👍 10 🔁 5 💬 2 📌 0

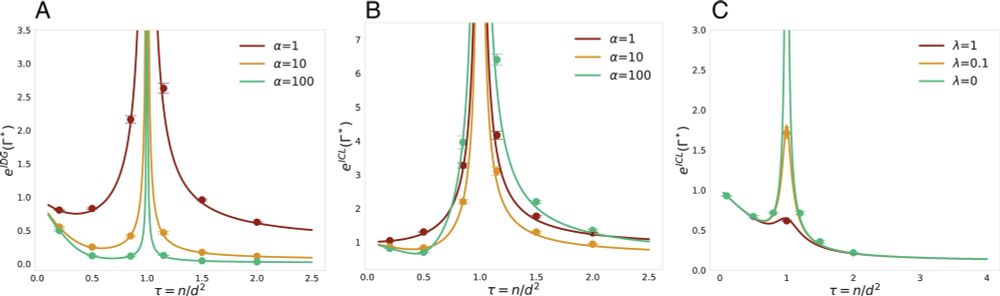

Come by at NeurIPS to hear Hamza present on the properties of various feature-learning infinite-parameter limits of transformer models.

Poster in Hall A-C #4804 at 11 AM PST

Paper arxiv.org/abs/2405.15712 , code github.com/Pehlevan-Gro...

Work with Hamza Chaudhry and @cpehlevan.bsky.social

Excited to share my #NeurIPS2024 paper with @jzv.bsky.social, @BenjaminSRuben, and @cpehlevan.bsky.social on mechanistic mismatches in data-constrained models of neural dynamics! (1/n)

12.12.2024 19:51 — 👍 37 🔁 10 💬 1 📌 3

I have to check how these works use/interpret these equations, but typically, in your notation, r is interpreted as firing rate, and x is current.

11.12.2024 20:02 — 👍 0 🔁 0 💬 0 📌 0