Don't miss the next Statistics and DSI Joint Colloquium!

@uuujf.bsky.social, a postdoctoral fellow at the Simons Institute at @ucberkeleyofficial.bsky.social, presents 'Towards a Less Conservative Theory of Machine Learning: Unstable Optimization and Implicit Regularization' on Thursday, February 5th.

29.01.2026 15:05

slides: uuujf.github.io/postdoc/wu20...

26.09.2025 03:49

GD dominates ridge

sharing a new paper w/ Peter Bartlett, @jasondeanlee.bsky.social @shamkakade.bsky.social, Bin Yu

People talk about implicit regularization, but how good is it? We show it's surprisingly effective: GD dominates ridge for linear regression, w/ more cool stuff on GD vs. SGD.

arxiv.org/abs/2509.17251
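The post gives the headline result but not the mechanism. As rough intuition only (not the paper's analysis), early-stopped GD and ridge can both be read as shrinking the least-squares solution along each eigendirection of the data covariance. This sketch assumes a noiseless population objective with a hypothetical diagonal covariance `lams` and target `w_star`, runs GD from zero, and compares the iterate with the closed-form GD and ridge shrinkage factors.

```python
"""Early-stopped GD vs. ridge as per-direction shrinkage (illustrative sketch).

Assumption: noiseless population least squares with diagonal covariance,
L(w) = 1/2 * sum_i lam_i * (w_i - w_star_i)^2, chosen only for illustration.
"""


def gd_path(lams, w_star, eta, steps):
    """Run `steps` iterations of GD from w = 0 with constant stepsize `eta`."""
    w = [0.0] * len(lams)
    for _ in range(steps):
        # Coordinate i of the gradient is lam_i * (w_i - w_star_i).
        w = [wi - eta * li * (wi - si) for wi, li, si in zip(w, lams, w_star)]
    return w


def gd_shrinkage(lams, eta, steps):
    """Fraction of w_star recovered by GD per direction: 1 - (1 - eta*lam)^steps."""
    return [1.0 - (1.0 - eta * li) ** steps for li in lams]


def ridge_shrinkage(lams, alpha):
    """Fraction of w_star recovered by ridge with penalty alpha/2 * ||w||^2."""
    return [li / (li + alpha) for li in lams]


if __name__ == "__main__":
    lams = [1.0, 0.1, 0.01]   # hypothetical covariance spectrum
    w_star = [1.0, 1.0, 1.0]  # hypothetical target
    eta, steps = 1.0, 10
    print("GD iterate:     ", gd_path(lams, w_star, eta, steps))
    print("GD shrinkage:   ", gd_shrinkage(lams, eta, steps))
    # A common heuristic pairs t steps of GD with a ridge penalty alpha ~ 1/(eta*t).
    print("ridge shrinkage:", ridge_shrinkage(lams, 1.0 / (eta * steps)))
```

Both filters leave high-variance directions nearly untouched and shrink low-variance ones heavily; the claim that GD *dominates* ridge is a precise risk comparison that this toy does not reproduce.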

26.09.2025 03:49

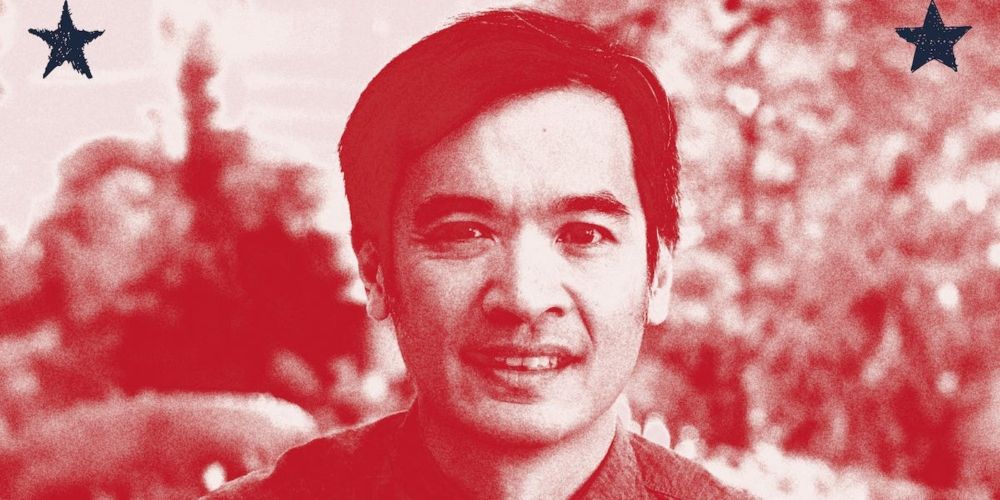

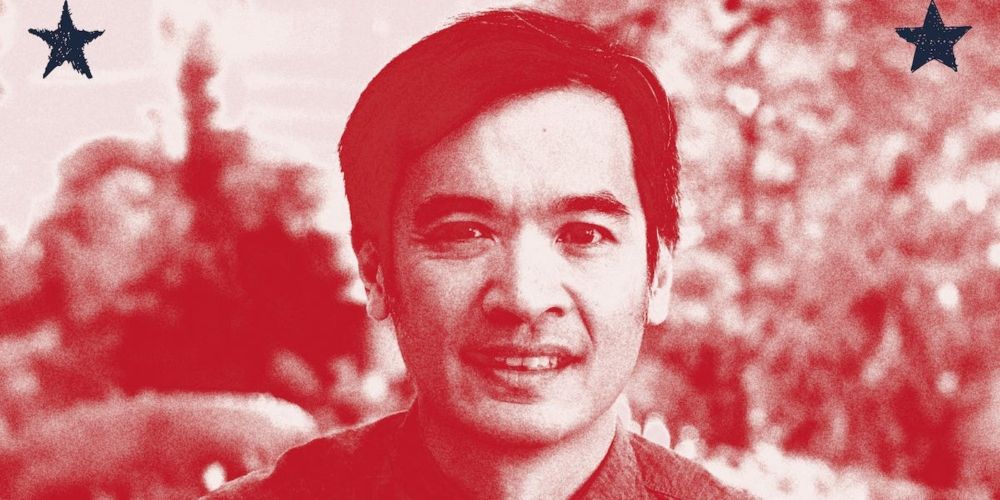

I’m an award-winning mathematician. Trump just cut my funding.

The “Mozart of Math” tried to stay out of politics. Then it came for his research.

I wrote an op-ed on the world-class STEM research ecosystem in the United States, and how this ecosystem is now under attack on multiple fronts by the current administration: newsletter.ofthebrave.org/p/im-an-awar...

18.08.2025 15:45

📣Join us at COLT 2025 in Lyon for a community event!

📅When: Mon, June 30 | 16:00 CET

What: Fireside chat w/ Peter Bartlett & Vitaly Feldman on communicating a research agenda, followed by a mentorship roundtable to practice elevator pitches & mingle w/ the COLT community!

let-all.com/colt25.html

24.06.2025 18:22

effects of stepsize for GD

Sharing two new papers on accelerating GD via large stepsizes!

Classical GD analysis assumes small stepsizes for stability. However, in practice, GD is often used with large stepsizes, which lead to instability.

See my slides for more details on this topic: uuujf.github.io/postdoc/wu20...
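The classical stability condition can be seen on a one-dimensional quadratic f(x) = (c/2) x², where each GD step multiplies x by (1 − ηc), so the iterates shrink only when η < 2/c. For the average logistic loss, the analogous classical condition uses the smoothness constant, which is at most max‖x‖²/4. The sketch below (data and function names are hypothetical) implements both, so a classically stable stepsize can be compared with a much larger one; it does not reproduce the papers' results.

```python
import math


def quadratic_gd(c, eta, x0, steps):
    """GD on f(x) = c/2 * x^2; every step maps x to (1 - eta*c) * x."""
    xs = [x0]
    for _ in range(steps):
        xs.append(xs[-1] - eta * c * xs[-1])
    return xs


def _softplus_neg(m):
    """Numerically stable log(1 + exp(-m))."""
    return math.log1p(math.exp(-m)) if m >= 0 else -m + math.log1p(math.exp(m))


def _sigmoid_neg(m):
    """Numerically stable sigmoid(-m) = 1 / (1 + exp(m))."""
    if m >= 0:
        e = math.exp(-m)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(m))


def logistic_gd(data, eta, steps):
    """Constant-stepsize GD from w = 0 on the average logistic loss.

    `data` is a list of (x, y) pairs, x a tuple of floats and y in {-1, +1}.
    Returns the final weights and the loss before and after every step.
    """
    n, dim = len(data), len(data[0][0])
    w = [0.0] * dim

    def margins(w):
        return [y * sum(wi * xi for wi, xi in zip(w, x)) for x, y in data]

    losses = [sum(_softplus_neg(m) for m in margins(w)) / n]
    for _ in range(steps):
        grad = [0.0] * dim
        for (x, y), m in zip(data, margins(w)):
            # d/dw log(1 + exp(-y w.x)) = -sigmoid(-y w.x) * y * x
            s = _sigmoid_neg(m)
            for i, xi in enumerate(x):
                grad[i] -= s * y * xi / n
        w = [wi - eta * gi for wi, gi in zip(w, grad)]
        losses.append(sum(_softplus_neg(m) for m in margins(w)) / n)
    return w, losses
```

With the hypothetical data `[((1.0, 0.5), 1), ((-1.0, 0.5), -1), ((0.3, -1.0), 1)]`, max‖x‖² = 1.25, so any η < 2/(1.25/4) = 6.4 is classically stable and `logistic_gd(data, 1.0, 50)` decreases the loss at every step; a much larger η leaves the regime the classical analysis covers.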

04.06.2025 18:55

Jingfeng Wu, Pierre Marion, Peter Bartlett

Large Stepsizes Accelerate Gradient Descent for Regularized Logistic Regression

https://arxiv.org/abs/2506.02336
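For context on the baseline that "accelerate" is measured against: adding an L2 penalty (λ/2)‖w‖² makes the average logistic loss λ-strongly convex and at most (max‖x‖²/4 + λ)-smooth, and classical GD with a stepsize on the order of 1/smoothness needs O(κ log(1/ε)) steps, where κ = smoothness/λ. The sketch below (hypothetical names and data) implements constant-stepsize GD on this objective and computes that bound on κ; it covers only the classical stable regime, not the large-stepsize scheme studied in the paper.

```python
import math


def _sigmoid_neg(m):
    """Numerically stable sigmoid(-m) = 1 / (1 + exp(m))."""
    if m >= 0:
        e = math.exp(-m)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(m))


def reg_logistic_grad(w, data, lam):
    """Gradient of (1/n) * sum log(1 + exp(-y w.x)) + lam/2 * ||w||^2."""
    n = len(data)
    grad = [lam * wi for wi in w]
    for x, y in data:
        s = _sigmoid_neg(y * sum(wi * xi for wi, xi in zip(w, x)))
        for i, xi in enumerate(x):
            grad[i] -= s * y * xi / n
    return grad


def condition_number(data, lam):
    """Smoothness upper bound (max ||x||^2 / 4 + lam) over strong convexity lam."""
    smooth = max(sum(xi * xi for xi in x) for x, _ in data) / 4.0 + lam
    return smooth / lam


def reg_logistic_gd(data, lam, eta, steps):
    """Constant-stepsize GD from w = 0; returns the final weights."""
    w = [0.0] * len(data[0][0])
    for _ in range(steps):
        grad = reg_logistic_grad(w, data, lam)
        w = [wi - eta * gi for wi, gi in zip(w, grad)]
    return w
```

On small toy data with λ = 0.1 the bound on κ is only about 4, so the problem is easy; acceleration only matters when κ is large, i.e. when λ is small.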

04.06.2025 05:26

Announcing the first workshop on Foundations of Post-Training (FoPT) at COLT 2025!

📝 Soliciting abstracts/posters exploring theoretical & practical aspects of post-training and RL with language models!

🗓️ Deadline: May 19, 2025

09.05.2025 17:09

We were very lucky to have Peter Bartlett visit @uwcheritoncs.bsky.social and give a Distinguished Lecture on "Gradient Optimization Methods: The Benefits of a Large Step-size." Very interesting and surprising results.

(Recording will be available eventually)

07.05.2025 10:11

Ruiqi Zhang, Jingfeng Wu, Licong Lin, Peter L. Bartlett

Minimax Optimal Convergence of Gradient Descent in Logistic Regression via Large and Adaptive Stepsizes

https://arxiv.org/abs/2504.04105
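The title pairs large stepsizes with adaptive ones. One risk-adaptive rule, assumed here for illustration rather than taken from the post, divides a base stepsize η by the current empirical risk, η_t = η / L(w_t), so steps grow as the risk falls. The sketch below (hypothetical names and data) applies that rule to logistic regression on a small linearly separable dataset; it illustrates the update only and makes no claim about the paper's rates.

```python
import math


def _softplus_neg(m):
    """Numerically stable log(1 + exp(-m))."""
    return math.log1p(math.exp(-m)) if m >= 0 else -m + math.log1p(math.exp(m))


def _sigmoid_neg(m):
    """Numerically stable sigmoid(-m) = 1 / (1 + exp(m))."""
    if m >= 0:
        e = math.exp(-m)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(m))


def adaptive_gd(data, eta, steps):
    """GD on the average logistic loss with stepsize eta / risk(w_t) (assumed rule).

    `data` is a list of (x, y) pairs with y in {-1, +1}. Returns the risk before
    the first step and after each step; stops early if the risk underflows to
    zero, since the stepsize would then be undefined.
    """
    n, dim = len(data), len(data[0][0])
    w = [0.0] * dim

    def margins(w):
        return [y * sum(wi * xi for wi, xi in zip(w, x)) for x, y in data]

    def risk(ms):
        return sum(_softplus_neg(m) for m in ms) / n

    ms = margins(w)
    risks = [risk(ms)]
    for _ in range(steps):
        if risks[-1] == 0.0:
            break  # risk underflowed; eta / risk would be undefined
        grad = [0.0] * dim
        for (x, y), m in zip(data, ms):
            s = _sigmoid_neg(m)
            for i, xi in enumerate(x):
                grad[i] -= s * y * xi / n
        step = eta / risks[-1]  # large when the current risk is small
        w = [wi - step * gi for wi, gi in zip(w, grad)]
        ms = margins(w)
        risks.append(risk(ms))
    return risks
```

On the symmetric toy data `[((1.0, 0.5), 1), ((-1.0, 0.5), -1)]` the margin grows by roughly η per step once the risk is small, so the risk drops by about a factor of e^η each step.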

08.04.2025 05:26