If you are at NeurIPS I encourage you to check out Gasser's poster showing his ongoing work on models of speech perception.

06.12.2025 19:45 — 👍 0 🔁 0 💬 0 📌 0


🚨I am looking for a POSTDOC, LAB MANAGER/TECH and GRAD STUDENTS to join my new lab in beautiful Madison, WI.

We study how our brains perceive and represent the physical world around us using behavioral, computational, and neuroimaging methods.

paulunlab.psych.wisc.edu

#VisionScience #NeuroSkyence

17.11.2025 21:43 — 👍 48 🔁 41 💬 1 📌 4

82. A model of continuous speech recognition reveals the role of context in human speech perception - Gasser Elbanna

96. Machine learning models of hearing demonstrate the limits of attentional selection of speech heard through cochlear implants - Annesya Banerjee

14.11.2025 15:27 — 👍 0 🔁 0 💬 0 📌 0

58. Optimized models of uncertainty explain human confidence in auditory perception - Lakshmi Govindarajan

64. Source-location binding errors in auditory scene perception - Sagarika Alavilli

14.11.2025 15:27 — 👍 1 🔁 0 💬 1 📌 0

If you are at APAN today, check out these posters from members of our lab:

1. Cross-culturally shared sensitivity to harmonic structure underlies some aspects of pitch perception - Malinda McPherson-McNato

14.11.2025 15:27 — 👍 1 🔁 0 💬 1 📌 0

Lakshmi happens to be on the job market right now, so I will end by recommending that everyone try to hire him. He is a wonderful colleague, and I expect he will do many other important things. (end)

09.11.2025 21:34 — 👍 4 🔁 0 💬 0 📌 0

Added bonus: expressing stimulus-dependent uncertainty enables models that perform regression (i.e., yield continuous-valued outputs), which has previously not worked very well. (15/n)

09.11.2025 21:34 — 👍 1 🔁 0 💬 1 📌 0

Lots more in the paper. The approach is applicable to any perceptual estimation problem. We hope it will enable the study of confidence to be extended to more realistic conditions, via models that can operate on images or sounds. (14/n)

09.11.2025 21:34 — 👍 2 🔁 0 💬 1 📌 0

He measured human confidence for pitch, and found that confidence was higher for conditions with lower discrimination thresholds. The model reproduced this general trend. (13/n)

09.11.2025 21:34 — 👍 1 🔁 0 💬 1 📌 0

Lakshmi used the same framework to build models of pitch perception that represent uncertainty. The models generate a distribution over fundamental frequency. (12/n)

09.11.2025 21:34 — 👍 1 🔁 0 💬 1 📌 0

By contrast, simulating bets using the softmax distribution of a standard classification-based neural network does not yield human-like confidence, presumably because the distribution is not incentivized to have correct uncertainty. (11/n)

09.11.2025 21:34 — 👍 1 🔁 0 💬 1 📌 0

The model can also be used to select natural sounds whose localization is certain or uncertain. When these sounds are presented to humans, they place higher bets on the sounds with low model uncertainty, and lower bets on the sounds with high model uncertainty. (10/n)

09.11.2025 21:34 — 👍 1 🔁 0 💬 1 📌 0

The model replicates patterns of localization accuracy (like previous models) but also replicates the dependence of confidence on conditions. Here confidence is lower for sounds with narrower spectra, and at peripheral locations: (9/n)

09.11.2025 21:34 — 👍 1 🔁 0 💬 1 📌 0

To simulate betting behavior from the model, he mapped a measure of the model posterior spread to a bet (in cents). (8/n)

09.11.2025 21:34 — 👍 1 🔁 0 💬 1 📌 0
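
To make the spread-to-bet step concrete, here is a minimal sketch. The post does not say which spread measure or mapping was used, so everything here is an assumption for illustration: `entropy` as the spread measure, a linear mapping, the `max_bet` of 10 cents, and the function name `bet_in_cents` are all hypothetical.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discretized posterior."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def bet_in_cents(probs, max_bet=10):
    """Map posterior spread to a bet: narrow posterior -> large bet.

    Hypothetical linear mapping; the actual mapping is not given in the post.
    Assumes `probs` has at least two bins and sums to 1.
    """
    max_entropy = math.log(len(probs))  # entropy of a uniform posterior
    certainty = 1.0 - entropy(probs) / max_entropy
    return round(max_bet * certainty)


# A narrow posterior over four location bins versus a flat one.
narrow_posterior = [0.97, 0.01, 0.01, 0.01]
broad_posterior = [0.25, 0.25, 0.25, 0.25]
narrow_bet = bet_in_cents(narrow_posterior)
broad_bet = bet_in_cents(broad_posterior)
```

With this mapping, a flat posterior yields the smallest possible bet and a sharply peaked one yields a bet near `max_bet`.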

Lakshmi then tested whether the model’s uncertainty was predictive of human confidence judgments. He ran experiments in which people localized sounds and then placed bets on their localization judgment: (7/n)

09.11.2025 21:34 — 👍 2 🔁 0 💬 1 📌 0

The model was trained on spatial renderings of lots of natural sounds in lots of different rooms. Once trained, it produces narrow posteriors for some sounds, and broad posteriors for others: (6/n)

09.11.2025 21:34 — 👍 1 🔁 0 💬 1 📌 0

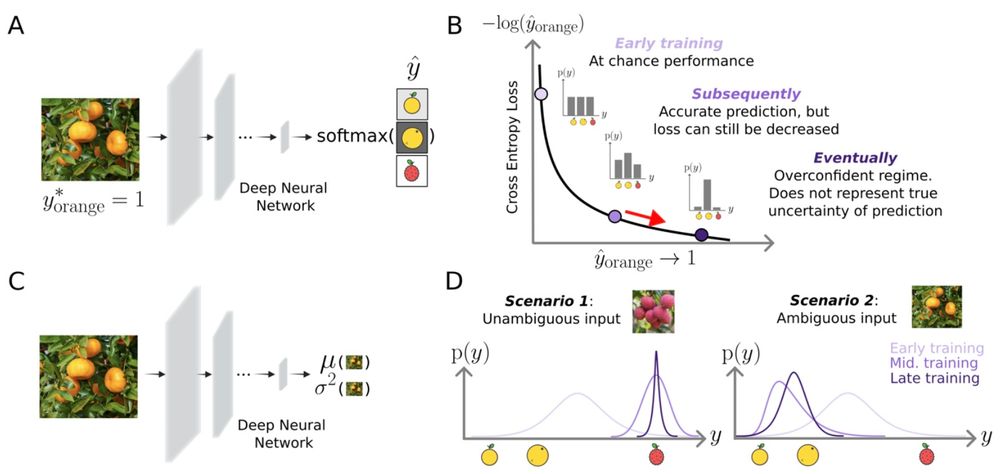

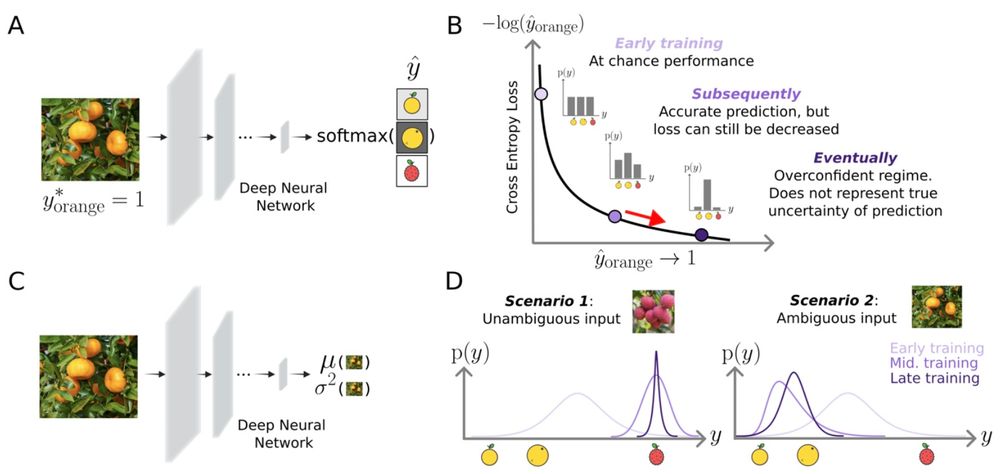

He first applied this idea to sound localization. The model takes binaural audio as input and estimates parameters of a mixture distribution over a sphere. Distributions can be narrow, broad, or multi-modal, depending on the stimulus. (5/n)

09.11.2025 21:34 — 👍 1 🔁 0 💬 1 📌 0
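
As a rough illustration of what a mixture distribution over a sphere can look like, the sketch below uses von Mises-Fisher components. That component family is an assumption (the post only says "mixture distribution"), and the function names are hypothetical.

```python
import math


def vmf_log_density(x, mu, kappa):
    """Log density of a von Mises-Fisher distribution on the unit sphere in 3D.

    x and mu are unit vectors; kappa > 0 controls concentration
    (large kappa = narrow, small kappa = broad).
    """
    dot = sum(a * b for a, b in zip(x, mu))
    # Numerically stable form of log(kappa / (4*pi*sinh(kappa))) + kappa*dot.
    return (math.log(kappa) - math.log(2.0 * math.pi)
            - math.log1p(-math.exp(-2.0 * kappa)) + kappa * (dot - 1.0))


def mixture_log_density(x, weights, mus, kappas):
    """Log density of a weighted mixture of vMF components (log-sum-exp)."""
    terms = [math.log(w) + vmf_log_density(x, mu, k)
             for w, mu, k in zip(weights, mus, kappas)]
    m = max(terms)
    return m + math.log(sum(math.exp(t - m) for t in terms))


front, back = (1.0, 0.0, 0.0), (-1.0, 0.0, 0.0)

# Narrow versus broad single-component distributions, evaluated at the mode.
narrow_at_mode = mixture_log_density(front, [1.0], [front], [50.0])
broad_at_mode = mixture_log_density(front, [1.0], [front], [1.0])

# A two-mode distribution with equal mass in two opposite directions.
bimodal_front = mixture_log_density(front, [0.5, 0.5], [front, back], [20.0, 20.0])
bimodal_back = mixture_log_density(back, [0.5, 0.5], [front, back], [20.0, 20.0])
```

A network in this style would output the weights, mean directions, and concentrations for each input, rather than a single location estimate.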

Lakshmi realized that models could be trained to output parameters of distributions, and that by optimizing models with a log-likelihood loss function, the model is incentivized to correctly represent uncertainty. (4/n)

09.11.2025 21:34 — 👍 3 🔁 0 💬 1 📌 0
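
A toy example of why a log-likelihood loss rewards correct uncertainty, assuming a one-dimensional Gaussian output (the distribution family and the name `gaussian_nll` are illustrative choices, not taken from the paper):

```python
import math


def gaussian_nll(target, mean, log_std):
    """Negative log-likelihood of `target` under N(mean, exp(log_std)**2)."""
    std = math.exp(log_std)
    z = (target - mean) / std
    return 0.5 * z * z + log_std + 0.5 * math.log(2.0 * math.pi)


# Same prediction error (1.0) with three different claimed uncertainties.
overconfident = gaussian_nll(1.0, 0.0, math.log(0.1))    # claims std = 0.1
matched = gaussian_nll(1.0, 0.0, math.log(1.0))          # claims std = 1.0
underconfident = gaussian_nll(1.0, 0.0, math.log(10.0))  # claims std = 10
```

The loss is lowest when the claimed spread matches the actual error, and it penalizes both over- and under-confidence, so a model that outputs `mean` and `log_std` is pushed toward honest uncertainty.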

Standard neural networks are not suitable for several reasons, one being that cross-entropy loss is known to induce over-confidence. (3/n)

09.11.2025 21:34 — 👍 4 🔁 0 💬 1 📌 0

Uncertainty is inevitable in perception. It seems like it would be useful for people to explicitly represent it. But it has been hard to study in realistic conditions, as we haven’t had stimulus-computable models. (2/n)

09.11.2025 21:34 — 👍 1 🔁 0 💬 1 📌 0

if you see this post, your actions are:

- if you have a spare buck, give it to Wikipedia, then repost this

- if you don't have a spare buck, just repost

your action is mandatory for the world's best source of information to survive

26.12.2024 12:03 — 👍 27360 🔁 35635 💬 258 📌 398

Excited that this work discovering cross-species signatures of stabilizing foot placement control is now out in PNAS!

pnas.org/doi/10.1073/...

@antoinecomite.bsky.social

21.10.2025 21:39 — 👍 49 🔁 9 💬 1 📌 3

Want to make publication-ready figures straight from Python without any manual editing? Are you fed up with axis labels being unreadable during your presentations? Follow this short tutorial including code examples! 👇🧵

16.10.2025 08:26 — 👍 156 🔁 44 💬 2 📌 4

DeckerLab

Excited to share that I'm joining WashU in January as an Assistant Prof in Psych & Brain Sciences! 🧠✨

I'm also recruiting grad students to start next September - come hang out with us! Details about our lab here: www.deckerlab.com

Reposts are very welcome! 🙌 Please help spread the word!

01.10.2025 18:30 — 👍 77 🔁 33 💬 6 📌 1

Apply - Interfolio


Brown’s Department of Cognitive & Psychological Sciences is hiring a tenure-track Assistant Professor, working in the area of AI and the Mind (start July 1, 2026). Apply by Nov 8, 2025 👉 apply.interfolio.com/173939

#AI #CognitiveScience #AcademicJobs #BrownUniversity

23.09.2025 17:51 — 👍 40 🔁 26 💬 2 📌 2

Variance partitioning is used to quantify the overlap of two models. Over the years, I have found that this can be a very confusing and misleading concept. So we finally decided to write a short blog post to explain why.

@martinhebart.bsky.social @gallantlab.org

diedrichsenlab.org/BrainDataSci...

10.09.2025 16:58 — 👍 66 🔁 22 💬 2 📌 5
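
For readers unfamiliar with the technique, here is a minimal sketch of the standard two-model variance partitioning arithmetic. The R² values are made up for illustration, and the example is not taken from the linked blog; it only shows one well-known way the numbers can be hard to interpret (the "shared" term can come out negative).

```python
def partition_variance(r2_a, r2_b, r2_ab):
    """Split explained variance given R^2 of model A, model B, and A+B combined.

    Returns (unique_a, unique_b, shared).
    """
    unique_a = r2_ab - r2_b           # what A adds on top of B
    unique_b = r2_ab - r2_a           # what B adds on top of A
    shared = r2_a + r2_b - r2_ab      # the nominal "overlap"
    return unique_a, unique_b, shared


# Hypothetical suppression case: A alone explains nothing, B alone explains
# half the variance, and together they explain all of it.
unique_a, unique_b, shared = partition_variance(r2_a=0.0, r2_b=0.5, r2_ab=1.0)
```

Here `shared` is negative, which cannot be read literally as an amount of overlapping variance.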

PhD student @ MIT Brain and Cognitive Sciences

aliciamchen.github.io

CNRS Researcher at CRNL. Research Specialist at Karolinska Institutet, Stockholm. Studying the prefrontal cortex and cognition in rodents. Electronic musician.

lab : https://www.lab.pierrelemerre.com/

personal webpage: https://www.pierrelemerre.com/

Professor, Northwestern University

Computational neuroscience | Neural manifolds

neuroscience and behavior in parrots and songbirds

Simons junior fellow and post-doc at NYU Langone studying vocal communication, PhD MIT brain and cognitive sciences

Research Scientist at Google DeepMind: Environmental sound understanding

Husband, Father and grandfather, Datahound, Dog lover, Fan of Celtic music, Former NIGMS director, Former EiC of Science magazine, Stand Up for Science advisor, Pittsburgh, PA

NIH Dashboard: https://jeremymberg.github.io/jeremyberg.github.io/index.html

Professor, Stanford

Vision Neuroscientist

Interested in how the interplay between brain function, structure & computations enables visual perception, and also in what we are born with and what develops.

Neuroscientist and psychologist, ex-pat kiwi slowly reverting to my Scottish roots at UoG. Researching mental map formation in rats and humans. she/her

Professor of Signal Processing

Head of Department of Informatics

@kingscollegelondon.bsky.social

Graduate student in Brain and Cognitive Sciences at MIT studying spatial hearing in Josh McDermott’s lab | sometimes climbs

We study the mathematical principles of learning, perception & action in brains & machines. Funded by the Gatsby Charitable Foundation. Based at UCL. www.ucl.ac.uk/life-sciences/gatsby

MGMT: micky@circleeightmgmt.com

Bookings UK/Europe: mike.robinson@arcade-talent.com

North & South America: brent@good-direction.agency

Retired professor of psychology at University of Oxford. Interests in developmental neuropsychology and improving science. Blogs at deevybee.blogspot.com

Boston bartender, cocktail blogger, author of Drink & Tell: A Boston Cocktail Book and Boston Cocktails: Drunk & Told. He/him.

Mathematician, writer, Cornell professor. All cards on the table, face up, all the time. www.stevenstrogatz.com

How do we move? I study brains and machines at York University (Assistant Professor). Full-time human.

Neural Control & Computation Lab

www.ncclab.ca

Studying language in biological brains and artificial ones at the Kempner Institute at Harvard University.

www.tuckute.com

Computational Cognitive Scientist 🧠🤖 • NeuroAI, Predictive Coding, RL & Deep Learning, Complex Systems • Postdoc at @siegellab.bsky.social, @unituebingen.bsky.social • Husband & Dad

🎓 https://scholar.google.com/citations?hl=en&user=k5eR8_oAAAAJ