📄 Read the full paper:

SANSA: Unleashing the Hidden Semantics in SAM2 for Few-Shot Segmentation

Now on arXiv → arxiv.org/abs/2505.21795

Gabriele Trivigno

@gabtriv.bsky.social

PhD in Computer Vision

🔹 4/4 – Promptable segmentation in action

SANSA reduces reliance on costly pixel-level masks by supporting point, box, and scribble prompts.

📈 This enables fast, scalable annotation with minimal supervision.

See the qualitative results 👇

🔹 3/4 – SANSA achieves state-of-the-art in few-shot segmentation. We outperform specialist and foundation-based methods across various benchmarks:

📈 +9.3% mIoU on LVIS-92i

⚡ 3× faster than prior works

💡 Only 234M parameters (4-5× smaller than competitors)

🔹 2/4 – Unlocking semantic structure

SAM2 features are rich, but optimized for tracking.

🧠 Insert bottleneck adapters into frozen SAM2

📉 These restructure feature space to disentangle semantics

📈 Result: features cluster semantically—even for unseen classes (see PCA👇)
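For readers unfamiliar with the term, here is a minimal sketch of a generic residual bottleneck adapter applied to a frozen feature vector. It is not the SANSA implementation: the class name `BottleneckAdapter`, the dimensions, the ReLU, and the zero-initialized up-projection are all assumptions chosen to illustrate the general idea in plain Python.

```python
import random


def matvec(weights, vector):
    """Multiply a (rows x cols) weight matrix by a vector of length cols."""
    return [sum(w * x for w, x in zip(row, vector)) for row in weights]


class BottleneckAdapter:
    """Generic residual adapter: x + W_up * relu(W_down * x).

    Hypothetical illustration only; not taken from the SANSA code.
    """

    def __init__(self, dim, bottleneck_dim, seed=0):
        rng = random.Random(seed)
        # Down-projection to the narrow bottleneck: small random weights.
        self.w_down = [
            [rng.gauss(0.0, 0.02) for _ in range(dim)]
            for _ in range(bottleneck_dim)
        ]
        # Up-projection back to the feature size: zeros (an assumed,
        # commonly used choice), so the adapter starts as the identity.
        self.w_up = [[0.0] * bottleneck_dim for _ in range(dim)]

    def __call__(self, feature):
        hidden = [max(0.0, h) for h in matvec(self.w_down, feature)]
        delta = matvec(self.w_up, hidden)
        # Residual connection: the frozen feature passes through unchanged,
        # and only the learned correction `delta` is added on top.
        return [x + d for x, d in zip(feature, delta)]


adapter = BottleneckAdapter(dim=8, bottleneck_dim=2)
frozen_feature = [0.5, -1.0, 0.25, 0.0, 1.5, -0.75, 2.0, 0.1]
adapted = adapter(frozen_feature)
```

With the zero-initialized up-projection, `adapted` equals `frozen_feature` before any training. Only the two small projection matrices would be trained, while the backbone that produced the feature stays frozen.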

🚀 As #CVPR2025 week kicks off, meet SANSA: Semantically AligNed Segment Anything 2

We turn SAM2 into a semantic few-shot segmenter:

🧠 Unlocks latent semantics in frozen SAM2

✏️ Supports any prompt: fast and scalable annotation

📦 No extra encoders

📎 github.com/ClaudiaCutta...

#ICCV2025

11.05.2025 15:05 — 👍 4 🔁 1 💬 1 📌 0

I guess merging the events could also work 😂 I wonder whether cricket players would be better at computer vision than CV researchers are at cricket, or vice versa

11.05.2025 15:47 — 👍 1 🔁 0 💬 0 📌 0

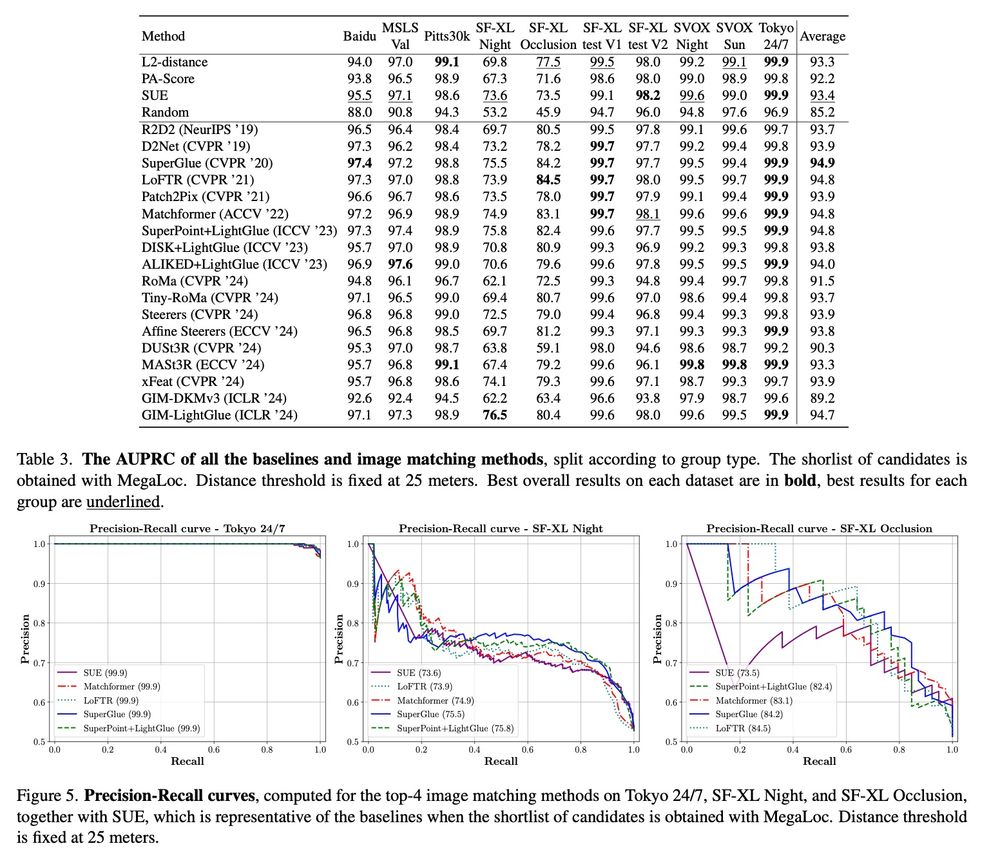

To Match or Not to Match: Revisiting Image Matching for Reliable Visual Place Recognition

Davide Sferrazza, @berton-gabri.bsky.social, Gabriele Trivigno, Carlo Masone

tl;dr: global descriptors nowadays are often better than local feature matching methods for simple datasets.

arxiv.org/abs/2504.06116

✨ SAMWISE achieves state-of-the-art performance across multiple #RVOS benchmarks—while being the smallest model in RVOS! 🎯 It also sets a new #SOTA in image-level referring #segmentation. With only 4.9M trainable parameters, it runs #online and requires no fine-tuning of SAM2 🚀

10.04.2025 18:11 — 👍 0 🔁 0 💬 0 📌 0

🚀 Contributions:

🔹 Textual Prompts for SAM2: Early fusion of visual-text cues via a novel adapter

🔹 Temporal Modeling: Essential for video understanding, beyond frame-by-frame object tracking

🔹 Tracking Bias: Correcting tracking bias in SAM2 for text-aligned object discovery

🔥 Our paper SAMWISE: Infusing Wisdom in SAM2 for Text-Driven Video Segmentation is accepted as a #Highlight at #CVPR2025! 🎉

We make #SegmentAnything wiser, enabling it to understand textual prompts—training only 4.9M parameters! 🧠

💻 Code, models & demo: github.com/ClaudiaCutta...

Why SAMWISE?👇

To Match or Not to Match: Revisiting Image Matching for Reliable Visual Place Recognition

Davide Sferrazza, @berton-gabri.bsky.social, @gabtriv.bsky.social, Carlo Masone

tl;dr: VPR datasets saturate; re-ranking not good; image matching -> uncertainty -> inlier counts -> confidence

arxiv.org/abs/2504.06116
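A rough sketch of the "inlier counts -> confidence" idea from the tl;dr, under stated assumptions: the function name `is_confident`, the threshold of 30 inliers, and the example counts are all hypothetical and are not taken from the paper. The idea shown is only that the number of geometrically verified matches between a query and its top retrieved image can act as a signal for whether to trust the prediction.

```python
def is_confident(inlier_count, min_inliers=30):
    """Treat a retrieval as trustworthy if it has enough inlier matches.

    `min_inliers` is a hypothetical threshold; in practice it would be
    tuned per dataset and per matching method.
    """
    return inlier_count >= min_inliers


# Made-up inlier counts between each query and its top-1 retrieved image.
top1_inliers = {"query_a": 212, "query_b": 7, "query_c": 45}

# Queries whose top-1 match has too few inliers are flagged as uncertain.
uncertain = sorted(
    name for name, count in top1_inliers.items() if not is_confident(count)
)
```

Here `uncertain` contains only `"query_b"`, the one query with fewer inliers than the assumed threshold.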

🚀 Paper Release! 🚀

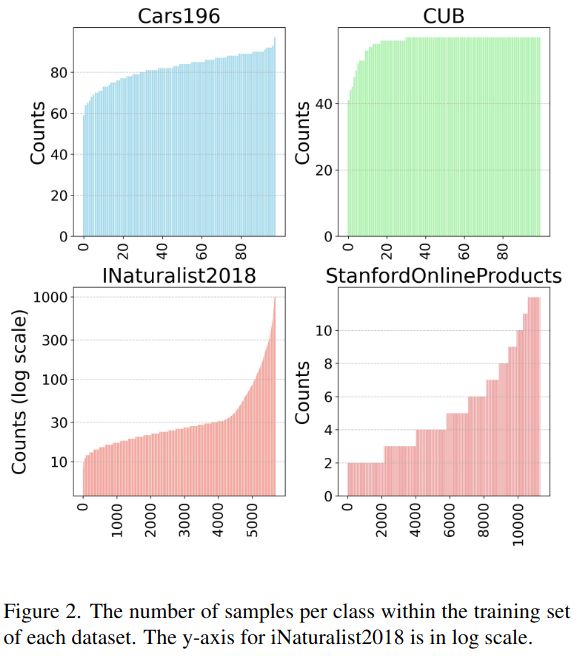

Curious about image retrieval and contrastive learning? We present:

📄 "All You Need to Know About Training Image Retrieval Models"

🔍 The most comprehensive retrieval benchmark—thousands of experiments across 4 datasets, dozens of losses, batch sizes, LRs, data labeling, and more!

Trying to convince my bluesky feed to put me in the Computer Vision community. Right now I only see posts about the orange-haired president. @berton-gabri.bsky.social @gabrigole.bsky.social how did you do it?

07.04.2025 09:43 — 👍 0 🔁 0 💬 1 📌 0

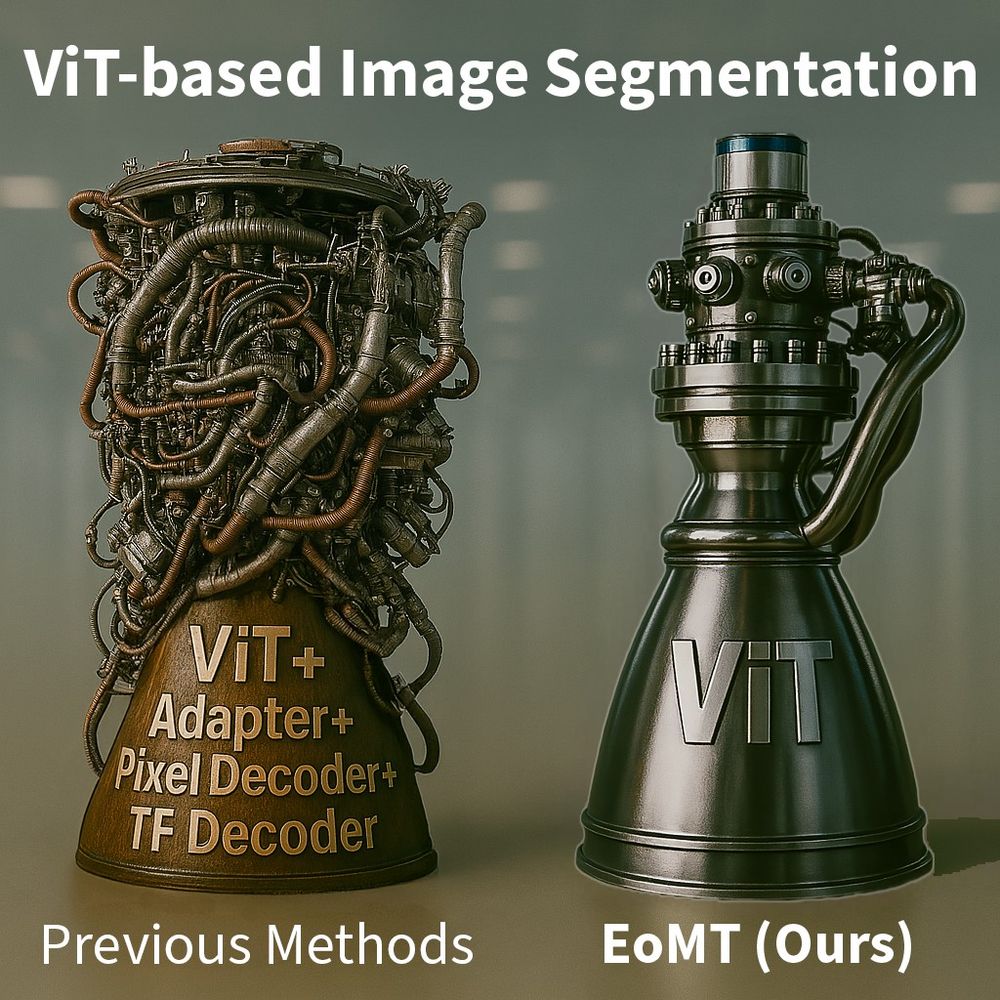

Image segmentation doesn’t have to be rocket science. 🚀

Why build a rocket engine full of bolted-on subsystems when one elegant unit does the job? 💡

That’s what we did for segmentation.

✅ Meet the Encoder-only Mask Transformer (EoMT): tue-mps.github.io/eomt (CVPR 2025)

(1/6)

Went outside today and thought this would be perfect for my first #bluesky post

05.04.2025 08:39 — 👍 1 🔁 0 💬 0 📌 0