Now that ICML papers are submitted and we are in the midst of discussions on whether scaling is enough or new architectural/algorithmic ideas are needed, what better time could there be to submit your best work to our workshop on New Frontiers in Associative Memory @iclr-conf.bsky.social?

31.01.2025 14:40 — 👍 14 🔁 2 💬 1 📌 1

I'm at #NeurIPS2024! Come chat with us about random features and DenseAMs, East Hall #3507, today (Fri Dec 13), 11a-2p!

13.12.2024 17:13 — 👍 2 🔁 0 💬 0 📌 0


If you’re headed to NeurIPS 2024 and want to learn about IBM Research Human-Centered Trustworthy AI, there are many opportunities to do so.

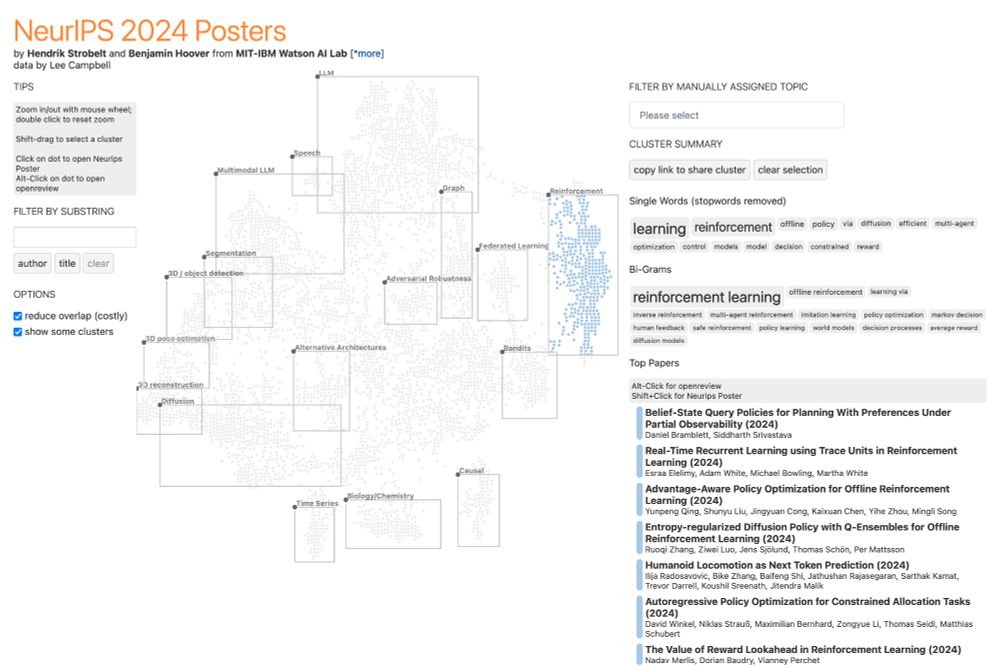

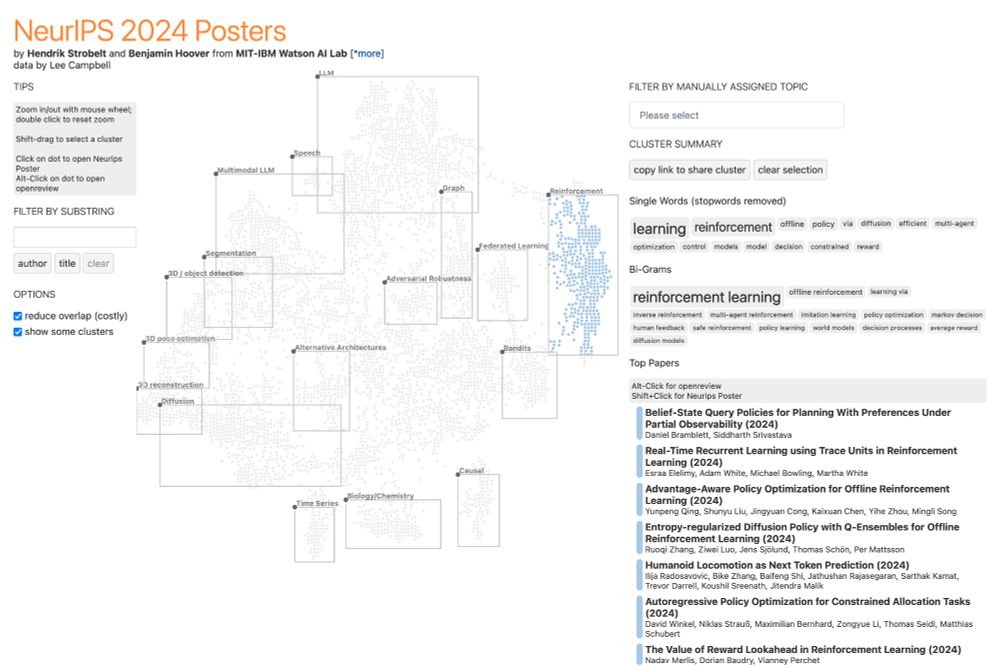

1. Start with the official NeurIPS explorer by @henstr.bsky.social and @benhoover.bsky.social. It is infoviz par excellence. neurips2024.vizhub.ai

07.12.2024 02:50 — 👍 9 🔁 3 💬 1 📌 0

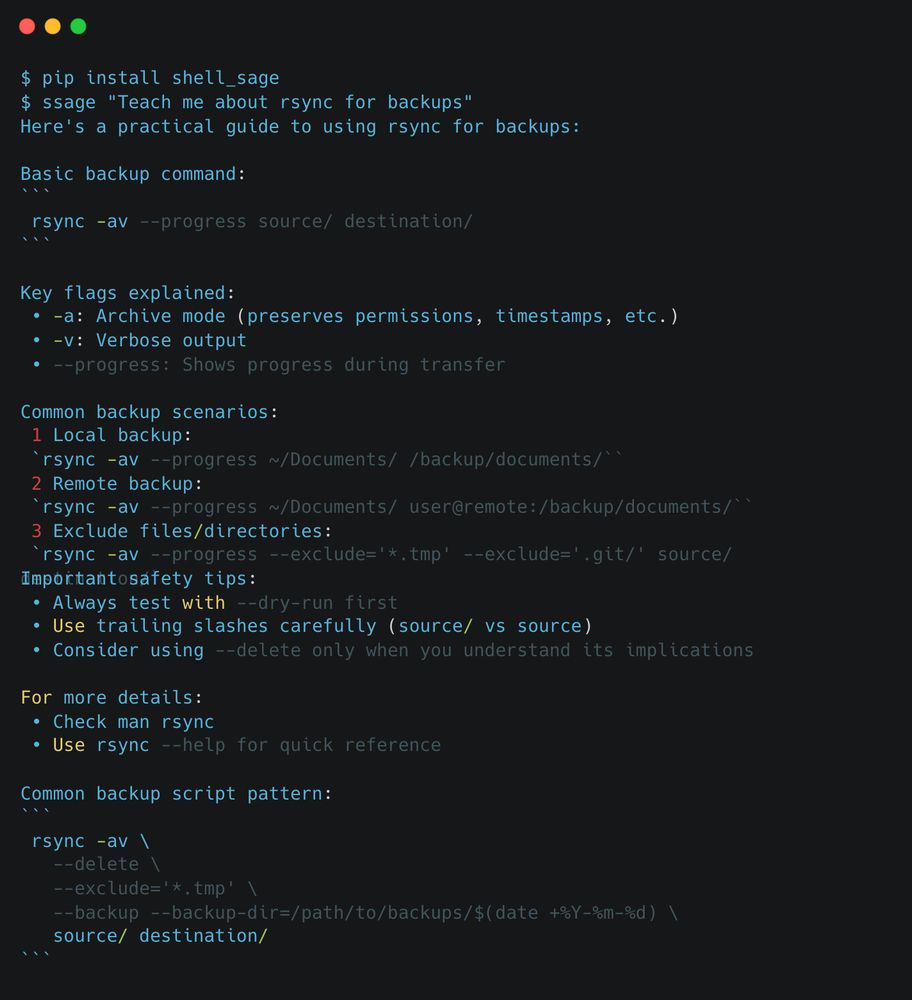

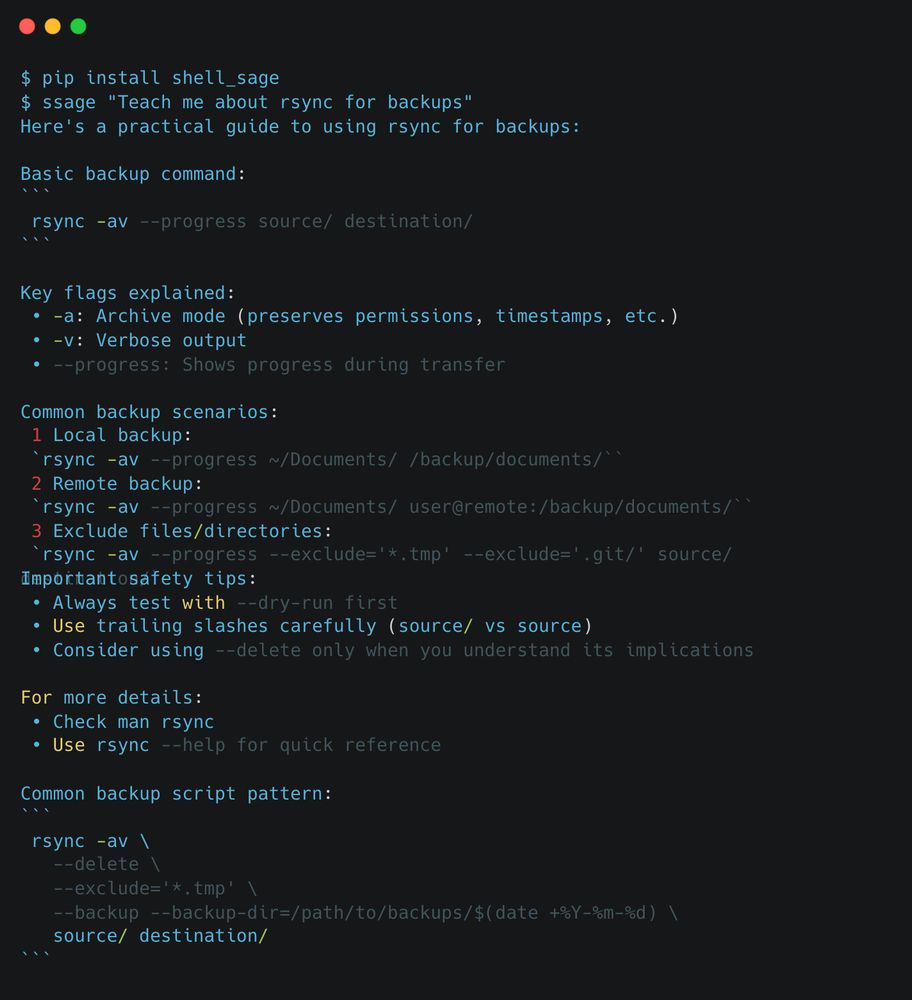

As R&D staff @ answer.ai, I work a lot on boosting productivity with AI. A common theme that always comes up is the combination of human+AI. This combination proved to be powerful in our new project ShellSage, which is an AI terminal buddy that learns and teaches with you. A 🧵

05.12.2024 20:27 — 👍 72 🔁 16 💬 7 📌 7

When u say AM decision boundaries, do you mean the "ridge" that separates basins of attraction? Not sure I understand the pseudomath

04.12.2024 20:50 — 👍 0 🔁 0 💬 0 📌 0

Interesting -- when you say inversion, you mean taking the strict inverse of the random projection? Our work is not just a random projection for the purpose of dim reduction, but instead a random mapping to a feature space to approximate the original AM's energy.

04.12.2024 20:47 — 👍 0 🔁 0 💬 0 📌 0

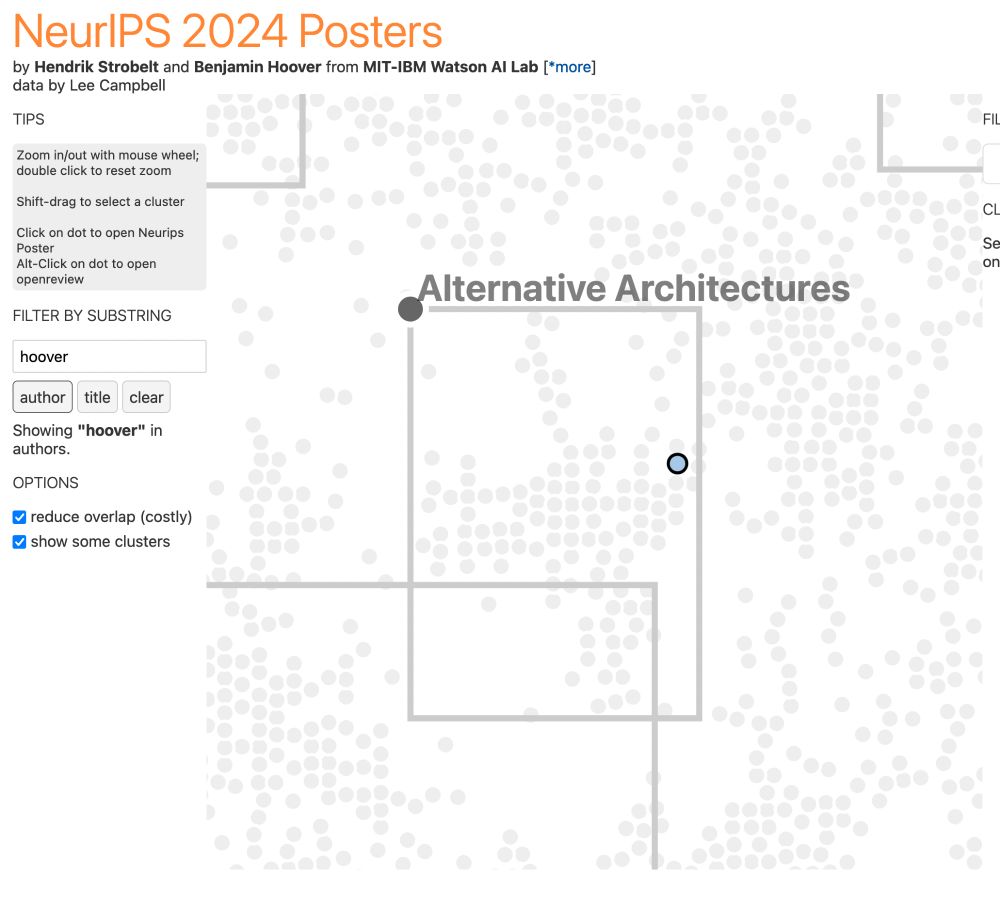

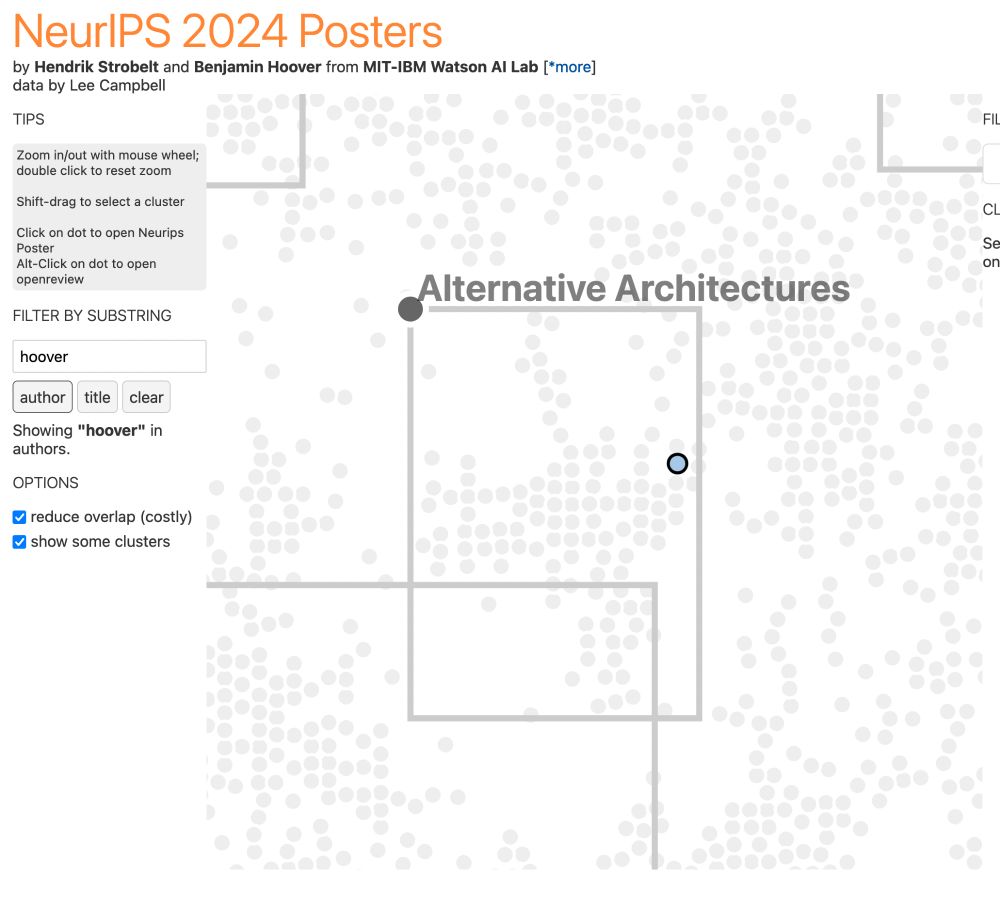

Overview of paper browser. A cluster for reinforcement learning is selected.

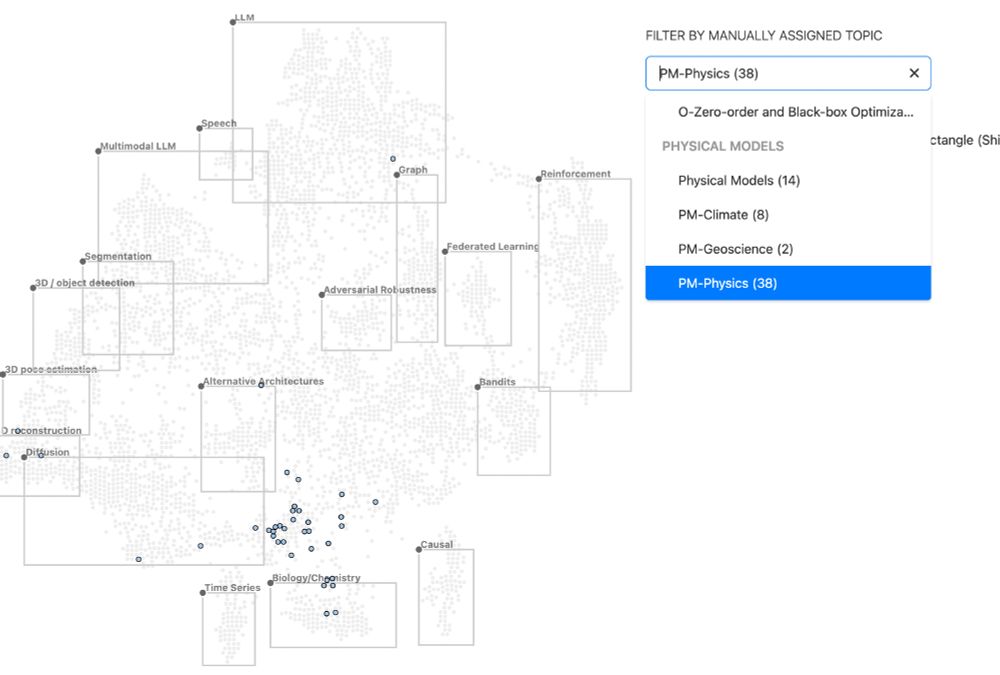

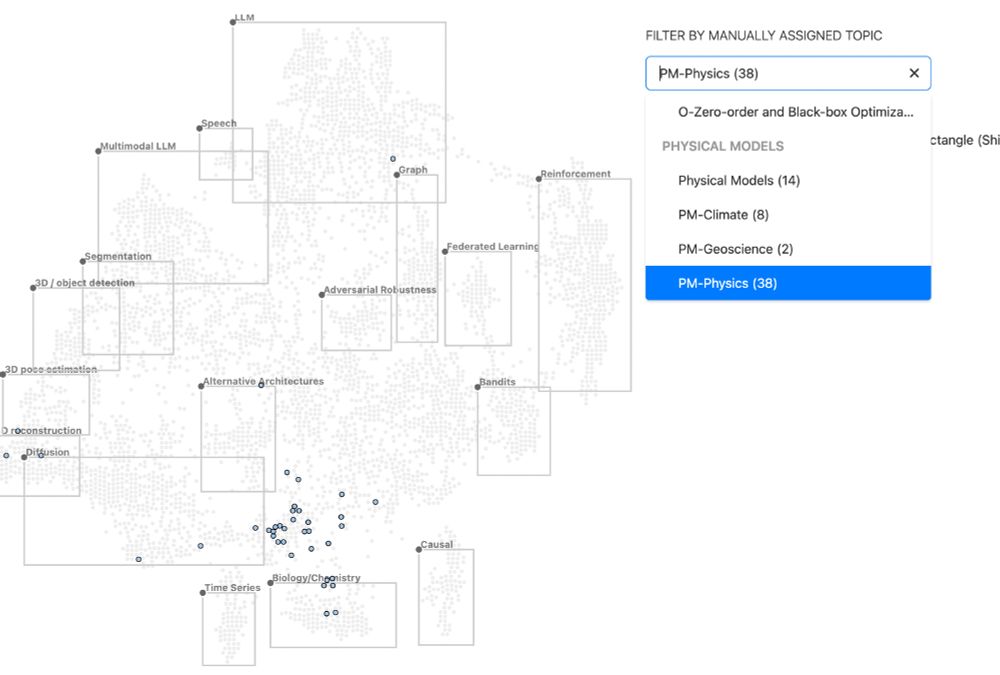

Paper Browser: only papers assigned to "physical models - physics" are shown.

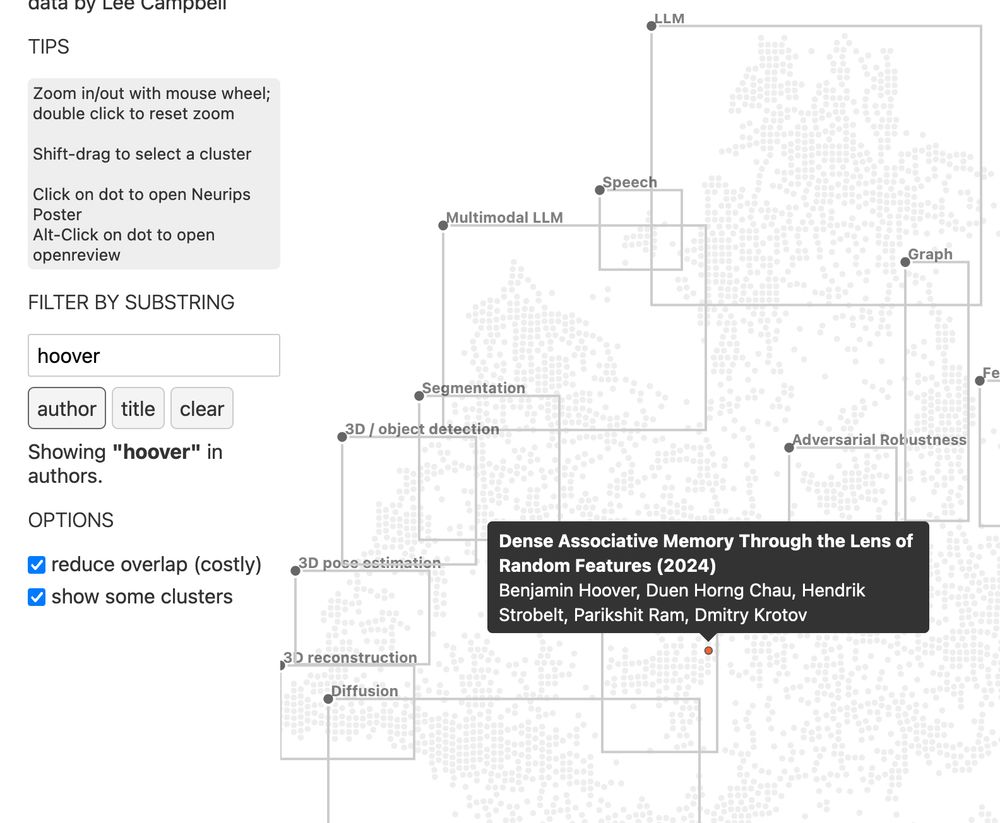

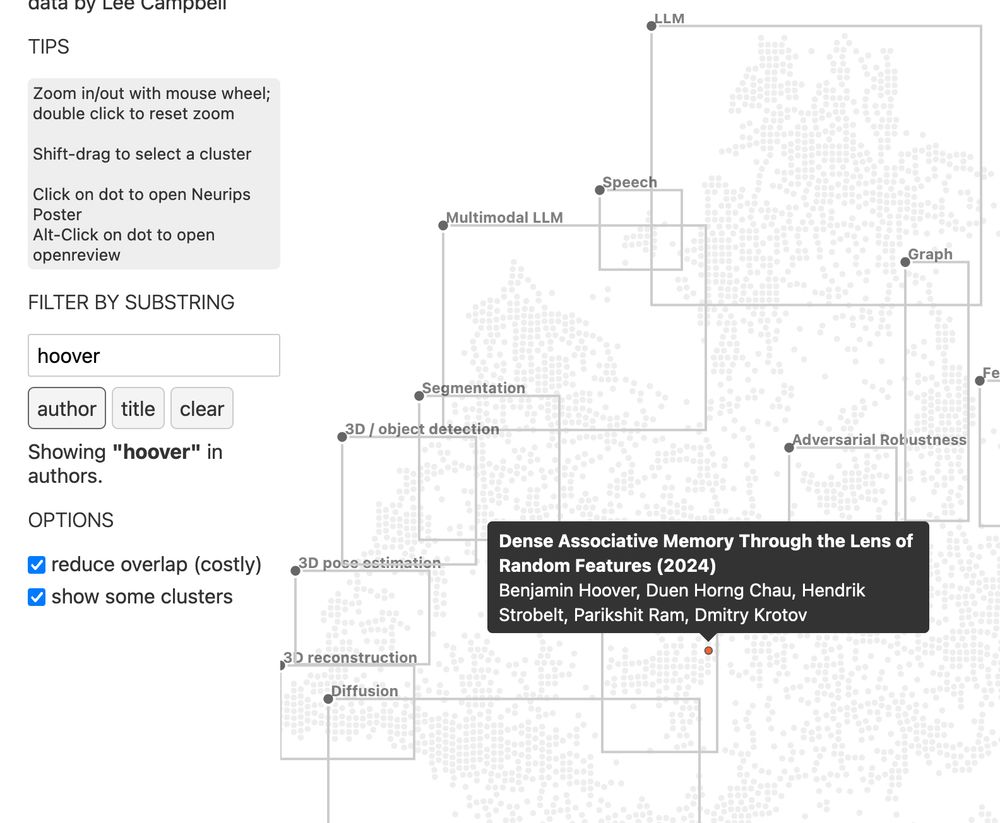

Paper Browser: filtered by author "Hoover", with paper details shown.

Paper Browser: ZOOOOM in

🎺 Here comes the official 2024 NeurIPS paper browser:

- browse all NeurIPS papers in a visual way

- select clusters of interest and get cluster summary

- ZOOOOM in

- filter by human assigned keywords

- filter by substring (authors, titles)

neurips2024.vizhub.ai

#neurips by IBM Research Cambridge

03.12.2024 17:01 — 👍 60 🔁 22 💬 5 📌 4

Dense Associative Memory Through the Lens of Random Features

Dense Associative Memories are high storage capacity variants of the Hopfield networks that are capable of storing a large number of memory patterns in the weights of the network of a given size. Thei...

🎉Work done with @polochau.bsky.social @henstr.bsky.social @p-ram-p.bsky.social @krotov.bsky.social

Want to learn more?

📜Paper arxiv.org/abs/2410.24153

💾Code github.com/bhoov/distri...

👨🏫NeurIPS Page: neurips.cc/virtual/2024...

🎥SlidesLive (use Chrome) recorder-v3.slideslive.com#/share?share...

03.12.2024 16:33 — 👍 3 🔁 1 💬 0 📌 0

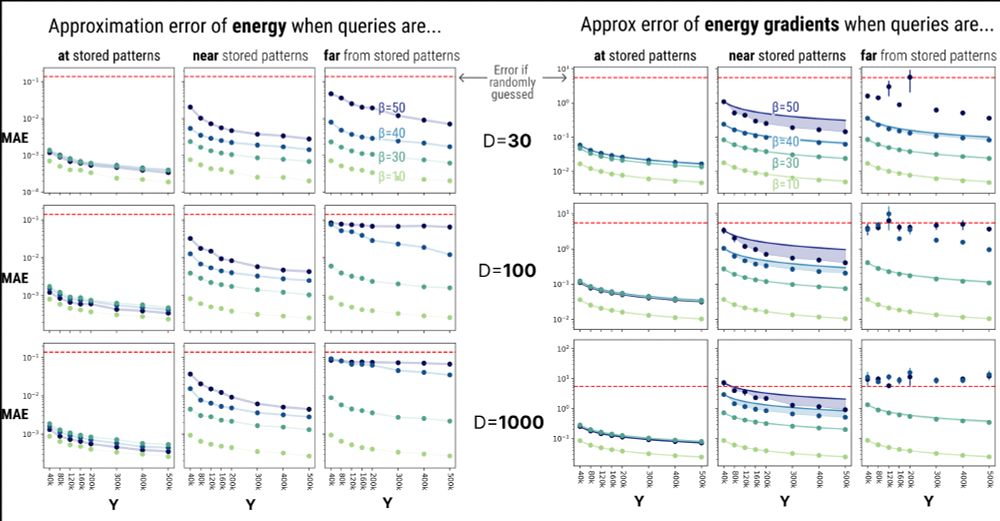

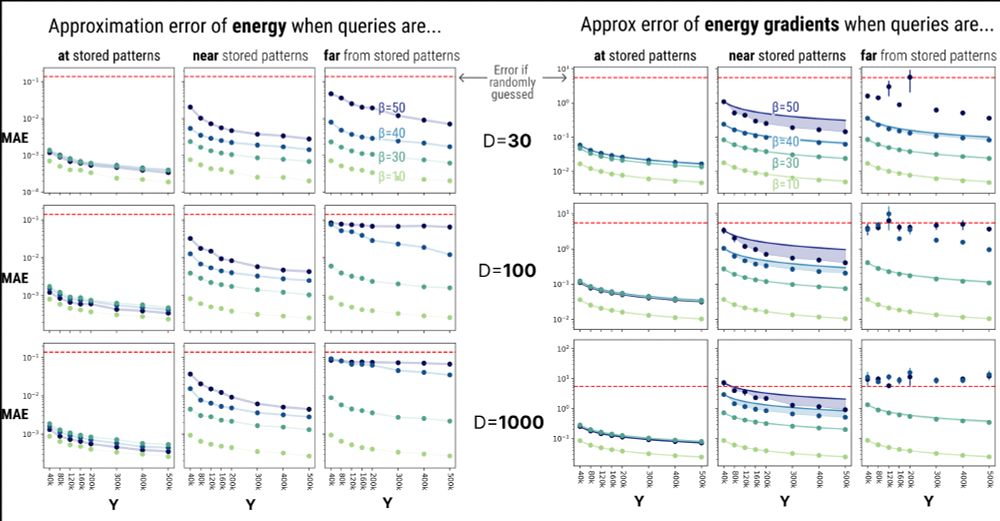

There is, of course, a trade-off. DrDAM poorly approximates energy landscapes that are:

1️⃣Far from memories

2️⃣“Spiky” (i.e., low temperature/high beta)

We need more random features Y to reconstruct highly occluded/correlated data!

03.12.2024 16:33 — 👍 3 🔁 1 💬 1 📌 0
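One way to see why both failure cases in the post above arise: with random features, the absolute error of a kernel estimate is roughly constant (on the order of 1/sqrt(Y)), so when the true kernel value is tiny (far from a memory, or at high beta) the error swamps the signal. The sketch below is illustrative only. It assumes an RBF kernel exp(-beta/2 * ||x - y||^2) and standard cosine random Fourier features; the helper `kernel_pair` and the values of `D`, `Y`, distance, and beta are hypothetical and not taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
D, Y = 8, 20000  # input dimension and number of random features (assumed values)


def kernel_pair(dist, beta):
    """Return (exact, approx) RBF kernel values for two points `dist` apart."""
    # Frequencies drawn from N(0, beta * I) match exp(-beta/2 * ||x - y||^2).
    W = rng.normal(scale=np.sqrt(beta), size=(Y, D))
    b = rng.uniform(0.0, 2.0 * np.pi, size=Y)

    def phi(v):
        return np.sqrt(2.0 / Y) * np.cos(W @ v + b)

    x = np.zeros(D)
    y = np.zeros(D)
    y[0] = dist
    exact = np.exp(-0.5 * beta * dist**2)
    approx = float(phi(x) @ phi(y))
    return exact, approx


results = {}
for label, dist, beta in [
    ("near, low beta", 0.5, 0.5),
    ("far, low beta", 6.0, 0.5),
    ("near, high beta", 0.5, 50.0),
]:
    exact, approx = kernel_pair(dist, beta)
    results[label] = (exact, approx)
    print(f"{label:>16}: exact={exact:.2e}  approx={approx:+.2e}  "
          f"abs_err={abs(exact - approx):.2e}")
```

In the "far" and "high beta" rows the exact kernel value is much smaller than the typical absolute error, so the estimate can even come out negative. Increasing `Y` shrinks the absolute error, which is consistent with the post's point that harder cases need more random features.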

DrDAM can meaningfully approximate the memory retrievals of MrDAM! Shown are reconstructions of occluded imgs from TinyImagenet, retrieved by strictly minimizing the energies of both DrDAM and MrDAM.

03.12.2024 16:33 — 👍 3 🔁 1 💬 1 📌 0

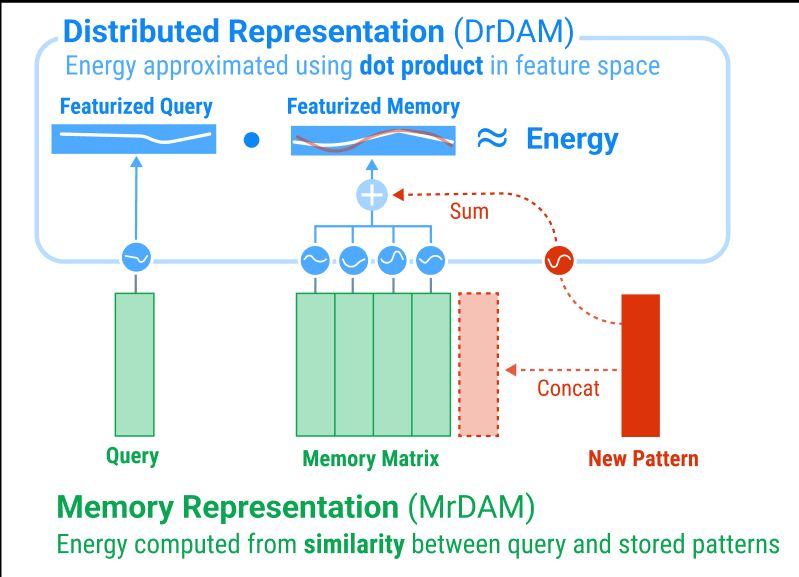

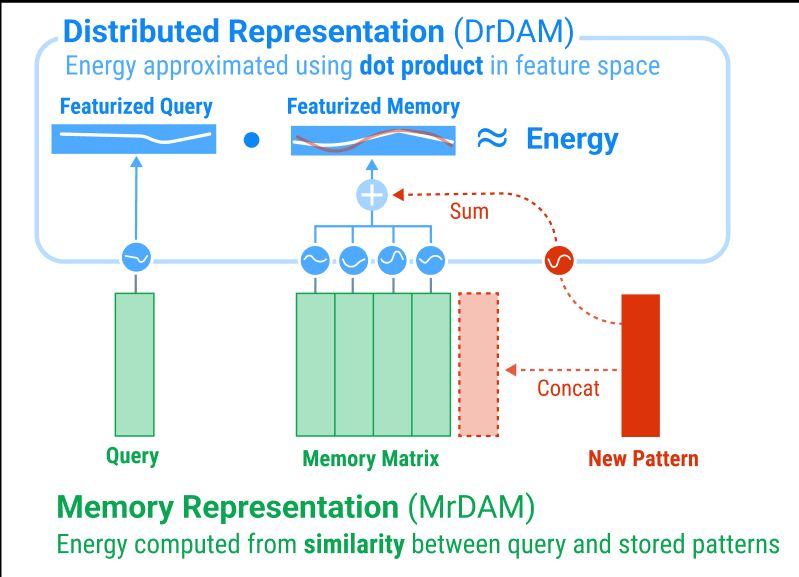

MrDAM energies can be decomposed into:

1️⃣A similarity func between stored patterns & noisy input

2️⃣A rapidly growing separation func (e.g., exponential)

Together, they reveal kernels (e.g., RBF) that can be approximated via the kernel trick & random features (Rahimi & Recht, 2007)

03.12.2024 16:33 — 👍 2 🔁 1 💬 1 📌 0
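The random-feature idea mentioned in the post above can be sketched in a few lines. This is a minimal illustration of Rahimi & Recht style random Fourier features for an RBF kernel, not the paper's implementation: the function name `random_fourier_features`, the kernel parameterization exp(-beta/2 * ||x - y||^2), and the values of `D`, `Y`, and `beta` are all assumptions made for the example.

```python
import numpy as np


def random_fourier_features(X, W, b):
    """Map each row of X to features whose dot products approximate an RBF kernel."""
    num_features = W.shape[0]
    return np.sqrt(2.0 / num_features) * np.cos(X @ W.T + b)


rng = np.random.default_rng(0)
D, Y, beta = 8, 20000, 0.5  # assumed: input dim, number of features, inverse temperature

# For k(x, y) = exp(-beta/2 * ||x - y||^2), frequencies are drawn from N(0, beta * I)
# and phases uniformly from [0, 2*pi).
W = rng.normal(scale=np.sqrt(beta), size=(Y, D))
b = rng.uniform(0.0, 2.0 * np.pi, size=Y)

x = rng.normal(size=D)              # a stored pattern
y = x + 0.3 * rng.normal(size=D)    # a noisy version of it

exact = np.exp(-0.5 * beta * np.sum((x - y) ** 2))
features = random_fourier_features(np.stack([x, y]), W, b)
approx = float(features[0] @ features[1])

print(f"exact kernel:  {exact:.4f}")
print(f"random feats:  {approx:.4f}")
```

The key property is that the kernel between two points becomes a plain dot product of their feature vectors, which is what lets sums over stored patterns be collapsed into a single vector.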

Why say “Distributed”?🤔

In traditional Memory representations of DenseAMs (MrDAM), one row in the weight matrix stores one pattern. In our new Distributed representation (DrDAM), patterns are entangled via superposition, “distributed” across all dims of a featurized memory vector.

03.12.2024 16:33 — 👍 2 🔁 1 💬 1 📌 0
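The contrast between the two representations in the post above can be sketched as follows. This is a hedged illustration, not the authors' code: it assumes a log-sum-exp energy over squared Euclidean distances and cosine random Fourier features, and the names `energy_mrdam`, `energy_drdam`, `phi`, `T`, and all numeric settings are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
D, Y, K, beta = 8, 20000, 5, 0.5  # assumed: input dim, features, patterns, inverse temp

W = rng.normal(scale=np.sqrt(beta), size=(Y, D))
b = rng.uniform(0.0, 2.0 * np.pi, size=Y)


def phi(x):
    """Random Fourier features with phi(x) @ phi(y) ~= exp(-beta/2 * ||x - y||^2)."""
    return np.sqrt(2.0 / Y) * np.cos(W @ x + b)


patterns = rng.normal(size=(K, D))


def energy_mrdam(x, memories):
    """Memory representation: every stored pattern is kept as its own row."""
    sq_dists = np.sum((memories - x) ** 2, axis=1)
    return -np.log(np.sum(np.exp(-0.5 * beta * sq_dists))) / beta


# Distributed representation: all patterns are summed into one vector of size Y.
T = np.zeros(Y)
for xi in patterns:
    T += phi(xi)


def energy_drdam(x, memory_vector, eps=1e-6):
    """Approximate energy from the single featurized memory vector."""
    return -np.log(max(float(phi(x) @ memory_vector), eps)) / beta


query = patterns[0] + 0.1 * rng.normal(size=D)
e_exact = energy_mrdam(query, patterns)
e_approx = energy_drdam(query, T)
print(f"MrDAM energy: {e_exact:.3f}   DrDAM energy: {e_approx:.3f}")

# Storing one more pattern adds a row to `patterns` but leaves T the same size.
new_pattern = rng.normal(size=D)
T_grown = T + phi(new_pattern)
print(T.shape, T_grown.shape)
```

The design point is that `T` has a fixed size `Y` no matter how many patterns are added, whereas the row-per-pattern matrix grows with each new memory. The `eps` clamp is an assumption added here to keep the logarithm defined when the random-feature estimate is noisy.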

Excited to share "Dense Associative Memory through the Lens of Random Features" accepted to #neurips2024🎉

DenseAMs need new weights for each stored pattern–hurting scalability. Kernel methods let us add memories without adding weights!

Distributed memory for DenseAMs, unlocked🔓

03.12.2024 16:33 — 👍 20 🔁 6 💬 1 📌 2

Manager and Scientist at IBM Research

Doing cybernetics (without being allowed to call it that). Assistant Professor @ Brown. Previously: IBM Research, MIT, Rutgers. https://kozleo.github.io/

Research Scientist @ IBM Research, Cambridge (MA), US

PhD Student at RPI. Interested in Hopfield or Associative Memory models and Energy-based models.

CS Ph.D. Student @ Georgia Tech | AR/VR & Data Vis Research | Ex-@Goldman Sachs

alexanderyang.me

NLP Research Scientist at IBM Research

Principal Research Scientist at IBM Research AI in New York. Speech, Formal/Natural Language Processing. Currently LLM post-training, structured SDG and RL. Opinions my own and non stationary.

ramon.astudillo.com

Post-training Alignment at IBM Research AI | Prev: Penn CS + Wharton

Researcher at @IBMResearch #NLProc #ConvAI #Agents #SWE-Agent || RTs ≠ endorsements. Views personal, not of employers/institutions.

jatinganhotra.dev swebencharena.com

🥇 LLMs together (co-created model merging, BabyLM, textArena.ai)

🥈 Spreading science over hype in #ML & #NLP

Proud shareLM💬 Donor

@IBMResearch & @MIT_CSAIL

Father, Husband, and a Senior Researcher and Manager of the AI Multimodal group at IBM Research.

Senior Research Scientist @ IBM

Knowledge Graphs, Semantic Web, LLM, Data Integration, Wikidata, NLP, Text 2 KG