MLCommons - Better AI for Everyone

MLCommons aims to accelerate AI innovation to benefit everyone. Its philosophy of open collaboration and collaborative engineering seeks to improve AI systems by continually measuring and improving t...

5/ Congratulations and thanks to all submitters!

Alluxio, Argonne National Lab, DDN, ExponTech, FarmGPU, H3C, Hammerspace, HPE, JNIST/Huawei, Juicedata, Kingston, KIOXIA, Lightbits Labs, MangoBoost, Micron, Nutanix, Oracle, Quanta Cloud Technology, Samsung, Sandisk,

04.08.2025 17:36

4/ The v2.0 submissions showcase a wide range of technical solutions—local & object storage, in-storage accelerators, software-defined storage, block systems, and more. This diversity highlights the community’s commitment to advancing AI infrastructure.

04.08.2025 17:36

3/ New in this round: checkpoint benchmarks, designed to reflect real-world practices in large-scale AI training systems. These benchmarks provide key data to help stakeholders optimize system reliability and efficiency at scale.

04.08.2025 17:36

v2.0 highlights:

- 200+ results

- 26 organizations

- 7 countries represented

- Benchmarked systems now support about twice as many accelerators as in v1.0

04.08.2025 17:36

New MLPerf Storage v2.0 Benchmark Results Demonstrate the Critical Role of Storage Performance in AI Training Systems - MLCommons

New checkpoint benchmarks provide “must-have” information for optimizing AI training

1/ MLCommons just released results for the MLPerf Storage v2.0 benchmark—an industry-standard suite for measuring storage system performance in #ML workloads. This benchmark remains architecture-neutral, representative, and reproducible.

mlcommons.org/2025/08/mlpe...

04.08.2025 17:36

MLCommons Releases MLPerf Client v1.0: A New Standard for AI PC and Client LLM Benchmarking - MLCommons

MLCommons Releases MLPerf Client v1.0 with Expanded Models, Prompts, and Hardware Support, Standardizing AI PC Performance.

MLPerf Client v1.0 is out! 🎉

The new benchmark for LLMs on PCs and client systems is now available—featuring expanded model support, new workload scenarios, and broad hardware integration.

Thank you to all submitters! #AMD, #Intel, @microsoft.com, #NVIDIA, #Qualcomm

mlcommons.org/2025/07/mlpe...

30.07.2025 15:12

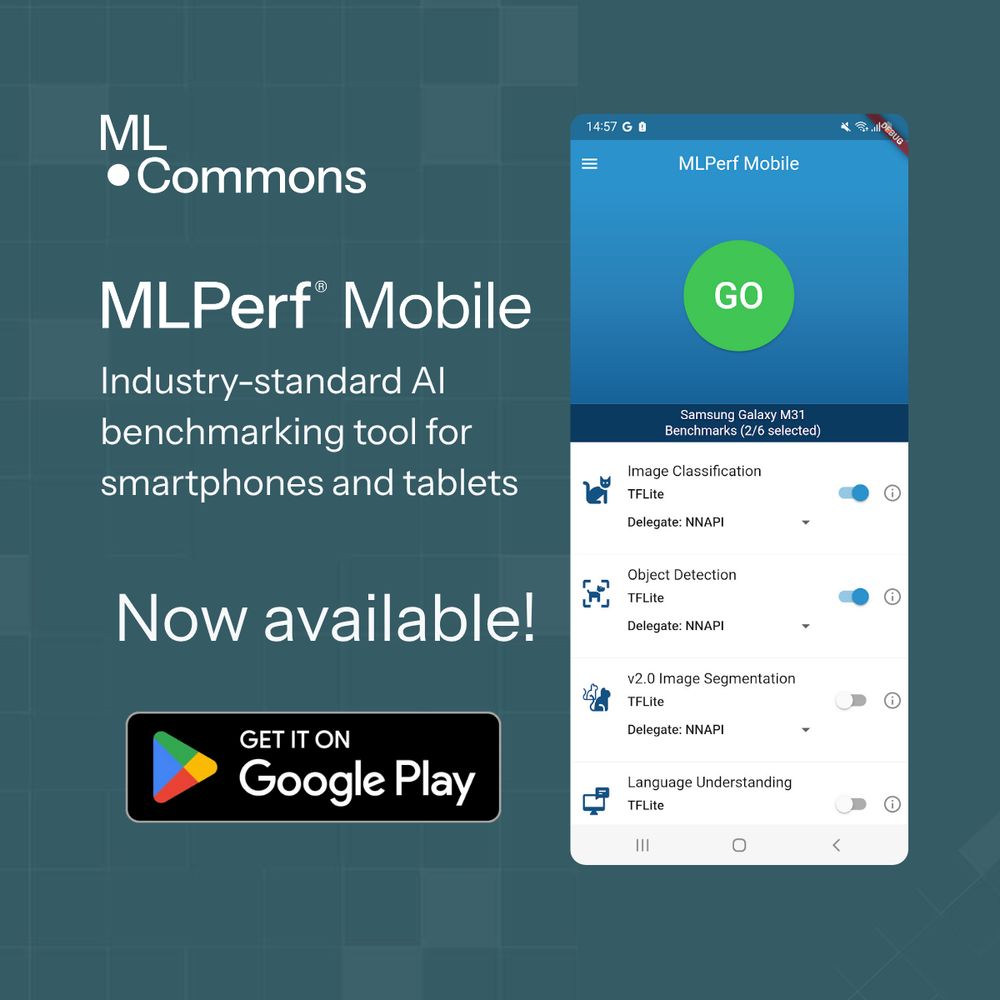

MLCommons just launched MLPerf Mobile on the Google Play Store! 📱

Benchmark your Android device’s AI performance on real-world ML tasks with this free, open-source app.

Try it now: play.google.com/store/apps/d...

10.07.2025 19:01

3/3

27.06.2025 19:07

MLCommons Builds New Agentic Reliability Evaluation Standard in Collaboration with Industry Leaders - MLCommons

MLCommons and partners unite to create actionable reliability standards for next-generation AI agents.

3/3 @cam.ac.uk, @ox.ac.uk, University of Illinois Urbana-Champaign, and @ucsb.bsky.social.

Read more about the collaborative development of the Agentic Reliability Evaluation Standard and opportunities to participate: mlcommons.org/2025/06/ares...

27.06.2025 19:07

2/3 Contributions from: Advai, AI Verify Foundation, @anthropic.com, @arize.bsky.social, @cohere.com, Google, Intel, LNE, Meta, @microsoft.com, NASSCOM, OpenAI, Patronus AI, @polymtl.bsky.social, Qualcomm, QuantumBlack - AI by McKinsey, Salesforce, Schmidt Sciences, @servicenow.bsky.social,

27.06.2025 19:07

Today, MLCommons is announcing a new collaboration with contributors from across academia, civil society, and industry to co-develop an open agent reliability evaluation standard to operationalize trust in agentic deployments.

🔗https://mlcommons.org/2025/06/ares-announce/

1/3

27.06.2025 19:07

We're all about acceleration! 😉

Watch @priya-kasimbeg.bsky.social & @fsschneider.bsky.social speedrun an explanation of the AlgoPerf benchmark, its rules, and its results, all within a tight five minutes, in the video for our #ICLR2025 paper "Accelerating Neural Network Training". See you in Singapore!

03.04.2025 11:15

AI is posing immediate threats to your business. Here’s how to protect yourself

The AI threats your business is facing right now, and how to prevent them

Companies are deploying AI tools that haven't been pressure-tested, and it's already backfiring.

In her new op-ed, our President, Rebecca Weiss, breaks down how industry-led AI reliability standards can help executives avoid costly, high-profile failures.

📖 More: bit.ly/3FP0kjg

@fastcompany.com

20.06.2025 16:03

Call for Submissions!

#MLCommons & @AVCConsortium are accepting submissions for the #MLPerf Automotive Benchmark Suite! Help drive fair comparisons & optimize AI systems in vehicles. The focus is on camera-based perception.

📅 Submissions close June 13th, 2025

Join: mlcommons.org/community/su...

05.06.2025 18:12

4/ Read more and check out the full results here:

🔗https://mlcommons.org/2025/06/mlperf-training-v5-0-results/

#MLPerf #MLCommons #AI #MachineLearning #Benchmarking

04.06.2025 15:35

3/ MLPerf Training v5.0 introduces the Llama 3.1 405B benchmark, our largest language model benchmark yet. We also saw big performance gains for Stable Diffusion and Llama 2 70B LoRA. AI training is getting faster and smarter.

04.06.2025 15:35

2/ Thank you to all 20 submitters for driving progress in AI benchmarking:

AMD, ASUSTeK, Cisco, CoreWeave, Dell, GigaComputing, Google Cloud, HPE, IBM, Krai, Lambda, Lenovo, MangoBoost, Nebius, NVIDIA, Oracle, QCT, SCITIX, Supermicro, TinyCorp.

04.06.2025 15:35

1/ The MLPerf Training v5.0 results are here. Let’s take a fresh look at the state of large-scale AI training! This round set a new record: 201 performance results from across the industry.

🔗https://mlcommons.org/2025/06/mlperf-training-v5-0-results/

04.06.2025 15:35

1st Workshop on Multilingual Data Quality Signals

Call for papers!

We are organising the 1st Workshop on Multilingual Data Quality Signals with @mlcommons.org and @eleutherai.bsky.social, held in tandem with @colmweb.org. Submit your research on multilingual data quality!

The submission deadline is 23 June. More info: wmdqs.org

29.05.2025 17:18

MLCommons is partnering with Nasscom to develop globally recognized AI reliability benchmarks, including India-specific, Hindi-language evaluations. Together, we are advancing trustworthy AI.

🔗 mlcommons.org/2025/05/nass...

#AIForAll #IndiaAI #ResponsibleAI #Nasscom #MLCommons

29.05.2025 15:07

MLCommons MLPerf Training Expands with Llama 3.1 405B - MLCommons

MLCommons MLPerf Training Expands with Llama 3.1 405B

MLCommons' MLPerf Training suite has a new #pretraining #benchmark based on #Meta’s Llama 3.1 405B model. We use the same dataset with a bigger model and longer context, offering a more relevant and challenging measure for today’s #AI systems. mlcommons.org/2025/05/trai...

05.05.2025 16:22

David Kanter MLPerf Measures AI Data Storage Performance

In this Tech Barometer podcast, MLCommons Co-founder David Kanter talks about creating the MLPerf benchmark to help enterprises understand AI workload performance of various data storage technologies.

As AI models grow, storage is key to #ML performance. MLCommons' @dkanter.bsky.social joins #Nutanix’s Tech Barometer podcast to explain why and how the #MLPerf #Storage #benchmark guides smarter #data #infrastructure for #AI.

Listen: www.nutanix.com/theforecastb...

#DataStorage #EnterpriseIT

30.04.2025 16:20

3/ We want to thank all the participants: #Intel, #Microsoft, #NVIDIA, #Qualcomm Technologies.

#MLPerf #MLCommons #Client

28.04.2025 15:12

2/ This update broadens hardware compatibility and introduces improved device selection and updated software components, providing a transparent and standardized approach to measuring AI performance across next-generation platforms.

28.04.2025 15:12

1/ MLCommons announces the release of MLPerf Client v0.6, the first open benchmark to support NPU and GPU acceleration on consumer AI PCs.

Read more: mlcommons.org/2025/04/mlpe...

28.04.2025 15:12

MLCommons Releases French AILuminate Benchmark Demo Prompt Dataset to GitHub - MLCommons

MLCommons announces the release of two French-language datasets for the AILuminate benchmark: a 1,200-prompt, Creative Commons-licensed demo set and a set of 12,000 Practice Test prompts.

#MLCommons just released two new French prompt #datasets for #AILuminate:

🔹Demo set: 1,200+ prompts, free for AI safety testing

🔹Practice set: 12,000 prompts for deeper evaluation (on request)

Both sets were created by native speakers and are ready for use with #ModelBench. Details: mlcommons.org/2025/04/ailu...

#AI #AIRR

17.04.2025 15:44

What is MLPerf? Understanding AI’s Top Benchmark

A constantly evolving set of real-world AI tests pushes Intel experts to boost performance, level the playing field and make AI more accessible to all.

"What’s admirable about MLPerf is that everything is shared and benchmarks are open sourced. Results need to be reproducible — no mystery can remain. This openness allows for more dynamic comparisons beyond raw side-by-side speed, like performance..."

newsroom.intel.com/artificial-i...

15.04.2025 15:40

We also want to thank the additional technical contributors: Pablo Gonzalez, MLCommons; Anandhu Sooraj, MLCommons; Arjun Suresh, AMD (formerly at MLCommons)

07.04.2025 21:32
