omg, never could imagine that I was ever scared about something like "auto compaction". It always feels like speed dating (never did it but that how I imagine it :)

01.12.2025 18:28 — 👍 0 🔁 0 💬 0 📌 0

I've felt in love with Claude Code's CLI UI. Super well done, and worth a try, even when you're not in the vibe-coding business.

27.06.2025 09:26 — 👍 0 🔁 0 💬 0 📌 0

I totally agree that this kind of 'reframing' of given concepts is dangerous, and I apologize for that 'no one writes em-dashes manually' 🙇

— is the correct form, but harder to type. Maybe that kind of semi-correct sloppiness (to use - instead) makes us human?

13.03.2025 15:24 — 👍 0 🔁 0 💬 1 📌 0

Of course, I already have you on my exception list :-)

Agreed, not every em dash is a decisive flag, but in combination with other indicators, it's an interesting data point that is often overlooked.

13.03.2025 11:43 — 👍 1 🔁 0 💬 1 📌 0

The easiest way to detect LLM-generated output: The usage of "—" (U+2014 : EM DASH) instead of "-" (U+002D : HYPHEN-MINUS). Nobody types an em dash manually, but ChatGPT at least LOVES it.

Corollary: Always check your ChatGPT output for hyphens 😜

13.03.2025 09:52 — 👍 0 🔁 0 💬 2 📌 0

Chili Pepper Seeds soaked 24h in chamilea tea for improved germination rates

Putting 190 seeds into mini greenhouses

Three mini greenhouses for growing chilli pepper

After one year break, we're back for the chilli pepper season 2025. Let's g(r)o(w) ! #chili

05.03.2025 11:27 — 👍 3 🔁 0 💬 0 📌 0

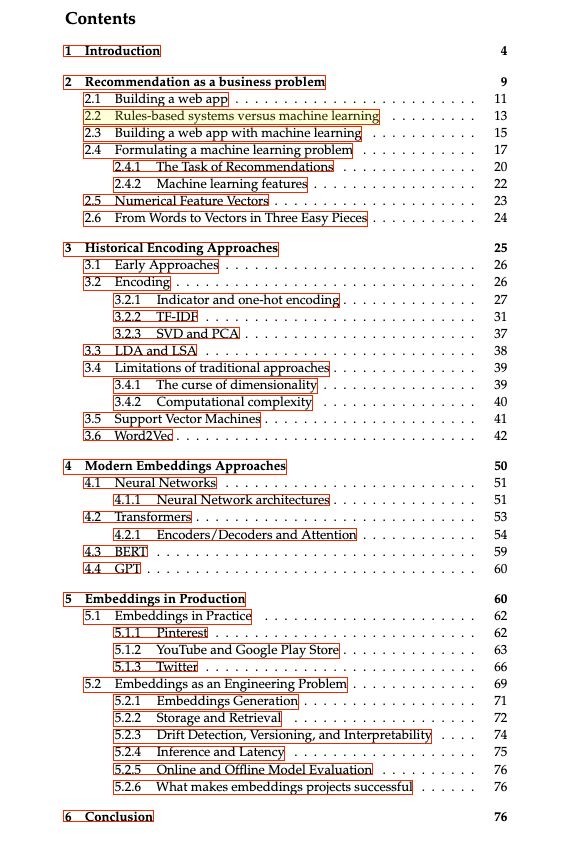

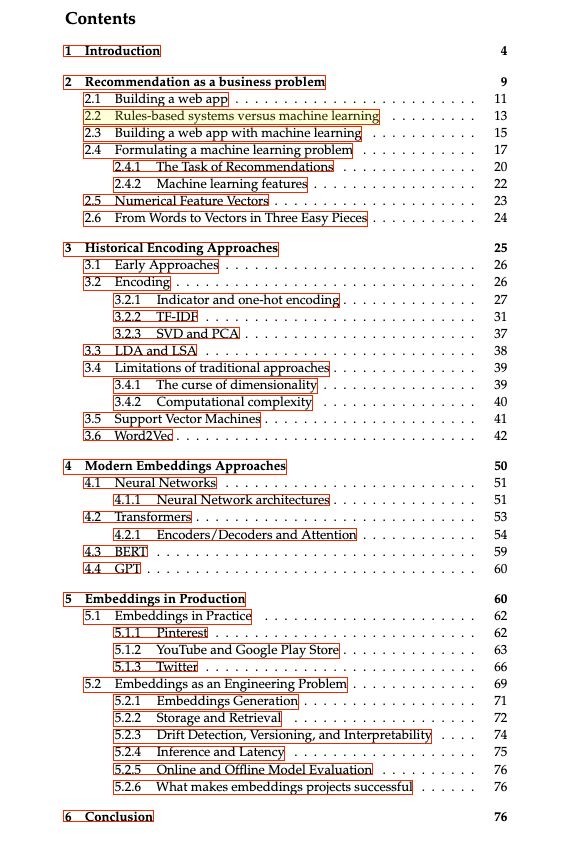

Book outline

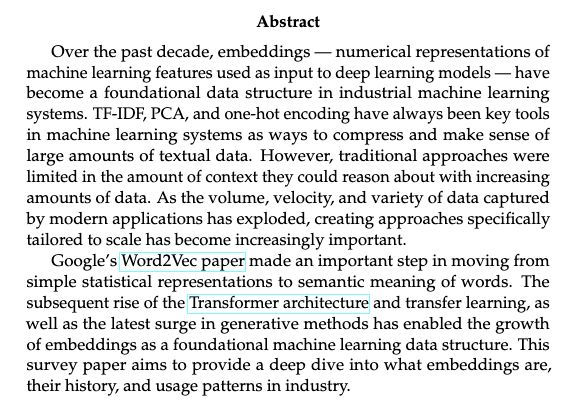

Over the past decade, embeddings — numerical representations of

machine learning features used as input to deep learning models — have

become a foundational data structure in industrial machine learning

systems. TF-IDF, PCA, and one-hot encoding have always been key tools

in machine learning systems as ways to compress and make sense of

large amounts of textual data. However, traditional approaches were

limited in the amount of context they could reason about with increasing

amounts of data. As the volume, velocity, and variety of data captured

by modern applications has exploded, creating approaches specifically

tailored to scale has become increasingly important.

Google’s Word2Vec paper made an important step in moving from

simple statistical representations to semantic meaning of words. The

subsequent rise of the Transformer architecture and transfer learning, as

well as the latest surge in generative methods has enabled the growth

of embeddings as a foundational machine learning data structure. This

survey paper aims to provide a deep dive into what embeddings are,

their history, and usage patterns in industry.

Cover image

Just realized BlueSky allows sharing valuable stuff cause it doesn't punish links. 🤩

Let's start with "What are embeddings" by @vickiboykis.com

The book is a great summary of embeddings, from history to modern approaches.

The best part: it's free.

Link: vickiboykis.com/what_are_emb...

22.11.2024 11:13 — 👍 653 🔁 101 💬 22 📌 6

RedHat on Bluesky go.bsky.app/Du6L1Ec

22.11.2024 06:49 — 👍 1 🔁 3 💬 0 📌 0

Software Architect, Passionate Developer, Java/Web Expert, Gamer, VR Enthusiast, Sci-Fi/Fantasy Fan, Photography Lover, Smooth Jazz Piano Player, Husband & Dad

My job is to make developers happy.

Developer Relations Engineer at https://bsky.app/profile/port-io.bsky.social

Java champion, OSS, husband and father.

5 🐱

AI and cognitive science, Founder and CEO (Geometric Intelligence, acquired by Uber). 8 books including Guitar Zero, Rebooting AI and Taming Silicon Valley.

Newsletter (50k subscribers): garymarcus.substack.com

Developer Advocate at Red Hat • Organizer KCD New York • Previously at MongoDB • containers, k8s, & everything in between

Programmer, author, speaker, founder Agile Developer, Inc., co-founder of @dev2next Conference, professor @CSatUH

Freelance Software Developer https://nilshartmann.net #Java #Spring #React #TypeScript #GraphQL

Founding list[float] engineer. Recsys. Personalization. Infra. Systems. Normcore code. Nutella. Vectors. Words. Vibes. Bad puns (soon).

https://vickiboykis.com/what_are_embeddings/

Hibernate team at IBM. Opinions and tastes are my own.

@yoannrodiere (X/Twitter)

@yrodiere@mastodon.social (Mastodon)

Engineer by training, communicator by profession, musician by passion. Open Source, open mind, open heart. Loves travel, turbulence, timpani. TW-SG-US-FI

Software Quality Engineer @ Red Hat, Mom, Wife, Brazilian, Python and Linux lover, learning to love Golang (she/her)

Tinkering with vLLM @RedHat

Distinguished Architect at Red Hat. Developer, Maintainer, Innovator. Sports and Travel Junkie

Senior Principal Software Engineer at Red Hat AI | Open Source Leader at KServe, Argo, Kubeflow, Kubernetes, CNCF | Maintainer of XGBoost, TensorFlow | Keynote Speaker | Author | Technical Advisor

More info: http://terrytangyuan.xyz

We are hiring!

Product Manager @ Red Hat for OpenShift

Product Manager @ Red Hat I OpenShift, Kubernetes, Cloud-Native, Containers, DevOps and App Delivery

Father, Husband, Engineer, Traveller. DE@RedHat.

https://www.karan.org/

Work: @RedHat

Host @Cloudcastpod.bsky.social

Alum: @WakeBaseball

Live: North Carolina

Red Hat | Kubernetes | CNCF | Open Source