This is the perfect amount of gossip. I need to know this, but I don't need to see a screenshot or want to know the specific dorky shit.

04.08.2025 04:00 — 👍 7 🔁 0 💬 1 📌 0

you can just do things (derogatory)

04.08.2025 03:21 — 👍 2 🔁 1 💬 0 📌 0

If the Hierarchical Reasoning Model holds up under scrutiny, it could become the basis of someone’s "The Sweet Lesson" blog post, which we’ll be citing every time we can’t afford the compute to implement an idea.

04.08.2025 02:42 — 👍 17 🔁 2 💬 1 📌 0

They don’t frame it as a benchmark but as projection onto the “evil vector” :)

04.08.2025 01:16 — 👍 1 🔁 0 💬 0 📌 0

Universities are not unified organizations: they're more like an armed truce between a bunch of competing departments.

In that context, changing core curricula is incredibly hard and unrewarding work.

03.08.2025 23:38 — 👍 0 🔁 0 💬 0 📌 0

When I say "machine learning," that's not limited to language models or neural networks.

The implicit premise of my recommendation is that students are going to be interacting with systems produced by machine learning for the rest of their lives, and many will need to tune or adapt those systems.

03.08.2025 22:39 — 👍 1 🔁 0 💬 0 📌 0

what "statistical learning" is, what "out-of-distribution" means, what a neural network is, how performance is measured, and reasons not to trust those measurements.

"What the models can do" is a moving target, but machine learning is a flexible mode of reasoning about problem representations.

03.08.2025 22:36 — 👍 1 🔁 0 💬 0 📌 0

My logic here is the same as for, like, biochemistry. We don't teach all students "here's how to use the Agilent 2024 Microarray Scanner"; but we do teach them what proteins are and what a gene is. That knowledge will serve them broadly.

Same with machine learning. They need bias vs variance, +

03.08.2025 22:36 — 👍 0 🔁 0 💬 1 📌 0

OpenAI is going to release an open model (its first since GPT-2) and GPT-5 within weeks of each other. The open model shows where impact is possible in the AI space, whereas GPT-5 isn't as big a step as GPT-4 was.

Open models & agents/products are big stories of 2025 so far.

03.08.2025 18:47 — 👍 21 🔁 3 💬 1 📌 1

With care, and lots of caveats about change, you can teach students about the affordances or limitations of today's models.

But for a required course, I would recommend fundamentals of ML. I think that will serve students in a wider range of ways for a longer time.

03.08.2025 22:23 — 👍 0 🔁 0 💬 1 📌 0

100% accurate as a description in 2020, but already needed a lot of serious qualification (related to post-training) in 2022. By 2024 it was really no longer right to say that these models lacked sensory grounding, and by 2025 it was misleading to say they worked by directly predicting next words.

03.08.2025 22:14 — 👍 0 🔁 0 💬 1 📌 0

I don't mean that I think these courses will be one-sided. Bergstrom is making an admirable effort to be balanced in that material.

But I do think the account of "what LLMs do" is susceptible to dating quite rapidly, even if we resist adding a phrase like "can only." E.g., "fancy autocomplete" was +

03.08.2025 22:12 — 👍 1 🔁 0 💬 2 📌 0

The "LLM debunking" or even "media literacy for AI"-style material is going to date *so* fast. Much of it may be inapplicable by the time students graduate.

03.08.2025 22:02 — 👍 0 🔁 0 💬 1 📌 0

I think "AI literacy" is the path that will often be adopted — because it's easier to find people to teach it, and because faculty aren't ready to affirm that machine learning is going to be central to 21c life.

But I really think we would serve students better by teaching fundamentals. +

03.08.2025 22:02 — 👍 0 🔁 0 💬 1 📌 0

Yes, I mean for all students.

03.08.2025 21:44 — 👍 2 🔁 0 💬 1 📌 0

Yep, implementing stuff by hand is definitely the way to understand it

03.08.2025 21:34 — 👍 0 🔁 0 💬 0 📌 0

I think the answer is probably just “inertia”

The stated rationale would be something like “they can take that to fulfill their quantitative requirement,” plus probably a bit of throat-clearing about not wanting to be narrowly vocational

But actually just inertia

03.08.2025 20:46 — 👍 1 🔁 0 💬 1 📌 0

Text Shot: Something that has become undeniable this month is that the best available open weight models now come from the Chinese AI labs.

I continue to have a lot of love for Mistral, Gemma and Llama but my feeling is that Qwen, Moonshot and Z.ai have positively smoked them over the course of July.

The best available open weight LLMs now come from China https://simonwillison.net/2025/Jul/30/chinese-models/ #AI #OpenSource

03.08.2025 20:31 — 👍 14 🔁 4 💬 0 📌 0

"It's called transfer learning if it works and overfitting if it doesn't."

03.08.2025 20:32 — 👍 48 🔁 3 💬 3 📌 0

A problem that used to occur in underparameterized models

03.08.2025 19:39 — 👍 5 🔁 0 💬 0 📌 0

I hope you’re right, but I have learned that I usually underestimate both human inertia and the power of maladaptive institutions to persist nevertheless

03.08.2025 19:07 — 👍 0 🔁 0 💬 1 📌 0

most people are *bad* writers. if a tool is available that lets them be mediocre writers for one of the few times in their life when the quality of writing will matter for them, that's not a bad thing - assuming (big assumption) that they think to actually fact check it.

03.08.2025 16:53 — 👍 233 🔁 21 💬 17 📌 10

It's going to be much faster. Everything is going to be much faster (and that's a major issue).

03.08.2025 18:31 — 👍 3 🔁 1 💬 2 📌 0

Correct. We should be teaching all that in high school.

03.08.2025 18:39 — 👍 4 🔁 0 💬 1 📌 0

I disagree with Ted mostly, but strongly agree with him here, and I think a good question to ask is why core requirements have not been updated to include the basics of computing.

03.08.2025 18:17 — 👍 10 🔁 2 💬 1 📌 1

And also, if there’s a foundation of knowledge all students have, you can teach an ambitious Archeology class that builds on it, without having to convince your own dept to make it part of the major

03.08.2025 18:24 — 👍 11 🔁 1 💬 0 📌 0

The reasons are not purely instrumental. Students should know what overfitting is for the same reason they should know what a sentence or an equation is; it’s just a matter of understanding the logic that governs the world around you.

03.08.2025 18:15 — 👍 35 🔁 3 💬 4 📌 1

It’s going to take decades for this to happen, but an intro to machine learning should be required for first-year students.

03.08.2025 18:11 — 👍 65 🔁 4 💬 9 📌 5

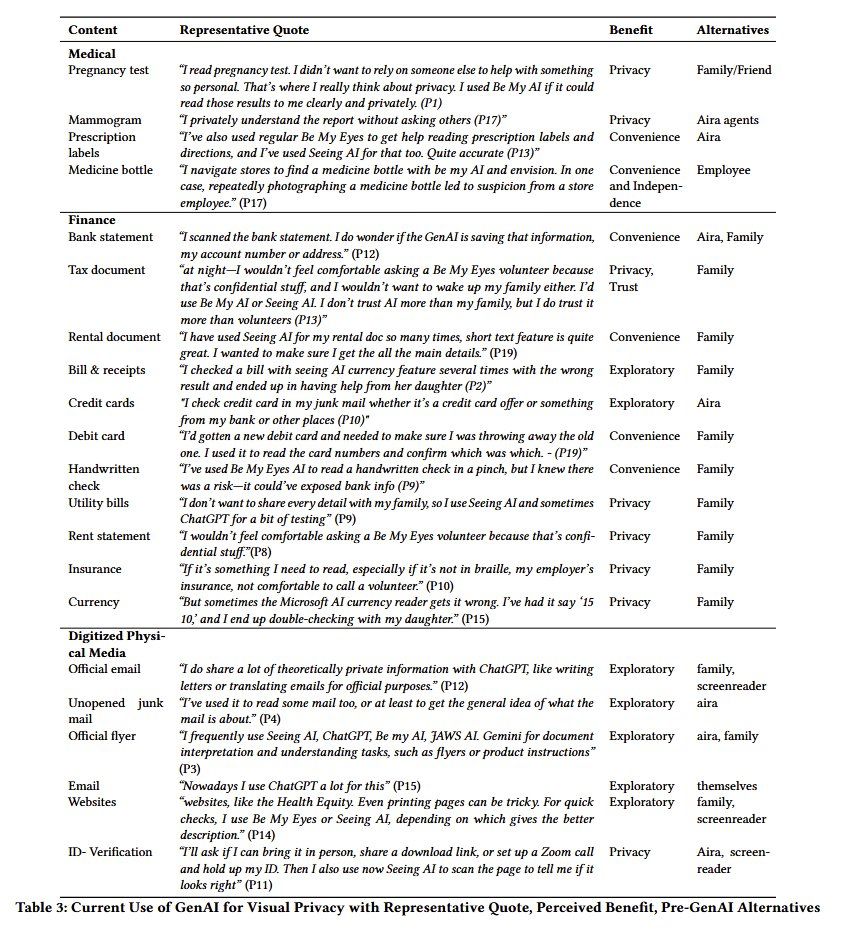

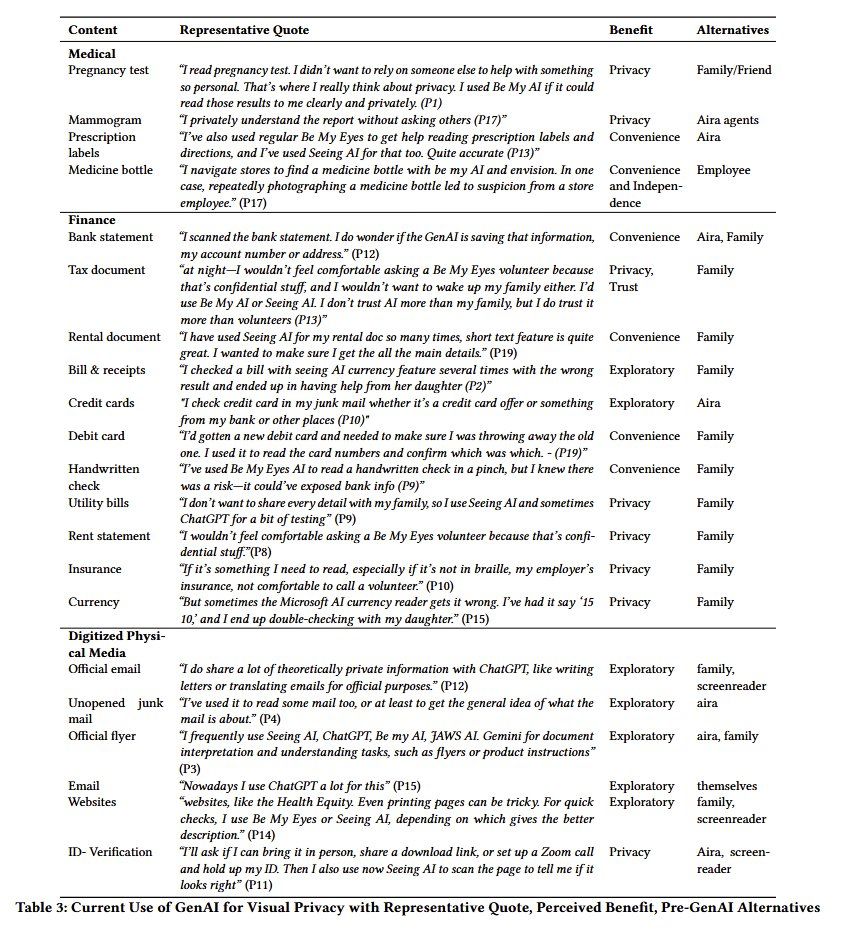

As a general purpose technology, AI has all kinds of unexpected uses that are hard to anticipate.

Example: this study finds that blind users turn to AI to describe sensitive materials (pregnancy tests, checking their appearance). They know it is not 100% accurate, but it provides privacy where there was none.

03.08.2025 17:11 — 👍 87 🔁 10 💬 5 📌 4

Absentee mathematician gone teaching. Aspiring statistician. Original AndOrNot_robot. Stats & maths education.

They/them - AMAB

Interests: Math. Engineering. Engineering Games. 3d printing and industrial design. Education.

Job: SRE at Google working on L7 Networking. If you use Google Cloud, my team maintains the top level systems that deliver *all* your traffic.

Canadian law professor. Transnational law, labour regulation, cooperatives and corporate governance, knowledge politics. Currently obsessed with the uses of legal scholarship. Two time dad. Not even a jack of any trades.

Fulbright scholar and Wallenberg Postdoctoral fellow @uclamath | PhD in applied math from @uppsalauni | Interested in mathematical biology, collective behaviour and complex systems | linneagyllingberg.github.io

Sociopolitical malcontent. Chinese lit sometimes. My name is a question mark.

networks, contagion, causality

faculty at MIT

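My heart is already worn out.

No matter how much I do, nothing comes back. It's never rewarded.

I think my follow-back (🔙) rate isn't even 10%.

Why, when I'm doing this much?

I'm so tired. Someone help me. No one will help me.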

Batman & Psychology author, Popular Culture Psychology editor, distinguished professor, social psychologist, Wheel of Fortune champ. Teach on media, crime, mental health.

16 books, most recently on Stranger Things, Spider-Man, The Handmaid's Tale.

#blue

Digital data/machine learning/economics, focused on developing economies. Faculty at Columbia. dan.bjorkegren.com

Computational linguist and citizen, NLP, Infosec, mostly. And of course every opinion of mine is representative of everyone I know

Computer science PhD — causal discovery for Earth science. Also interested in trustworthy ML.

Born and raised in New Mexico.

🎶Trying to make a dollar out of what makes cents.

[Machine] Learn to Save Earth 🌎🌏🌍

The latest technology news and analysis from the world's leading engineering magazine.

⚠️ CVE @ MITRE

✅ @hipcheck.mitre.org

🐗 @omnibor.io

💻 www.alilleybrinker.com/blog

Opinions are mine, you can’t have them.

Threat intel @ Intel 471 (@intel471.bsky.social). Personal account. Interests: Cybercrime, cyber threat intelligence, OSINT, data breaches, photography. Also produce Intel 471's "Cybercrime Exposed" podcast. #Australia

Seafloor mapper, Chief Scientist of smart mapping company Esri, Oregon State U. GIS/oceans professor, EC50, cyclist, 1st Black submersible diver to Challenger Deep, builds w/LEGO, raised in Hawaii. NAS, NAE, ORCID 0000-0002-2997-7611; She/her; views mine

PhD & lic. psychologist. Research and applied work at RISE Research Institutes of Sweden & Karolinska Institutet. R package for Rasch psychometrics: pgmj.github.io/easyRasch/

#openscience, #prevention, #psychometrics, #rstats, #photo

PhD candidate at MPI MiS, Lattice and SciencesPo médialab | Computational Social Science, Narratives, NLP, Complex Networks | https://pournaki.com

Defense analyst. Tech policy. Have a double life in CogSci/Philosophy of Mind. (It's confusing. Just go with it.)

All opinions entirely my own.