I'll be presenting this in person at NAACL, tomorrow at 11am in Ballroom C! Come on by - I'd love to chat with folks about this and all things interp / cog sci!

30.04.2025 13:46 — 👍 13 🔁 0 💬 0 📌 0

Lots of progress in mech interp (MI) lately! But how can we measure when new mech interp methods yield real improvements over prior work?

We propose 😎 𝗠𝗜𝗕: a 𝗠echanistic 𝗜nterpretability 𝗕enchmark!

(NAACL) When reading a sentence, humans predict what's likely to come next. When the ending is unexpected, this leads to garden-path effects: e.g., "The child bought an ice cream smiled."

Do LLMs show similar mechanisms? @michaelwhanna.bsky.social and I investigate: arxiv.org/abs/2412.05353

🚨New arXiv preprint!🚨

LLMs can hallucinate - but did you know they can do so with high certainty even when they know the correct answer? 🤯

We find those hallucinations in our latest work with @itay-itzhak.bsky.social, @fbarez.bsky.social, @gabistanovsky.bsky.social and Yonatan Belinkov

Excited to say that this was accepted to NAACL—looking forward to presenting it in Albuquerque!

24.01.2025 17:03 — 👍 9 🔁 0 💬 1 📌 0

🚨Call for Papers🚨

The Re-Align Workshop is coming back to #ICLR2025

Our CfP is up! Come share your representational alignment work at our interdisciplinary workshop at @iclr-conf.bsky.social

Deadline is 11:59 pm AOE on Feb 3rd

representational-alignment.github.io

Want to know the whole story? Check out the pre-print here! arxiv.org/abs/2412.05353 10/10

19.12.2024 13:40 — 👍 4 🔁 0 💬 1 📌 0

Unexpectedly, we find that, when answering follow-up questions like "The boy fed the chicken smiled. Did the boy feed the chicken?", LMs don't repair or rely on earlier syntactic features! But they also don't generate new syntactic features. 9/10

19.12.2024 13:40 — 👍 2 🔁 0 💬 1 📌 0

What do LMs do when the ambiguity is resolved? Do they repair their initial representations (which could look like adding on to the circuit we've shown)? Or do they reanalyze, for example by ignoring that circuit and using new syntactic features? 8/10

19.12.2024 13:40 — 👍 1 🔁 0 💬 1 📌 0

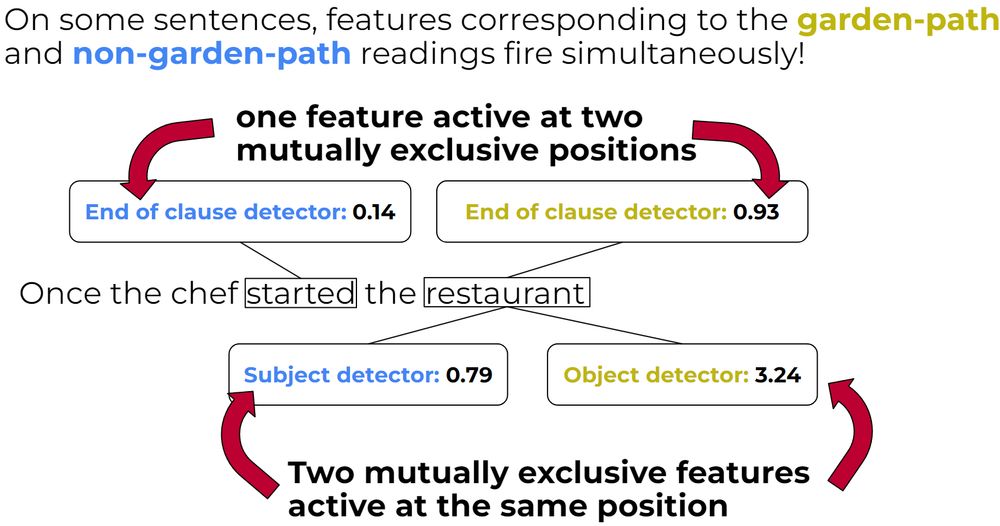

Do LMs represent multiple readings at once? We find that features corresponding to both readings (which should be mutually exclusive) fire at the same time! Structural probes yield the same conclusion, and rely on the same features to do so. 7/10

19.12.2024 13:40 — 👍 2 🔁 0 💬 1 📌 0

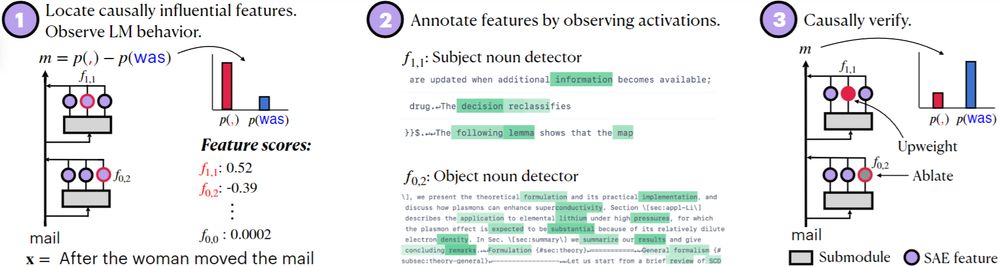

We can also verify that LMs use these features in the ways that we expect. By deactivating features corresponding to one reading, and strongly activating those corresponding to another, we can control the LM’s preferred reading! 6/10

19.12.2024 13:40 — 👍 1 🔁 0 💬 1 📌 0

We assemble these features into a circuit describing how they are built up! Earlier layers perform low-level word / part-of-speech detection, while higher-level syntactic features are constructed in later layers. 5/10

19.12.2024 13:40 — 👍 1 🔁 0 💬 1 📌 0

First, we decompose the LM’s activations into SAE features, and find those that cause it to prefer a given reading. Some represent syntactically relevant (and surprisingly complex) features, like subject/objecthood and the ends of subordinate clauses. 4/10

19.12.2024 13:40 — 👍 1 🔁 0 💬 1 📌 0

How humans process sentences like these has been hotly debated. Do we consider only one reading, or many at once? And if we realize our reading was wrong, do we repair our representations or reanalyze from scratch? We set out to answer these questions in LMs! 3/10

19.12.2024 13:40 — 👍 2 🔁 0 💬 1 📌 0

We use garden path sentences as a case study. These are great stimuli for this purpose, as they initially suggest one reading, but are later revealed to have another—see figure for example. We propose to use these to do a mechanistic investigation of LM sentence processing! 2/10

19.12.2024 13:40 — 👍 3 🔁 0 💬 1 📌 0

Sentences are partially understood before they're fully read. How do LMs incrementally interpret their inputs?

In a new paper, @amuuueller.bsky.social and I use mech interp tools to study how LMs process structurally ambiguous sentences. We show LMs rely on both syntactic & spurious features! 1/10

🚨 PhD position alert! 🚨

I'm hiring a fully funded PhD student to work on mechanistic interpretability at @uva-amsterdam.bsky.social. If you're interested in reverse engineering modern deep learning architectures, please apply: vacatures.uva.nl/UvA/job/PhD-...

Would love to be added to this!

26.11.2024 15:50 — 👍 2 🔁 0 💬 0 📌 0

Thanks for having me, and for the great questions and discussion! The pleasure was all mine 😁

22.11.2024 16:05 — 👍 2 🔁 0 💬 0 📌 0