"Densing Law of LLMs" paper: arxiv.org/abs/2412.04315

11.12.2024 11:40 (@turingpost.bsky.social)

Founder of the newsletter that explores AI & ML (https://www.turingpost.com) - AI 101 series - ML techniques - AI Unicorns profiles - Global dynamics - ML History - AI/ML Flashcards Haven't decided yet which handle to maintain: this or @kseniase

"Densing Law of LLMs" paper: arxiv.org/abs/2412.04315

• The amount of work an LLM can handle on the same hardware is growing even faster than improvements in model density or chip power alone, because the two gains compound.

That's why researchers suggest focusing on improving "density" instead of just aiming for bigger and more powerful models.

Here are the key findings from the study:

• Inference costs for models of the same performance are dropping as models become more efficient.

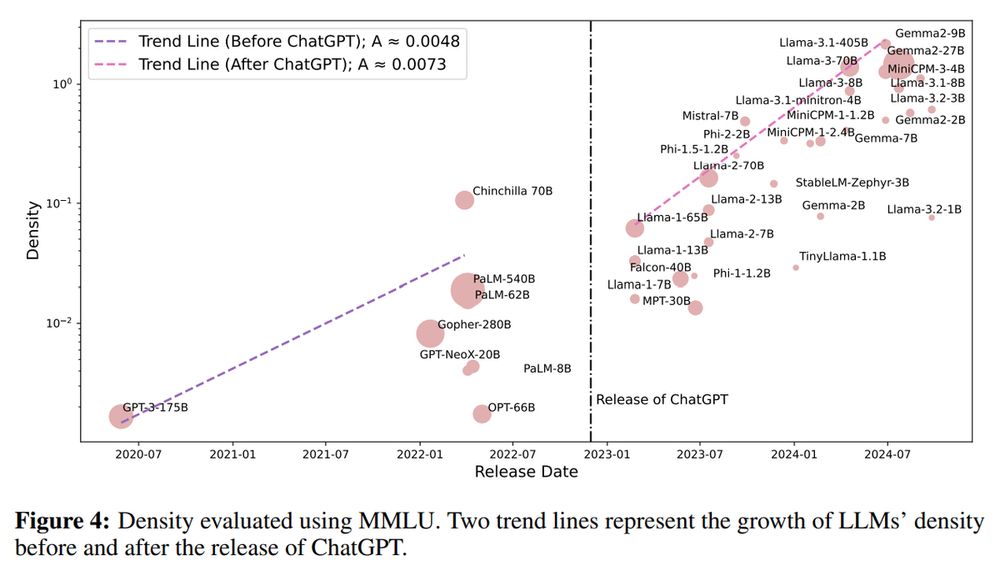

• After the release of ChatGPT, the growth rate of new models' density increased by about 50%!

• Techniques like pruning and distillation don’t necessarily make models denser (that is, more efficient).

Estimating effective parameter size:

It uses a two-step process:

- Loss Estimation: Fits a scaling law that links a model's parameter count and training data size to its loss on downstream tasks.

- Performance Estimation: Uses a sigmoid function to predict how well a model performs based on its loss.
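A minimal sketch of the two-step estimate, assuming a Chinchilla-style loss fit L(N, D) = E + A·N^-α + B·D^-β and a decreasing sigmoid from loss to benchmark score. All constants below are hypothetical placeholders, not the paper's fitted values. Inverting both steps turns an observed score into an effective parameter size for a fixed training-token budget D.

```python
import math

# Hypothetical fitted constants (placeholders, NOT the paper's values).
E, A, ALPHA = 1.69, 406.4, 0.34            # loss fit: L(N, D) = E + A*N^-ALPHA + B*D^-BETA
B, BETA = 410.7, 0.28
C, D_OFF, GAMMA, L0 = 0.9, 0.1, 5.0, 2.3   # sigmoid: S(L) = C / (1 + exp(GAMMA*(L - L0))) + D_OFF


def estimate_loss(n_params: float, n_tokens: float) -> float:
    """Step 1 (loss estimation): parameters and training tokens -> expected loss."""
    return E + A * n_params ** -ALPHA + B * n_tokens ** -BETA


def estimate_score(loss: float) -> float:
    """Step 2 (performance estimation): loss -> score via a sigmoid.

    GAMMA > 0, so a lower loss gives a higher score.
    """
    return C / (1.0 + math.exp(GAMMA * (loss - L0))) + D_OFF


def effective_params(score: float, n_tokens: float) -> float:
    """Invert both steps: the parameter count a reference model trained on
    n_tokens would need to reach `score`."""
    if not D_OFF < score < C + D_OFF:
        raise ValueError("score outside the sigmoid's range")
    # Invert the sigmoid: score -> loss.
    loss = L0 + math.log(C / (score - D_OFF) - 1.0) / GAMMA
    # Invert the loss fit: loss -> N (the N-term must stay positive).
    n_term = loss - E - B * n_tokens ** -BETA
    if n_term <= 0:
        raise ValueError("loss is unreachable with this token budget")
    return (A / n_term) ** (1.0 / ALPHA)
```

Round-tripping a model through both steps recovers its own size; a model that scores higher than the curve predicts for its size gets an effective size larger than its actual one.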

Scaling law:

The density of a model is the ratio of its effective parameter size to its actual parameter size.

If the effective size is close to or larger than the actual size (density of about 1 or more), the model is very efficient.
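Written out, with \(\hat{N}(S_{\mathcal{M}})\) the effective parameter size at the model's score \(S_{\mathcal{M}}\) and \(N_{\mathcal{M}}\) its actual size (notation chosen here for illustration), plus a worked example with hypothetical numbers:

```latex
\rho(\mathcal{M}) = \frac{\hat{N}(S_{\mathcal{M}})}{N_{\mathcal{M}}},
\qquad
\text{e.g. } N_{\mathcal{M}} = 2\text{B},\ \hat{N}(S_{\mathcal{M}}) = 4\text{B}
\;\Rightarrow\; \rho(\mathcal{M}) = \frac{4\text{B}}{2\text{B}} = 2 .
```

A 2B model that performs like a 4B reference model has density 2; one that only matches a 1B reference has density 0.5.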

Why is density important?

A higher-density model delivers the same or better results with fewer parameters. This reduces computational costs, makes models suitable for devices with limited resources, like smartphones, and avoids unnecessary energy use.

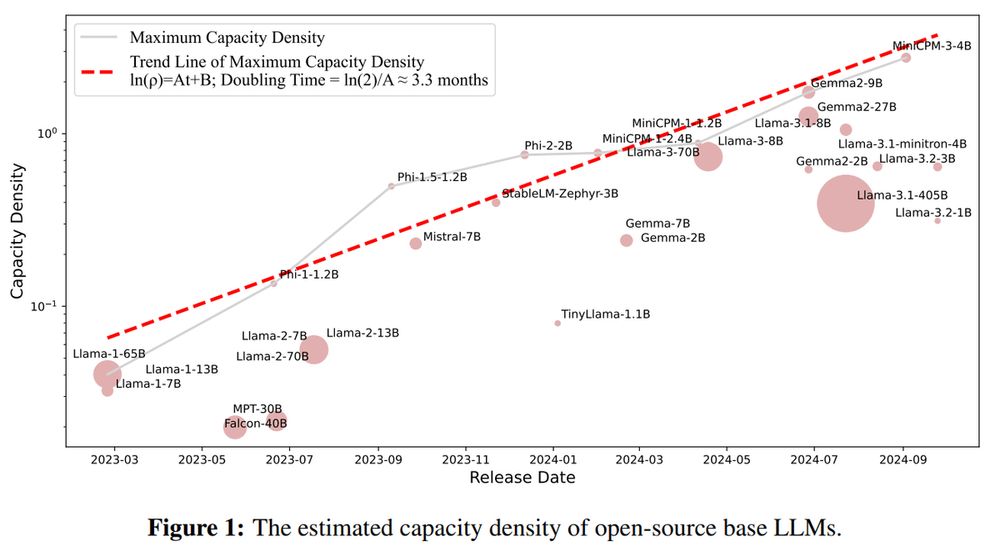

Interestingly, they found a trend they call the Densing Law:

The maximum capacity density of LLMs doubles roughly every 3 months (about 3.3 months, per the paper), meaning that newer models are getting much better at balancing performance and size.
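A minimal sketch of how a doubling time like this can be read off data: fit a straight line to ln(density) over time and take ln 2 divided by the slope. The data points and the hardware doubling time used below are hypothetical. The last helper illustrates the claim that capacity on the same hardware grows faster than density or chip power alone: when two exponential trends multiply, their growth rates add, so the combined doubling time is shorter than either one.

```python
import math


def fit_doubling_time(days: list[float], densities: list[float]) -> float:
    """Least-squares fit of ln(density) = a * t + b; returns the doubling time ln(2) / a."""
    n = len(days)
    ys = [math.log(d) for d in densities]
    mean_t = sum(days) / n
    mean_y = sum(ys) / n
    cov = sum((t - mean_t) * (y - mean_y) for t, y in zip(days, ys))
    var = sum((t - mean_t) ** 2 for t in days)
    return math.log(2) / (cov / var)


def growth_factor(months: float, doubling_months: float) -> float:
    """How much density multiplies over `months` at a fixed doubling time."""
    return 2.0 ** (months / doubling_months)


def combined_doubling_time(t1: float, t2: float) -> float:
    """Doubling time of the product of two exponential trends
    (e.g. density x chip price-performance): 1/T = 1/t1 + 1/t2."""
    return 1.0 / (1.0 / t1 + 1.0 / t2)
```

For example, `growth_factor(12, 3.3)` is about 12, so a 3.3-month doubling time implies roughly a twelvefold density increase per year.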

Let's look at this more precisely:

Reading about scaling laws recently, I came across an interesting point:

Focusing on the balance between a model's size and its performance matters more than aiming for ever-larger models

Tsinghua University and ModelBest Inc. propose the idea of “capacity density” to measure how efficiently a model uses its size.

Explore more interesting ML/AI news in our free weekly newsletter -> www.turingpost.com/p/fod79

10.12.2024 22:46

2. AI system from World Labs, co-founded by Fei-Fei Li:

Transforms a single image into interactive 3D scenes with varied art styles and realistic physics. You can explore, interact with elements and move within AI-generated environments directly in your web browser

www.youtube.com/watch?v=lPYJ...

1. Google DeepMind's Genie 2

Generates 3D environments with object interactions, animations, and physical effects from one image or text prompt. You can interact with them in real-time using a keyboard and mouse.

Paper: deepmind.google/discover/blo...

Our example: www.youtube.com/watch?v=YjO6...

An incredible shift is happening in spatial intelligence!

Here are the 2 latest revolutionary world models, which create interactive 3D environments:

1. Google DeepMind's Genie 2

2. AI system from World Labs, co-founded by Fei-Fei Li

Explore more below 👇

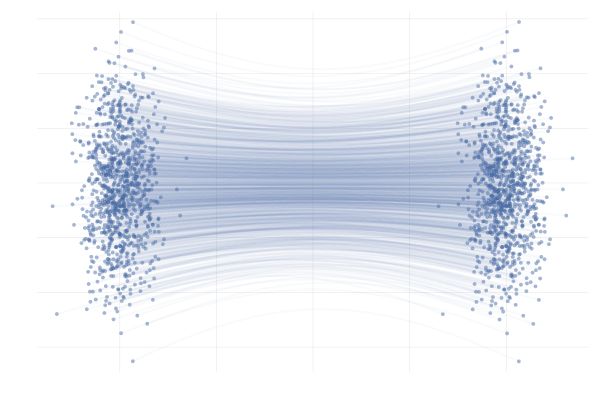

In our new AI 101 episode, we discuss:

- FM concepts for optimizing the path from noise to realistic data

- Continuous Normalizing Flows (CNFs)

- Conditional Flow Matching

- Differences between FM and diffusion models

Find out more: turingpost.com/p/flowmatching

What is Flow Matching?

Flow Matching (FM) is used in top generative models, like Flux, F5-TTS, E2-TTS, and MovieGen, with state-of-the-art results. Some experts even say that FM might surpass diffusion models👇
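A toy, pure-Python sketch of the core Flow Matching idea, assuming the common straight-line path x_t = (1 - t)·x0 + t·x1 between a noise sample x0 and a data sample x1. The conditional training target is the constant velocity x1 - x0, and the loss is the squared error between a model's velocity and that target. Here the "dataset" is a single hypothetical point `TARGET`, so the ideal velocity field is known in closed form and no network is trained; sampling integrates the velocity from t = 0 to t = 1 with Euler steps.

```python
import random

TARGET = 3.0  # hypothetical 1-D "dataset": every data sample equals 3.0


def cfm_loss(velocity, n_samples: int = 1000, seed: int = 0) -> float:
    """Monte Carlo estimate of the conditional flow matching loss.

    velocity(x, t) is the model's predicted velocity at point x and time t.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        x0 = rng.gauss(0.0, 1.0)     # noise sample
        x1 = TARGET                  # data sample
        t = rng.uniform(0.0, 0.999)  # avoid t = 1, where the ideal field is undefined
        xt = (1 - t) * x0 + t * x1   # point on the straight-line path
        target_v = x1 - x0           # conditional velocity along that path
        total += (velocity(xt, t) - target_v) ** 2
    return total / n_samples


def ideal_velocity(x: float, t: float) -> float:
    """Exact marginal velocity field for a point-mass dataset at TARGET."""
    return (TARGET - x) / (1 - t)


def sample(velocity, x0: float, steps: int = 100) -> float:
    """Generate a sample by Euler-integrating dx/dt = velocity(x, t) from t = 0 to t = 1."""
    x, dt = x0, 1.0 / steps
    for k in range(steps):
        x += dt * velocity(x, k * dt)
    return x
```

In a real model, `velocity` would be a neural network trained to minimize this loss over many data samples instead of a hand-written formula; the ideal field scores near-zero loss and carries any noise sample to `TARGET`.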

Also, elevate your AI game with our free newsletter ↓

www.turingpost.com/subscribe

See other important AI/ML news in our free weekly newsletter: www.turingpost.com/p/fod78

05.12.2024 00:29

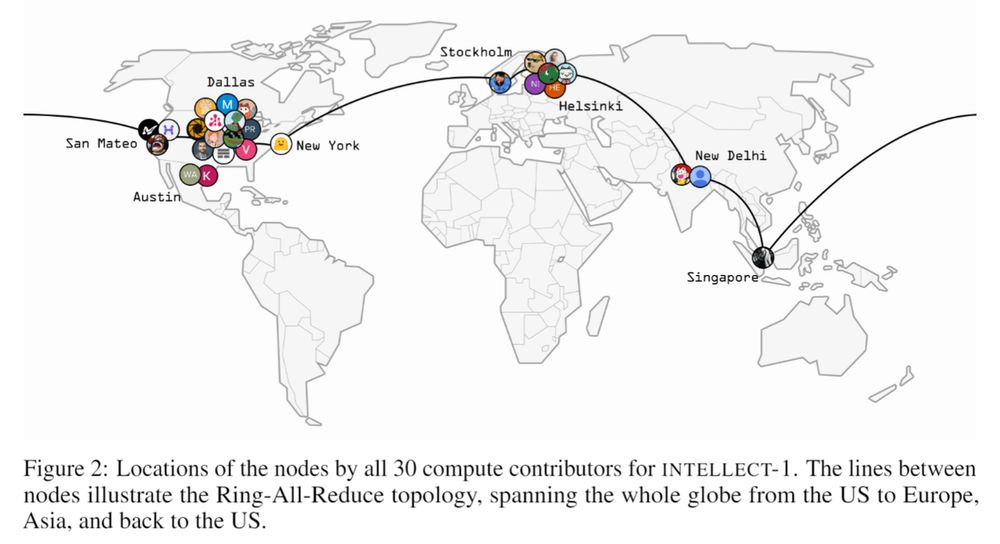

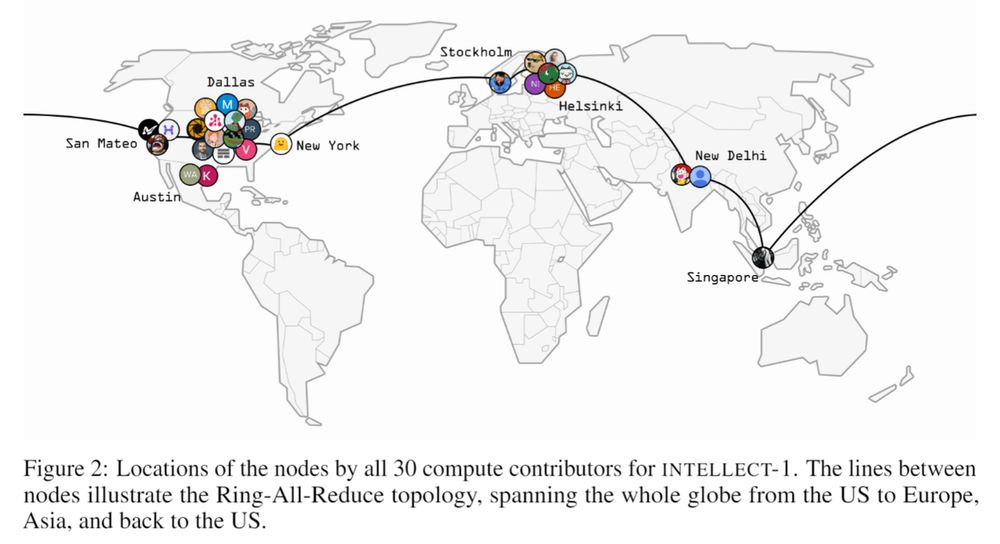

INTELLECT-1 by Prime Intellect

INTELLECT-1 is a 10B open-source LLM trained over 42 days on 1T tokens across 14 global nodes. It leverages the PRIME framework for exceptional efficiency (400× bandwidth reduction).

github.com/PrimeIntelle...
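A back-of-the-envelope sketch of how a reduction of that size can arise, assuming a DiLoCo-style setup that synchronizes only once every `inner_steps` local steps and sends updates quantized from fp32 to int8. These settings are illustrative assumptions; the exact PRIME configuration may differ. The quantizer below is a simple symmetric int8 scheme of the kind such a setup could use (hypothetical, not PRIME's code).

```python
def bandwidth_reduction(inner_steps: int, bytes_before: int = 4, bytes_after: int = 1) -> float:
    """Traffic saving vs. data-parallel training that all-reduces fp32 gradients every step.

    Syncing once per `inner_steps` divides the number of syncs; quantizing each
    value from `bytes_before` to `bytes_after` bytes shrinks each payload.
    """
    return inner_steps * bytes_before / bytes_after


def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: returns integer codes and the scale."""
    max_abs = max(abs(v) for v in values) or 1.0  # avoid a zero scale for all-zero input
    scale = max_abs / 127.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes: list[int], scale: float) -> list[float]:
    """Map integer codes back to approximate float values."""
    return [c * scale for c in codes]
```

Under these assumptions, `bandwidth_reduction(100)` gives 100 × 4 = 400, and each dequantized value is off by at most half a quantization step.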

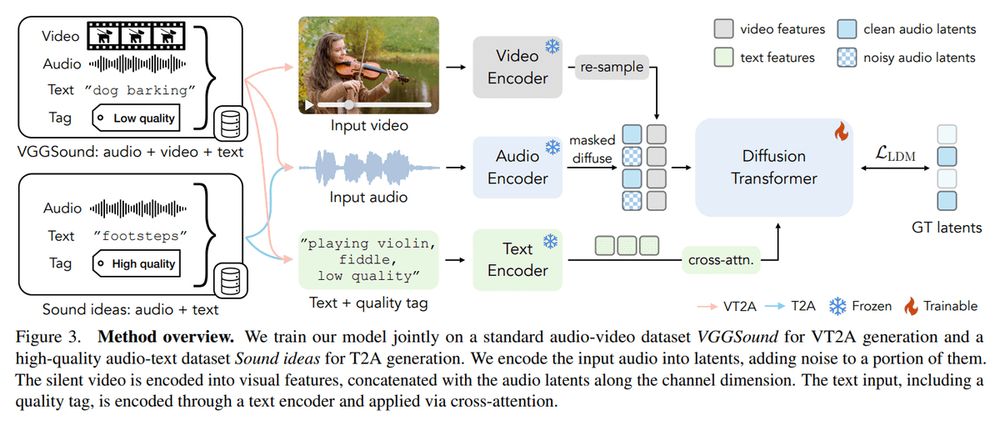

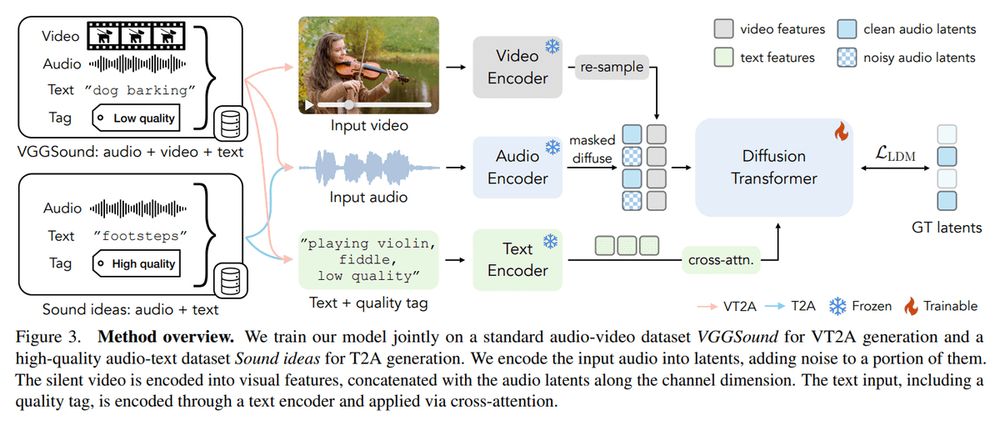

MultiFoley by Adobe Research

MultiFoley is an AI model that generates high-quality sound effects from text, audio, and video inputs. Its demos highlight its creative potential.

arxiv.org/abs/2411.17698

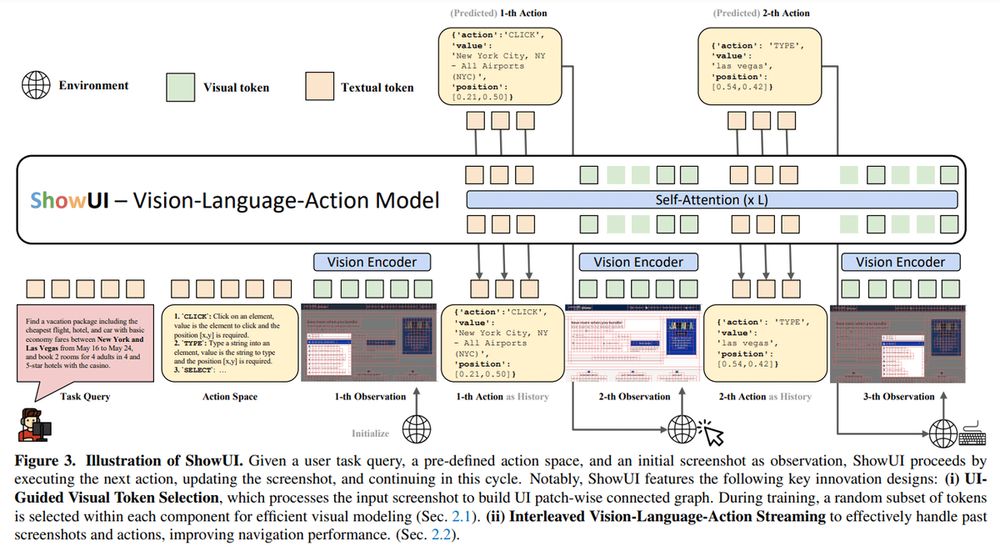

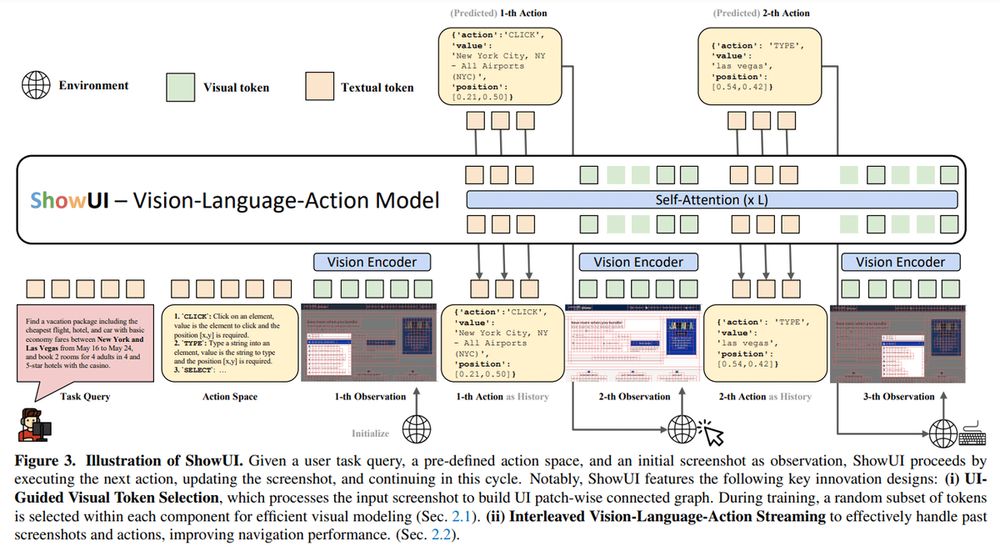

ShowUI by Show Lab, NUS, Microsoft

ShowUI is a 2B vision-language-action model tailored for GUI tasks:

- features UI-guided token selection (33% fewer tokens)

- interleaved streaming for multi-turn tasks

- a curated 256K-sample dataset

- achieves 75.1% zero-shot grounding accuracy

arxiv.org/abs/2411.17465
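A simplified sketch of the idea behind UI-guided token selection, assuming each screenshot patch is summarized by a single color value: adjacent patches with identical values are merged into connected components with union-find, and only one token per component is kept. ShowUI's actual selection rule within a component differs, so treat this as an illustration of the grouping step only.

```python
def connected_components(grid: list[list[int]]) -> list[int]:
    """Label each patch (row-major order) with a component id; a patch joins a
    component when a 4-neighbour has exactly the same value."""
    rows, cols = len(grid), len(grid[0])
    parent = list(range(rows * cols))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    def union(a: int, b: int) -> None:
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    for r in range(rows):
        for c in range(cols):
            idx = r * cols + c
            if c + 1 < cols and grid[r][c] == grid[r][c + 1]:
                union(idx, idx + 1)
            if r + 1 < rows and grid[r][c] == grid[r + 1][c]:
                union(idx, idx + cols)
    return [find(i) for i in range(rows * cols)]


def select_tokens(grid: list[list[int]]) -> list[int]:
    """Keep the first patch index of each component (a simplified selection rule)."""
    seen, kept = set(), []
    for idx, comp in enumerate(connected_components(grid)):
        if comp not in seen:
            seen.add(comp)
            kept.append(idx)
    return kept
```

On a mostly blank screenshot the background collapses to a single token, while each distinct UI element keeps its own.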

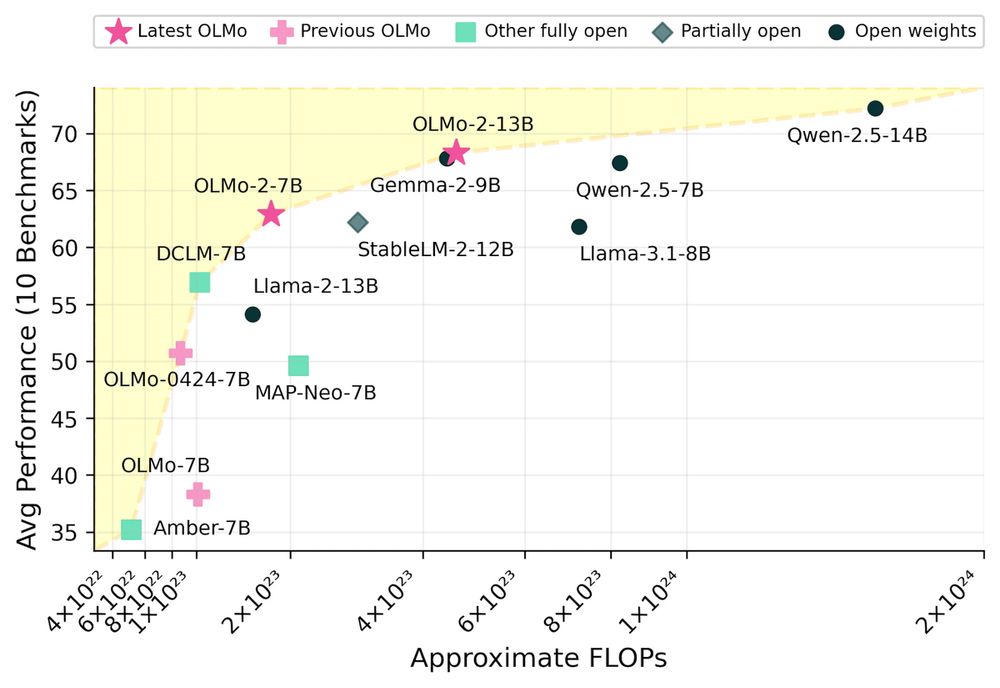

OLMo 2 by Allen AI

OLMo 2, a family of fully open LMs with 7B and 13B parameters, is trained on up to 5 trillion tokens.

allenai.org/blog/olmo2

Alibaba’s QwQ-32B

It impresses with strong math, coding, and reasoning scores, ranking between Claude 3.5 Sonnet and OpenAI’s o1-mini.

- Optimized for consumer GPUs through quantization

- Open-sourced under the Apache 2.0 license, with open weights and visible reasoning tokens

huggingface.co/Qwen/QwQ-32B...

Amazing models of the week:

• Alibaba’s QwQ-32B

• OLMo 2 by Allen AI

• ShowUI by Show Lab, NUS, Microsoft

• Adobe's MultiFoley

• INTELLECT-1 by Prime Intellect

🧵

Like/repost the 1st post to support our work 🤍

Also, elevate your AI game with our free newsletter ↓

www.turingpost.com/subscribe

Find a complete list of the latest research papers in our free weekly digest: www.turingpost.com/p/fod78

02.12.2024 23:14

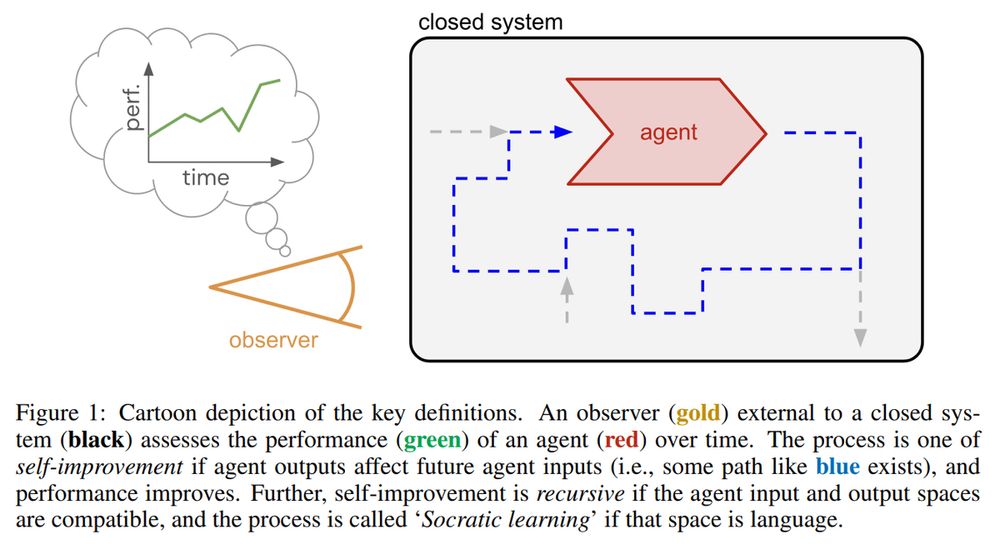

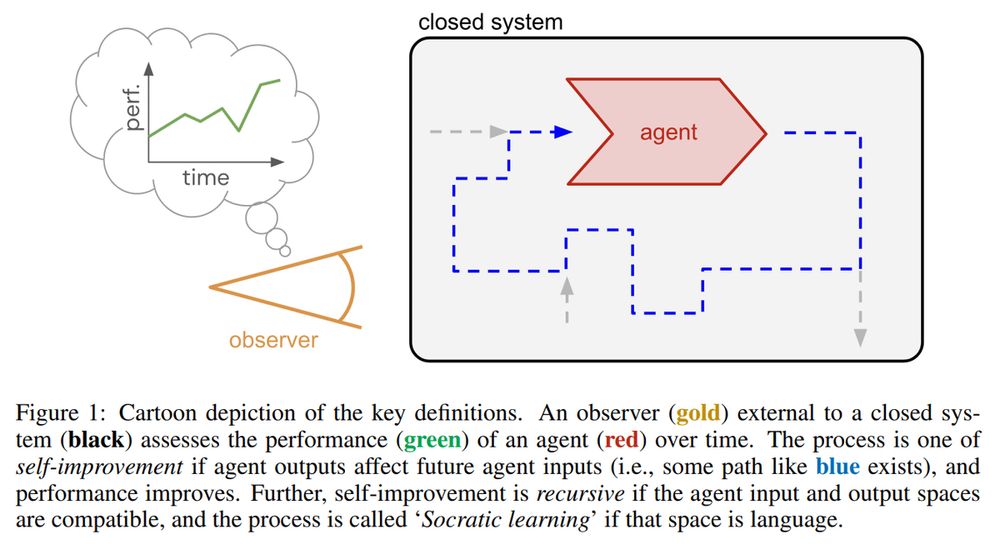

Boundless Socratic Learning with Language Games, Google DeepMind

This framework leverages recursive language-based "games" for self-improvement, focusing on feedback, coverage, and scalability. It suggests a roadmap for scalable AI via autonomous data generation and feedback loops.

arxiv.org/abs/2411.16905

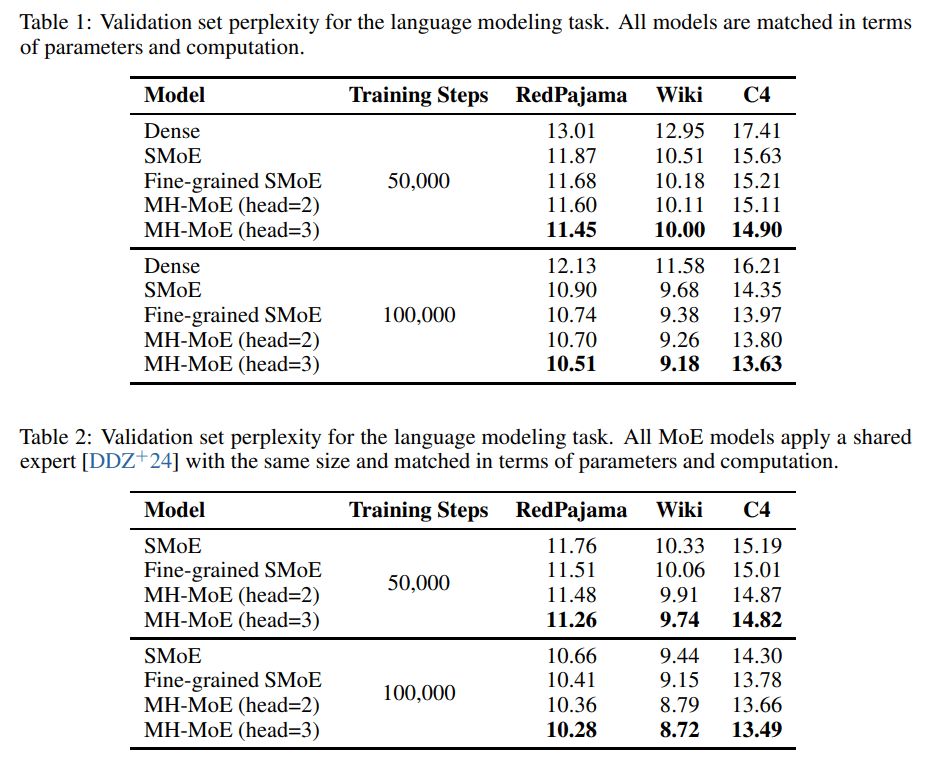

MH-MoE: Multi-Head Mixture-of-Experts

@msftresearch.bsky.social’s MH-MoE improves sparse MoE by adding a multi-head mechanism, reducing perplexity without increasing FLOPs and demonstrating robust performance under quantization.

arxiv.org/abs/2411.16205
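A minimal sketch of the multi-head routing idea, assuming each token vector is split into `heads` sub-tokens, each sub-token is routed independently (top-1 here, for simplicity) to an expert, and the expert outputs are concatenated back into one vector. The gate and the experts are toy, hypothetical stand-ins; a real layer would use learned projections, gates, and experts.

```python
from typing import Callable

Vector = list[float]
Expert = Callable[[Vector], Vector]


def split_heads(token: Vector, heads: int) -> list[Vector]:
    """Split one token vector into `heads` equal-size sub-tokens."""
    if len(token) % heads:
        raise ValueError("token dimension must be divisible by heads")
    size = len(token) // heads
    return [token[i * size:(i + 1) * size] for i in range(heads)]


def route_top1(sub_token: Vector, gate: list[Vector]) -> int:
    """Pick the expert whose gate vector has the largest dot product with the sub-token."""
    scores = [sum(w * x for w, x in zip(g, sub_token)) for g in gate]
    return max(range(len(scores)), key=scores.__getitem__)


def mh_moe_layer(token: Vector, heads: int, gate: list[Vector], experts: list[Expert]) -> Vector:
    """Route each sub-token to one expert, then concatenate the outputs back into a token."""
    out: Vector = []
    for sub in split_heads(token, heads):
        out.extend(experts[route_top1(sub, gate)](sub))
    return out
```

Because routing happens per sub-token, a single token can be processed by several different experts at once.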

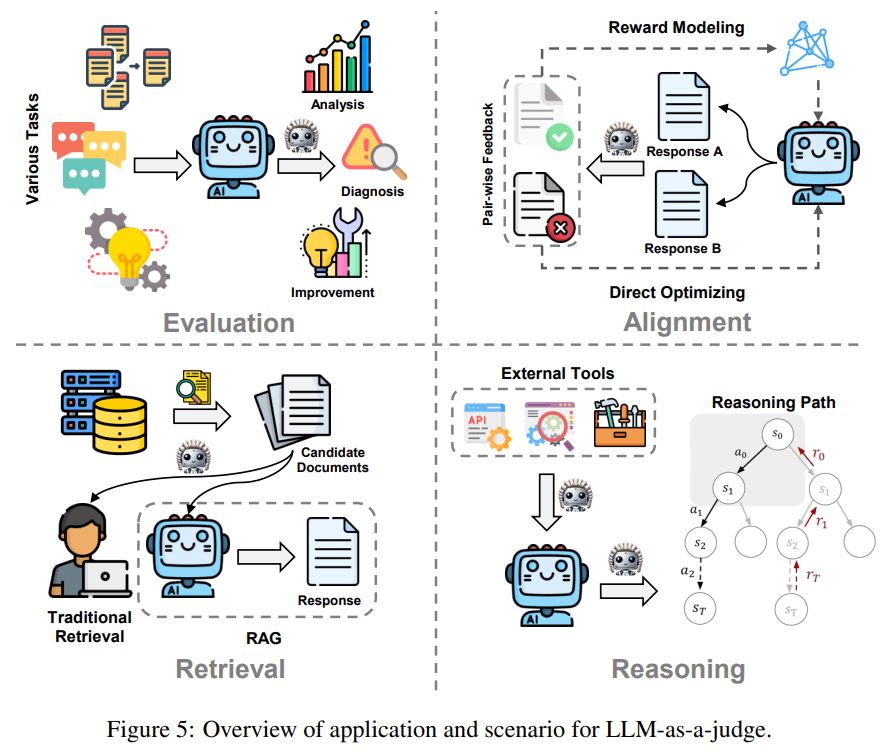

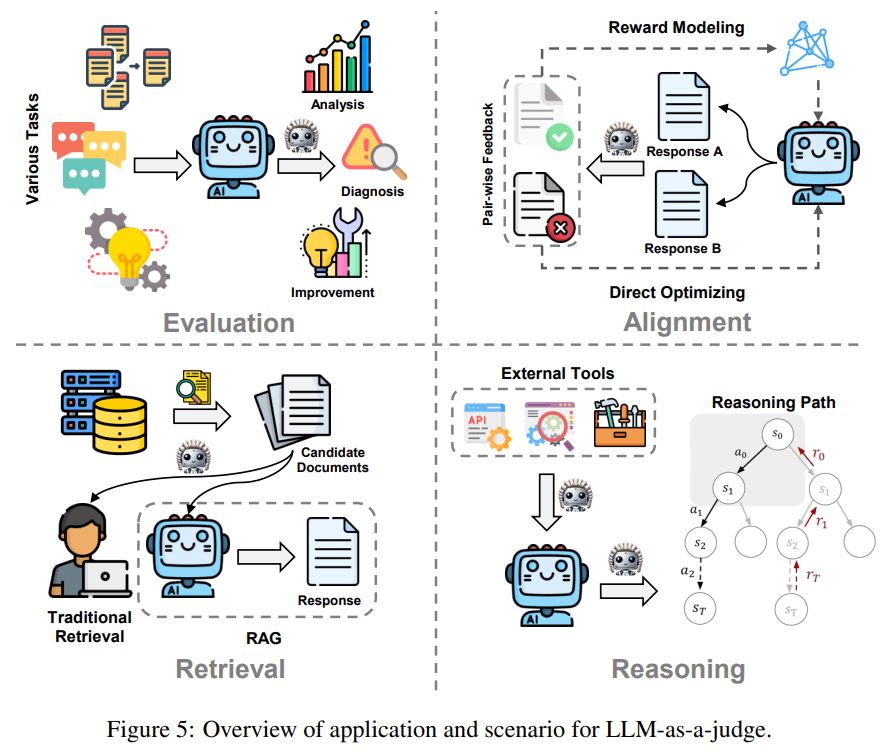

LLM-as-a-Judge:

Presents a taxonomy of methodologies and applications of LLMs for judgment tasks, highlighting bias, vulnerabilities, and self-judgment, with future directions in human-LLM collaboration and bias mitigation

arxiv.org/abs/2411.16594
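Position bias, where a judge favors whichever answer it sees first, is one of the biases discussed for LLM judges. A commonly used mitigation is to ask twice with the answer order swapped and only accept a verdict that survives the swap. A minimal sketch, where `judge` is a hypothetical callable standing in for an LLM call that returns "A" or "B":

```python
from typing import Callable

Judge = Callable[[str, str, str], str]  # (question, first_answer, second_answer) -> "A" or "B"


def debiased_verdict(judge: Judge, question: str, ans1: str, ans2: str) -> str:
    """Return "1", "2", or "tie" after judging in both orders."""
    first = judge(question, ans1, ans2)   # ans1 shown in position A
    second = judge(question, ans2, ans1)  # ans1 shown in position B
    pick1 = "1" if first == "A" else "2"
    pick2 = "1" if second == "B" else "2"
    return pick1 if pick1 == pick2 else "tie"  # disagreement signals position bias
```

A judge that always picks the first answer ends up with a tie, while a judge with a consistent preference keeps its verdict in both orders.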

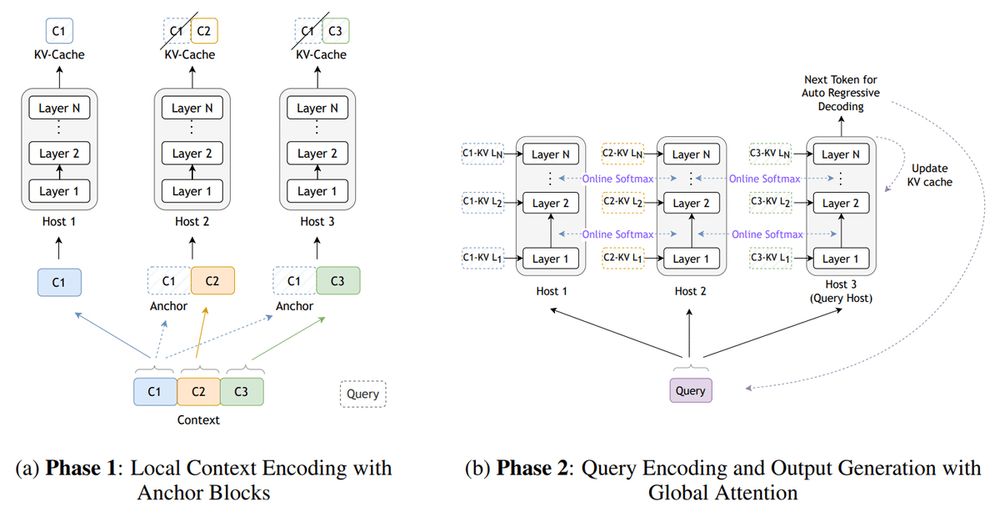

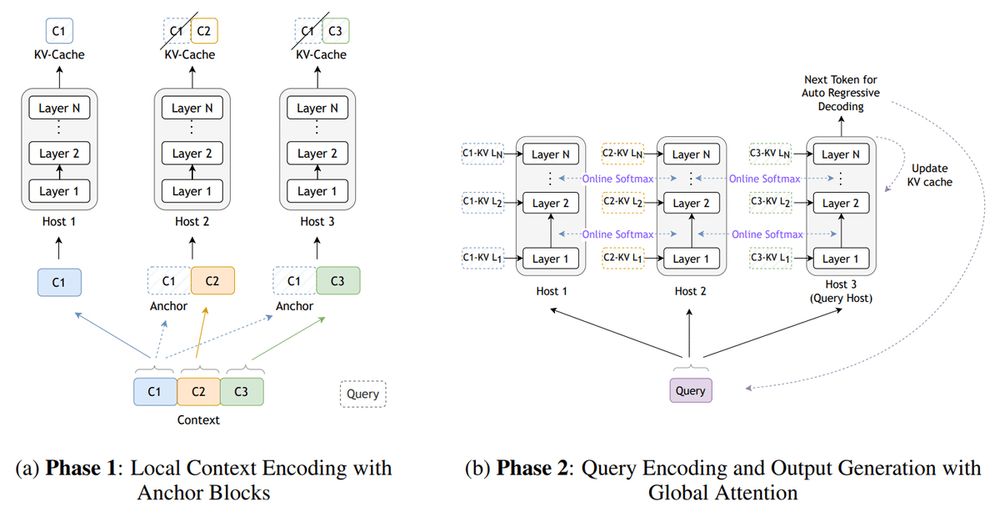

Star Attention:

NVIDIA introduced a block-sparse attention mechanism for Transformer-based LLMs. It uses local/global attention phases to achieve up to 11x inference speedup on sequences up to 1M tokens, retaining 95-100% accuracy.

arxiv.org/abs/2411.17116

Code: github.com/NVIDIA/Star-...
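A rough sketch of the local (context-encoding) phase as we understand it: the context is cut into blocks, and each block attends causally to itself plus the first "anchor" block, so blocks can be encoded in parallel on different hosts. The global phase, where the query attends to all cached blocks, is not shown. Block sizes are arbitrary, and this boolean mask is an illustration, not NVIDIA's code.

```python
def phase1_mask(seq_len: int, block: int) -> list[list[bool]]:
    """mask[i][j] is True if context token i may attend to token j in the local phase.

    Token i attends causally within its own block and, for blocks after the
    first, to every token of the anchor block (block 0).
    """
    mask = [[False] * seq_len for _ in range(seq_len)]
    for i in range(seq_len):
        start = (i // block) * block
        for j in range(start, i + 1):             # causal, within own block
            mask[i][j] = True
        if start > 0:
            for j in range(min(block, seq_len)):  # anchor block
                mask[i][j] = True
    return mask


def attended_fraction(mask: list[list[bool]]) -> float:
    """Share of full causal attention pairs that the block-sparse mask keeps."""
    n = len(mask)
    kept = sum(row.count(True) for row in mask)
    return kept / (n * (n + 1) // 2)
```

The kept fraction shrinks as the sequence grows relative to the block size, which is where the speedup on long contexts comes from.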

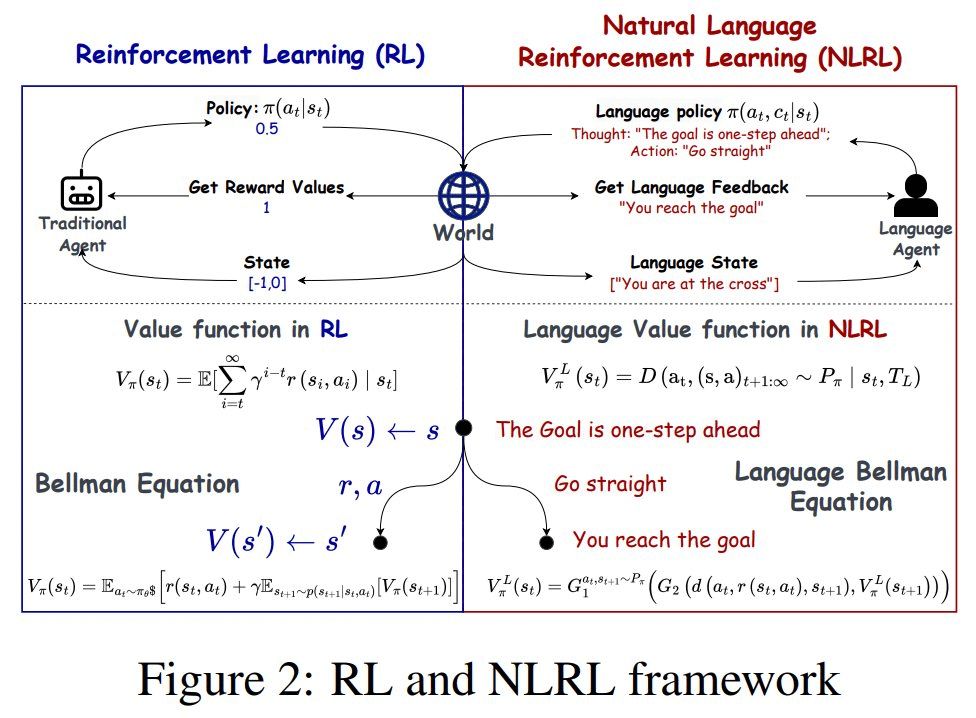

Natural Language Reinforcement Learning:

Redefines reinforcement learning components using natural language for interpretable and knowledge-rich decision-making.

arxiv.org/pdf/2411.14251 t.co/Kru1Hz1JcX

bsky.app/profile/turi...

Top 5 research papers of the week:

• Natural Language Reinforcement Learning

• Star Attention, NVIDIA

• Opportunities and Challenges of LLM-as-a-judge

• MH-MoE: Multi-Head Mixture-of-Experts, @msftresearch.bsky.social

• Boundless Socratic Learning with Language Games, Google DeepMind

🧵