Tool use in insects: Assassin bugs apply resin to their forelegs before a stingless bee hunt. This makes the bees attack the bug in just the right position to be caught!

Videos well worth watching

www.pnas.org/doi/full/10....

@tmoldwin.bsky.social

Computational neuroscience: Plasticity, learning, connectomics.

(At high spatiotemporal resolution.)

17.05.2025 08:27 — 👍 1 🔁 0 💬 0 📌 0

In the grand scheme of things the main thing that matters is advances in microscopy and imaging methods. Almost all results in neuroscience are tentative because we can't see everything that's happening at the same time.

17.05.2025 08:26 — 👍 1 🔁 0 💬 1 📌 0

I also have a stack of these, I call it 'apocalypse food'.

16.05.2025 19:30 — 👍 2 🔁 0 💬 0 📌 0

You are correct about this.

16.05.2025 19:10 — 👍 1 🔁 0 💬 0 📌 0

But so is every possible mapping, so the choice of a specific mapping is not contained within the data. Even the fact that the training data comes in X,y pairs is not sufficient to provide a mapping that generalizes in a specific way. The brain chooses a specific algorithm that generalizes well.

16.05.2025 19:10 — 👍 1 🔁 0 💬 0 📌 0

(Consider that one can create an arbitrary mapping between a set of images and a set of two labels, thus the choice of a specific mapping is a reduction of entropy and thus constitutes information.)
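
The counting argument in the parenthetical above can be made concrete with a rough sketch. It assumes a uniform prior over all possible image-to-label mappings, which the post does not state; the function names are hypothetical and only for illustration. Under that assumption, N images and two labels give 2^N candidate mappings, so singling out one of them amounts to N bits.

```python
import math
from itertools import product


def count_labelings(num_images: int, num_labels: int = 2) -> int:
    """Number of distinct ways to assign one label to each image."""
    return num_labels ** num_images


def bits_to_select_one(num_images: int, num_labels: int = 2) -> float:
    """Entropy (in bits) removed by picking one mapping.

    Assumption: every mapping is equally likely beforehand (uniform prior).
    """
    return num_images * math.log2(num_labels)


def enumerate_labelings(images, labels):
    """List every possible mapping from images to labels (small inputs only)."""
    return [
        dict(zip(images, assignment))
        for assignment in product(labels, repeat=len(images))
    ]


if __name__ == "__main__":
    images = ["img_a", "img_b", "img_c"]  # hypothetical image identifiers
    labels = ["cat", "dog"]
    print(count_labelings(len(images)))              # number of candidate mappings
    print(bits_to_select_one(len(images)))           # bits needed to pick one
    print(len(enumerate_labelings(images, labels)))  # same count, by enumeration
```

With a non-uniform prior (for example, one that favors mappings consistent with visual similarity), the number of bits would be smaller, but it would still be information about the mapping rather than about the images or the labels alone.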

16.05.2025 18:41 — 👍 0 🔁 0 💬 1 📌 0

The set of weights that correctly classifies images as cats or dogs contains information that is not contained either in the set of training images or in the set of labels.

16.05.2025 18:38 — 👍 0 🔁 0 💬 2 📌 0

Learning can generate information about the *mapping* between the object and the category. It doesn't generate information about the object (by itself) or the category (by itself) but the mapping is not subject to the data processing inequality for the data or the category individually.

16.05.2025 18:36 — 👍 3 🔁 0 💬 1 📌 0

GPT is already pretty good at this. Maybe not perfect, but possibly as good as the median academic.

16.05.2025 06:46 — 👍 0 🔁 0 💬 1 📌 0

What do you mean by 'generate information'? What is an example of someone making this sort of claim?

15.05.2025 19:14 — 👍 3 🔁 0 💬 1 📌 0

Paying is best. Reviews should mostly be done by advanced grad students/postdocs who could use the cash.

13.05.2025 19:41 — 👍 1 🔁 0 💬 0 📌 0

Why wouldn't you want your papers to be LLM-readable?

07.05.2025 15:21 — 👍 0 🔁 0 💬 1 📌 0

If such a value to society exists, it should not be difficult for the PhD student to figure out how to articulate it themselves. A lack of independence of thought when it comes to this sort of thing would be much more concerning.

04.05.2025 15:14 — 👍 2 🔁 0 💬 0 📌 0

Oh you were on that? Small world.

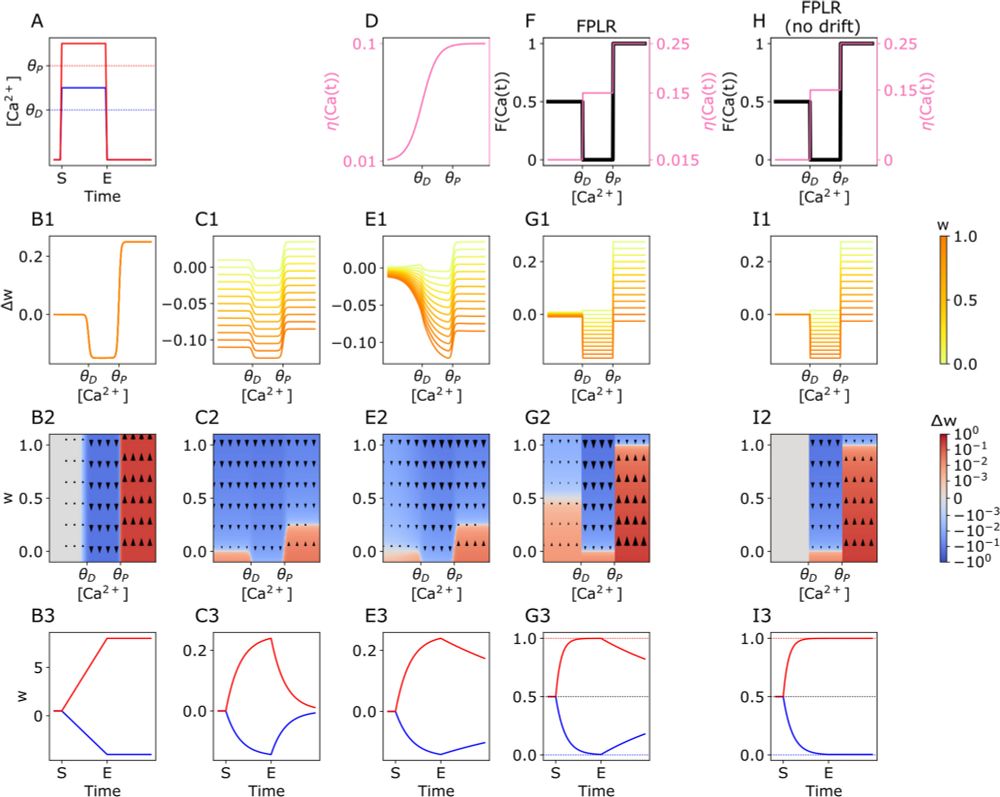

04.05.2025 07:44 — 👍 2 🔁 0 💬 0 📌 0

But I do think in our efforts to engage with the previous work on this, we made this paper overly long and technical. We present the bottom-line formulation of the plasticity rule in the Calcitron paper.

03.05.2025 20:20 — 👍 1 🔁 0 💬 0 📌 0

One of the reasons we wrote this paper is that calcium control is a great theory, but there were two semi-conflicting mathematical formulations of it, both of which had some inelegancies. I think we managed to clean them up and make it more 'theory'-like.

link.springer.com/article/10.1...

I know that e.g. Yuri Rodrigues has a paper that incorporates second messengers, but at that point it's not really parsimonious anymore.

03.05.2025 20:05 — 👍 1 🔁 0 💬 1 📌 0

The leading theory for plasticity is calcium control, which I've done some work on. I do think that I've contributed on that front with the Calcitron and the FPLR framework which came out in the past few months. Anything beyond calcium control gets into simulation territory.

03.05.2025 20:05 — 👍 2 🔁 0 💬 2 📌 0

The reason it's less active now is that people kind of feel that single neuron theory has been solved. Like the LIF/Cable theory models are still pretty much accepted. Any additional work would almost necessarily add complexity and that complexity is mostly not needed for 'theory' questions.

03.05.2025 16:22 — 👍 3 🔁 0 💬 1 📌 0

Hebbian learning? Associative attractor networks (e.g. Hopfield)? Calcium control hypothesis? Predictive coding? Efficient coding? There are textbooks about neuro theory.

03.05.2025 13:28 — 👍 5 🔁 0 💬 0 📌 0

I kind of like the size of the single neuron theory community, it's the right size. The network theory community is IMHO way too big, there are like thousands of papers about Hopfield networks, that's probably too much.

03.05.2025 13:23 — 👍 4 🔁 0 💬 1 📌 0

Not really true, there are a bunch of people doing work on e.g. single neuron biophysics, plasticity models, etc. Definitely not as big of a field but we exist.

03.05.2025 13:20 — 👍 5 🔁 0 💬 1 📌 0

And 'because it's unethical in this situation' is not a valid response; the ethics are irrelevant to rigor and the epistemic question of the scientific approach to establishing truth in medicine.

02.05.2025 10:49 — 👍 0 🔁 0 💬 0 📌 0

Exactly. There's no difference between a 'peer reviewer' appointed by a journal and you, a scientific peer, evaluating the publication yourself.

01.05.2025 15:29 — 👍 3 🔁 0 💬 1 📌 0

Ariel Krakowski interviews me on his podcast about brains, AI, plasticity, connectomics, consciousness, and everything in between.

open.spotify.com/episode/4m33...

If they're actually that great there's no problem, the university will want to keep them. But if their job would be at risk from a younger competitor if not for tenure, that's evidence that they're not actually that great.

27.04.2025 17:54 — 👍 0 🔁 0 💬 0 📌 0

Also a lot of professors build their research careers on outdated scientific trends. In science especially, you don't want something holding you to the past.

27.04.2025 07:30 — 👍 0 🔁 0 💬 0 📌 0