LSE announces new centre to study animal sentience

The Jeremy Coller Centre for Animal Sentience at LSE will develop new approaches to studying the feelings of other animals scientifically.

An emotional day - I can announce I'll be the first director of The Jeremy Coller Centre for Animal Sentience at the LSE, supported by a £4m grant from the Jeremy Coller Foundation. Our mission: to develop better policies, laws and ways of caring for animals. (1/2)

www.lse.ac.uk/News/Latest-...

25.03.2025 10:42 — 👍 707 🔁 132 💬 53 📌 12

Post-Doctoral Associate/Research Scientist, New York University

- PhilJobs:JFP

An international database of jobs for philosophers

Two or more 2-year Postdoc / Research Scientist positions at NYU to work on issues tied to artificial consciousness. Strong research track record with expertise in AI expected. No teaching. Salary around $62K. Details and application materials are here: philjobs.org/job/show/28878

16.03.2025 13:02 — 👍 8 🔁 2 💬 1 📌 0

Events

Spring 2025

The NYU Wild Animal Welfare Program is thrilled to be hosting an online panel with Heather Browning and Oscar Horta on March 19 at 12pm ET! This event will settle once and for all the question whether wild animal welfare is net positive or negative. RSVP below :)

sites.google.com/nyu.edu/wild...

04.03.2025 14:40 — 👍 22 🔁 6 💬 0 📌 2

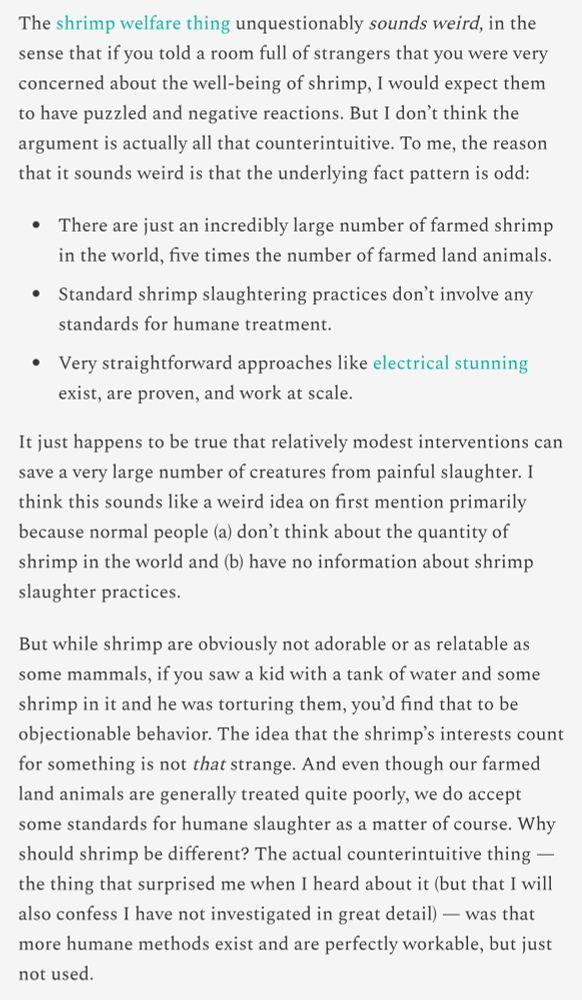

Should we care more about shrimp?

www.slowboring.com/p/mailbag-mo...

28.02.2025 12:48 — 👍 73 🔁 6 💬 10 📌 1

In philosophy at least, this is uncommon but the editor would be fully within their rights. The reviewers only make recommendations; the editors are supposed to use their independent judgement when appropriate.

20.02.2025 13:33 — 👍 1 🔁 0 💬 0 📌 0

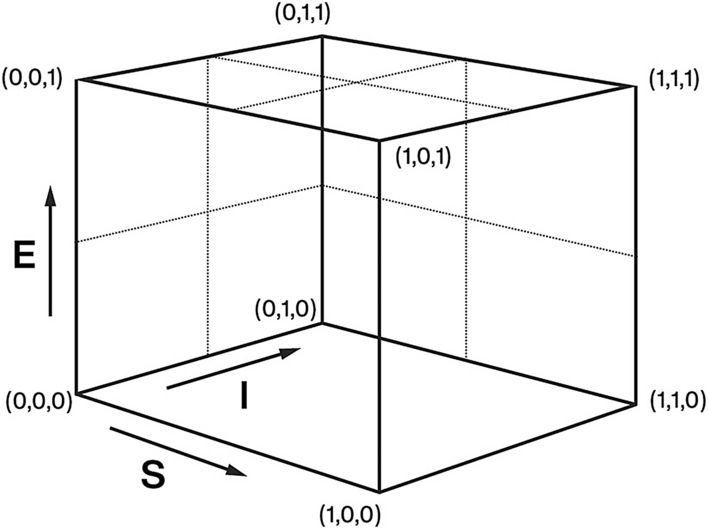

LLMs and World Models, Part 1

How do Large Language Models Make Sense of Their “Worlds”?

Do large language models develop "emergent" models of the world? My latest Substack posts explore this claim and more generally the nature of "world models":

LLMs and World Models, Part 1: aiguide.substack.com/p/llms-and-w...

LLMs and World Models, Part 2: aiguide.substack.com/p/llms-and-w...

13.02.2025 22:30 — 👍 212 🔁 59 💬 14 📌 10

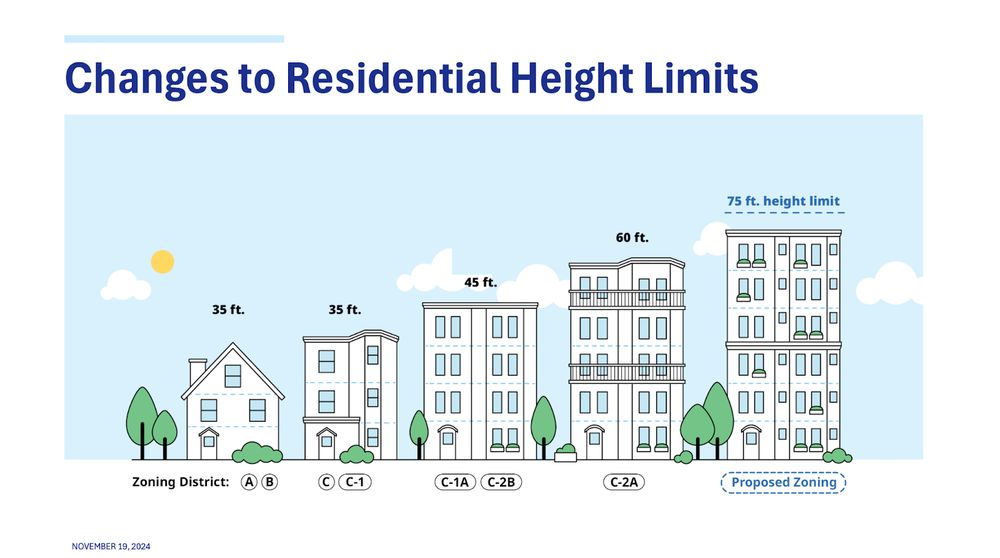

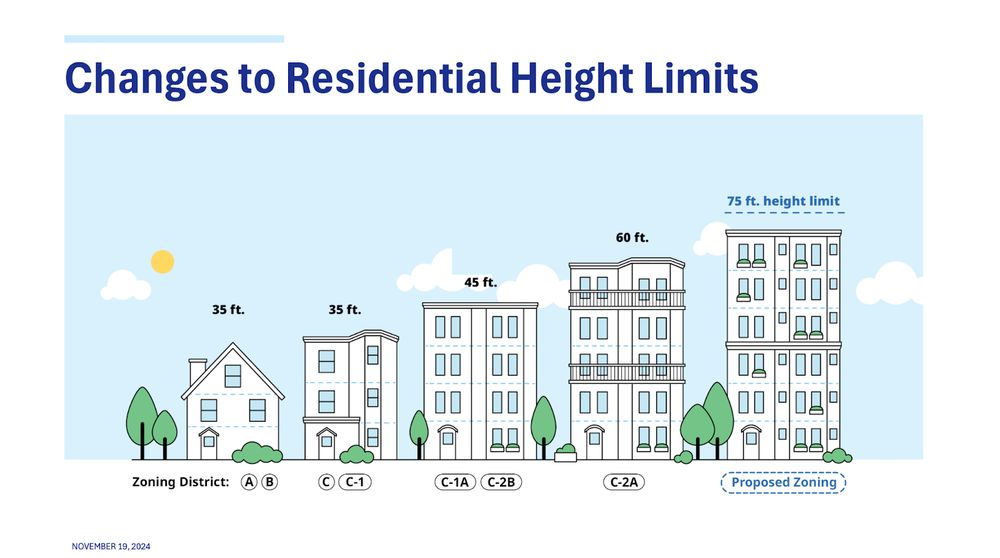

I can’t believe it - after years of advocacy, exclusionary zoning has ended in Cambridge.

We just passed the single most comprehensive rezoning in the US—legalizing multifamily housing up to 6 stories citywide in a Paris style

Here’s the details 🧵

11.02.2025 01:46 — 👍 2458 🔁 492 💬 66 📌 234

This is one reason why I think manuscripts should contain a robustness checks section. This would make it normal for researchers to conduct additional analyses, and for reviewers to request additional analyses, that ask: if key analyses are done this other reasonable way, are the results different?

07.02.2025 14:24 — 👍 13 🔁 4 💬 1 📌 0

Evaluating Artificial Consciousness 2025 - Sciencesconf.org

Call for abstracts: Workshop “Evaluating Artificial Consciousness”:

eac-2025.sciencesconf.org

10-11 June 2025 at RUB Bochum

#PhilMind #consciousness #consci #sentience #Ethics #CogSci

17.01.2025 14:18 — 👍 17 🔁 8 💬 1 📌 2

Cherish every day this thing isn't spreading from human to human.

27.12.2024 16:48 — 👍 10 🔁 2 💬 0 📌 0

Title card: Alignment Faking in Large Language Models by Greenblatt et al.

New work from my team at Anthropic in collaboration with Redwood Research. I think this is plausibly the most important AGI safety result of the year. Cross-posting the thread below:

18.12.2024 17:46 — 👍 126 🔁 29 💬 5 📌 11

New in print: "Let's Hope We're Not Living in a Simulation":

In Reality+, David Chalmers suggests that it wouldn't be too bad if we lived in a computer simulation. I argue on the contrary that if we live in a simulation, we ought to attach a significant conditional credence to 1/3

17.12.2024 19:21 — 👍 36 🔁 7 💬 1 📌 0

The Ramsey philosophy of biology lab at KU Leuven, Belgium.

https://www.theramseylab.org • #HPbio #philsci #philsky #evosky #paleosky #cogsci

Philosopher, Postdoc at Bielefeld University

Lecturer in Philosophy at the University of Reading | Most of my recent writing is on animal consciousness and welfare

Black lab dad. Writer and ambient musician. The Dynasty of the Divine coming soon!

www.adamrowan.info

Preorder my book: https://www.amazon.com/Dynasty-Divine-Adam-Rowan-ebook/dp/B0FS3G3WWH/ref

M.A. Philosophy at Queen’s University Belfast.

Phenomenology - Social Epistemology - Philosophy of Mind - Philosophy of Pornography

Events Coordinator for British Postgraduate Philosophy Association

My Links:

https://linktr.ee/amcdermottphilosophy

PhD student at Oxford University

Moral philosophy, global priorities research, animal ethics

A Mind Wanderer.

Philosophy of Cognitive Science

Meditation

Bochum University

Philosopher of Science, PhD, MD. Dean’s Professor and Chancellor’s fellow, Logic and Philosophy of Science, UC Irvine.

https://sites.socsci.uci.edu/~rossl/

Crime Lady. NYT Crime & Mystery columnist. Author, SCOUNDREL and THE REAL LOLITA. Editor, UNSPEAKABLE ACTS and EVIDENCE OF THINGS SEEN.

Next nonfiction book (November 2025): WITHOUT CONSENT

http://www.linktr.ee/sarahweinman

The Association for the Scientific Study of Consciousness. Our next conference is: https://assc2025.gr/. Maintained by @mariandrnh.bsky.social

PhD candidate | philosophy of AI 🤖 🌱 | Aix-Marseille Université

https://eloise-boisseau.fr/en

PhD in philosophy. Philosopher in the field of philosophy of consciousness and philosophy of AI.

https://www.researchgate.net/profile/Katarina-Marcincinova

Philosopher of mind/cog sci studying animal minds, memory, consciousness, temporal representation, & implications for animal ethics/policy.

Asst. Prof. @ Ashoka University, India. Formerly postdoc @ London School of Economics & Johns Hopkins University

Member of the Bundestag for Karlsruhe, directly elected

🥙 healthy food for everyone

🐾 protect animals

💚 save the climate

🤝 stick together

🌱💪 plant powered

mainly Bochum/Ruhr area | Bündnis 90/Die Grünen, Chair of the Committee for Labour, Social Affairs & Health | otherwise a lot on migration, refugees, sea rescue and Italy

Philosophy postdoc @ CPAI, University of Copenhagen.

Researching artificial intelligence, mental representation, representational formats, concepts.

PhD 2021 @ Monash University

🏠 https://iwanrwilliams.wordpress.com

Professional science communicator -- working on evidence-based ways to change our food systems for good. Barcelona based. Bird dad.

https://bjornjohannolafsson.substack.com/

Strategy Fellow, Global Health & Wellbeing @open_phil. Views my own

AI Program Officer at Longview Philanthropy. Own views.

🔸 giving 10% of my lifetime income to effective charities via Giving What We Can