Less than 4 weeks to go. #btconf Düsseldorf. About time to get your ticket! beyondtellerrand.com/events/dusse...

09.04.2025 06:36 — 👍 5 🔁 2 💬 0 📌 0

@quasimondo.bsky.social

Artist, Neurographer, AI Prompteur, Purveyor of Systems, Data Dumpster Diver, Information Recycler

“To understand how we’ve achieved AGI, why it's only happened recently, after decades of failed attempts, & what this tells us about our own minds, we must re-examine our most fundamental assumptions — not just about AI, but the nature of computing.”

— @blaiseaguera.bsky.social & James Manyika

#ai

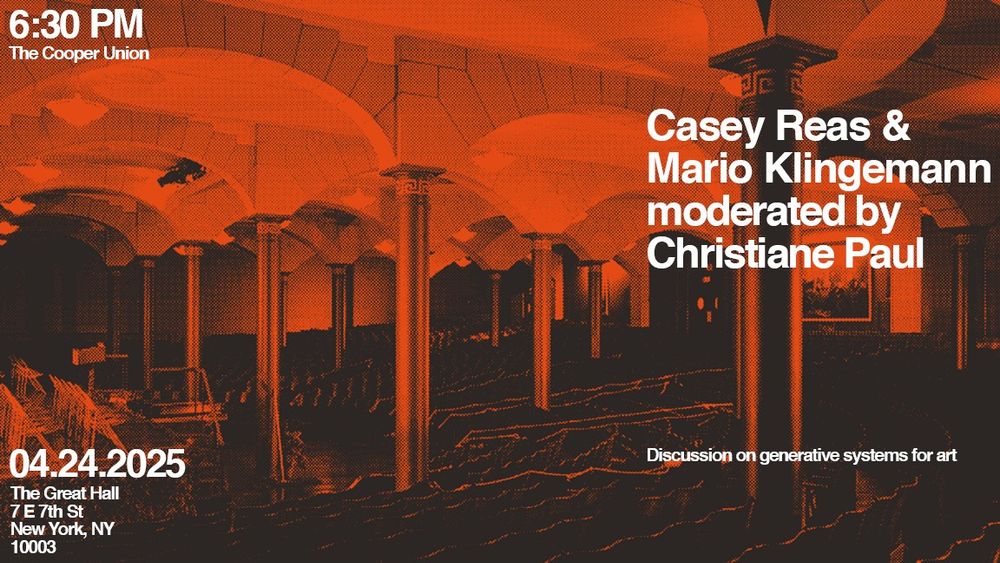

On April 24th I have the great honor to share the stage with Casey Reas and Christiane Paul for a conversation about generative systems at the Great Hall at The Cooper Union in New York City.

www.eventbrite.com/e/conversati...

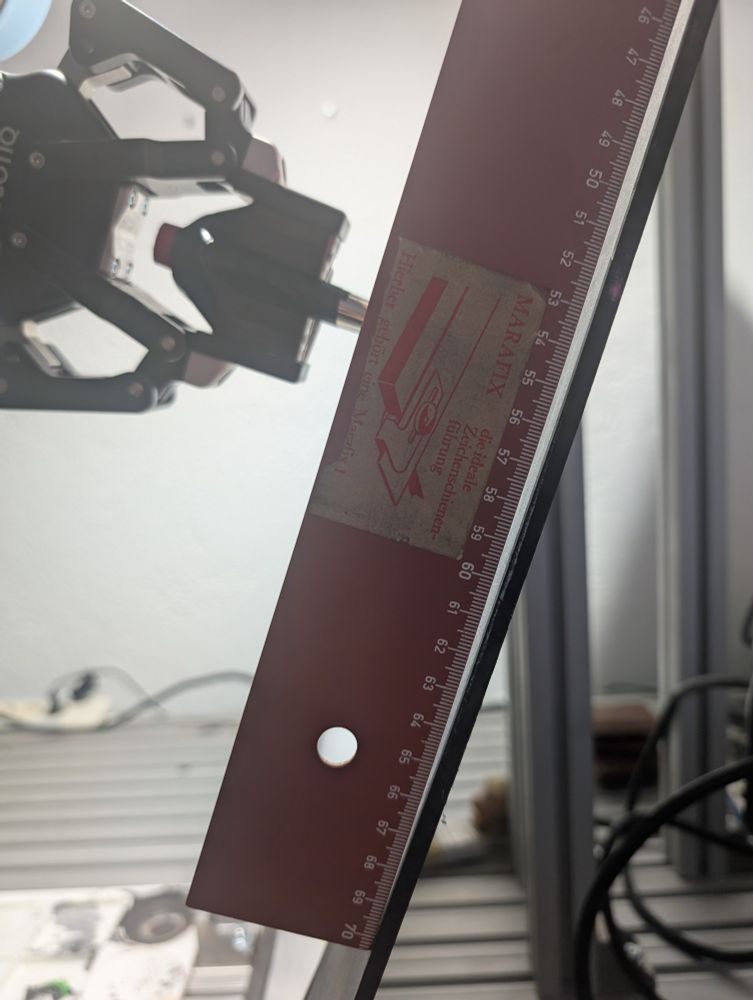

Oops, I should have checked that a bit earlier: it turns out my drawing board sags 5 mm in the center. Not great when a millimeter more or less already makes the stroke width vary considerably.

04.04.2025 16:16 — 👍 7 🔁 0 💬 1 📌 0

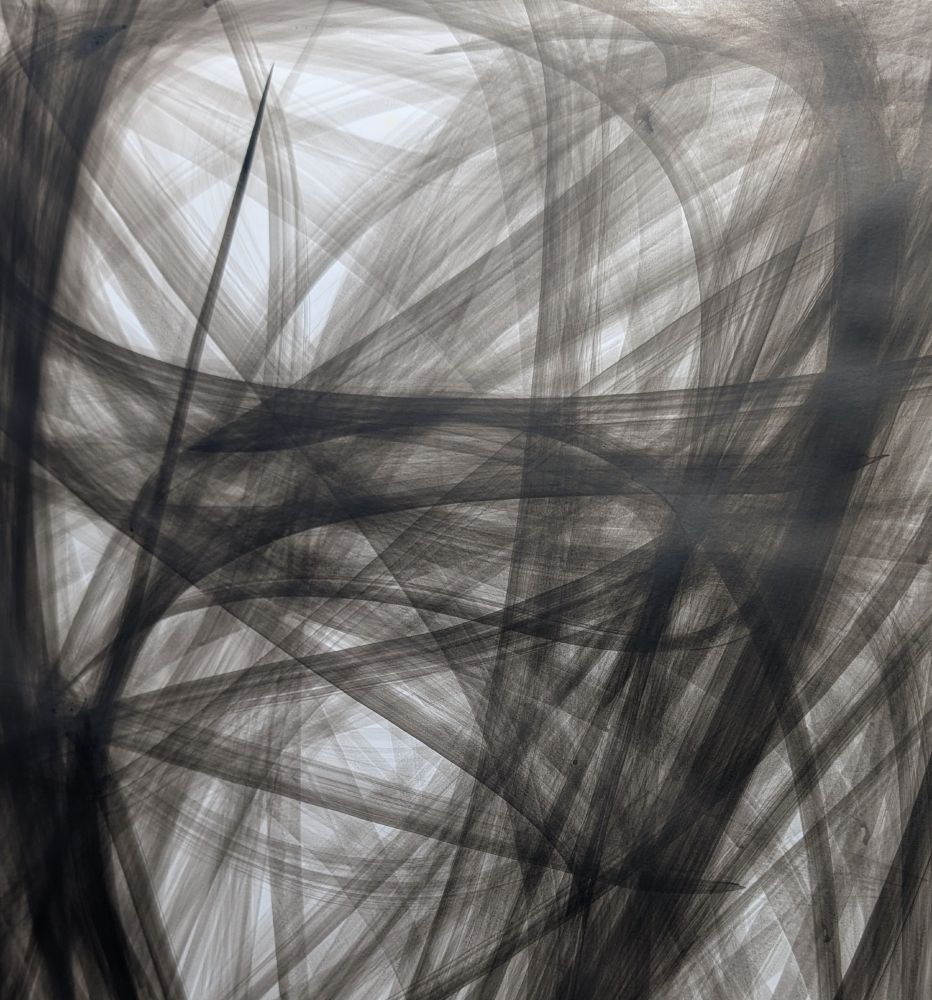

I'm starting to trust my robot a bit more not to run into a wall or try to pierce my canvas, so I dare to allow it some "broad strokes".

04.04.2025 06:51 — 👍 27 🔁 2 💬 0 📌 0

I hope you explain to them that training a LoRA is not the same as training a GAN or a diffusion model from scratch. In the former case, you just nudge a model that has already been trained on millions of "other people's" images into the desired areas of its latent space.

23.02.2025 17:35 — 👍 1 🔁 0 💬 1 📌 0
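To make the LoRA distinction concrete: LoRA (low-rank adaptation) leaves the pre-trained weight matrix W frozen and trains only two small matrices B and A whose product is added on top, so training moves the existing model rather than replacing what it has already learned. A minimal pure-Python sketch, assuming the formulation W + (alpha / r) · B · A from the original LoRA paper with B initialized to zero; the helper names are hypothetical and the matrix sizes are only illustrative:

```python
# Minimal LoRA sketch with plain lists as matrices. The frozen weight W
# (d_out x d_in) is adapted by adding (alpha / r) * B @ A, where
# B is d_out x r and A is r x d_in; only A and B would be trained.

def matmul(X, Y):
    """Matrix product of X and Y, both given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def lora_weight(W, A, B, alpha, r):
    """Effective weight W + (alpha / r) * B @ A. W itself is not modified."""
    delta = matmul(B, A)
    s = alpha / r
    return [[w + s * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, delta)]

def param_counts(d_out, d_in, r):
    """Trainable parameters: full fine-tuning of W vs. a rank-r update."""
    return d_out * d_in, r * (d_in + d_out)

# With B initialized to zero, the adapted model starts out identical to
# the pre-trained one; training only nudges it away from there.
W = [[1.0, 2.0], [3.0, 4.0]]
A = [[0.5, -1.0]]       # r = 1, d_in = 2
B = [[0.0], [0.0]]      # d_out = 2, r = 1, zero-initialized
assert lora_weight(W, A, B, alpha=1.0, r=1) == W

print(param_counts(4096, 4096, 8))  # (16777216, 65536)
```

For a 4096 × 4096 layer, a rank-8 update trains 65,536 values instead of about 16.8 million, which is why a LoRA can only steer a model within what its original training data already gave it.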

Maybe my installation "Circuit Training" does meet the criteria: it creates its own dataset in the exhibition context by inviting the audience into its automated photo booth to become the training data:

www.youtube.com/watch?v=lXan...

Ah, re-reading your post: when you say "from scratch", does that include taking the actual photos, too, and not just finding and categorizing them? In that case, of course, I am off-topic here.

21.02.2025 13:39 — 👍 1 🔁 0 💬 2 📌 0

There was no real alternative back in the early days if you weren't into creating only dogs, flowers, hotel rooms or tourist attractions (which, to my knowledge, were the only subjects for which pre-trained models were available back then).

21.02.2025 13:34 — 👍 1 🔁 0 💬 1 📌 0

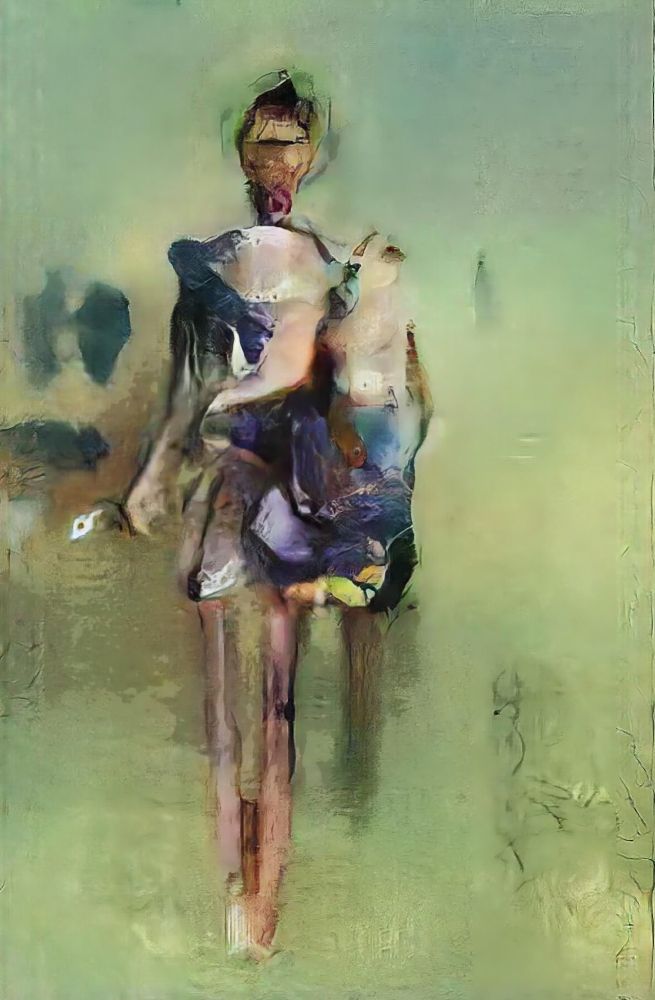

All my early AI work used models I had trained on datasets I had collected and curated myself.

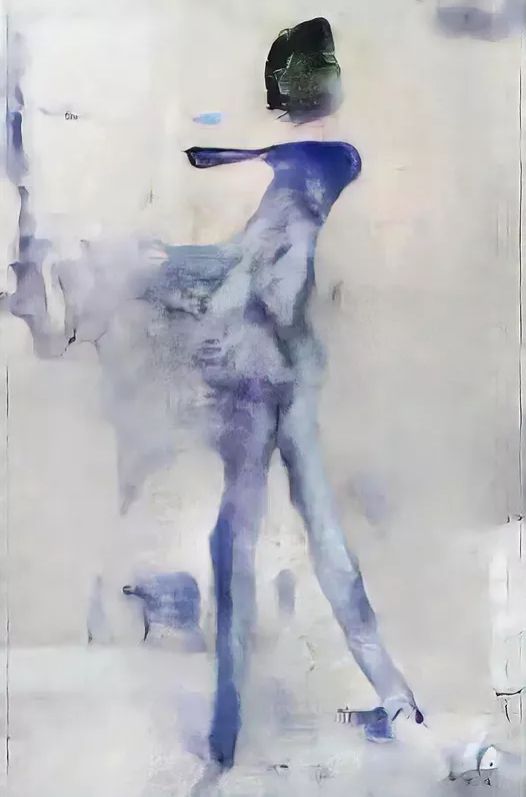

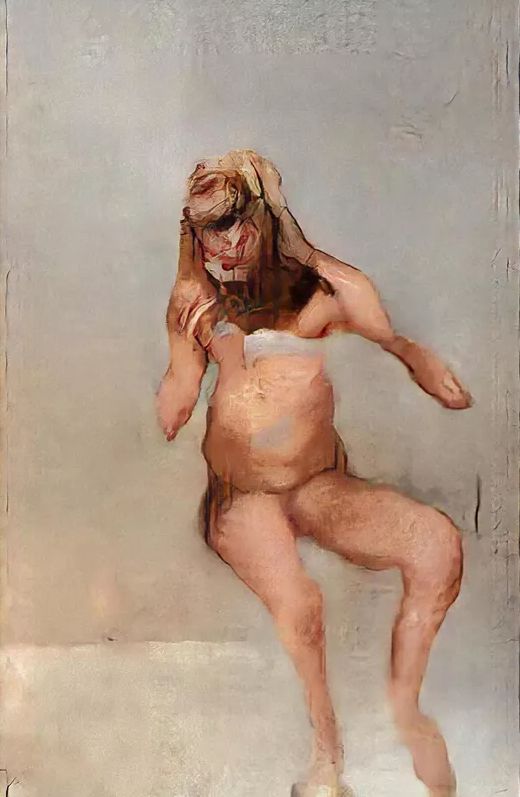

These, for example, are from my 2017 "Imposture" series, which was trained on images and poses extracted from pornographic images.

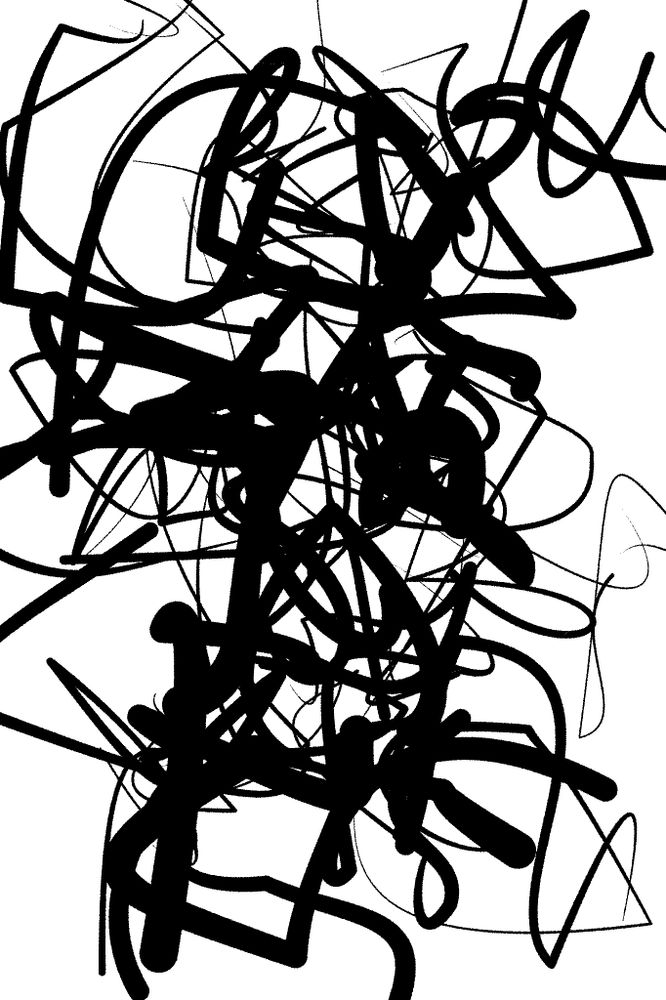

Of course the loss explodes if I measure similarity instead of distance, duh. I still don't know what it tries to optimize for here, but I kind of like the Moomin-graffiti vibes.

07.02.2025 08:32 — 👍 25 🔁 1 💬 1 📌 0
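A toy illustration of why the loss explodes: gradient descent minimizes whatever it is given, so a distance loss pulls the parameters toward the target, while a similarity used as a loss pushes them away, and an unbounded similarity such as a dot product has no minimum at all. This sketch assumes a plain dot product and a squared Euclidean distance on a 2D vector; the actual loss used in these stroke experiments is not stated in the post:

```python
# Compare two losses under plain gradient descent on a parameter vector x
# with target t: squared distance (bounded below by 0) versus dot-product
# similarity (unbounded below when minimized).

def dist_loss_grad(x, t):
    """Squared Euclidean distance ||x - t||^2 and its gradient 2 (x - t)."""
    diff = [xi - ti for xi, ti in zip(x, t)]
    return sum(d * d for d in diff), [2.0 * d for d in diff]

def sim_loss_grad(x, t):
    """Dot-product similarity x . t and its gradient, which is simply t."""
    return sum(xi * ti for xi, ti in zip(x, t)), list(t)

def descend(loss_grad, x, t, lr=0.1, steps=100):
    """Run plain gradient descent; return the final x and its loss."""
    for _ in range(steps):
        _, g = loss_grad(x, t)
        x = [xi - lr * gi for xi, gi in zip(x, g)]
    return x, loss_grad(x, t)[0]

target = [1.0, 2.0]
_, d_loss = descend(dist_loss_grad, [0.0, 0.0], target)  # shrinks toward 0
_, s_loss = descend(sim_loss_grad, [0.0, 0.0], target)   # keeps falling, about -50 here
_, hot_loss = descend(dist_loss_grad, [0.0, 0.0], target, lr=1.5, steps=50)  # error doubles each step
```

For the distance loss, each step scales the error by (1 - 2·lr), so any learning rate above 1.0 makes the iterates diverge as well: a toy version of the learning-rate issue in the following post.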

Maybe I should turn down the learning rate somewhat. But it does explode in quite an interesting way.

06.02.2025 20:16 — 👍 21 🔁 1 💬 0 📌 0

It's easier for the algorithm to work with shorter segments.

05.02.2025 18:04 — 👍 20 🔁 2 💬 0 📌 0

Different starting conditions and target.

05.02.2025 13:31 — 👍 19 🔁 0 💬 1 📌 1

I have started to experiment again with diffvg and gradient descent on expressive vector strokes.
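diffvg makes vector rendering differentiable, so stroke parameters can be optimized by gradient descent against an image loss. The sketch below does not use diffvg; as a simplified stand-in, it compares points sampled along a single cubic Bézier stroke directly with target points and updates the four control points by gradient descent. The target curve, the straight-line starting stroke, the learning rate and the step count are all illustrative assumptions:

```python
# Fit one cubic Bezier stroke to target points by gradient descent on its
# four control points. Simplified stand-in for diffvg: the loss compares
# sampled curve points with target points instead of rendered images.

def bernstein(t):
    """Cubic Bernstein weights for control points P0..P3 at parameter t."""
    s = 1.0 - t
    return [s ** 3, 3 * s * s * t, 3 * s * t * t, t ** 3]

def point_on_curve(P, t):
    """Evaluate the 2D cubic Bezier with control points P at parameter t."""
    w = bernstein(t)
    return [sum(wi * p[k] for wi, p in zip(w, P)) for k in range(2)]

def loss_and_grad(P, ts, targets):
    """Mean squared distance between curve samples and targets, and its
    gradient with respect to every control-point coordinate."""
    n = len(ts)
    loss = 0.0
    grad = [[0.0, 0.0] for _ in P]
    for t, q in zip(ts, targets):
        w = bernstein(t)
        b = [sum(wi * p[k] for wi, p in zip(w, P)) for k in range(2)]
        r = [b[0] - q[0], b[1] - q[1]]  # residual between curve and target
        loss += (r[0] ** 2 + r[1] ** 2) / n
        for i in range(4):
            for k in range(2):
                grad[i][k] += 2.0 * w[i] * r[k] / n
    return loss, grad

def fit_stroke(P, ts, targets, lr=1.0, steps=2000):
    """Plain gradient descent on the control points; returns (points, loss)."""
    P = [list(p) for p in P]
    for _ in range(steps):
        _, g = loss_and_grad(P, ts, targets)
        P = [[p[k] - lr * gp[k] for k in range(2)] for p, gp in zip(P, g)]
    return P, loss_and_grad(P, ts, targets)[0]

# Illustrative data: targets sampled from a known curve, optimization
# started from a straight stroke between the same endpoints.
true_P = [[0.0, 0.0], [1.0, 2.0], [3.0, -1.0], [4.0, 1.0]]
ts = [j / 19 for j in range(20)]
targets = [point_on_curve(true_P, t) for t in ts]
start = [[0.0, 0.0], [4 / 3, 1 / 3], [8 / 3, 2 / 3], [4.0, 1.0]]
fitted, final_loss = fit_stroke(start, ts, targets)
```

Because these targets lie exactly on one cubic curve, the fit can reach near-zero loss. A target with more turns than a single cubic segment can express cannot, which is one possible reading of the remark above that shorter segments are easier for the algorithm.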

05.02.2025 11:20 — 👍 101 🔁 8 💬 3 📌 0

Oh, I fear it will probably hurt your soul even more to hear that Botto is now even creating #generativeart sketches with p5.js. And while a lot of the results are of rather beginner-level quality, some of them are quite interesting.

28.01.2025 06:57 — 👍 1 🔁 0 💬 1 📌 0

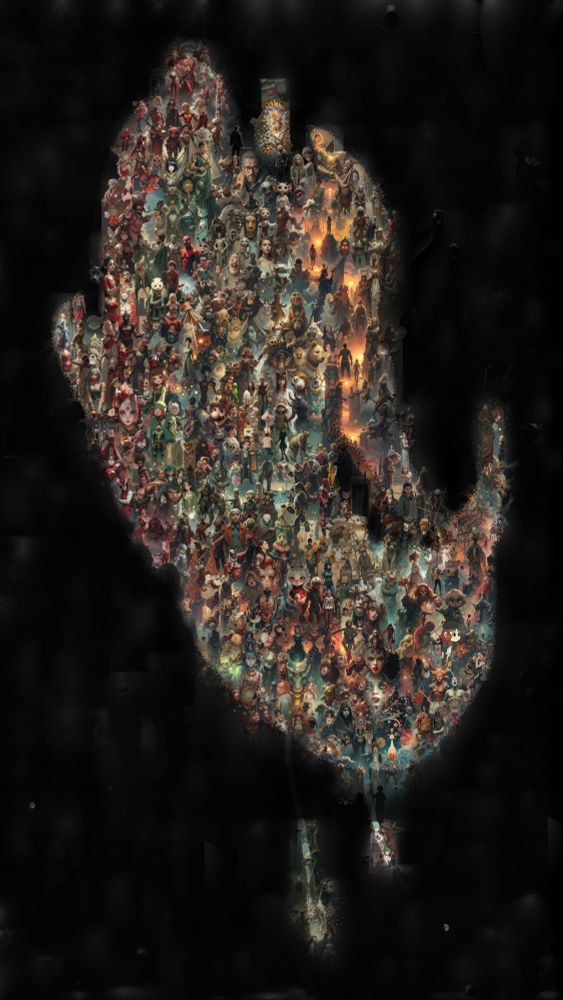

I'll be at @unireps.bsky.social this Saturday presenting a new experimental pipeline to visually explore structured neural network representations. The core idea is to take thousands of prompts that activate a concept, and then cluster and draw them using MultiDiffusion. 🧵👇

11.12.2024 23:18 — 👍 31 🔁 8 💬 2 📌 0
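The post does not say how the prompts are clustered. As a hedged sketch of that one step, the code below assumes each prompt has already been turned into an embedding vector and groups the vectors with plain k-means (with a deterministic farthest-point initialization); the embedding model, the prompts, the toy 2D vectors and the cluster count are all hypothetical, and the MultiDiffusion drawing stage is not shown:

```python
# Cluster prompt embeddings with plain k-means. Embeddings are assumed to be
# precomputed vectors (one per prompt); the 2D toy vectors are hypothetical.

def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def init_centers(points, k):
    """Farthest-point initialization: start from points[0], then repeatedly
    add the point farthest from every center chosen so far."""
    centers = [list(points[0])]
    while len(centers) < k:
        far = max(points, key=lambda p: min(sq_dist(p, c) for c in centers))
        centers.append(list(far))
    return centers

def kmeans(points, k, iters=50):
    """Return (labels, centers) after alternating assignment and update steps."""
    centers = init_centers(points, k)
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment: each point joins its nearest center.
        labels = [min(range(k), key=lambda c: sq_dist(p, centers[c])) for p in points]
        # Update: each center moves to the mean of its members.
        new_centers = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centers.append([sum(dim) / len(members) for dim in zip(*members)])
            else:
                new_centers.append(centers[c])  # keep an empty cluster's center
        if new_centers == centers:
            break
        centers = new_centers
    return labels, centers

# Hypothetical prompts with made-up 2D embeddings.
prompts = ["a red apple", "a cherry on a plate", "strawberries in a bowl",
           "a fire engine", "a stop sign", "a red postbox"]
embeddings = [[0.0, 0.0], [0.5, 0.2], [0.1, 0.6], [10.0, 10.0], [10.4, 9.8], [9.7, 10.3]]
labels, _ = kmeans(embeddings, k=2)
clusters = {}
for prompt, lab in zip(prompts, labels):
    clusters.setdefault(lab, []).append(prompt)
```

Each resulting group of prompts would then be one candidate region to draw; real prompt embeddings have hundreds of dimensions, but the code does not depend on the vector length.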

And for a glimpse into the "state of the art" at the time, this is a good summary of what happened in 2016 and who was around:

www.alt-ai.net#watch

These years might not line up with when the papers were published, which was typically earlier; for AI art, the important dates were when the models and code were actually shared.

28.11.2024 08:45 — 👍 5 🔁 0 💬 1 📌 0

From my personal recollection, I would say the history went like this:

2014 Image Classifiers (AlexNet, GoogLeNet)

2015 DeepDream / RNN (text generation)

2016 PPGN / StyleTransfer

2017 VAE / pix2pix

2018 pix2pixHD / CycleGAN

2019 StyleGAN / BigGAN

2020 StyleGAN2 / CLIP / VQGAN

2021 StableDiffusion

One very important moment in the early days of GANs and their influence on what we now know as AI art was @phillipisola.bsky.social releasing pix2pix, because it made it possible to create larger and more "artistic" outputs than @ian-goodfellow.bsky.social's original GAN, from which it was derived.

28.11.2024 08:21 — 👍 3 🔁 0 💬 1 📌 0

The term "AI art" did not exist yet when people like Anna, Memo, Helena, Mike, Kyle, Gene, me and maybe 5 to 10 others worldwide started experimenting artistically with them. So yes, I guess we shaped it.

Oh, and diffusion models are not "early days"; that's like the industrial age vs. the Middle Ages.

New year's resolution: remember to share more stuff in here.

18.11.2024 21:47 — 👍 76 🔁 1 💬 4 📌 1

I mean an LLM writing code, for example in p5.js, that implements a system generating visuals or animations from various parameters and pseudo-random number generators, which is what I guess most people would understand as typical "generative art". And yes, maybe a human prompted the LLM to do that.

18.11.2024 21:44 — 👍 2 🔁 0 💬 1 📌 0

This discussion is rather fruitless. AI art is generative by nature, even when it is just prompted. I wonder: what is your verdict on AI-coded "generative" (aka code-based/algorithmic) art? Does the code have to be handcrafted in order to be allowed to wear that label?

17.11.2024 14:13 — 👍 3 🔁 0 💬 1 📌 0

Sounds like "outgroup by" :)

15.10.2023 11:54 — 👍 0 🔁 0 💬 1 📌 0

What does it do?

13.10.2023 19:25 — 👍 0 🔁 0 💬 1 📌 0

Ah, too bad, so I guess it's either a lot of splats or muffled sound.

13.10.2023 19:24 — 👍 0 🔁 0 💬 1 📌 0

It sounds somewhat like a Fourier transform, but different.

13.10.2023 08:55 — 👍 1 🔁 0 💬 1 📌 0

Not sure, though, whether spherical harmonics are too smooth for that or whether it's just a question of the right coefficient count.

13.10.2023 08:55 — 👍 1 🔁 0 💬 1 📌 0
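Spherical harmonics play roughly the role on the sphere that a Fourier series plays on a circle, and the coefficient-count question is about how many bands are kept: real spherical harmonics up to degree L have (L + 1)^2 coefficients. As a 1D analogue (a Fourier series, not spherical harmonics themselves), this sketch truncates the series of a square wave and measures how the RMS error around its sharp edges shrinks as more terms are kept; the test signal and sample count are illustrative assumptions:

```python
import math

def square_wave(x):
    """+1 where sin(x) >= 0, -1 elsewhere: a signal with sharp edges."""
    return 1.0 if math.sin(x) >= 0 else -1.0

def truncated_series(x, n_terms):
    """First n_terms odd harmonics of the square wave's Fourier series:
    (4 / pi) * sum over m of sin((2m + 1) x) / (2m + 1)."""
    return 4 / math.pi * sum(math.sin((2 * m + 1) * x) / (2 * m + 1) for m in range(n_terms))

def rms_error(n_terms, samples=2000):
    """RMS difference between the square wave and its truncated series,
    sampled at midpoints over one period."""
    xs = [2 * math.pi * (i + 0.5) / samples for i in range(samples)]
    err = sum((square_wave(x) - truncated_series(x, n_terms)) ** 2 for x in xs)
    return math.sqrt(err / samples)

def sh_coefficient_count(max_degree):
    """Real spherical harmonics up to degree L: sum of (2l + 1) = (L + 1)^2."""
    return (max_degree + 1) ** 2

for n in (1, 5, 50):
    print(n, round(rms_error(n), 3))
print(sh_coefficient_count(3))  # 16
```

The error falls as terms are added, but slowly for a signal with hard edges, so "too smooth" and "not enough coefficients" describe the same limitation: a band-limited basis needs many terms to represent sharp detail.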