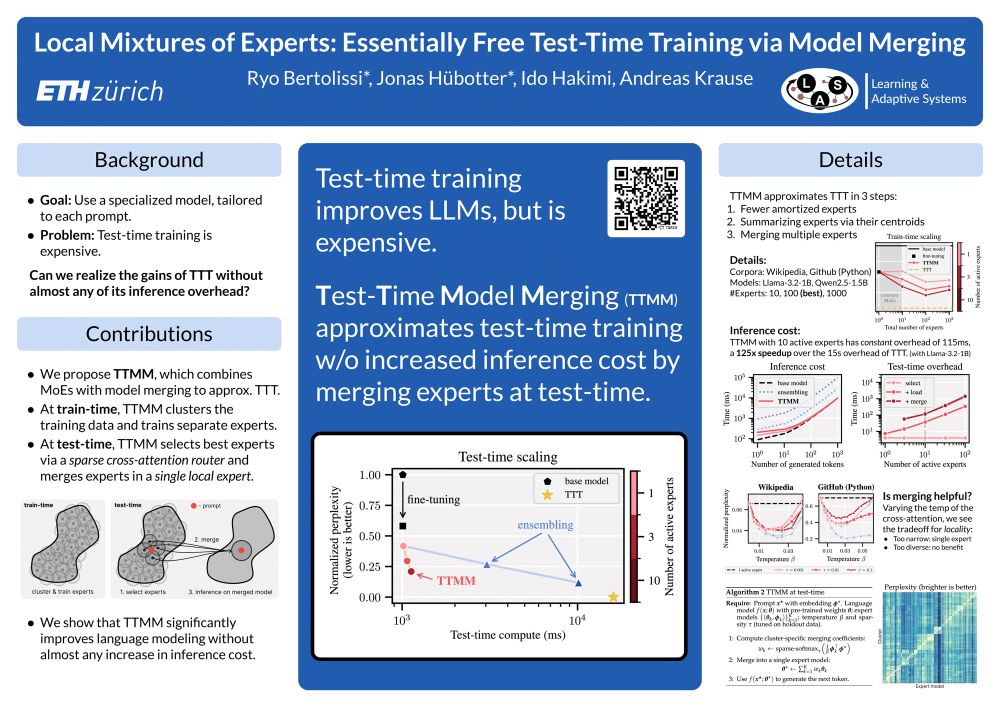

On my way to Montreal for COLM. Let me know if you’re also coming! I’d be very happy to catch up!

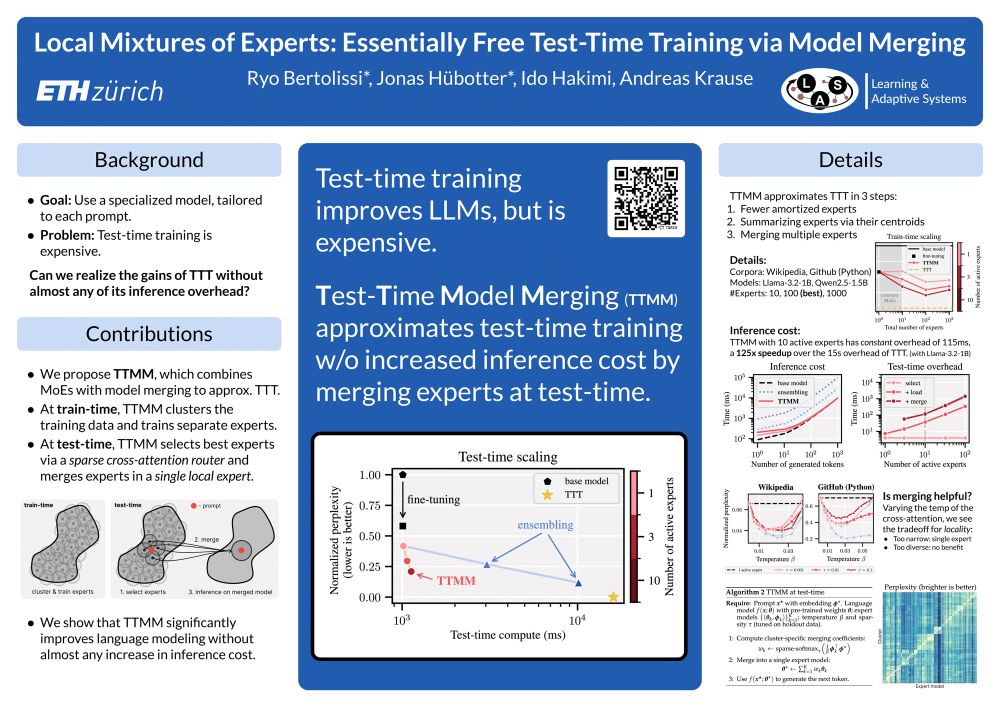

We present our poster at #1013 in the Wednesday morning session.

Joint work with the amazing Ryo Bertolissi, @idoh.bsky.social, @arkrause.bsky.social.

06.10.2025 10:52 — 👍 10 🔁 1 💬 0 📌 0

Paper: arxiv.org/pdf/2410.05026

Joint work with the amazing @marbaga.bsky.social, @gmartius.bsky.social, @arkrause.bsky.social

14.07.2025 19:38 — 👍 0 🔁 0 💬 0 📌 0

We propose an algorithm that does this by actively maximizing expected information gain of the demonstrations, with a couple of tricks to estimate this quantity and mitigate forgetting.

Interestingly, this solution is viable even without any information about pre-training!

14.07.2025 19:35 — 👍 0 🔁 0 💬 1 📌 0

In our ICML paper, we study fine-tuning a generalist policy for multiple tasks. We ask: given a pre-trained policy, how can we maximize multi-task performance with a minimal number of additional demonstrations?

📌 We are presenting a possible solution on Wed, 11am to 1:30pm at B2-B3 W-609!

14.07.2025 19:35 — 👍 10 🔁 4 💬 1 📌 0

Our method significantly improves language modeling performance (measured by perplexity) for large language models and achieves a new state-of-the-art on the Pile benchmark.

If you're interested in test-time training or active learning, come chat with me at our poster session!

21.04.2025 14:40 — 👍 2 🔁 0 💬 0 📌 0

We introduce SIFT, a novel data selection algorithm for test-time training of language models. Unlike traditional nearest neighbor methods, SIFT uses uncertainty estimates to select maximally informative data, balancing relevance & diversity.

21.04.2025 14:40 — 👍 2 🔁 0 💬 1 📌 0
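A minimal sketch of the idea of uncertainty-based selection, not the paper's SIFT implementation. It assumes a linear kernel over toy 2-D embeddings and Gaussian observation noise (`noise`), and greedily picks the candidate that most reduces the posterior variance at the query. The function names and the toy data are hypothetical and only illustrate how this differs from nearest-neighbor retrieval when the data contains duplicates.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def nearest_neighbors(query, candidates, k):
    """Baseline: the k candidates with the largest inner product with the query."""
    order = sorted(range(len(candidates)), key=lambda i: -dot(query, candidates[i]))
    return order[:k]


def greedy_uncertainty_selection(query, candidates, k, noise=0.1):
    """Greedily pick k candidates that most reduce posterior variance at the query.

    Assumption: a Gaussian-process-style surrogate with a linear kernel over
    embeddings. A candidate may be picked again if repeating it is still the
    most informative choice.
    """
    points = [query] + list(candidates)
    n = len(points)
    # Prior kernel matrix; index 0 is the query.
    K = [[dot(points[i], points[j]) for j in range(n)] for i in range(n)]
    selected = []
    for _ in range(k):
        # Variance reduction at the query from one noisy observation of point i.
        best = max(range(1, n), key=lambda i: K[0][i] ** 2 / (K[i][i] + noise))
        selected.append(best - 1)
        # Condition the kernel on the chosen point (rank-one update).
        col = [K[r][best] for r in range(n)]
        denom = K[best][best] + noise
        K = [[K[r][c] - col[r] * col[c] / denom for c in range(n)] for r in range(n)]
    return selected


query = (1.0, 1.0)
# Candidates 0 and 1 are exact duplicates; candidate 2 covers the other direction.
candidates = [(1.0, 0.0), (1.0, 0.0), (0.0, 0.9)]
print(nearest_neighbors(query, candidates, 2))             # [0, 1]
print(greedy_uncertainty_selection(query, candidates, 2))  # [0, 2]
```

In this toy setup, nearest-neighbor retrieval returns the duplicate pair, while the greedy rule skips the redundant copy because conditioning on the first pick already removed most of the uncertainty in that direction.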

Paper: arxiv.org/pdf/2410.08020

21.04.2025 14:38 — 👍 0 🔁 0 💬 1 📌 0

✨ Very excited to share that our work "Efficiently Learning at Test-Time: Active Fine-Tuning of LLMs" will be presented at ICLR! ✨

🗓️ Wednesday, April 23rd, 7:00–9:30 p.m. PDT

📍 Hall 3 + Hall 2B #257

Joint work with my fantastic collaborators Sascha Bongni, @idoh.bsky.social, @arkrause.bsky.social

21.04.2025 14:37 — 👍 15 🔁 1 💬 1 📌 0

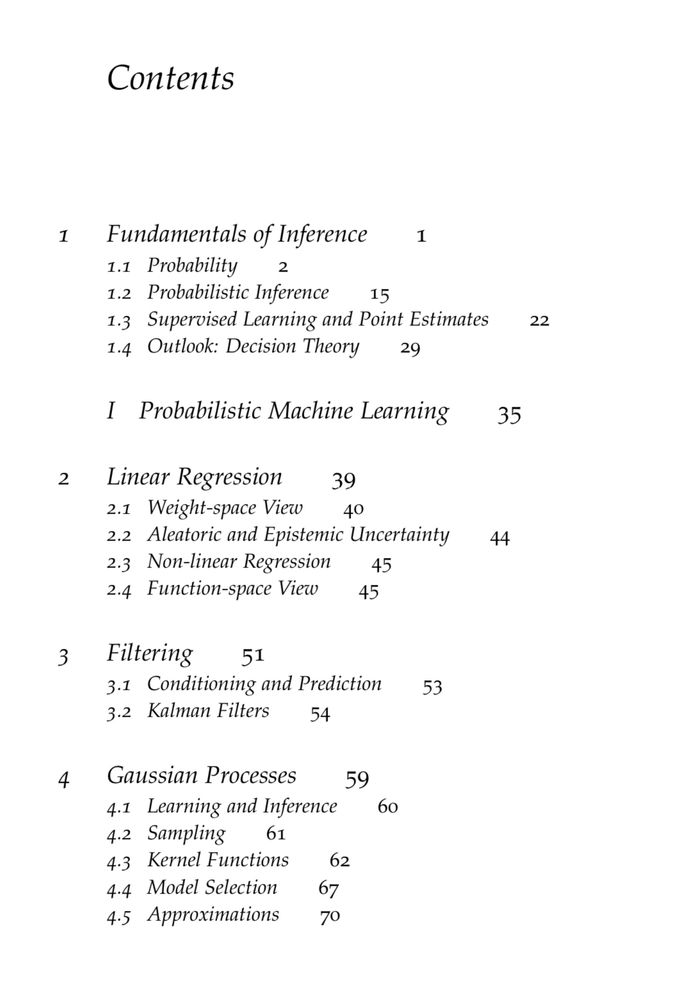

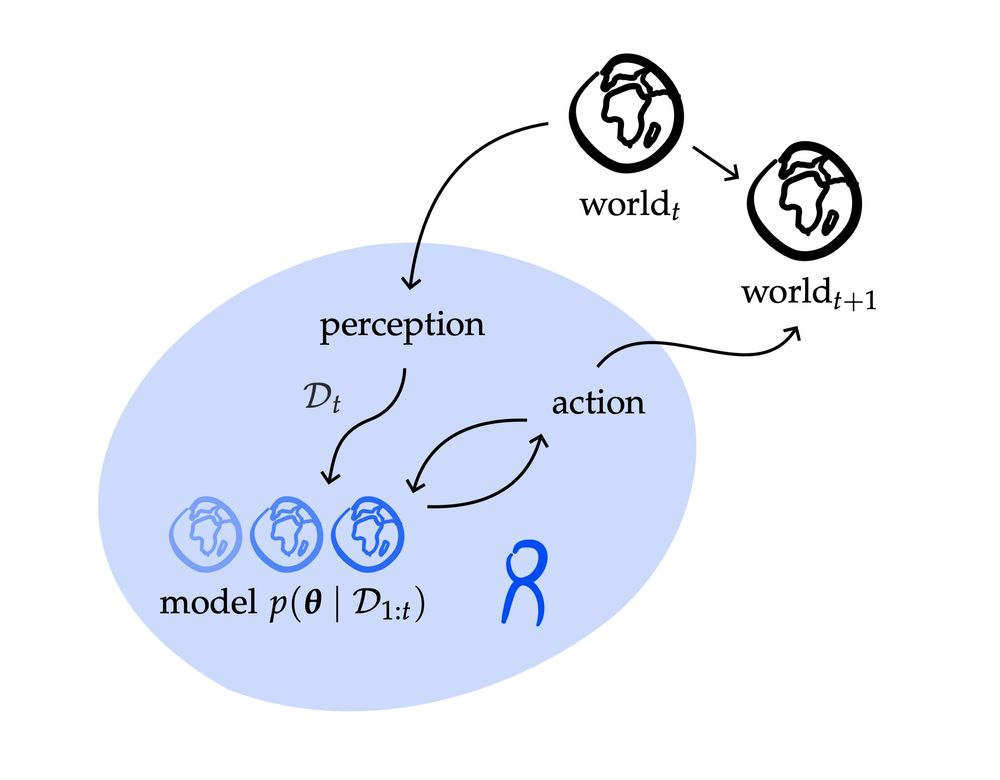

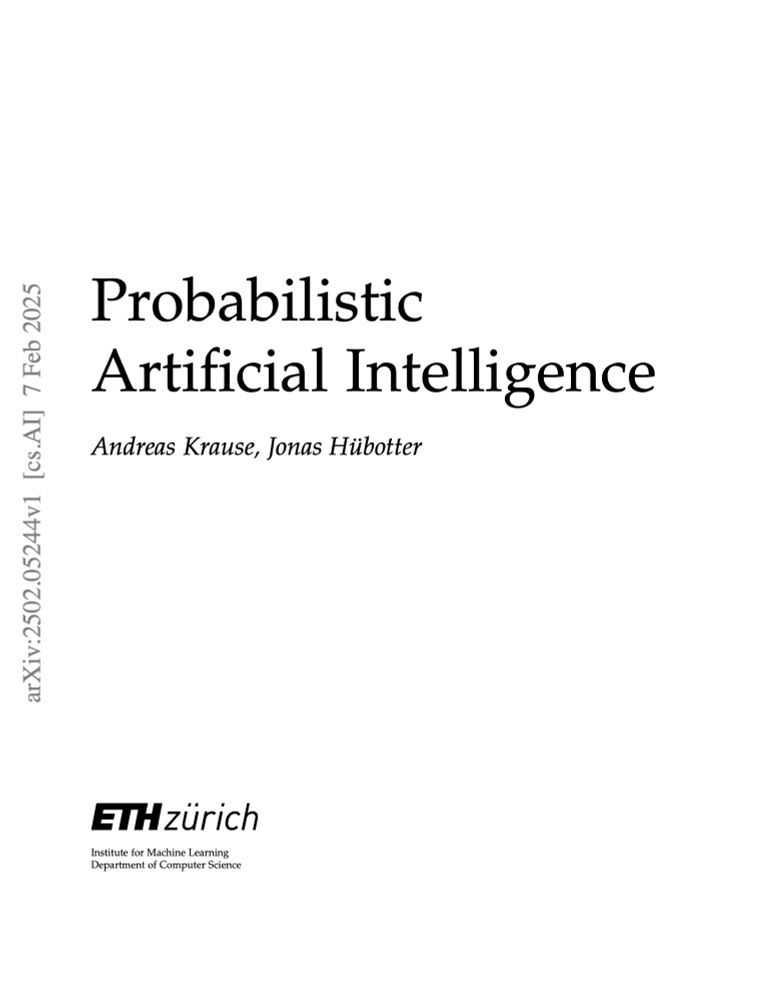

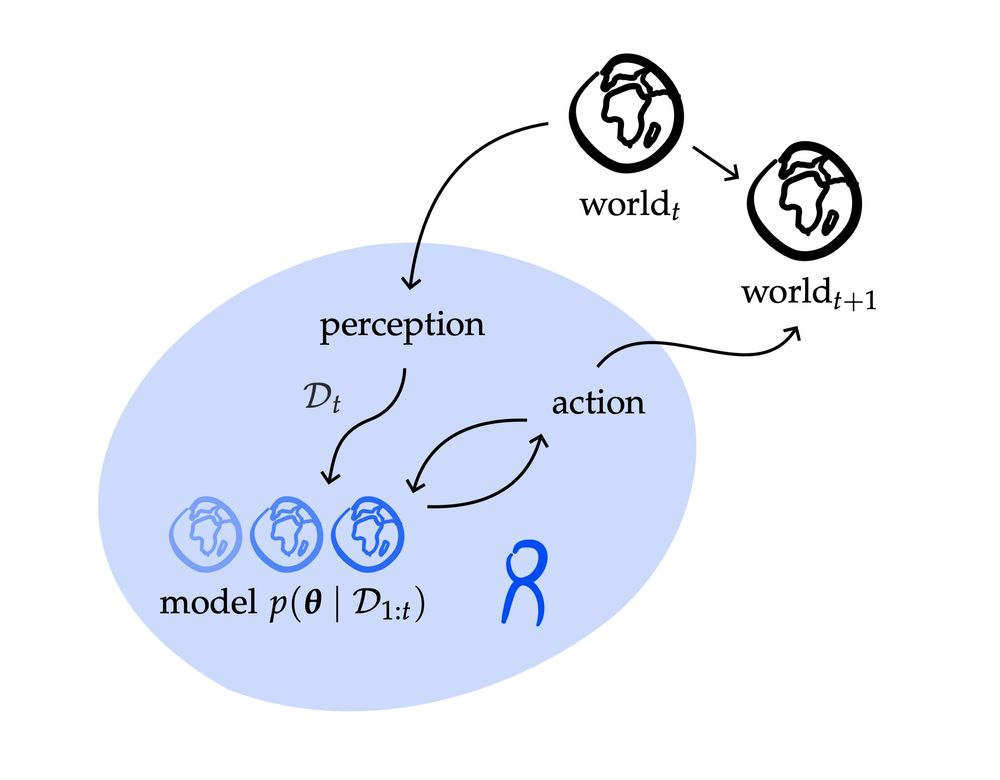

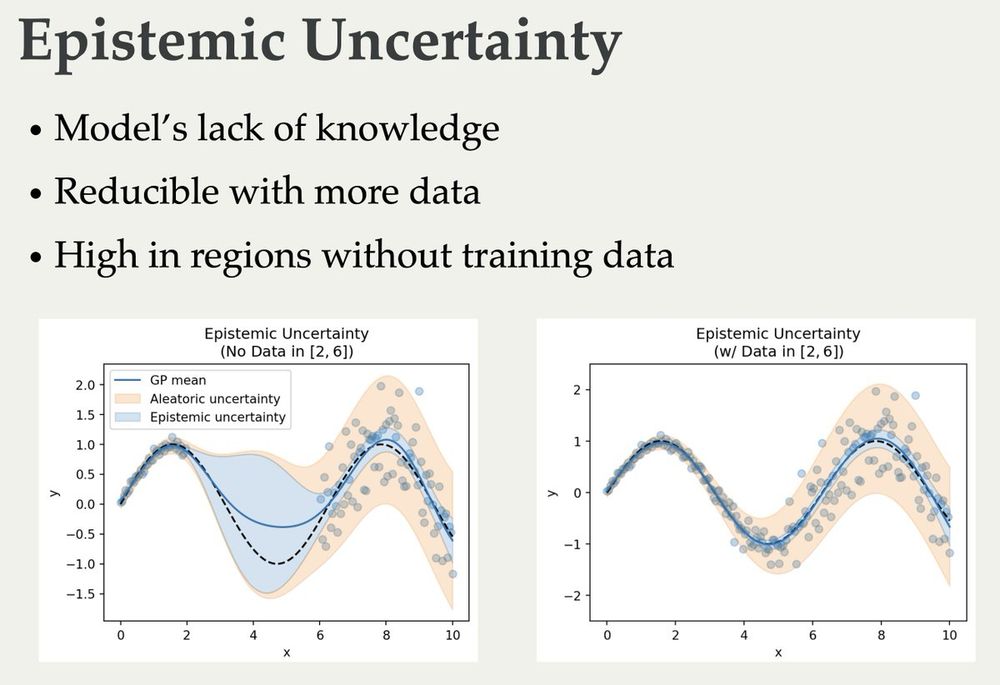

We've released our lecture notes for the course Probabilistic AI at ETH Zurich, covering uncertainty in ML and its importance for sequential decision making. Thanks a lot to @jonhue.bsky.social for his amazing effort and to everyone who contributed! We hope this resource is useful to you!

17.02.2025 07:19 — 👍 58 🔁 10 💬 1 📌 0

Unfortunately not as of now. We may also release Jupyter notebooks in the future, but this may take some time.

12.02.2025 22:25 — 👍 0 🔁 0 💬 1 📌 0

I'm glad you find this resource useful, Maximilian!

11.02.2025 15:26 — 👍 1 🔁 0 💬 0 📌 0

Noted. Thanks for the suggestion!

11.02.2025 09:01 — 👍 1 🔁 0 💬 0 📌 0

Very glad to hear that they’ve been useful to you! :)

11.02.2025 08:37 — 👍 2 🔁 0 💬 1 📌 0

Huge thanks to the countless people who helped bring this resource together!

11.02.2025 08:20 — 👍 2 🔁 0 💬 1 📌 0

I'm very excited to share notes on Probabilistic AI that I have been writing with @arkrause.bsky.social 🥳

arxiv.org/pdf/2502.05244

These notes aim to give a graduate-level introduction to probabilistic ML + sequential decision-making.

I'm super glad to be able to share them with all of you now!

11.02.2025 08:19 — 👍 122 🔁 25 💬 3 📌 3

Thou Shalt Not Overfit

Venting my spleen about the persistent inanity about overfitting.

Overfitting, as it is colloquially described in data science and machine learning, doesn’t exist. www.argmin.net/p/thou-shalt...

30.01.2025 15:35 — 👍 70 🔁 12 💬 11 📌 5

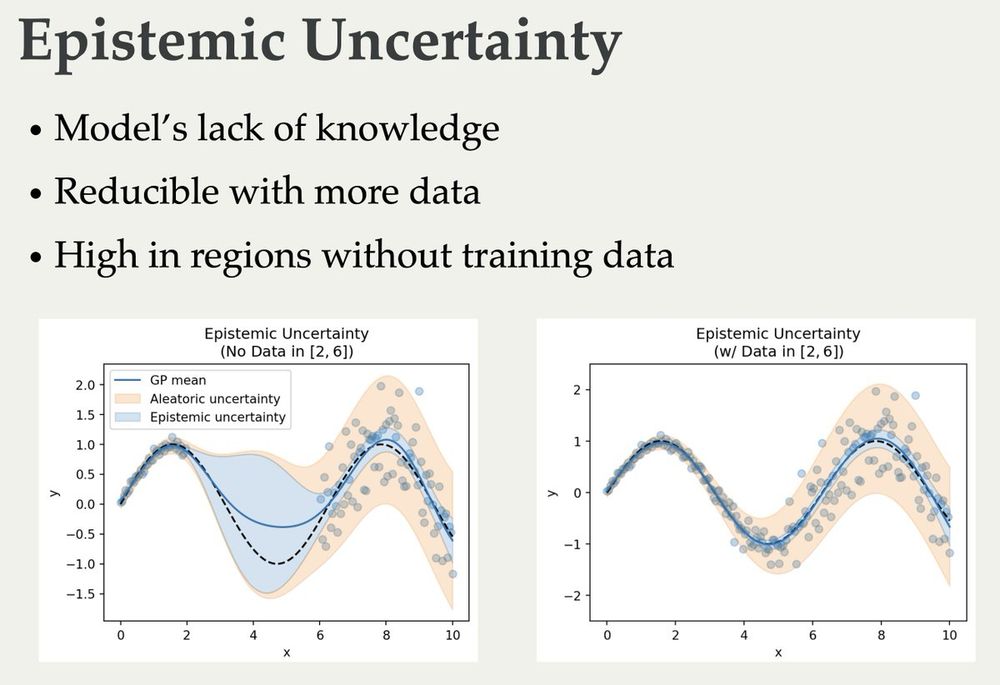

The slides for my lectures on (Bayesian) Active Learning, Information Theory, and Uncertainty are online now 🥳 They cover quite a bit from basic information theory to some recent papers:

blackhc.github.io/balitu/

and I'll try to add proper course notes over time 🤗

17.12.2024 06:50 — 👍 178 🔁 28 💬 3 📌 0

Preprint: arxiv.org/pdf/2410.08020

13.12.2024 18:33 — 👍 2 🔁 0 💬 1 📌 0

Tomorrow I’ll be presenting our recent work on improving LLMs via local transductive learning in the FITML workshop at NeurIPS.

Join us for our ✨oral✨ at 10:30am in East Exhibition Hall A.

Joint work with my fantastic collaborators Sascha Bongni, @idoh.bsky.social, @arkrause.bsky.social

13.12.2024 18:32 — 👍 5 🔁 4 💬 1 📌 0

Paper: arxiv.org/pdf/2402.158...

Virtual poster: neurips.cc/virtual/2024...

11.12.2024 23:14 — 👍 0 🔁 0 💬 0 📌 0

We’re presenting our work “Transductive Active Learning: Theory and Applications” now at NeurIPS. Come join us in East at poster #4924!

Joint work with my fantastic collaborators Bhavya Sukhija, Lenart Treven, Yarden As, @arkrause.bsky.social

11.12.2024 19:53 — 👍 5 🔁 2 💬 1 📌 0

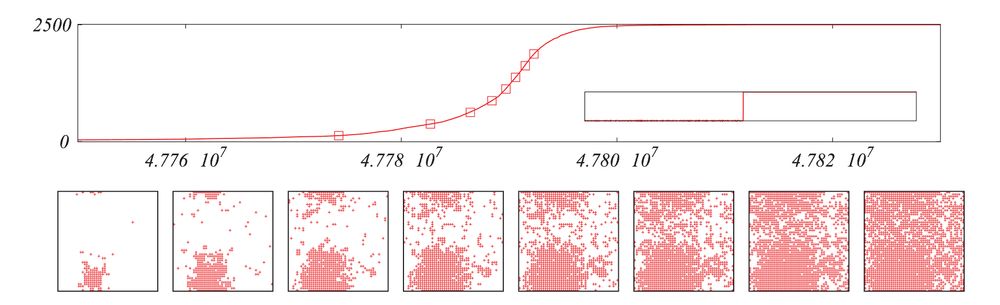

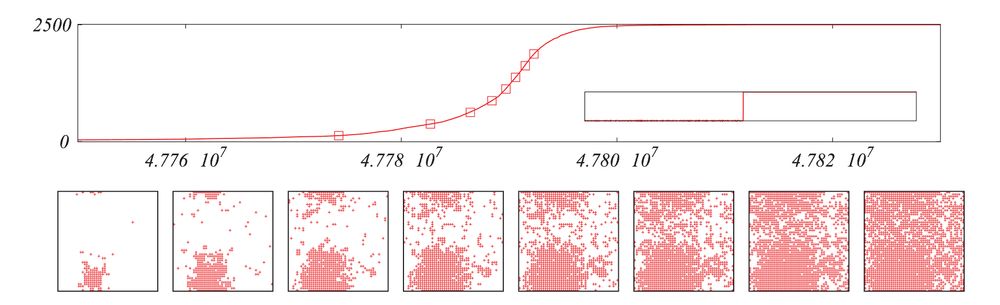

Spread of innovation in a small world network.

Assume that the nodes of a social network can choose between two alternative technologies: B and X.

A node using B receives a benefit relative to X, but there is also a benefit to using the same tech as the majority of your neighbors.

Assume everyone uses X at time t=0. Will they switch to B?

23.11.2024 22:48 — 👍 65 🔁 8 💬 3 📌 0
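A rough sketch of one way to simulate this setup, under assumptions that are not in the post: the payoff of B is `advantage + coordination * (fraction of neighbors on B)`, the payoff of X is `coordination * (fraction of neighbors on X)`, all nodes update synchronously by best response, and a hypothetical set of `seeds` starts on B. The graph is a ring lattice with optional random shortcut edges as a stand-in for a small-world network.

```python
import random


def small_world_ring(n, k=2, shortcuts=0, seed=0):
    """Ring where each node links to k neighbors per side, plus random shortcuts.

    Assumes n > 2 * k and a shortcut count well below the number of free edges.
    """
    rng = random.Random(seed)
    nbrs = {i: set() for i in range(n)}
    for i in range(n):
        for d in range(1, k + 1):
            j = (i + d) % n
            nbrs[i].add(j)
            nbrs[j].add(i)
    added = 0
    while added < shortcuts:
        a, b = rng.randrange(n), rng.randrange(n)
        if a != b and b not in nbrs[a]:
            nbrs[a].add(b)
            nbrs[b].add(a)
            added += 1
    return nbrs


def simulate(nbrs, seeds, advantage, coordination=1.0, max_steps=100):
    """Synchronous best-response dynamics; returns the final technology per node."""
    state = {i: ("B" if i in seeds else "X") for i in nbrs}
    for _ in range(max_steps):
        new_state = {}
        for i, ns in nbrs.items():
            frac_b = sum(state[j] == "B" for j in ns) / len(ns)
            payoff_b = advantage + coordination * frac_b
            payoff_x = coordination * (1.0 - frac_b)
            # Ties keep (or revert to) X.
            new_state[i] = "B" if payoff_b > payoff_x else "X"
        if new_state == state:
            break  # fixed point reached
        state = new_state
    return state


graph = small_world_ring(20, k=2)
final = simulate(graph, seeds={0, 1, 2}, advantage=0.5)
print(sum(tech == "B" for tech in final.values()), "of", len(final), "nodes use B")
```

Under these assumed payoffs and with no seeds, nobody leaves X unless `advantage` exceeds `coordination`, so whether B spreads depends on the initial adopters and the neighborhood structure. Passing `shortcuts > 0` lets you explore how long-range links change that.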

PhD student in Machine Learning at ETH Zurich & Max Planck Institute

Information and updates about RLC 2025 at the University of Alberta from Aug. 5th to 8th!

https://rl-conference.cc

Prof. Uni Tübingen, Machine Learning, Robotics, Haptics

A LLN - large language Nathan - (RL, RLHF, society, robotics), athlete, yogi, chef

Writes http://interconnects.ai

At Ai2 via HuggingFace, Berkeley, and normal places

Research & Education @ ai.ethz.ch + Science @ ellis.eu · b4 AI PhD @epfl.ch & @ spotify.com @ amazon.com @ stanford.edu @ usc.edu @ kuleuven.be · he

arnoutdevos.github.io

Research Scientist at Google DeepMind

Postdoc at CMU. Trying to learn about deep learning faster than deep learning can learn about me.

avischwarzschild.com

posting about posts at new york magazine. have me on your podcast!

ETH/CLS PhD candidate focused on reinforcement learning and sports lover.

Doctoral fellow at ETH AI Center; RL, Controls, LLMs, AI

Official Bluesky page of the Computer Science Department at ETH Zurich. Collected media and news from and about the department.

Welcome to ETH AI Center! We are ethz.ch/en 's central hub leading the way towards trustworthy, accessible and inclusive #artificialintelligence

ai.ethz.ch

Research Scientist @Bioptimus. Previously at ETH Zürich, Max Planck Institute for Intelligent Systems, Google Research, EPFL, and RIKEN AIP.

aleximmer.github.io

Machine Learning Professor

https://cims.nyu.edu/~andrewgw

Machine Learning Scientist @ ETH Zurich, Active Learning, Sequence Design, GenAI

Researching planning, reasoning, and RL in LLMs @ Reflection AI. Previously: Google DeepMind, UC Berkeley, MIT. I post about: AI 🤖, flowers 🌷, parenting 👶, public transit 🚆. She/her.

http://www.jesshamrick.com

Senior Data Scientist at the Swiss Data Science Center