Excited to be speaking at the SPIGM workshop at NeurIPS tomorrow, 10:30-11 am, Room 20C. My talk will be "Probabilistic Inference is the Future of Foundation Models". See you there! spigmworkshopv3.github.io/schedule/

06.12.2025 02:06 — 👍 15 🔁 0 💬 1 📌 0

Forever would be good for me, even if it's eventually just rotating around in space.

26.10.2025 00:22 — 👍 1 🔁 0 💬 1 📌 0

A nice list. But it doesn't actually go much beyond electronics. In terms of quality of life, I think some of these "conveniences" are a downgrade in practice. I miss Blockbuster. I miss watching my favourite shows when they aired on a TV schedule. I miss 90s gaming. I miss being able to unplug.

12.10.2025 21:06 — 👍 10 🔁 0 💬 2 📌 0

Thank you!

24.09.2025 14:21 — 👍 1 🔁 0 💬 0 📌 0

YouTube video by Machine Learning Street Talk

The Real Reason Huge AI Models Actually Work

My full interview with MLStreetTalk has just been posted. I really enjoyed this conversation! We talk about the bitter lesson, scientific discovery, Bayesian inference, mysterious phenomena, and key principles for building intelligent systems. www.youtube.com/watch?v=M-jT...

23.09.2025 17:40 — 👍 32 🔁 6 💬 1 📌 0

I'm excited to be giving a keynote talk at the AutoML conference 9-10 am at Cornell Tech tomorrow! I'm presenting "Prescriptions for Universal Learning". I'll talk about how we can enable automation, which I'll argue is the defining feature of ML. 2025.automl.cc/program/

09.09.2025 13:27 — 👍 7 🔁 0 💬 0 📌 0

Research doesn't go in circles, but in spirals. We return to the same ideas, but in a different and augmented form.

01.09.2025 18:45 — 👍 22 🔁 0 💬 0 📌 0

Deep Learning’s Most Puzzling Phenomena Can Be Explained by Decades-Old Theory

Andrew Gordon Wilson argues that many generalization phenomena in deep learning can be explained using decades-old theoretical tools.

CDS/Courant Professor Andrew Gordon Wilson (@andrewgwils.bsky.social) argues mysterious behavior in deep learning can be explained by decades-old theory, not new paradigms: PAC-Bayes bounds, soft biases, and large models with a soft simplicity bias.

nyudatascience.medium.com/deep-learnin...

27.08.2025 14:46 — 👍 8 🔁 1 💬 0 📌 0

I have a confession to make. After 6 years, I stopped teaching belief propagation (but still cover graphical models). It felt like tedious bookkeeping around orderings of sums and notation. Have I strayed?

09.08.2025 20:29 — 👍 3 🔁 0 💬 2 📌 0

Regardless of whether you plan to use them in applications, everyone should learn about Gaussian processes and Bayesian methods. They provide a foundation for reasoning about model construction and all sorts of deep learning behaviour that would otherwise appear mysterious.

09.08.2025 14:42 — 👍 56 🔁 6 💬 3 📌 0

A common takeaway from "the bitter lesson" is we don't need to put effort into encoding inductive biases, we just need compute. Nothing could be further from the truth! Better inductive biases mean better scaling exponents, which means exponential improvements with computation.

08.08.2025 13:47 — 👍 19 🔁 3 💬 1 📌 1

YouTube video by The Piano Experience

Glenn Gould plays Chopin Piano Sonata No. 3 in B minor Op.58

Gould mostly recorded baroque and early classical. He only recorded a single Chopin piece, as a one-off broadcast. But like many of his efforts, it's profoundly thought-provoking, the end product as much Gould as it is Chopin. I love the last mvt (20:55+). www.youtube.com/watch?v=NAHE...

29.07.2025 17:44 — 👍 4 🔁 0 💬 0 📌 0

I don't think those things seem boring. But most research directions honestly are quite boring, because they are geared towards people-pleasing: going with the herd, seeking approval from others, and taking no risks. It's a great way to avoid making a contribution that changes any minds.

29.07.2025 02:39 — 👍 1 🔁 0 💬 1 📌 0

Whatever you do, just don't be boring.

28.07.2025 23:15 — 👍 4 🔁 0 💬 1 📌 1

YouTube video by LoG Meetup NYC

It's Time to Say Goodbye to Hard (equivariance) Constraints - Andrew Gordon Wilson

I had a great time presenting "It's Time to Say Goodbye to Hard Constraints" at the Flatiron Institute. In this talk, I describe a philosophy for model construction in machine learning. Video now online! www.youtube.com/watch?v=LxuN...

22.07.2025 19:28 — 👍 13 🔁 2 💬 0 📌 0

Excited to be presenting my paper "Deep Learning is Not So Mysterious or Different" tomorrow at ICML, 11 am - 1:30 pm, East Exhibition Hall A-B, E-500. I made a little video overview as part of the ICML process (viewable from Chrome): recorder-v3.slideslive.com#/share?share...

17.07.2025 00:16 — 👍 25 🔁 5 💬 0 📌 1

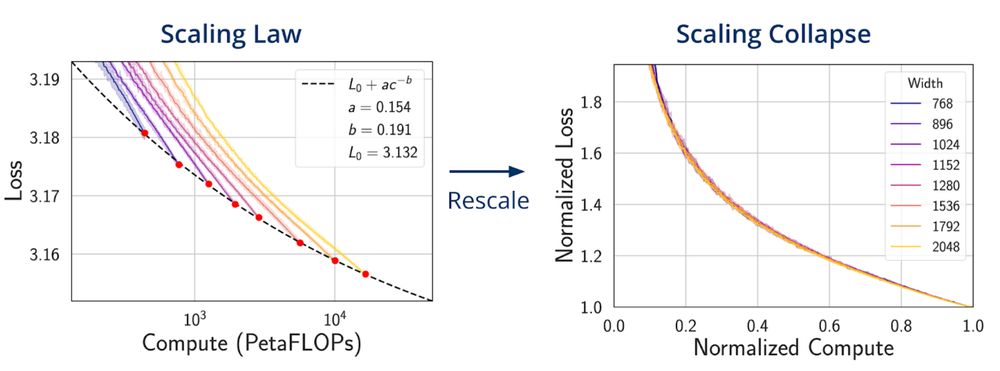

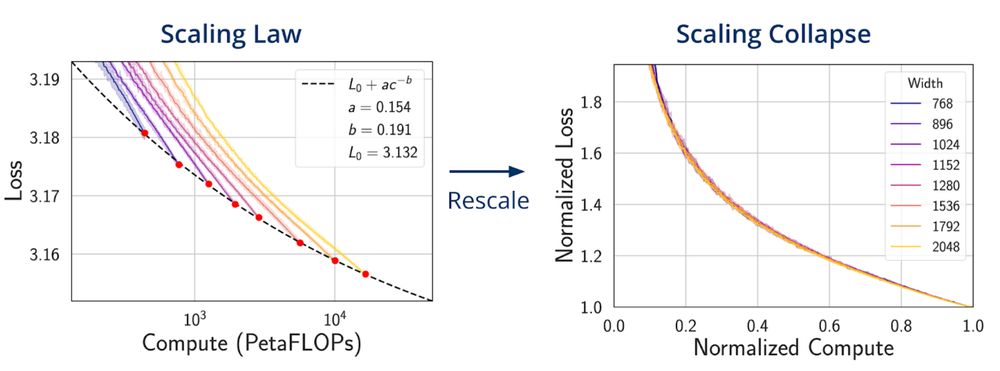

While scaling laws typically predict the final loss, we show that good scaling rules enable accurate predictions of entire loss curves of larger models from smaller ones! Shikai Qiu did an amazing job leading the paper, in collaboration with L. Xiao, J. Pennington, A. Agarwala. 3/3

08.07.2025 14:45 — 👍 2 🔁 0 💬 0 📌 0

In particular, scaling collapse allows us to transfer insights from experiments conducted at a very small scale to much larger models! Much more in the paper, including supercollapse: the collapsed curves deviate from one another by less than the noise floor of per-model loss curves across seeds. 2/3

08.07.2025 14:45 — 👍 2 🔁 0 💬 1 📌 0

Our new ICML paper discovers scaling collapse: through a simple affine transformation, whole training loss curves across model sizes with optimally scaled hypers collapse to a single universal curve! We explain the collapse, providing a diagnostic for model scaling.

arxiv.org/abs/2507.02119

1/3

08.07.2025 14:45 — 👍 31 🔁 5 💬 3 📌 0

I love havarti!

02.07.2025 15:52 — 👍 2 🔁 0 💬 0 📌 0

It sometimes feels like papers are written this way. <Make claim that may or may not be true but aligns with the paper's narrative> <Find arbitrary reference that supposedly supports that claim, but may be making a different point entirely>. I guess Grammarly is giving the people what they want?

26.06.2025 10:23 — 👍 1 🔁 0 💬 0 📌 0

Excited about our new ICML paper, showing how algebraic structure can be exploited for massive computational gains in population genetics.

25.06.2025 14:06 — 👍 3 🔁 1 💬 0 📌 0

Machine learning is perhaps the only discipline that has become less mature over time. A reverse metamorphosis, from butterfly to caterpillar.

24.06.2025 22:11 — 👍 22 🔁 3 💬 1 📌 0

AI this, AI that, the implications of AI for X... can we just never talk about AI again?

17.06.2025 20:27 — 👍 9 🔁 0 💬 1 📌 0

Really excited about our new paper, "Why Masking Diffusion Works: Condition on the Jump Schedule for Improved Discrete Diffusion". We explain the mysterious success of masking diffusion to propose new diffusion models that work well in a variety of settings, including proteins, images, and text!

16.06.2025 14:29 — 👍 6 🔁 0 💬 0 📌 0

What's irrational is the idea that some group of authors writing a paper about something foundational also should be the team of people to put it into production in the real world and demonstrate its impact, all in one paper. That happens over years, and involves different interests and skills.

16.06.2025 14:26 — 👍 4 🔁 1 💬 1 📌 0

I find that this position is often more emotionally rooted than rational. It makes no sense to expect a paper on foundations to demonstrate significant real-world impact. As you say, it's a cumulative process carried out by different people, over time.

16.06.2025 09:43 — 👍 3 🔁 0 💬 1 📌 0

Sorry to miss it! Currently in Cambridge UK for a Newton Institute programme on uncertainty representation.

16.06.2025 09:14 — 👍 1 🔁 0 💬 1 📌 0

Great topic for a workshop!

15.06.2025 21:35 — 👍 3 🔁 0 💬 1 📌 0

YouTube video by Lex Fridman

Terence Tao: Hardest Problems in Mathematics, Physics & the Future of AI | Lex Fridman Podcast #472

A really outstanding interview of Terence Tao, providing an introduction to many topics, including the math of general relativity (youtube.com/watch?v=HUkB...). I love relativity, and in a recent(ish) paper we also consider the wave maps equation (section 5, arxiv.org/abs/2304.14994).

15.06.2025 20:25 — 👍 13 🔁 2 💬 0 📌 1

Founder of @pholus.co. Helping leaders protect reputation, prevent failure, and regain momentum when conventional playbooks fall short.

Prime Minister of Canada and Leader of the Liberal Party | Premier ministre du Canada et chef du Parti libéral

markcarney.ca

Google DeepMind Staff Scientist

Distinguished Visitor Cambridge University

Official account of the NYU Center for Data Science, the home of the Undergraduate, Master’s, and Ph.D. programs in data science. cds.nyu.edu

Astrophysicist turned Climate Scientist | AI for Science | Professor of Physics at @cuny.bsky.social; happy camper at @leapstc.bsky.social | Author, ML for Physics and Astronomy: https://shorturl.at/VJ9k9 | Lover of puns, data, good people, and unfinished

Running experiments @OpenAI + @ml-collective.bsky.social

Prev: Windscape AI, Uber AI Labs founding team, adviser Recursion Pharma, Cornell, Montreal, Caltech 🌻

Bioinformatics Scientist / Next Generation Sequencing, Single Cell and Spatial Biology, Next Generation Proteomics, Liquid Biopsy, SynBio, AI/ML in biotech // http://albertvilella.substack.com

Discover the Languages of Biology

Build computational models to (help) solve biology? Join us! https://www.deboramarkslab.com

DM or mail me!

head, Comp Systems Neurosci Lab @wigner centre. neuro + ML

Science and Shenanigans. Heart and Hope.

https://bunsenbernerbmd.com

Prof at the University of British Columbia. Research in statistics, ML, and AI for science. Views are my own. https://charlesm93.github.io./

Co-founder & Chief Scientist at Yutori. Prev: Senior Director leading FAIR Embodied AI at Meta, and Professor at Georgia Tech.

Chief Scientist & CEO QIM. UVa School of Data Science (Founder/Funder). Embrace Simplicity, Beware Complexity. Coding at CODE. Honesty. Sleep. Squash.

Senior Researcher@Criteo,

Rising Star in AI Ethics 2025 |

Fairness, Causality, GenAI

Co-founder and CEO, Mistral AI

AGI Cyberneticist @daiosai. Ex AI Safety Team lead @deepmind. PhD @CambridgeMLG. AI, Machine Learning, Information Theory, Causality, Decision Theory.

assistant prof at USC Data Sciences and Operations and Computer Science; phd Cornell ORIE.

data-driven decision-making, operations research/management, causal inference, algorithmic fairness/equity

bureaucratic justice warrior

angelamzhou.github.io

Professor at NYU; Chief AI Scientist at Meta.

Researcher in AI, Machine Learning, Robotics, etc.

ACM Turing Award Laureate.

http://yann.lecun.com

Security and Privacy of Machine Learning at UofT, Vector Institute, and Google 🇨🇦🇫🇷🇪🇺 Co-Director of Canadian AI Safety Institute (CAISI) Research Program at CIFAR. Opinions mine