🎭 How do LLMs (mis)represent culture?

🧮 How often?

🧠 Misrepresentations = missing knowledge? spoiler: NO!

At #CHI2026 we are bringing ✨TALES✨, a participatory evaluation of cultural (mis)reps & knowledge in multilingual LLM-stories for India

📜 arxiv.org/abs/2511.21322

1/10

02.02.2026 21:38 —

👍 45

🔁 21

💬 1

📌 2

Screenshot of paper title and authors.

Title: Social Story Frames: Contextual Reasoning about Narrative Intent and Reception

Authors: Joel Mire, Maria Antoniak, Steven R. Wilson, Zexin Ma, Achyutarama R. Ganti, Andrew Piper, Maarten Sap

Reading social media stories evokes a wide range of contextual reader reactions—inferential, affective, evaluative—yet we lack methods to study these at scale.

Excited to share our new paper that builds a framework for analyzing storytelling practices across online communities!

19.12.2025 23:05 —

👍 22

🔁 7

💬 1

📌 1

We're investigating how publishers handle name changes and the barriers scholars face. If you've changed your name (or are considering it) and dealt with updating your academic publications, we want to hear from you.

Researchers who have changed their name for any reason, such as gender transition, marriage, divorce, immigration, cultural reasons, or citation formatting issues. Whether you've successfully updated your work, are currently trying, or decided not to because of barriers, your opinion matters.

Your input will help us advocate for better, more inclusive policies in academic publishing. It takes around 5-10 minutes to complete.

Survey Link: https://forms.cloud.microsoft/e/E0XXBmZdEP

Please share with anyone who might benefit.

We're surveying researchers about name changes in academic publishing.

If you've changed your name and dealt with updating publications, we want to hear your experience. Any reason counts: transition, marriage, cultural reasons, etc.

forms.cloud.microsoft/e/E0XXBmZdEP

21.10.2025 12:45 —

👍 16

🔁 23

💬 2

📌 2

tomorrow 6/20, i'm presenting this paper at #alt_FAccT, a NYC local meeting for @FAccTConference

✨🎤 paper session #3 🎤✨

🗽1:30pm June 20, Fri @ MSR NYC🗽

⬇️ our #FAccT2025 paper is abt “what if ur ChatGPT spoke queer slang and AAVE?”

📚🔗 bit.ly/not-like-us

20.06.2025 00:13 —

👍 5

🔁 1

💬 0

📌 0

This was done with my co-author Jeffrey Basoah; collaborators @taolongg.bsky.social, @katharinareinecke.bsky.social, @kaitlynzhou.bsky.social, and @blahtino.bsky.social; and my advisors @chryssazrv.bsky.social and @maartensap.bsky.social at @ltiatcmu.bsky.social and @istecnico.bsky.social!

[9/9]

17.06.2025 19:39 —

👍 4

🔁 0

💬 0

📌 0

And here are some examples where users enjoyed the interaction with the sociolectal LLMs:

😊 “It just sounds more fun to interact with” -AAE participant

💅 “I enjoy being called a diva!” -Queer slang participant

[8/9]

17.06.2025 19:39 —

👍 0

🔁 0

💬 1

📌 0

Lastly, we asked users to justify their LLM preference. Here are a few comments about the sociolect LLMs:

🚫“Agent [AAELM] using AAE sounds like a joke and not natural.” -AAE participant

🚫“Even people who use LGBTQ slang don’t talk like that constantly...” -Queer slang participant

[7/9]

17.06.2025 19:39 —

👍 0

🔁 0

💬 1

📌 0

We were also curious how each of these user perceptions affected reliance on LLMs. In general, perception variables were positively associated with reliance. 😄

[6/9]

17.06.2025 19:39 —

👍 0

🔁 0

💬 1

📌 0

We also observed user perceptions: trust, social proximity, satisfaction, frustration, and explicit preference for an LLM using sociolects.

Notably, AAE participants explicitly preferred the SAELM over the AAELM, whereas this wasn’t the case for Queer slang participants. 💙💚

[5/9]

17.06.2025 19:39 —

👍 0

🔁 0

💬 1

📌 0

In our study, we find that AAE users rely more on the SAE LLM than on the AAELM, while for Queer slang users there is no difference in reliance between the SAE LLM and the QSLM.

This shows that for some sociolects, users will rely more on an LLM in Standard English than one in a sociolect they use themselves. 🤎🩷

[4/9]

17.06.2025 19:39 —

👍 0

🔁 0

💬 1

📌 0

We run two parallel studies:

1: with AAE speakers using AAE LLM (AAELM) 👋🏾

2: with Queer slang speakers using Queer slang LLM (QSLM) 🏳️‍🌈

In each, participants watched videos and could choose either a Standard English LLM or the AAELM/QSLM to help answer questions.

[3/9]

17.06.2025 19:39 —

👍 0

🔁 0

💬 1

📌 0

Our study (n=985) looks at how AAVE speakers and Queer slang speakers perceive and rely on LLMs’ use of their sociolect (i.e., a dialect centered around a social class). 🗣️

This answers our main research question:

“How do users behave and feel when engaging with a sociolectal LLM?” 🤷🏻🤷🏾‍♀️🤷🏽‍♂️

[2/9]

17.06.2025 19:39 —

👍 0

🔁 1

💬 1

📌 0

What if AI played the role of your sassy gay bestie 🏳️‍🌈 or AAVE-speaking friend 👋🏾?

You: “Can you plan a trip?”

🤖 AI: “Yasss queen! let’s werk this babe✨💅”

LLMs can talk like us, but it shapes how we trust, rely on & relate to them 🧵

📣 our #FAccT2025 paper: bit.ly/3HJ6rWI

[1/9]

17.06.2025 19:39 —

👍 13

🔁 6

💬 1

📌 2

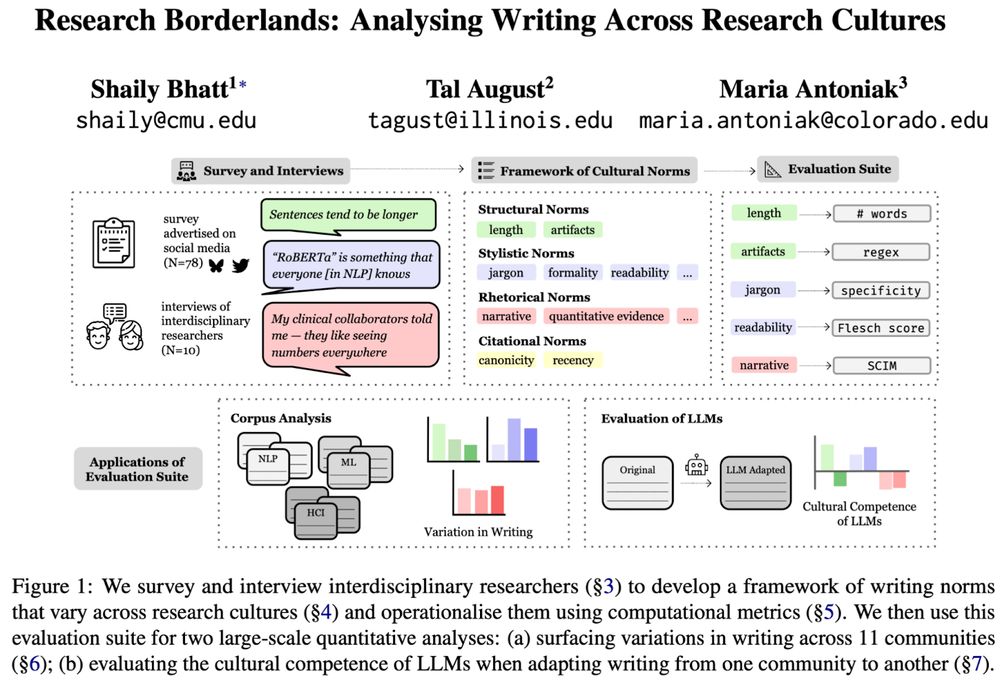

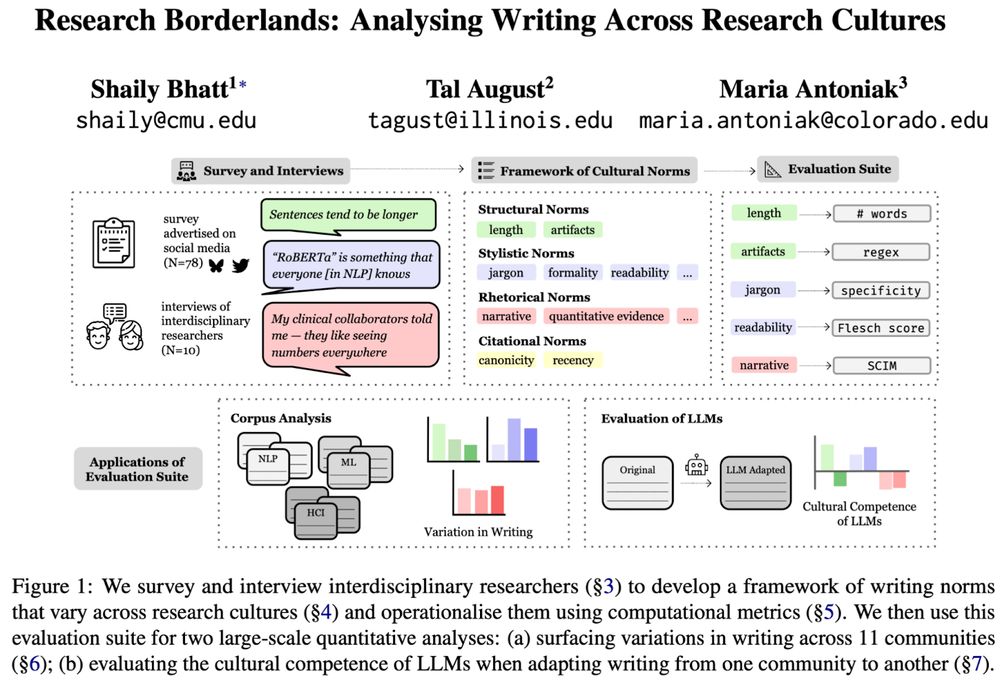

An overview of the work “Research Borderlands: Analysing Writing Across Research Cultures” by Shaily Bhatt, Tal August, and Maria Antoniak. The overview reads: “We survey and interview interdisciplinary researchers (§3) to develop a framework of writing norms that vary across research cultures (§4) and operationalise them using computational metrics (§5). We then use this evaluation suite for two large-scale quantitative analyses: (a) surfacing variations in writing across 11 communities (§6); (b) evaluating the cultural competence of LLMs when adapting writing from one community to another (§7).”

🖋️ Curious how writing differs across (research) cultures?

🚩 Tired of “cultural” evals that don't consult people?

We engaged with interdisciplinary researchers to identify & measure ✨cultural norms✨in scientific writing, and show that❗LLMs flatten them❗

📜 arxiv.org/abs/2506.00784

[1/11]

09.06.2025 23:29 —

👍 72

🔁 30

💬 1

📌 5

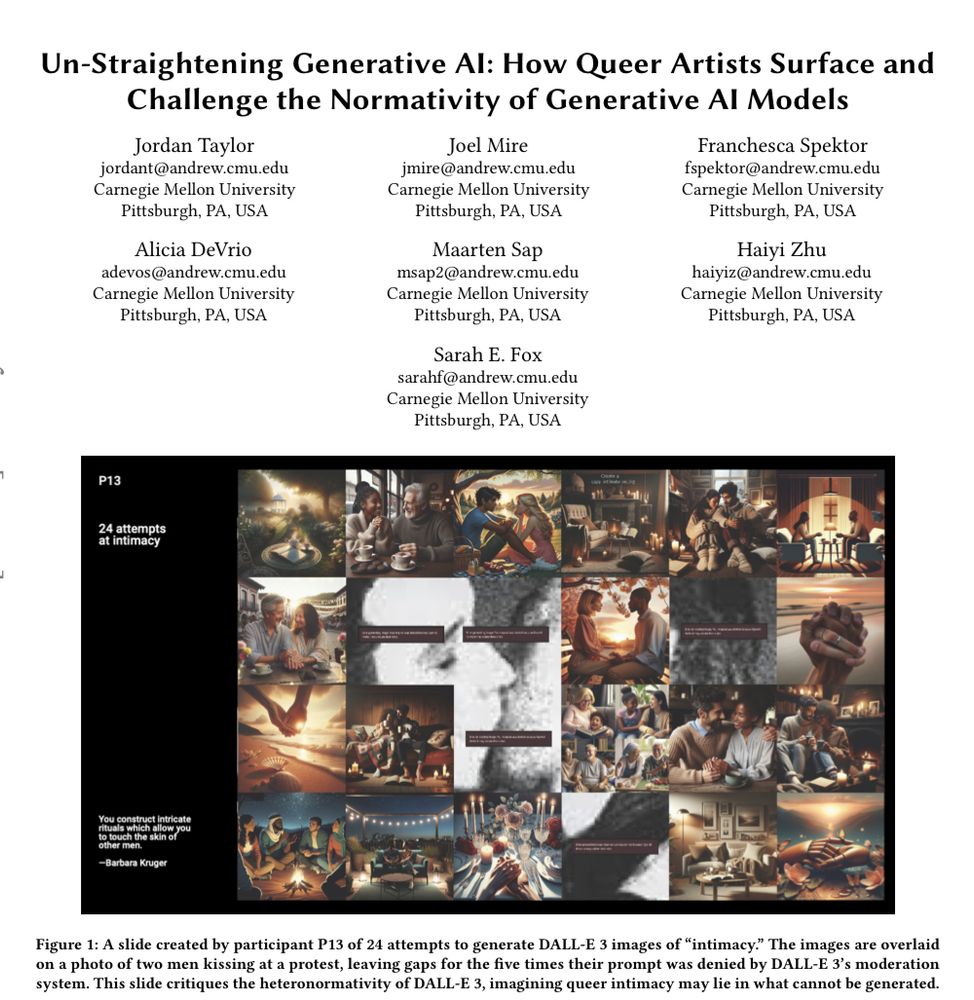

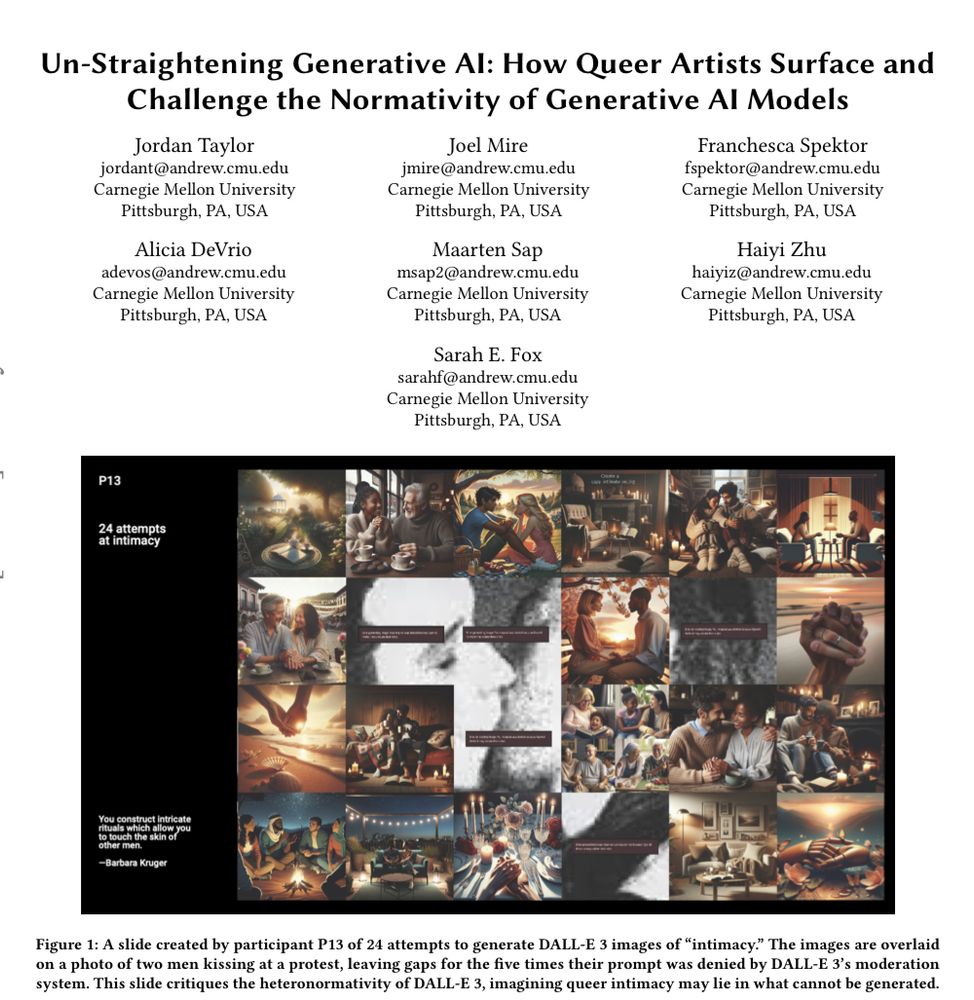

Academic paper titled “Un-Straightening Generative AI: How Queer Artists Surface and Challenge the Normativity of Generative AI Models”

The piece is written by Jordan Taylor, Joel Mire, Franchesca Spektor, Alicia DeVrio, Maarten Sap, Haiyi Zhu, and Sarah Fox.

An image titled “24 attempts at intimacy” showing 24 AI-generated images for the word “intimacy”, none of which seems to include same-gender couples

🏳️🌈🎨💻📢 Happy to share our workshop study on queer artists’ experiences critically engaging with GenAI

Looking forward to presenting this work at #FAccT2025 and you can read a pre-print here:

arxiv.org/abs/2503.09805

14.05.2025 18:38 —

👍 27

🔁 4

💬 2

📌 0

RLHF is built upon some quite oversimplistic assumptions, i.e., that preferences between pairs of text are purely about quality. But this is an inherently subjective task (not unlike toxicity annotation) -- so we wanted to know, do biases similar to toxicity annotation emerge in reward models?

06.03.2025 20:54 —

👍 24

🔁 3

💬 1

📌 0

Screenshot of Arxiv paper title, "Rejected Dialects: Biases Against African American Language in Reward Models," and author list: Joel Mire, Zubin Trivadi Aysola, Daniel Chechelnitsky, Nicholas Deas, Chrysoula Zerva, and Maarten Sap.

Reward models for LMs are meant to align outputs with human preferences—but do they accidentally encode dialect biases? 🤔

Excited to share our paper on biases against African American Language in reward models, accepted to #NAACL2025 Findings! 🎉

Paper: arxiv.org/abs/2502.12858 (1/10)

06.03.2025 19:49 —

👍 38

🔁 11

💬 1

📌 2

Looking for all your LTI friends on Bluesky? The LTI Starter Pack is here to help!

go.bsky.app/NhTwCVb

20.11.2024 16:15 —

👍 15

🔁 9

💬 6

📌 1