just realized bsky doesn't support gifs lol

15.12.2024 14:40 — 👍 1 🔁 0 💬 0 📌 0

Zack Angelo

@zackangelo.bsky.social

building ai inference @ mixlayer


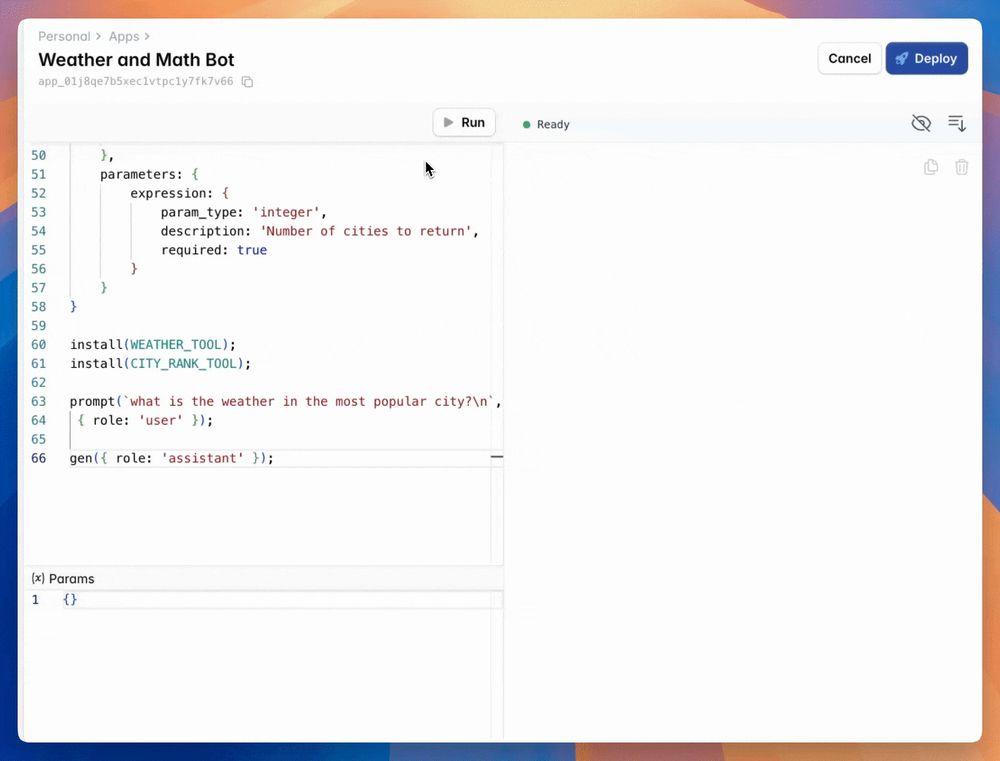

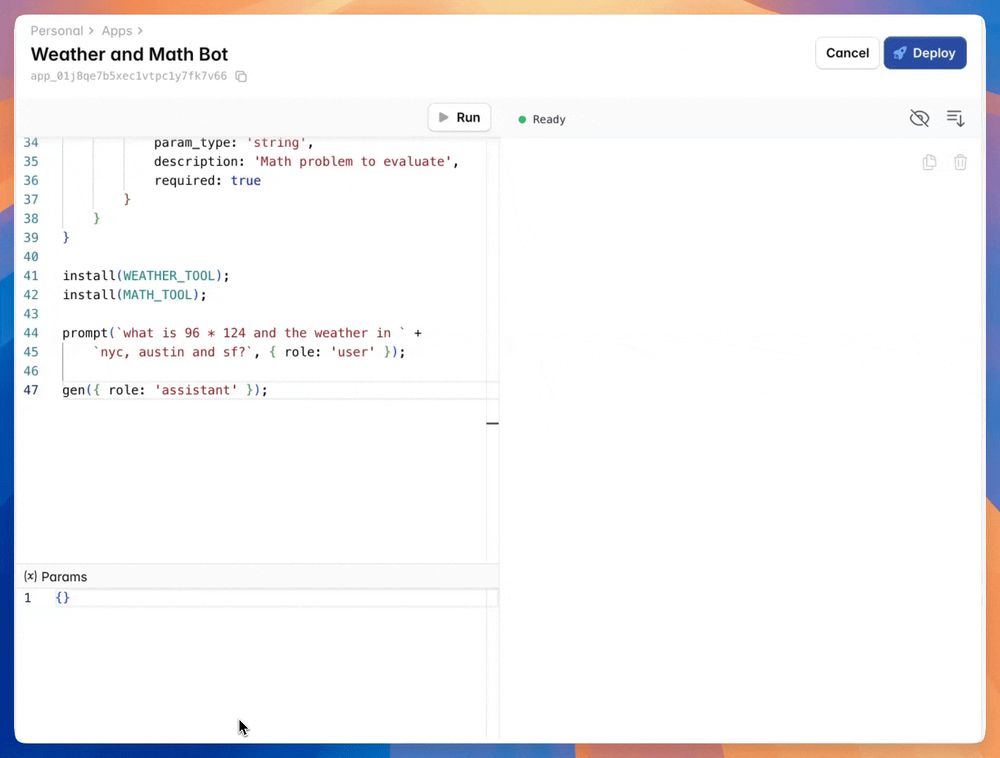

functions can even compose: here's the model using the output of one as the input to another

13.12.2024 20:24 — 👍 0 🔁 0 💬 1 📌 0
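A minimal sketch of what "composing" tool calls means, assuming two hypothetical tools (`get_temperature_c` and `celsius_to_fahrenheit`; the functions in the screenshot aren't named in the post). In a real loop the model would usually see the first result and then emit the second call. Here the dependency is written as a `{"$ref": i}` placeholder, a made-up convention used only to keep the example self-contained.

```python
# Hypothetical tools: the functions in the screenshot aren't named in the post.
def get_temperature_c(city: str) -> float:
    # Stand-in data instead of a real weather API call.
    readings = {"Paris": 8.0, "Tokyo": 12.5, "Denver": -3.0}
    return readings[city]


def celsius_to_fahrenheit(c: float) -> float:
    return c * 9 / 5 + 32


TOOLS = {
    "get_temperature_c": get_temperature_c,
    "celsius_to_fahrenheit": celsius_to_fahrenheit,
}


def run_chain(calls: list[dict]) -> list:
    """Run tool calls in order. An argument written as {"$ref": i}
    (a hypothetical convention) is replaced by the result of call i."""
    results = []
    for call in calls:
        args = {
            name: results[value["$ref"]]
            if isinstance(value, dict) and "$ref" in value
            else value
            for name, value in call["arguments"].items()
        }
        results.append(TOOLS[call["name"]](**args))
    return results


# The second call consumes the output of the first.
calls = [
    {"name": "get_temperature_c", "arguments": {"city": "Tokyo"}},
    {"name": "celsius_to_fahrenheit", "arguments": {"c": {"$ref": 0}}},
]
print(run_chain(calls))  # [12.5, 54.5]
```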

one of the most slept-on capabilities of newer AI models is the ability to call multiple tools in a single shot. here's the newest Llama 70b running on Mixlayer calling 4 tools (looking up the weather in 3 cities and doing some arithmetic)

13.12.2024 20:24 — 👍 2 🔁 0 💬 1 📌 0
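On the client side, "multiple tools in a single shot" means one model turn can return a list of independent calls, and the runtime can execute them concurrently before sending all results back. A minimal sketch, assuming an OpenAI-style list of `{name, arguments}` objects with JSON-encoded arguments. Mixlayer's actual format isn't shown in the post, and `get_weather` / `calculate` are hypothetical stand-ins.

```python
import json
import operator
from concurrent.futures import ThreadPoolExecutor

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


def get_weather(city: str) -> dict:
    # Hypothetical tool with stand-in data; a real one would query a weather service.
    temps_c = {"Chicago": 2, "Miami": 26, "Seattle": 9}
    return {"city": city, "temp_c": temps_c[city]}


def calculate(a: float, b: float, op: str) -> float:
    # Hypothetical arithmetic tool.
    return OPS[op](a, b)


TOOLS = {"get_weather": get_weather, "calculate": calculate}


def dispatch_all(tool_calls: list[dict]) -> list:
    """Run every independent tool call from one model turn concurrently,
    returning results in the same order as the calls."""
    with ThreadPoolExecutor() as pool:
        futures = [
            pool.submit(TOOLS[call["name"]], **json.loads(call["arguments"]))
            for call in tool_calls
        ]
        return [f.result() for f in futures]


# One model turn containing four calls (hypothetical format).
tool_calls = [
    {"name": "get_weather", "arguments": '{"city": "Chicago"}'},
    {"name": "get_weather", "arguments": '{"city": "Miami"}'},
    {"name": "get_weather", "arguments": '{"city": "Seattle"}'},
    {"name": "calculate", "arguments": '{"a": 17, "b": 25, "op": "*"}'},
]
for call, result in zip(tool_calls, dispatch_all(tool_calls)):
    print(call["name"], result)
```

Because the four calls don't depend on each other, the model can emit them all at once and the client needs only one round trip instead of four.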

Want to play around with chain of thought and some other prompting techniques? I put up a few Mixlayer demos on Meta's Llama 3.1 8b in this blog post: www.mixlayer.com/blog/2024-12...

weird that the instruction-tuned Llama3 8b models are downloaded less than the original?

04.12.2024 15:53 — 👍 0 🔁 0 💬 1 📌 0

I doubt they'll switch to a lower-precision model, but I wouldn't be surprised if they start using a quantized or fp8 KV cache. It's much easier to swap that out dynamically in response to load than the model weights.
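Why the KV cache is easier to change on the fly: its entries are produced at inference time, so a server can start storing new entries at lower precision without touching the weights. A minimal pure-Python sketch, using per-vector absmax int8 quantization as a swapped-in illustration (fp8 isn't modeled here). `KVCache`, `quantize_int8`, and the `quantize` toggle are hypothetical names, not any serving framework's API.

```python
from dataclasses import dataclass


@dataclass
class QuantizedVector:
    values: list[int]  # int8 codes in [-127, 127]
    scale: float       # multiply codes by this to dequantize


def quantize_int8(vec: list[float]) -> QuantizedVector:
    # Absmax scaling: the largest-magnitude element maps to +/-127.
    absmax = max((abs(x) for x in vec), default=0.0)
    scale = absmax / 127 if absmax > 0 else 1.0
    codes = [max(-127, min(127, round(x / scale))) for x in vec]
    return QuantizedVector(codes, scale)


def dequantize(q: QuantizedVector) -> list[float]:
    return [c * q.scale for c in q.values]


class KVCache:
    """Append-only cache (keys only, for brevity) whose storage precision
    can be switched at runtime, e.g. under memory pressure."""

    def __init__(self, quantize: bool = False):
        self.quantize = quantize
        self.keys: list = []

    def append(self, key: list[float]) -> None:
        # Only new entries are affected by the current setting.
        self.keys.append(quantize_int8(key) if self.quantize else list(key))

    def get(self, i: int) -> list[float]:
        k = self.keys[i]
        return dequantize(k) if isinstance(k, QuantizedVector) else k


cache = KVCache(quantize=True)   # high load: store compactly
cache.append([0.5, -1.0, 0.25])
cache.quantize = False           # load drops: back to full precision
cache.append([0.1, 0.2])
print(cache.get(0), cache.get(1))
```

The model weights stay untouched throughout; only how new cache entries are stored changes, which is what makes this kind of switch cheap to do in response to load.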

23.11.2024 17:43 — 👍 0 🔁 0 💬 0 📌 0

Crazy to think that a 1M-token context window will be the norm soon.

Doesn't look like this model has made it onto HF yet (just a Space, no weights). Curious to learn more about the sparse attention mechanism.

qwenlm.github.io/blog/qwen2.5...

woke up in a 3am fit of terror last night bc I dreamt I'd left an 8x A100 GPU cluster running by accident 🫠

17.11.2024 13:58 — 👍 2 🔁 0 💬 0 📌 0