I made an interactive conversation analysis of the (world changing?) #Trump #Zelenskyy #Vance conversation. (Transcript from @apnews.com)

claude.site/artifacts/f8...

Try it yourself — What stands out to you? 👀

@jonathanmall.bsky.social

LLMs, AI, psychology and speaking. Seeking to infuse technology with empathy; that's why I founded www.neuroflash.com, an AI platform driving brand-aligned marketing and helping people connect and understand each other's perspectives.

Interactive version: claude.site/artifacts/f8...

02.03.2025 12:15 — 👍 1 🔁 0 💬 0 📌 0

Indeed, looks like an export mistake. It's correct in the dynamic version.

02.03.2025 12:14 — 👍 1 🔁 0 💬 0 📌 0

The words we choose reveal more than we think. Language isn't just communication — it's a reflection of power, strategy, and worldview.

02.03.2025 11:19 — 👍 1 🔁 2 💬 2 📌 0

- Vance emphasized American interests and positioned himself as defending diplomacy.

02.03.2025 11:19 — 👍 1 🔁 1 💬 1 📌 0

- Zelensky focused on historical context ("2014," "occupied") and used "we" to suggest a collective identity: "We are staying in our country, staying strong."

02.03.2025 11:19 — 👍 0 🔁 1 💬 1 📌 0

- Trump dominated the conversation (48.1% of total words) and used "you" 28 times, creating a confrontational tone: "You don't have the cards. You're buried there."

- Trump's language was heavy on negation and control ("don't," "not," "no").
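Here is a rough sketch of how per-speaker word share, "you" counts, and negation counts like the ones above could be computed from a transcript. The assumptions are mine, not the method behind the numbers in this thread. The transcript is already split into (speaker, text) turns. Tokens are lowercase runs of letters and apostrophes. Only the exact token "you" is counted, and the negation set is just the three words quoted above. The turns are a toy example built from the quotes, not the full AP transcript.

```python
import re
from collections import Counter

# Assumption: negation markers limited to the examples quoted in the thread.
NEGATIONS = {"don't", "not", "no"}


def tokenize(text):
    # Lowercase and keep runs of letters/apostrophes, so "don't" stays one token.
    # Assumption: straight apostrophes; curly ones would need normalizing first.
    return re.findall(r"[a-z']+", text.lower())


def speaker_stats(turns):
    """turns: list of (speaker, utterance) pairs in transcript order."""
    words = Counter()
    you = Counter()
    negations = Counter()
    for speaker, text in turns:
        tokens = tokenize(text)
        words[speaker] += len(tokens)
        # Counts the exact token "you" only; "you're" is not included.
        you[speaker] += sum(1 for t in tokens if t == "you")
        negations[speaker] += sum(1 for t in tokens if t in NEGATIONS)
    total = sum(words.values())
    # Percentage of all words spoken by each speaker.
    share = {s: 100 * n / total for s, n in words.items()}
    return share, you, negations


turns = [
    ("Trump", "You don't have the cards."),
    ("Zelensky", "We are staying in our country, staying strong."),
    ("Trump", "You're buried there."),
]
share, you, negations = speaker_stats(turns)
print({s: round(p, 1) for s, p in share.items()})  # → {'Trump': 50.0, 'Zelensky': 50.0}
```

Tokenization choices, such as counting "you're" or normalizing curly apostrophes, shift the totals. A figure like 48.1% depends on those details.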

I analyzed the Trump-Zelensky-Vance transcript from @apnews.com to see what lies behind their words. They speak volumes about power dynamics. 📊

Key Insights in thread

Very interesting topic, will give it a read next year :)

31.12.2024 12:56 — 👍 2 🔁 0 💬 0 📌 0

Great approach. A good feedback signal is worth a lot in any of these complex agentic workflows.

10.12.2024 11:16 — 👍 0 🔁 0 💬 0 📌 0

What is better than an LLM-as-a-Judge? Right, an Agent-as-a-Judge! Meta created Agent-as-a-Judge to evaluate code agents with intermediate feedback, alongside DevAI, a new benchmark of 55 realistic development tasks.

Paper: huggingface.co/papers/2410....

There is ZERO connection between vaccines and autism!

NONE!

Why is the government not capable of wiping the floor with Nigel Farage every single day like this? Alastair Campbell effortlessly destroys him over his Brexit legacy, which by almost every economic and financial measure has been disastrous for the country.

06.12.2024 06:41 — 👍 9465 🔁 2367 💬 559 📌 166

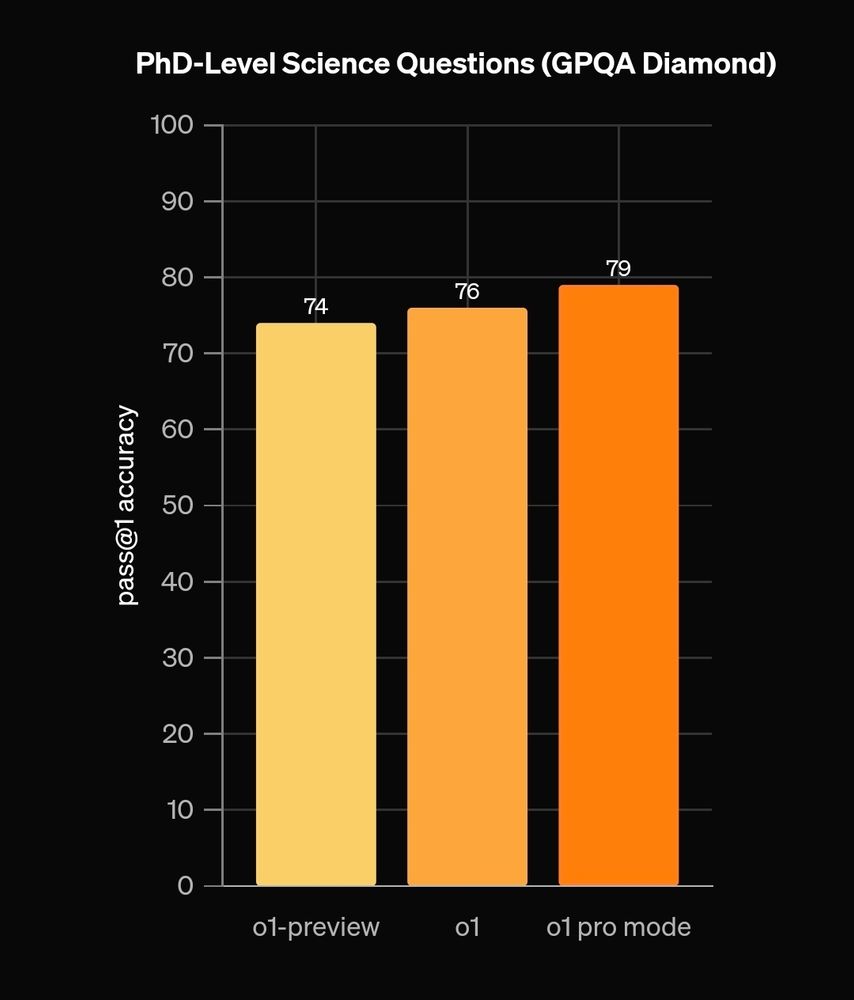

OpenAI's o1-pro model is only marginally better than o1, but access costs 10x as much ($200 instead of $20 per month). Not sure the quality increase is worth it.

Alternatively, do your own agentic or chain-of-thought prompting, or go fully professional with #dspy or #adalflow for automatic prompt optimization.

When predicting human sentiment responses to (German) words, Qwen 2.5 Coder 32B does worse than GPT-4o mini.

R squared is:

0.845 for GPT-4o mini

0.714 for Qwen 2.5 Coder 32B
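One common way to get an R squared like this is to square the Pearson correlation between mean human ratings and model-predicted ratings per word. This equals the R squared of a simple linear fit. That is my assumption, not necessarily how these numbers were computed. Computing 1 - SS_res/SS_tot directly on raw, unfitted predictions can never give a higher value. The toy numbers below are made up.

```python
from math import fsum


def r_squared(human, predicted):
    """Squared Pearson correlation between human ratings and model predictions.

    Equals the R^2 of a simple linear regression of human on predicted ratings.
    """
    if len(human) != len(predicted) or len(human) < 2:
        raise ValueError("need two equal-length lists with at least 2 items")
    n = len(human)
    mean_h = fsum(human) / n
    mean_p = fsum(predicted) / n
    cov = fsum((h - mean_h) * (p - mean_p) for h, p in zip(human, predicted))
    var_h = fsum((h - mean_h) ** 2 for h in human)
    var_p = fsum((p - mean_p) ** 2 for p in predicted)
    if var_h == 0 or var_p == 0:
        raise ValueError("ratings must not be constant")
    return cov * cov / (var_h * var_p)


human = [1.0, 2.0, 3.0]      # hypothetical mean human ratings per word
predicted = [1.0, 3.0, 2.0]  # hypothetical LLM-simulated ratings
print(r_squared(human, predicted))  # → 0.25
```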

Many more comparisons to follow :)

Do you know a good LLM for European languages?

Using perplexity instead of prompting to predict the more likely outcome of neuroscience experiments beats human experts by roughly 20%. Wow.

Could help make research more efficient, IMHO. Especially since they used older models to achieve this!

www.nature.com/articles/s41...
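Here is a minimal sketch of the perplexity idea. It assumes you already have per-token log-probabilities (natural log) from some language model for two versions of a study abstract. The version with lower perplexity is taken as the model's prediction. The helper names and log-prob values are hypothetical; see the paper for the actual setup.

```python
import math


def perplexity(token_logprobs):
    """exp of the negative mean per-token log-probability (natural log)."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def pick_more_likely(version_a, version_b):
    """Return 'A' or 'B': the version the model finds less surprising."""
    return "A" if perplexity(version_a) <= perplexity(version_b) else "B"


# Hypothetical per-token log-probs for an original and an altered abstract.
original = [-1.2, -0.8, -1.0]
altered = [-1.2, -2.5, -1.0]
print(pick_more_likely(original, altered))  # → A
```

No prompt or answer parsing is involved. The model only scores text, which is what makes this different from asking it directly.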

Menlo Ventures shows that enterprise usage of Anthropic has doubled in the last year.

Could it be because it's often better than OpenAI? For coding, it's my go-to model.

A year ago, Claude 2.1 had a 200K token context window with 27% accuracy on needle-in-haystack tests. Now, Alibaba's Qwen2.5-Turbo claims a 1M token window with 100% recall on similar tests.

@alanthompson.net
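Needle-in-a-haystack tests typically hide one fact at a chosen depth inside long filler text, ask the model to retrieve it, and report the share of correct answers. The sketch below only builds the prompt text and scores answers. The model call is left out, and the filler, needle, and function names are hypothetical. This is not the protocol behind either number above.

```python
def build_haystack(filler, needle, depth):
    """Insert `needle` into a list of filler sentences at relative depth 0.0-1.0."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    pos = round(depth * len(filler))  # 0.0 = start, 1.0 = end
    return " ".join(filler[:pos] + [needle] + filler[pos:])


def recall(answers, expected):
    """Fraction of model answers that contain the expected fact (case-insensitive)."""
    if not answers:
        raise ValueError("need at least one answer")
    return sum(expected.lower() in a.lower() for a in answers) / len(answers)


filler = ["The sky is blue.", "Grass is green.", "Water is wet.", "Fire is hot."]
print(build_haystack(filler, "The secret code is 4217.", 0.5))
# → The sky is blue. Grass is green. The secret code is 4217. Water is wet. Fire is hot.
print(recall(["The code is 4217.", "I don't know."], "4217"))  # → 0.5
```

Python's `round` rounds exact halves to the nearest even number. Depth 0.5 over five sentences therefore inserts at position 2.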

Koala

30.11.2024 08:24 — 👍 1 🔁 0 💬 0 📌 0

SIFT introduces test-time learning by selecting highly relevant and non-redundant data for fine-tuning. Some call it Q-star 2.0. It optimizes information gain, reducing prediction uncertainty while using minimal compute. Neat.

paper: arxiv.org/abs/2305.18466

Video: www.youtube.com/watch?v=vei7...
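SIFT's actual objective and implementation are described in the paper. As a rough, hypothetical illustration of "reduce uncertainty about the test prompt while skipping redundant data," the sketch below does greedy selection under simplifying assumptions. Data points and the test prompt are embedding vectors. A linear kernel with Gaussian observation noise models uncertainty, which is a Gaussian-process view. `noise` is an arbitrary regularizer. None of this is the paper's code.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    a = [row[:] + [b] for row, b in zip(matrix, rhs)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= f * a[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (a[r][n] - sum(a[r][c] * x[c] for c in range(r + 1, n))) / a[r][r]
    return x


def posterior_variance(test, selected, noise):
    """Variance left about `test` after observing `selected` (linear kernel, Gaussian noise)."""
    prior = dot(test, test)
    if not selected:
        return prior
    k = [dot(test, s) for s in selected]
    gram = [[dot(s, t) + (noise if i == j else 0.0) for j, t in enumerate(selected)]
            for i, s in enumerate(selected)]
    return prior - dot(k, solve(gram, k))


def select_greedy(test, candidates, budget, noise=0.1):
    """Greedily pick candidate indices that most reduce variance about `test`."""
    chosen = []
    for _ in range(budget):
        best, best_var = None, float("inf")
        for i, cand in enumerate(candidates):
            if i in chosen:
                continue
            var = posterior_variance(test, [candidates[j] for j in chosen] + [cand], noise)
            if var < best_var:
                best, best_var = i, var
        if best is None:  # ran out of candidates
            break
        chosen.append(best)
    return chosen


# Hypothetical 2-D embeddings: index 1 duplicates index 0.
candidates = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
print(select_greedy([0.8, 0.6], candidates, budget=2))  # → [0, 2]
```

The duplicate at index 1 is skipped on the second pick. It adds little new information about the test vector, while index 2 covers the remaining direction. That is the "non-redundant" behaviour in miniature.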

A performant and actually open LLM was just released (with training data, recipes, etc.). Will be testing OLMo soon. allenai.org/olmo

30.11.2024 07:50 — 👍 3 🔁 0 💬 0 📌 0

Been trying that (with contemporary people) and it works. You can test its accuracy if you have responses from the subjects you're copying. For historical figures, that could be decisions they made or texts they wrote.

Not saying it'll work, but at least you could estimate whether it does or doesn't.

Are instruct models becoming "too obedient"? When I prompt them with brand guidelines and leave certain phrases in, the LLM tries to put those phrases into virtually every piece of content :D

Prompt engineering can mitigate this, but it's certainly different from the DaVinci days (GPT-3) of old-school completion.

Happy Thanksgiving, everyone!

While you are drinking wine and drowning in turkey, I would encourage folks to think about:

What if Bluesky really is our shot at breaking the web2/socmedia stranglehold on collective sensemaking...

What kinds of trillionaire and sovereign attacks are coming?

🚀 Just ran a simulation on 1,000 word ratings using human data + our improved LLM prompt for simulating responses. Results? We explain almost 80% of the variance! This approach could revolutionize how we model human emotions—customized for specific groups. 🔥 #AI #EmotionModeling

28.11.2024 12:08 — 👍 4 🔁 0 💬 0 📌 0

It's great, but we find the setup to be great too :)

Will try textgrad alone as well to compare.

Creating good eval prompts is still the part I don't quite get.

Try adalflow; it combines dspy and textgrad, but it's a bit more complex to set up.

21.11.2024 21:33 — 👍 0 🔁 0 💬 1 📌 0