The main conceptual contribution is a way to sidestep the Ω(log n) barrier introduced by standard probabilistic metric embeddings. Instead of relying on such embeddings, Yingxi & Mingwei found a clever way to bound our algorithm’s cost directly on a deterministic embedding & compare it to OPT, which is bounded via majorization arguments.
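For readers who have not met the term, the textbook definition of majorization is below. The thread does not say which variant (e.g., weak majorization) the proof uses, so treat this as background rather than the paper's exact statement. For vectors $x, y \in \mathbb{R}^n$, write $x_{[1]} \ge \dots \ge x_{[n]}$ for the entries of $x$ sorted in decreasing order; then $x$ majorizes $y$, written $x \succ y$, when

```latex
\sum_{i=1}^{k} x_{[i]} \;\ge\; \sum_{i=1}^{k} y_{[i]} \quad (k = 1, \dots, n-1),
\qquad
\sum_{i=1}^{n} x_{[i]} \;=\; \sum_{i=1}^{n} y_{[i]}.
```

A standard consequence (Karamata's inequality) is that $x \succ y$ implies $\sum_i \varphi(x_i) \ge \sum_i \varphi(y_i)$ for every convex $\varphi$, which is what typically makes majorization a convenient tool for comparing sums of costs.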

27.01.2026 17:54

We:

• Move 𝗯𝗲𝘆𝗼𝗻𝗱 the standard 𝗶.𝗶.𝗱. model: each request is drawn from its own distribution, subject to a mild smoothness condition.

• Require 𝗻𝗼 𝗱𝗶𝘀𝘁𝗿𝗶𝗯𝘂𝘁𝗶𝗼𝗻𝗮𝗹 𝗸𝗻𝗼𝘄𝗹𝗲𝗱𝗴𝗲: we use only one sample from each request distribution.

• Achieve an 𝗢(𝟭) competitive ratio in d-dimensional Euclidean space for d > 2.
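The single-sample information model can be illustrated with a deliberately naive strategy: draw one sample from each request's distribution, compute an optimal offline matching between those samples and the servers, and send request i to the server planned for sample i. This is a hypothetical sketch of the information model only, not the algorithm or analysis from the paper. The Gaussian noise standing in for "smoothness", the 3-dimensional points (d = 3 > 2), and all function names (`min_cost_matching`, `sample_then_follow`, `make_sampler`) are assumptions for illustration.

```python
import itertools
import math
import random
from typing import Callable, Sequence, Tuple

Point = Tuple[float, ...]


def min_cost_matching(left: Sequence[Point], right: Sequence[Point]) -> Tuple[int, ...]:
    """Brute-force min-cost perfect matching: left[i] is paired with right[plan[i]].

    Tries all n! permutations, so it is only suitable for tiny n.
    """
    n = len(left)
    return min(
        itertools.permutations(range(n)),
        key=lambda p: sum(math.dist(left[i], right[p[i]]) for i in range(n)),
    )


def sample_then_follow(
    servers: Sequence[Point],
    samplers: Sequence[Callable[[], Point]],
    realized: Sequence[Point],
) -> Tuple[Tuple[int, ...], float]:
    """Hypothetical naive rule: plan on one sample per request, then follow the plan."""
    samples = [draw() for draw in samplers]      # exactly one sample per request distribution
    plan = min_cost_matching(samples, servers)   # fixed before any real request arrives
    total = 0.0
    for i, r in enumerate(realized):             # online phase: request i arrives
        total += math.dist(r, servers[plan[i]])  # take the server reserved for sample i
    return plan, total


def make_sampler(center: Point, sigma: float, rng: random.Random) -> Callable[[], Point]:
    """Hypothetical smoothed request: a fixed center plus isotropic Gaussian noise."""
    return lambda: tuple(c + rng.gauss(0.0, sigma) for c in center)


if __name__ == "__main__":
    rng = random.Random(0)
    servers = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
    samplers = [make_sampler(s, 0.1, rng) for s in servers]
    realized = [draw() for draw in samplers]     # the actual requests, drawn independently
    plan, cost = sample_then_follow(servers, samplers, realized)
    print(plan, round(cost, 3))
```

Because the plan is a perfect matching, every request is guaranteed a distinct free server, but the realized request locations never influence the decisions. The rule is shown only to fix ideas about what "one sample per distribution" means; no competitive-ratio guarantee is claimed for it here.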

27.01.2026 17:54

We study a classic online metric matching problem in which n servers (e.g., rideshare drivers) are available in advance and n requests (e.g., riders) arrive one by one. Each request must be immediately matched to an available server, paying the distance between the two in an underlying metric.
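To make the setting concrete, here is a minimal Python sketch of the problem as described, assuming points in Euclidean space. It uses a simple greedy rule (match each request to its nearest free server) purely as a baseline; it is not the algorithm from the paper. The brute-force `offline_opt` helper computes the offline optimum (OPT) for tiny instances so the two costs can be compared. Both function names are hypothetical.

```python
import itertools
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def greedy_online(servers: Sequence[Point], requests: Sequence[Point]) -> Tuple[List[int], float]:
    """Baseline: match each arriving request to its nearest still-free server.

    Decisions are irrevocable; a request is matched the moment it arrives.
    Returns the server index chosen for each request and the total distance paid.
    """
    free = set(range(len(servers)))
    assignment: List[int] = []
    total = 0.0
    for r in requests:
        j = min(free, key=lambda s: math.dist(r, servers[s]))
        free.remove(j)
        assignment.append(j)
        total += math.dist(r, servers[j])
    return assignment, total


def offline_opt(servers: Sequence[Point], requests: Sequence[Point]) -> float:
    """Offline optimum (OPT): min-cost perfect matching by brute force, tiny n only."""
    n = len(servers)
    return min(
        sum(math.dist(requests[i], servers[p[i]]) for i in range(n))
        for p in itertools.permutations(range(n))
    )


if __name__ == "__main__":
    servers = [(0.0,), (4.0,)]   # two servers on a line
    requests = [(2.5,), (6.0,)]  # requests arrive in this order
    print(greedy_online(servers, requests))  # ([1, 0], 7.5)
    print(offline_opt(servers, requests))    # 4.5
```

On this two-server instance, greedy pays 7.5 while OPT pays 4.5: grabbing the nearest server for the first request forces the second request to travel far. Gaps like this are why online algorithms are judged by their competitive ratio against OPT.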

27.01.2026 17:54

This week at the Innovations in Theoretical Computer Science conference (ITCS 2026), Mingwei Yang is presenting our paper:

𝗦𝗺𝗼𝗼𝘁𝗵𝗲𝗱 𝗔𝗻𝗮𝗹𝘆𝘀𝗶𝘀 𝗼𝗳 𝗢𝗻𝗹𝗶𝗻𝗲 𝗠𝗲𝘁𝗿𝗶𝗰 𝗠𝗮𝘁𝗰𝗵𝗶𝗻𝗴 𝘄𝗶𝘁𝗵 𝗮 𝗦𝗶𝗻𝗴𝗹𝗲 𝗦𝗮𝗺𝗽𝗹𝗲: 𝗕𝗲𝘆𝗼𝗻𝗱 𝗠𝗲𝘁𝗿𝗶𝗰 𝗗𝗶𝘀𝘁𝗼𝗿𝘁𝗶𝗼𝗻

by Yingxi Li, myself, and Mingwei Yang

See Mingwei's talk here: youtu.be/yEBPI9c7OE8?...

27.01.2026 17:54

LLMs for Optimization Tutorial

Tutorial page (agenda + reading list): conlaw.github.io/llm_opt_tuto...

Thanks to Léonard Boussioux and Madeleine Udell for helping put the proposal together.

20.01.2026 01:50

Optimization is central to planning, scheduling, and decision-making, but deploying solvers requires deep expertise. Our tutorial covers how LLMs can support the end-to-end optimization pipeline (model formulation, solver configuration, and model validation) and highlights open research directions.

20.01.2026 01:50

Topic 4: Theoretical Guarantees

- Optimizing Solution-Samplers for Combinatorial Problems: The Landscape of Policy-Gradient Methods (Caramanis et al., NeurIPS’23)

- Approximation Algorithms for Combinatorial Optimization with Predictions (Antoniadis et al., ICLR’25)

02.12.2025 21:55

Topic 3: Math Optimization

- OptiMUS-0.3: Using LLMs to Model and Solve Optimization Problems at Scale (AhmadiTeshnizi et al., arXiv’25)

- Contrastive Predict-and-Search for Mixed Integer Linear Programs (Huang et al., ICML’24)

- Differentiable Integer Linear Programming (Geng et al., ICLR’25)

02.12.2025 21:55

Topic 2: Graph Neural Networks

- One Model, Any CSP: GNNs as Fast Global Search Heuristics for Constraint Satisfaction (Tönshoff et al., IJCAI’23)

- Dual Algorithmic Reasoning (Numeroso et al., ICLR’23)

- DIFUSCO: Graph-based Diffusion Solvers for Combinatorial Optimization (Sun & Yang, NeurIPS’23)

02.12.2025 21:55

Topic 1: Transformers & LLMs

- What Learning Algorithm is In-Context Learning? (Akyürek et al., ICLR’23)

- Transformers as Statisticians (Bai et al., NeurIPS’23)

- We Need An Algorithmic Understanding of Generative AI (Eberle et al., ICML’25)

- Evolution of Heuristics (Liu et al., ICML’24)

02.12.2025 21:55

I’m excited to share the materials from my Stanford seminar course, “AI for Algorithmic Reasoning and Optimization”: vitercik.github.io/ai4algs_25/. It covered formal algorithmic frameworks for analyzing LLM reasoning, GNNs for combinatorial/mathematical optimization, and theoretical guarantees.

02.12.2025 21:55

Beyond his research, Connor is a thoughtful, generous collaborator and mentor, as my PhD students and I can attest.

Please don’t hesitate to reach out if you’d like me to share my very strong recommendation letter.

(Photo credit: @cpaior.bsky.social.)

16.11.2025 18:53

Please keep an eye out for Connor Lawless (@lawlessopt.bsky.social) on the faculty job market! Connor is a Stanford Human-Centered AI Postdoc, co-hosted by myself and Madeleine Udell. His research combines ML, computational optimization, and HCI, with the goal of building human-centered AI systems.

16.11.2025 18:52

Understanding Fixed Predictions via Confined Regions

Machine learning models can assign fixed predictions that preclude individuals from changing their outcome. Existing approaches to audit fixed predictions do so on a pointwise basis, which requires ac...

Excited to be chatting about our new paper "Understanding Fixed Predictions via Confined Regions" (joint work with @berkustun.bsky.social, Lily Weng, and Madeleine Udell) at #ICML2025!

🕐 Wed 16 Jul 4:30 p.m. PDT — 7 p.m. PDT

📍East Exhibition Hall A-B #E-1104

🔗 arxiv.org/abs/2502.16380

14.07.2025 16:08

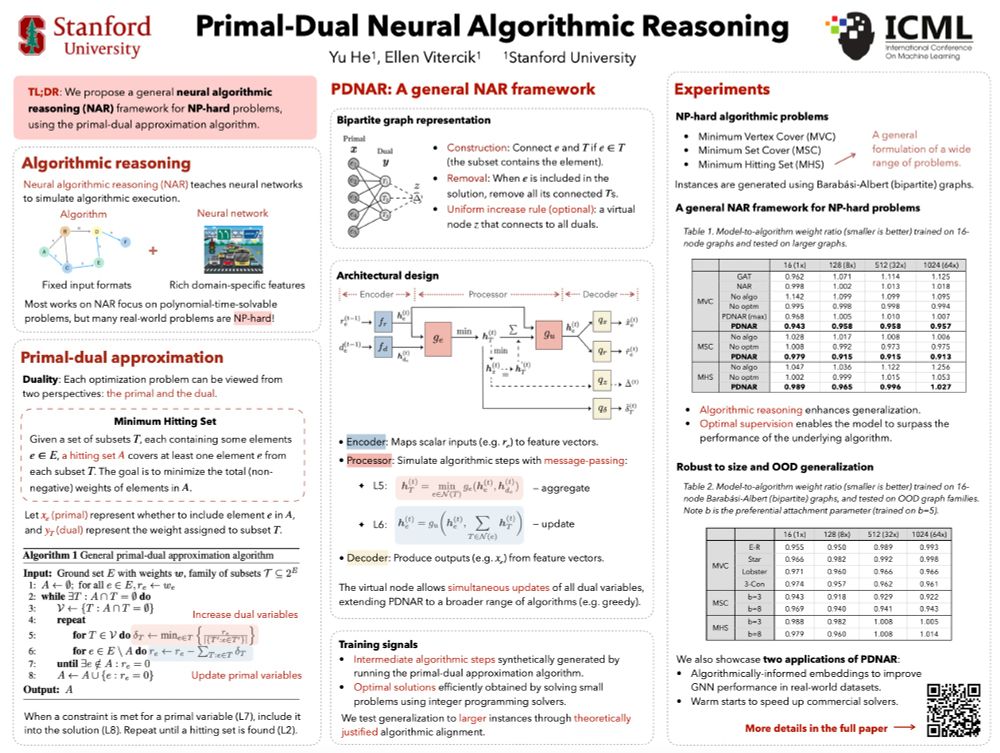

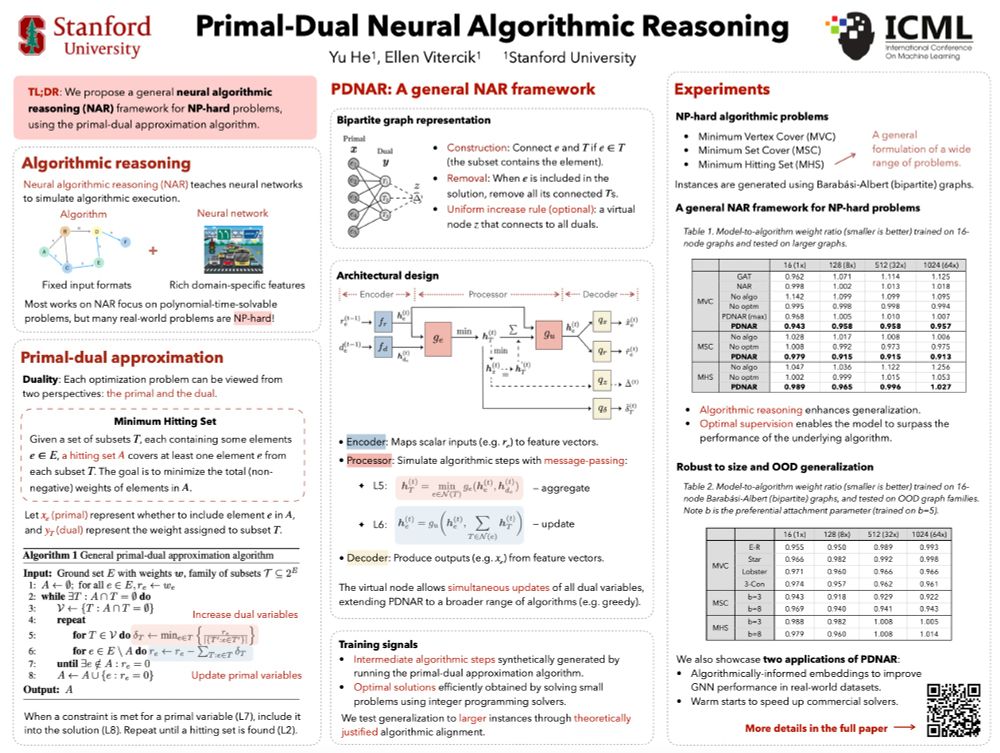

Our ✨spotlight paper✨ "Primal-Dual Neural Algorithmic Reasoning" is coming to #ICML2025!

We bring Neural Algorithmic Reasoning (NAR) to the NP-hard frontier 💥

🗓 Poster session: Tuesday 11:00–13:30

📍 East Exhibition Hall A-B, #E-3003

🔗 openreview.net/pdf?id=iBpkz...

🧵

13.07.2025 21:34

Join us for a Wikipedia edit-a-thon at #ACMEC25!

When: July 8th, 8PM-10PM

Where: Stanford Econ Landau 139

Website: sites.google.com/view/econcs-...

Come hang out, grab snacks, and edit/create Wikipedia pages for EC topics.

Suggest topics/articles that need attention: docs.google.com/spreadsheets...

02.07.2025 20:17

Congrats Kira!!

05.04.2025 05:08

Pulled a shoulder muscle trying to stay cool on the golf course in front of my PhD students and postdoc 😅 🏌️‍♀️

12.12.2024 17:19

📢 Join us at #NeurIPS2024 for an in-person Learning Theory Alliance mentorship event!

📅 When: Thurs, Dec 12 | 7:30-9:30 PM PST

🔥 What: Fireside chat w/ Misha Belkin (UCSD) on Learning Theory Research in the Era of LLMs, + mentoring tables w/ amazing mentors.

Don’t miss it if you’re at NeurIPS!

10.12.2024 14:52

Hi Emily, could you please add me? Thanks for making it!

19.11.2024 15:05

Can you add me? 😀

18.11.2024 04:26
